Editing Workflows Mature as Vendors Add AI-Powered Features

As cloud and IP become more widespread, editors are looking to enhance collaboration

With nonlinear editing now about 35 years old, even the most experienced practitioners have known little else, and by any measure it is a mature market. Yet it also faces fundamental change: new users in unanticipated fields, a revolution in computing itself and shifts in the audience and market for video.

“What it means to be in video has developed a wider definition,” Meagan Keane, director of product marketing for Adobe’s video portfolio, said. “Our customer base is so diverse. When we look at our users, there’s everything from top features and TV to docuseries, broadcast news, sports, commercials, content creators and everything in between.”

Despite that diversity, Keane said, the needs of users are often constant. “The strategy for Premiere as a single application is that users are needing it to be more end to end,” she said. “Maybe that’s because you’re a one-person band and doing everything, or you’re a craft editor and you pass off to finishing, sound—but there’s still an expectation from the director that the first cut you see looks and sounds beautiful.”

Staying in the Zone

As excitement around machine learning subsides to a more sustainable level, Keane described an approach to AI that will be familiar to many in the industry: “assistive AI—there’s generative extend. [Adding] two seconds of audio or 10 seconds of video doesn’t seem like a big deal, but from the editor’s perspective, it’s huge. That keeps the editor in the creative flow, not having to go and find B-roll or reverse or slo-mo, or room tone nobody recorded.”

Letting creatives stay in the zone while machines pick up the donkey work is a common thread, but a mature market can temper the appetite for new features. Adobe’s approach is gradual, Keane said.

“The way we’ve been addressing the mature app and legacy code for both Premiere and After Effects is that as we’re updating [features] in the app we’re addressing legacy code that may be there,” she said. “It’s not a full app rewrite all at once, it’s addressing layers within the app—they’re being modernized or evolved. And how do you do that without disgruntling experienced users?”

Often, though, that tension can be resolved in mutually beneficial ways. “Our high-end users see it as valuable that we’re also used by the content creators,” Keane said. “Data shows that the next-generation consumption patterns are going more and more short form. A huge percentage of box office ticket sales are now driven by short-form content—trailers on YouTube, TikTok, Instagram. A lot of the big tentpoles [are thinking], ‘How do we do this well, how do we connect with that short-form audience in a way that’s meaningful for our big name releases?’ ”


Grass AMPPs It Up

Grass Valley’s Edius is perhaps most closely identified with broadcast editing, but it is far from immune to upheaval in the wider world. Michael Lehmann-Horn, CEO of distributor Magic Multimedia, described Edius as “a different product to what Grass Valley normally sells. A camera will always be a camera; that’s hard to do in software. The editor came historically from the company Canopus, which is a Japanese company, and in the past it’s been a little bit separated. In the last year, they’re working much more closely, because they’ve seen that integrating editing in their [SaaS] AMPP solution is interesting.”

That kind of integration, Lehmann-Horn said, makes software more usable in more places. “You can use AMPP for editing; you have the [asset manager] Framelight X which also includes the editor,” he said. “Since about a year ago, they’ve been really pushing to integrate Edius into the AMPP workflow—but that said, Edius doesn’t need AMPP, it runs on a standalone PC, too.”

Popular as service-oriented solutions have become, concerns over economics have recently slowed the uptake of cloud computing in media production. There are ways to address the problem, though, as Lehmann-Horn explained.

“Edius can work without a good GPU; systems in the cloud are expensive if you add GPU,” he said. Edius also addresses concerns over data-transfer fees. “What people used to do is download the file, change it and upload it again; that costs a fortune,” he said. “With Edius, you go to where your footage is and make the change. There’s no up-and-down.”

While high-end GPU resources have brought blinding performance to certain aspects of editing, Lehmann-Horn pointed out the value in keeping things lightweight.


“We have been working with Hans AI and Akon—they have AI-based tools for denoising,” he said. “The fun stuff is, if you look at [other solutions] you may have very high quality denoising but it’s consuming a lot of energy. The tool from the library from Akon is 50K, it’s a small file. It can be processed by any CPU, even embedded CPUs, and that’s the point. If you’re using a lot of energy, that’s not the future of AI.”

In a more general sense, though, Lehmann-Horn proposes that “the future of editing is having more information about your footage. Editing is searching files, the right clips … analyzing it and building something. If you’re always doing the same kind of work, you’d like to have an assistant making the timeline for you.

“One car-part manufacturer was selling like 12,000 different types of towing attachments,” he added. “They needed 12,000 videos. We took the data from an Excel file … wait a bit and you have 12,000 video files!”

Expanding Collaboration

The word “collaboration” might increasingly describe working with a machine rather than another human. As software matures, though, expanding feature sets demand a broader range of skills. Bob Caniglia, director of sales operations for Blackmagic Design in North America, explained the evolution of the company’s Resolve editing platform this way: “As Resolve expanded into audio and effects, the need to do collaboration expanded more and more. You can begin with the free version. I know a lot of people who share projects and they’re not necessarily using Resolve Studio, they’re using Resolve, which is the free version.”

Blackmagic’s cloud offerings also address worries over egress fees. “Our cloud service gives people the ability to move files through without storing,” Caniglia said. “It can be a less expensive way to move files.”

Security, too, is a concern, especially in the world of high-end drama from which Resolve originally hails.

“If some people are cautious about putting files in the cloud, we still can share the Resolve projects and then they’re all working on the same project at the same time,” he said.

“Another thing that’s as simple as can be is the Blackmagic cloud app,” Caniglia added, describing another aspect of integration. “If you’re out in the field shooting and the camera has a locked-off shot, the journalist can use a cellphone to shoot another angle and send that back to the station.”

As the company reaches increasingly for the broadcast market, Resolve’s live capabilities have grown accordingly. “When we started talking about a replay system I didn’t think any of us thought it would run through Resolve, but when it came out we thought it made a lot of sense,” Caniglia admitted. “That’s one of the tools we threw in there recently… Resolve becomes a live replay system.”

Caniglia is clear that updating software with Resolve’s long legacy, without compromising performance, demands careful engineering. “Resolve has been optimized and reoptimized a number of times,” he said. “When Blackmagic bought DaVinci, the code was clunky, but it didn’t matter because the hardware was the fastest on the planet. Every time they talk about speed improvements, it’s about keeping it going so you don’t feel like you’re waiting around for things.”

Legacy Media Composer

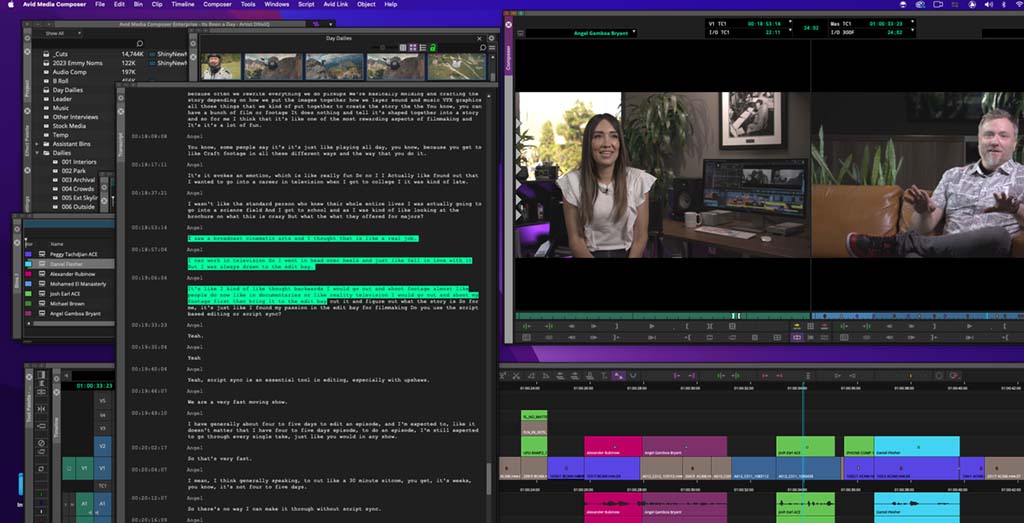

Avid’s Media Composer must rank among the most mature tools of its kind, and it has accordingly faced keen pressure to innovate while sidestepping the wrath of a vast, entrenched user base. Michael Krulik, Avid’s product evangelist for Media Composer, can look back on a long history.

“It’s been over 35 years since we came out in ’89, and people still talk about Media Composer … even for basic trimming, people historically have said we have the best tools for that,” Krulik said. “That’s what people are doing. They’re cutting.”

“The big enhancements we’ve done AI-wise in Media Composer are to do with the transcription tools,” Krulik added. “They provide transcription for source clips and transcription of the sequence. If you search for a word, it jumps to that point in a clip and you can edit the transcript text directly from your sequence. There’s also a single-click subtitle creation. What [editors] don’t want is the machine making creative editorial decisions for them. Searching, suggesting possibly, may be good, but when I talk to a lot of editors, producers, post supervisors, there will still be a human element to it.”

Bringing the benefits of modernity to such an established art form has required what Krulik describes as something of a carrot-and-stick process. “What’s changed tech-wise is being able to finish on software in a way you couldn’t years ago,” he said. “People want to be able to work faster. What happens with that is when you make a change … people slowly adjust, but development isn’t going to happen with the older software. So, if they want any of the [new tools], if they want the Pro Tools-Media Composer interop, they’re not going to get that with the old version.”

Making collaboration features useful to that kind of audience, Krulik said, demands respect for the traditional approach. “As far as remote and collaboration goes, they still like the idea of being in person, of shouting down the hall, ‘Hey, come and look at my cut!’ There are tools like Avid Huddle that let you share through Teams. You can get talent from anywhere. You’re not required to be in L.A. or to drive an hour and a half, one way, to work behind a desk, then miss being with your family and putting your kids to bed.”

Krulik concluded by pondering a harsh reality that continues to plague creatives worldwide. “It’s a mental thing, but also you need to have work to be able to do this,” he said. “I think the biggest issue at this point is that editors want to get back to work. A lot of people are working, and they’re very thankful to be working, but a lot of people who aren’t are wondering when they’re going to get their next gig. Hopefully this year, production becomes a bit stronger.”