A Decade of Changes in Storage

How storage management has evolved over the last 10 years

IT departments continue to look for ways to improve benchmark results for end-user devices throughout the enterprise, and the media domain is no different. In media, especially editorial production, creating content drawn from various sources depends on workstation or server capabilities but, more importantly, on the storage system’s ability to deliver data quickly and without interruption.

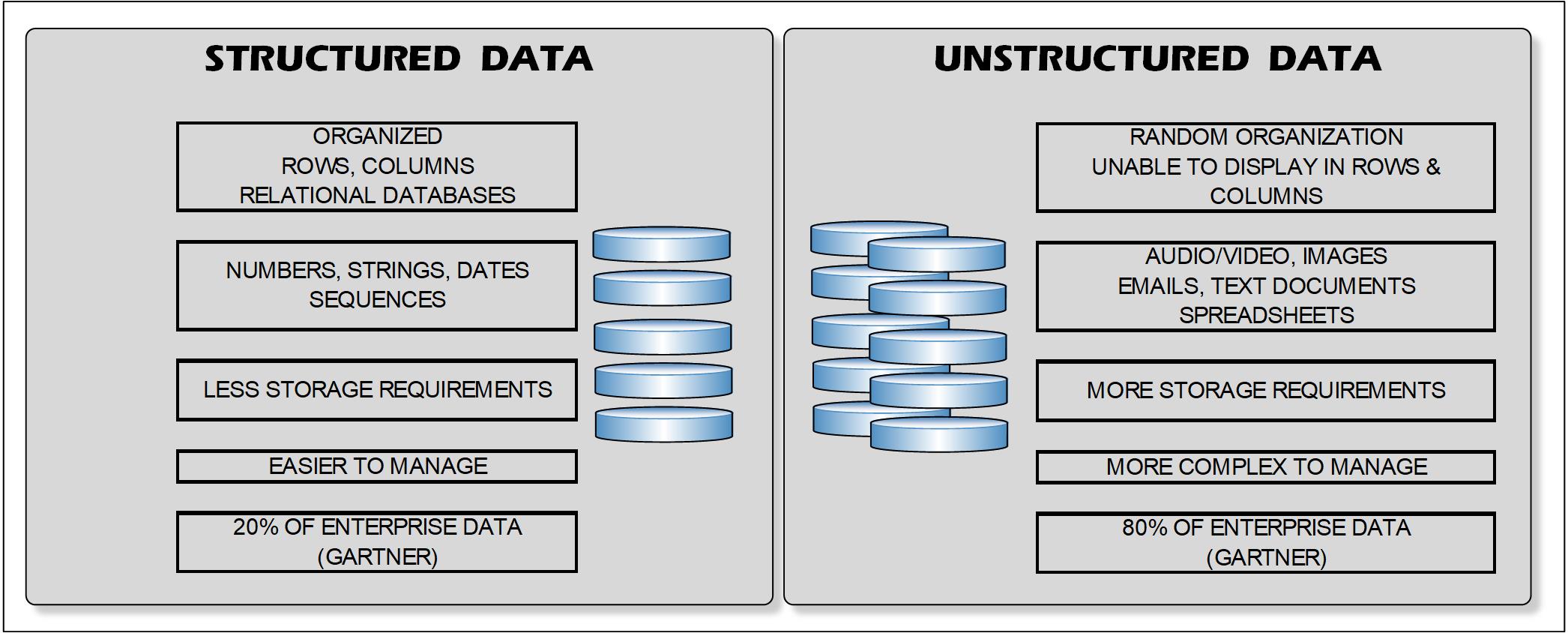

A decade ago, discussions about maximizing storage capacity centered on the needs of “enterprise data storage.” There seemed to be little distinction between “storage for media” and “storage for enterprise data,” despite media’s radically different needs: high accessibility, large contiguous files and uninterrupted delivery to editing workstations. Data was clearly divided into “structured” (transactional) data and “unstructured” data such as video and audio media (see Table 1). Approaches to managing these divisions varied with the storage platform (NAS, DAS or SAN) and the volume of data in active use.

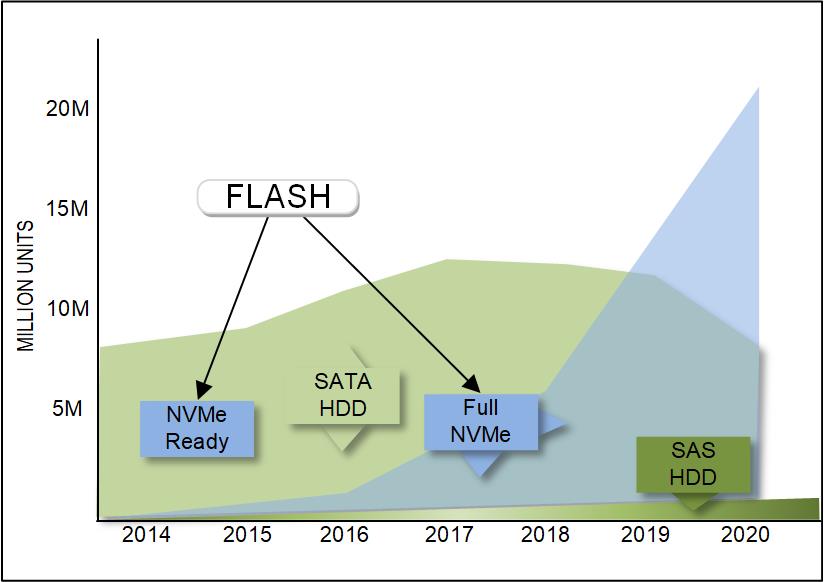

The stack of requirements for data storage management and efficiency included tiered storage, data migration tool sets and techniques such as data reduction and thin provisioning. Storage resource management was also emphasized at the time, before solid state drives (SSDs) and NVMe (non-volatile memory express) devices were fully accepted and widely available (Fig. 1).

Initially, tiered storage was proclaimed as a first step for many organizations. Tiering was governed by a process that took the least-used data and moved it to a less accessible medium such as linear tape. That model gradually evolved from on-prem physical tape libraries to deep archives in the cloud. Sometimes tape was retained for disaster recovery and legal reasons. Over time, in some cases, the cloud all but eliminated on-prem tape.
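The tiering process described above can be sketched as a simple age-based policy. This is a minimal illustration, assuming "least used" is approximated by time since last access and that a 90-day idle threshold is acceptable; the `Asset` type, `demote_cold_assets` function and tier names are hypothetical, and a real system would copy, verify and then release the online copy rather than just relabel it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Asset:
    name: str
    size_gb: float
    last_access: datetime
    tier: str = "online"  # hypothetical tier labels: "online" or "archive"


def demote_cold_assets(assets, now, max_idle_days=90, archive_tier="archive"):
    """Mark assets idle longer than max_idle_days for the archive tier.

    Returns the assets demoted on this pass. In practice the data would be
    copied to tape or cloud and verified before the online copy is freed.
    """
    cutoff = now - timedelta(days=max_idle_days)
    demoted = []
    for asset in assets:
        if asset.tier != archive_tier and asset.last_access < cutoff:
            asset.tier = archive_tier
            demoted.append(asset)
    return demoted
```

Running the policy repeatedly is safe: assets already on the archive tier are skipped, so each pass only picks up newly cold material.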

PROACTIVE SERVICES

Storage resource management (SRM) takes a proactive approach to optimizing the speed and efficiency of the “available drive space” on a SAN (storage area network). For transactional data, the focus was on administrative procedures that would automatically perform data backup, recovery and analysis. Because SAN solution sets often required a higher level of administrative maintenance, SRM was extended by combining vendor APIs with the usual systems-management tools, including SNMP, the Storage Management Initiative Specification (SMI-S) and RESTful web services.
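A proactive SRM check can be as simple as flagging pools that are nearly full or will fill soon at their current growth rate. The sketch below assumes the capacity and growth figures have already been collected (for example via SNMP, SMI-S or a vendor REST API); the `Pool` type, `capacity_alerts` function and the 80 percent / 30-day thresholds are hypothetical choices for illustration, not any product's defaults.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str
    capacity_tb: float
    used_tb: float
    growth_tb_per_day: float  # recent average fill rate (assumed input)


def capacity_alerts(pools, warn_fraction=0.8, horizon_days=30):
    """Return (name, used_fraction, days_until_full) for pools needing attention.

    A pool is flagged if it is already above warn_fraction full, or if it
    will run out of space within horizon_days at its current growth rate.
    """
    alerts = []
    for p in pools:
        used_fraction = p.used_tb / p.capacity_tb
        free_tb = p.capacity_tb - p.used_tb
        if p.growth_tb_per_day > 0:
            days_left = free_tb / p.growth_tb_per_day
        else:
            days_left = float("inf")  # not growing, never fills at this rate
        if used_fraction >= warn_fraction or days_left <= horizon_days:
            alerts.append((p.name, round(used_fraction, 2), days_left))
    return alerts
```

Flagging on projected days-until-full, not just current percentage, is what makes the check proactive: a half-empty pool with a fast-growing render cache can still trigger an alert.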

Utilization-pattern data also emerged around 2010. These tools helped administrators see how their storage systems handled input/output (I/O) requests, so systems could be improved by adjusting how the drives were used by the workflow groups to which they were assigned. Plug-ins tracked real-time and trending patterns at whatever granularity (days, weeks or months) the administrator wanted. For example, a high-end rendering process for visual effects places continual demands on drive I/O, while editorial or color grading may place somewhat lighter demands.
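The kind of trend view those plug-ins produced can be sketched by bucketing I/O samples per workflow group at a chosen granularity. This is a minimal sketch, assuming samples arrive as `(timestamp, workflow_group, iops)` tuples; the function names and group labels are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def bucket_key(ts: datetime, granularity: str) -> str:
    """Map a timestamp to a day, ISO week or month bucket label."""
    if granularity == "day":
        return ts.date().isoformat()
    if granularity == "week":
        year, week, _ = ts.isocalendar()
        return f"{year}-W{week:02d}"
    if granularity == "month":
        return f"{ts.year}-{ts.month:02d}"
    raise ValueError(f"unsupported granularity: {granularity}")


def iops_trend(samples, granularity="day"):
    """Average IOPS per (bucket, workflow_group) from (timestamp, group, iops) samples."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for ts, group, iops in samples:
        key = (bucket_key(ts, granularity), group)
        totals[key] += iops
        counts[key] += 1
    return {key: totals[key] / counts[key] for key in totals}
```

Keeping the workflow group in the key is what lets an administrator compare, say, a render farm's sustained load against a color suite's on the same timeline.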

LESSONS LEARNED

Many changes occurred in storage media between 2000 and 2010. The industry had begun extending SSD applications to include NVMe (2009) as the front end, or cache portion, of the drive arrays themselves, learning new lessons in space, speed and product fabrication. Even the migration from high-performance Tier 1 Fibre Channel (FC) deployments to less expensive Tier 2 SAS drives became more acceptable, because improved storage management tool sets allowed a more automated approach to implementation and administration. Once SSDs arrived, the line between Tier 1 and Tier 2 nearly blurred, because SSD I/O performance came close to that of FC HDDs, and application developers seized on this as a new way to increase the performance of their own products.

Some of these lessons capitalized on rapid gains in drive performance, which arrived just as content production for streaming services accelerated, and for good reason.

FAST FORWARD

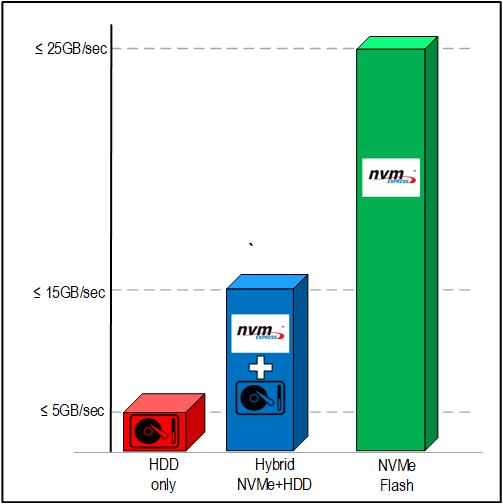

Much has changed over the past decade, not only in storage device technology but also in performance, operations and administrative freedom, reflected in shorter deployment times and more user-friendly interaction with storage management itself. Fig. 2 shows the relative transfer rate improvements from HDD through today’s NVMe storage sets.
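To put relative transfer rates in practical terms, the arithmetic below converts sustained throughput into time to move a given amount of media. The rates are assumed, round illustrative numbers for typical sequential reads, not values read from Fig. 2; substitute measured figures for a real comparison. Units are decimal (1 GB = 1,000 MB).

```python
# Assumed sustained sequential read rates in MB/s (illustrative only,
# not taken from Fig. 2).
ASSUMED_RATES_MB_S = {
    "7,200 rpm HDD": 200,
    "SATA SSD": 550,
    "NVMe SSD (PCIe 3.0 x4)": 3500,
}


def transfer_seconds(size_gb, rate_mb_s):
    """Seconds to move size_gb gigabytes at rate_mb_s megabytes per second."""
    return size_gb * 1000 / rate_mb_s


def compare(size_gb, rates=ASSUMED_RATES_MB_S, baseline="7,200 rpm HDD"):
    """Return {device: (seconds rounded to 0.1, speedup vs. baseline)}."""
    base = rates[baseline]
    return {
        name: (round(transfer_seconds(size_gb, rate), 1), rate / base)
        for name, rate in rates.items()
    }
```

Under these assumptions, `compare(500)` reports about 2,500 s for a 500 GB camera-original folder on the HDD versus roughly 143 s on NVMe, a 17.5x speedup.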

Every organization’s workflow is different. Drive system providers are now focusing more on performance while simplifying installation, configuration and administration. Features such as role-based authentication and single-namespace architectures are replacing complex administrative activities that were traditionally the Achilles’ heel of end users and that often kept systems administrators from going home at night or enjoying their weekends.
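A single namespace presents users with one path tree while the system decides which pool actually holds each directory. The sketch below illustrates the idea with longest-prefix routing; the `SingleNamespace` class, pool names and paths are hypothetical, and commercial products implement this inside the file system rather than in application code.

```python
class SingleNamespace:
    """One logical path tree routed to backing pools by longest matching prefix."""

    def __init__(self):
        self._mounts = {}  # logical prefix -> backing pool name

    def mount(self, prefix, pool):
        # Normalize trailing slashes; the root stays "/".
        self._mounts[prefix.rstrip("/") or "/"] = pool

    def resolve(self, path):
        """Return the pool that serves path; the most specific prefix wins."""
        best = None
        for prefix in self._mounts:
            boundary = prefix.rstrip("/") + "/"
            if path == prefix or path.startswith(boundary):
                if best is None or len(prefix) > len(best):
                    best = prefix
        if best is None:
            raise KeyError(f"no pool serves {path}")
        return self._mounts[best]
```

Users and applications only ever see paths such as `/projects/...`; moving a directory to a different pool changes one mount entry rather than every workstation's configuration.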

Software (rather than hardware) RAID is now supported by separating the controller profiles from the shares or pools of storage themselves. Removing the dependency between the two enables yet another level of performance improvement, and using software for RAID control further helps prevent desynchronization and data corruption.
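As an illustration of the kind of work a software RAID layer takes over from a hardware controller, the sketch below computes single XOR parity for a stripe and rebuilds any one lost block, as in RAID 5. It is a simplified model chosen for clarity (no parity rotation, checksums or write journaling) and does not represent any particular vendor's RAID implementation; the function names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks followed by one XOR parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, missing_index):
    """Recover the block at missing_index by XOR-ing all surviving blocks."""
    survivors = [block for i, block in enumerate(stripe) if i != missing_index]
    return xor_blocks(survivors)
```

Because XOR-ing every block in a stripe yields zero, any single missing block, data or parity, equals the XOR of the rest.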

Capabilities such as hyperscalability, high availability (HA) and containerization support through application-specific interfaces are now easier to achieve, aided in part by taking the mystery out of deployment. Added RAM and more CPU cores deliver both higher throughput and lower latency. Distributed file systems and clustering for media-centric storage, once reserved for high-profile, compute-intensive systems, are now expected.

Current optical-fiber SFP connectivity, such as QSFP28 100G links between the server engine node and the drive chassis itself, is pushing I/O (and IOPS) figures upward while adapting more readily to user software systems for applications such as rendering, color grading and editing.
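A quick worked estimate shows what a 100 Gb/s link means for media. Assuming uncompressed UHD (3840 x 2160) video at 10-bit 4:2:2, which averages 20 bits per pixel, at 60 frames per second, one stream needs about 9.95 Gb/s of active-picture data. The sketch ignores blanking, file-system and network protocol overhead, so real-world stream counts will be lower; the function names are illustrative.

```python
def stream_bitrate_bps(width, height, bits_per_pixel, fps):
    """Active-picture bit rate of an uncompressed video stream.

    Excludes blanking intervals and any network or protocol overhead.
    """
    return width * height * bits_per_pixel * fps


def streams_per_link(link_bps, stream_bps):
    """Whole streams that fit in a link's nominal bit rate."""
    return link_bps // stream_bps


uhd_422_10bit_60p = stream_bitrate_bps(3840, 2160, 20, 60)  # 9,953,280,000 b/s
print(streams_per_link(100_000_000_000, uhd_422_10bit_60p))  # nominal 100G link
```

At nominal line rate, one QSFP28 100G link carries about 10 such streams, which is why per-link bandwidth matters so much for uncompressed finishing and grading workflows.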

REMOVING RELUCTANCE

Nobody wants to wait, and few can afford to. With these newer, faster data paths virtually eliminating storage bottlenecks throughout the system, such solutions are gaining acceptance across the enterprise.

Traditional IT departments that were reluctant to put different or new solutions in place, especially for M&E users, are changing their views. Today, for example, new systems deploy in hours, not days. GUIs are easier to understand. Systems and their administration are more intuitive and quite different from those used in older, established storage solutions.

Today’s users should expect a storage system to be as straightforward to use as their iPhone or Android mobile device. Resiliency should not depend on continual monitoring and tweaking just to keep workflows fluid.

KNOWING THE RIGHT SOLUTION

A key to understanding cost-to-performance models is knowing the storage architecture your particular application requires. Stakeholders in the organization need to bring users, administrators and technical support personnel together to reach the right solution. A single drive set may no longer meet all the organization’s needs; however, that decision depends on the scale, diversity and performance of the drive set and on the solution provider’s unique capabilities for the tasks at hand.
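One way to make a cost-to-performance comparison concrete is to normalize each candidate configuration by capacity and by throughput, then pick the cheapest option that meets both requirements. The sketch below is a hypothetical helper; prices, capacities and throughput figures would come from vendor quotes and from benchmarks run against your own workflow, and none are supplied here.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    price: float           # total cost, any single currency
    usable_tb: float       # usable capacity after RAID/protection overhead
    throughput_gb_s: float  # sustained GB/s measured under the target workload

    @property
    def cost_per_tb(self):
        return self.price / self.usable_tb

    @property
    def cost_per_gb_s(self):
        return self.price / self.throughput_gb_s


def cheapest_meeting(options, min_tb, min_gb_s):
    """Lowest-priced option meeting both capacity and throughput targets, or None."""
    fits = [o for o in options if o.usable_tb >= min_tb and o.throughput_gb_s >= min_gb_s]
    return min(fits, key=lambda o: o.price, default=None)
```

Looking at cost per TB and cost per GB/s side by side shows why a single drive set may not be the answer: the option that is cheapest per terabyte is often the most expensive per unit of throughput, so a mix of tiers can come out ahead.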

Note: Portions of the technology discussions are courtesy of OpenDrives LLC of Culver City, Calif.

Karl Paulsen is currently the chief technology officer for Diversified and a frequent contributor to TV Technology in storage, IP and cloud technologies. You can reach Karl at diversifiedus.com.
