Behind the Technology in Apple Vision Pro

Phil Rhodes explores the evolution that brought Apple’s spatial computing to commercial reality

Apple’s interest in virtual and augmented reality has been fairly clear for a while. It started acquiring companies with expertise in the field at least as early as six or seven years ago, perhaps most prominently the startup Vrvana in November 2017. Whether it’s fair to call Vision Pro a “Vrvana Totem” evolved by Apple’s vast R&D budget is necessarily speculation, but the purchase was certainly part of a trend.

A few months earlier, the company bought SensoMotoric Instruments for its eye-tracking technology. Apple had owned environment-tracking specialists Flyby Media since the beginning of 2016 and augmented reality startup Metaio since the year before. It’s hardly rare for large companies to make that kind of acquisition, but it didn’t take much to connect the dots even then.

The Legacy of Evans and Sutherland

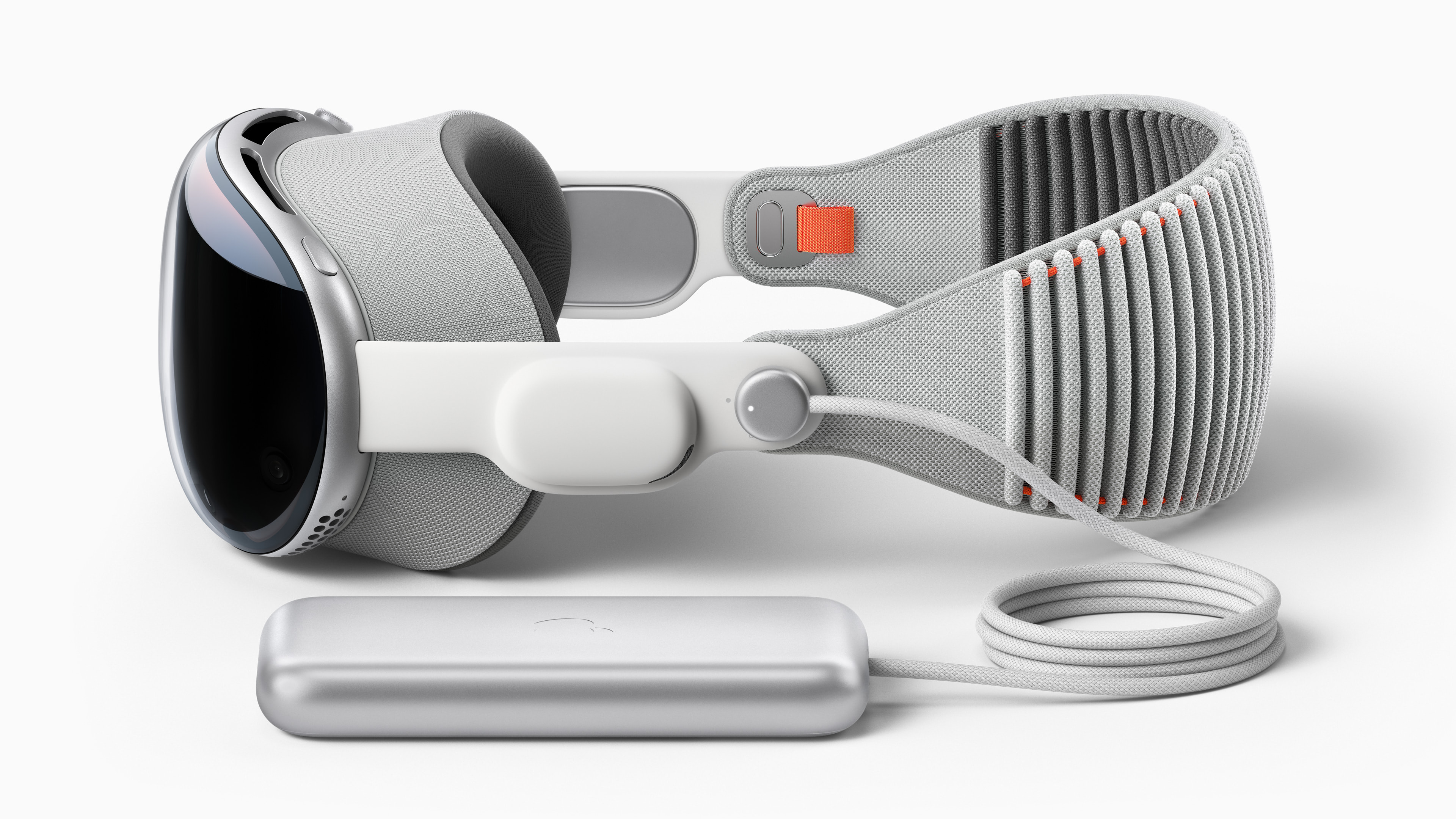

The concept of head-mounted displays in general dates back to at least the 1960s and the work of Ivan Sutherland, whose later collaboration with David Evans would yield hardware and techniques that are still fundamental to computer graphics. Evans and Sutherland equipment would be key to Tron, for instance. Apple, meanwhile, would quite rightly object to the idea that its Vision Pro device is a mere display. It incorporates several displays, loudspeakers, and cameras for conventional imaging, motion tracking and eye tracking, as well as all the computing resources needed to make those things useful.


The company has been particularly keen to emphasize the system’s low latency, saying that new images reach the displays within 12 milliseconds. That suggests it should be capable of avoiding the lag between real-world movement and display updates which sometimes makes devices like this distracting to use. Latency in digital imaging affects everyone from video gamers to camera operators watching a viewfinder. Back in the days of analog electronics, the time between a photon entering a lens and another exiting a display was often mere nanoseconds, the propagation delay of a few dozen transistors. That is no longer the case.

There’s an argument that digital technology has made hardware designers too relaxed, waiting for an entire frame to be read out from a camera’s sensor before starting work on it. Do that, and the minimum delay is one full frame, or about 17 milliseconds at 60 frames per second. It doesn’t take much for that to become a few frames, which is sluggish enough to risk motion sickness in the context of a headset.
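The arithmetic behind those figures is easy to sketch. The snippet below is a minimal model that assumes each buffering stage holds exactly one complete frame, and it ignores processing and display scan-out time, so it gives a floor rather than a realistic end-to-end number.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of one frame in milliseconds at a given frame rate."""
    return 1000.0 / fps


def buffered_latency_ms(fps: float, frames_buffered: int) -> float:
    """Minimum delay added by waiting for whole frames before processing.

    Assumes each buffered stage holds exactly one complete frame and
    ignores processing and display time, so this is a lower bound.
    """
    return frames_buffered * frame_time_ms(fps)


for fps in (24, 60, 90):
    for frames in (1, 3):
        print(f"{fps:>3} fps, {frames} frame(s): {buffered_latency_ms(fps, frames):.1f} ms")
```

At 60 frames per second a single buffered frame costs about 16.7 ms and three cost 50 ms, which is why whole-frame buffering adds up so quickly in a headset.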

This is particularly relevant to Vision Pro, which creates its augmented reality by taking pictures of the real environment, superimposing virtual elements, and then displaying the result to the user. The advantage is that virtual objects appear solid, without the ghostly transparency of augmented-reality devices which reflect virtual objects into the user’s field of view. The disadvantage is that latency must be low, and considering there’s 3D rendering to be done, that’s not necessarily easy.
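The difference between the two approaches can be sketched per pixel. In the simplified model below, which is an illustrative assumption rather than a description of any real device’s pipeline, a video passthrough headset blends a virtual pixel over a camera pixel with an alpha value and can replace it outright, whereas an optical see-through display can only add its light to whatever already reaches the eye, so it can never make the scene darker.

```python
def passthrough_composite(camera: float, virtual: float, alpha: float) -> float:
    """Video passthrough: standard 'over' blend of a virtual pixel onto a camera pixel.

    Values are linear intensities in [0, 1]; alpha = 1 makes the virtual pixel fully opaque.
    """
    return alpha * virtual + (1.0 - alpha) * camera


def optical_see_through(real: float, virtual: float) -> float:
    """Optical see-through: display light adds to the real scene, clipped at full intensity."""
    return min(1.0, real + virtual)


# A dark virtual object (intensity 0.1) in front of a bright window (intensity 0.9)
bright_background, dark_object = 0.9, 0.1
print(passthrough_composite(bright_background, dark_object, alpha=1.0))  # object replaces the background
print(optical_see_through(bright_background, dark_object))               # never darker than the background
```

This is why passthrough can draw solid, even dark, virtual objects, at the cost of putting every real-world photon through the camera-to-display latency chain.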


There’s nothing preventing a camera from sending the picture in much smaller pieces, for vanishingly low latency. It’s just rarely done that way. Exactly how far into the weeds Apple has gone on this is something only its engineers know, but it’s no surprise that Vision Pro uses some custom hardware. The chip Apple calls R1 presumably incorporates quite a lot of digital signal processing to handle the eye, feature and gesture tracking from the device’s twelve cameras, and we can guess with some confidence that the whole architecture of the device is designed, wherever possible, for low latency.
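A rough model shows why smaller pieces help. The sketch below assumes a sensor read out at a constant rate over one frame period, with processing able to start on each horizontal slice as soon as that slice is complete; the slice counts are illustrative, and nothing here describes how Apple’s hardware actually works.

```python
def worst_case_wait_ms(fps: float, slices_per_frame: int) -> float:
    """Worst-case time a row of pixels waits for the rest of its chunk to be read out.

    Assumes readout spans one full frame period and is split evenly into
    `slices_per_frame` horizontal bands. slices_per_frame=1 is the
    conventional wait-for-the-whole-frame approach.
    """
    frame_period_ms = 1000.0 / fps
    return frame_period_ms / slices_per_frame


for slices in (1, 16, 256):
    print(f"{slices:>3} slice(s) per frame at 90 fps: "
          f"{worst_case_wait_ms(90, slices):.3f} ms before work can begin")
```

The trade-off is that every downstream stage must then cope with partial images, which is one reason the approach tends to need purpose-built hardware.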

Micro-LED

As to Vision Pro’s CPU, note that Apple recently moved the upscale Mac Pro, the last of its computer ranges to linger with Intel processors, to its own ARM-based M-series chips. There are both business and technical reasons to do that, but the company has probably been most vocal about reduced power consumption for its laptops. Some promotional hyperbole was inevitable, and when the first M1 CPUs emerged there absolutely were Intel-compatible options with competitive performance per watt (the surprise was that they came from AMD).

Power concerns are less relevant to a performance-centric, mains-powered workstation like Mac Pro, but they certainly are relevant to the M2-based Vision Pro. With the device relying on an external battery pack, every joule saved is worth its weight in lithium ions.
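The relationship is simple: runtime is stored energy divided by average power draw. The capacity and power figures in this sketch are hypothetical placeholders chosen for round numbers, not Vision Pro specifications.

```python
def runtime_hours(battery_wh: float, average_power_w: float) -> float:
    """Hours of operation from a battery holding `battery_wh` watt-hours at a constant average draw."""
    return battery_wh / average_power_w


def joules_saved(power_saved_w: float, hours: float) -> float:
    """Energy saved over a session by trimming the average draw; 1 watt-hour is 3600 joules."""
    return power_saved_w * hours * 3600.0


# Hypothetical figures for illustration only, not Vision Pro specifications
BATTERY_WH = 40.0
print(runtime_hours(BATTERY_WH, 20.0))  # 2.0 hours at 20 W
print(runtime_hours(BATTERY_WH, 16.0))  # 2.5 hours at 16 W
print(joules_saved(4.0, 2.0))           # 28800.0 J saved by trimming 4 W for two hours
```

With these made-up numbers, trimming a fifth of the power draw buys an extra half hour of use.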

The displays driven by this combination of hardware are described by Apple as “micro OLED,” which is a comparatively recent development. The more familiar term “micro-LED” refers to highly miniaturised arrays of LED emitters: essentially a microscopic version of the video walls used for virtual production. Micro-LED is a promising technology that might replace LCD and OLED entirely, offering more brightness and contrast than either, but it has barely made it out of the lab. An OLED emitter, meanwhile, is still a diode that emits light, but its emissive layer is an organic compound, which involves some rather different physics. The terms are not interchangeable.

So “micro-OLED” means a very small OLED display, possibly based on Sony technology. A lot of head-mounted displays use LCD panels simply because they’re available, and they’re available because of the huge demand for marketable resolution figures in the cellphone market.

The problem is that even the best phone displays aren’t what a headset designer really wants. The 3088 by 1440 pixels and roughly 500 pixels per inch of the Galaxy S23 Ultra’s screen are more than enough for a phone, but they’re still too few pixels, in too large a format, to be ideal for a device like Vision Pro, which wants to fill the observer’s field of view using small, lightweight hardware.
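Converting pixel density to physical size makes the format problem concrete. Pixel pitch in micrometres is 25,400 divided by pixels per inch, since an inch is 25.4 mm, and multiplying by a pixel count gives the length of the active area. The sketch ignores bezels and subpixel layout, and the 2,540 ppi microdisplay is a hypothetical comparison point, not a figure for Vision Pro.

```python
MICROMETRES_PER_INCH = 25_400.0


def pixel_pitch_um(ppi: float) -> float:
    """Centre-to-centre pixel spacing in micrometres for a given pixels-per-inch figure."""
    return MICROMETRES_PER_INCH / ppi


def active_length_mm(pixels: int, ppi: float) -> float:
    """Length of a row of `pixels` pixels in millimetres (active area only)."""
    return pixels * pixel_pitch_um(ppi) / 1000.0


print(f"{pixel_pitch_um(500):.1f} um pitch at 500 ppi")                 # 50.8 um
print(f"{active_length_mm(1440, 500):.1f} mm for 1440 px at 500 ppi")  # about 73.2 mm
# Hypothetical microdisplay with a 10 um pitch (2540 ppi), for comparison
print(f"{active_length_mm(1440, 2540):.1f} mm for 1440 px at 2540 ppi")  # 14.4 mm
```

The same number of pixels at a fifth of the pitch occupies a fifth of the length, which is what lets a headset keep its optics small and light.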

The compromise is between field of view and resolution. Like most headsets, Vision Pro does not fill the user’s entire visual field; its field of view is generally reported to be around 100 degrees. Vision Pro’s 4K displays are competitive for a current release, but the human visual field is more than 180 degrees wide. That’s a lot to cover, considering there are speculative designs which target 35 pixels per degree. That’s the figure quoted for Pimax’s Reality 12K QLED, which was intended to hit CES 2022, though it’s now projected for 2024. The 12K number, in that case, means one 6K LCD panel per eye.
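The scale of the problem comes down to one multiplication: horizontal pixels needed equals field of view times pixels per degree. This sketch assumes pixels are spread evenly across the field of view, which real headset optics do not do, so the results are rough; the 3,840-pixel panel width is a hypothetical example.

```python
def pixels_needed(fov_degrees: float, pixels_per_degree: float) -> int:
    """Horizontal pixel count needed to reach a target angular resolution.

    Assumes a uniform pixels-per-degree figure across the whole field of view.
    """
    return round(fov_degrees * pixels_per_degree)


def average_pixels_per_degree(horizontal_pixels: int, fov_degrees: float) -> float:
    """Average angular resolution when a panel's width is spread over a field of view."""
    return horizontal_pixels / fov_degrees


print(pixels_needed(100, 35))                     # 3500 pixels across a 100-degree view
print(pixels_needed(180, 35))                     # 6300 pixels across a 180-degree span
print(average_pixels_per_degree(3840, 100))       # hypothetical 3840-pixel-wide panel: 38.4
```

Widening the view without losing sharpness scales the pixel count, and with it the rendering load, in direct proportion.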

An Impressive Piece of Technology

If 12 cameras seems like a lot, consider that many of them are likely to be arranged in stereo pairs, at least. The inside-out motion tracking, which locates the headset by observing the environment, might account for several. The eye tracking requires cameras, and so does the gesture recognition.
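Stereo pairs are useful because two cameras a known distance apart can measure depth. For a rectified pair under the textbook pinhole model, depth is focal length times baseline divided by disparity, the horizontal shift of a feature between the two images. The focal length, baseline and disparity values below are hypothetical, and nothing here reflects documented details of Vision Pro’s tracking.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance in metres to a point seen by a rectified stereo camera pair.

    focal_px: focal length expressed in pixels
    baseline_m: distance between the two camera centres in metres
    disparity_px: horizontal offset of the same feature between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera pair: 500-pixel focal length, 6 cm baseline
for d in (50.0, 10.0, 2.0):
    print(f"disparity {d:>4} px -> {depth_from_disparity(500.0, 0.06, d):.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel error matters far more for distant objects than near ones, which is one reason tracking systems combine several cameras and sensors.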

Add cameras for general-purpose visible-light imaging and it’s not hard to reach a dozen. These will presumably be cameras of the general type used in cellphones, representing another technology which has benefited hugely from advances in that field.

Vision Pro is an impressive package of technology. There’s a lot in it, and what’s there is high-end, as it should be for the price. While it’s a cutting-edge VR/AR device, the cutting edge is in rapid motion, and it’s certainly not the first such device to be cutting-edge at launch. In the end, the success of any computer hardware depends on what the software does with it: how reliable the gesture recognition is, how accurate the motion tracking is, how well those displays work, and what developers do with them.

Apple surely knows that the price tag precludes Vision Pro from becoming a mass-market item, and that might make success difficult to identify, let alone predict. What matters is whether the hardware works well enough for the software to be popular and effective. The company has certainly given itself the best possible chance.