M&E Not Ready to Fully Embrace AI in 2024

Industry approached the technology with apprehension, tinged with cautious optimism

Talking about artificial intelligence in 2024 is akin to talking about the internet in 1994: everyone’s excited about it and thinks it will have an enormous impact, but no one is sure what its future holds. Thirty years ago, the internet was a novelty; today, IP technology has permeated our lives to the point that no one talks about “the internet,” and pretty soon that will be the case with AI.

But 2024 clearly showed we aren’t there yet. AI dominated the conversation around media and entertainment technology much as it did in 2023, when the world was just beginning to understand how emerging platforms like OpenAI’s ChatGPT were revolutionizing how we learn and communicate.

The Tools

How companies approached AI this year depended on their status. While big tech companies such as Google, OpenAI and Amazon rolled out new generative AI video platforms, more traditional players added incremental, but not yet revolutionary, AI improvements that focused on enhancing real content while also taking steps to protect copyrighted material.

This week Google announced Veo 2, an update to the Veo video generator it introduced earlier this year. Google claims Veo 2 “creates incredibly high-quality videos in a wide range of subjects and styles,” adding that “in head-to-head comparisons judged by human raters, Veo 2 achieved state-of-the-art results against leading models.”

Google says Veo 2 is less likely to produce the “hallucinations” that plague current GenAI platforms, and that its “commitment to safety and responsible development has guided Veo 2.”

“We have been intentionally measured in growing Veo’s availability, so we can help identify, understand and improve the model’s quality and safety while slowly rolling it out via VideoFX, YouTube and Vertex AI,” the company said. “Just like the rest of our image and video generation models, Veo 2 outputs include an invisible SynthID watermark that helps identify them as AI-generated, helping reduce the chances of misinformation and misattribution.”

Google’s update is expected to compete head-on with OpenAI’s new Sora generative AI video tool, which OpenAI made available last week, 10 months after its soft beta launch in February. The new version, Sora Turbo, which OpenAI says is “significantly faster” than the model it previewed earlier this year, is being released as a standalone product at Sora.com to ChatGPT Plus and Pro users.

Sora generates realistic videos from text at resolutions up to 1080p, in lengths of up to 20 seconds, and in widescreen, vertical or square aspect ratios. Users can also bring their own assets to extend, remix and blend, or generate entirely new content from text.

ChatGPT Plus subscribers can generate up to 50 videos a month at 480p resolution, or fewer at 720p, while the Pro plan includes 10 times more usage, higher resolutions and longer durations. OpenAI said it’s working on tailored pricing for different types of users, which it plans to make available early next year.

Earlier this month, Amazon rolled out “Amazon Nova Reel,” which it describes as a “state-of-the-art” video-generation model that allows customers to easily create high-quality video from text and images. Nova Reel currently generates 6-second videos and, in the coming months, will support videos up to 2 minutes long.

Nova Reel is designed for content creation in advertising, marketing or training, Amazon said. Customers can use natural language prompts to control visual style and pacing, including camera motion, rotation and zooming.

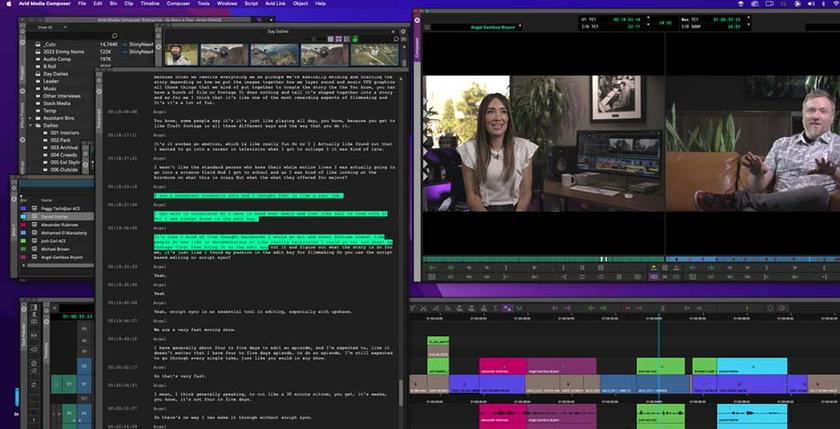

Adobe, whose Photoshop is among the most popular tools for creating AI-generated content, added a form of generative AI to its Premiere Pro editing platform in October. However, the “Generative Extend” beta is not full-on generative AI but rather a feature that lets creators extend clips to cover gaps in footage, smooth out transitions or hold shots longer for perfectly timed edits.

To keep its Firefly generative AI from infringing on copyrighted material, the company said it trains Firefly models on licensed content, such as Adobe Stock, and on public-domain content. Its features are developed under its AI Ethics principles of accountability, responsibility and transparency, which it supports with what it calls “Content Credentials.”

Outside Hollywood, the advertising world has embraced AI wholeheartedly. In a recent survey from Frequency, a provider of advertising sales automation and workflow software, 80% of digital ad professionals said they now use AI tools for media buying and campaign management.

That enthusiasm is evident in media creation as well. Waymark, a developer of AI video-creation technology, recently launched “Variations,” which generates video ads in multiple lengths and aspect ratios for all devices and platforms with a single click while maintaining a cohesive look and feel, the company said.

Waymark said its AI-powered video platform enables creators to generate high-quality commercials for local businesses in five minutes or less. With a single click, creators can convert their Waymark videos into additional lengths, such as 30-, 15- and 5-second clips, and into complementary formats such as 16:9 and 4:5, creating an “omnichannel” video campaign, the company said.

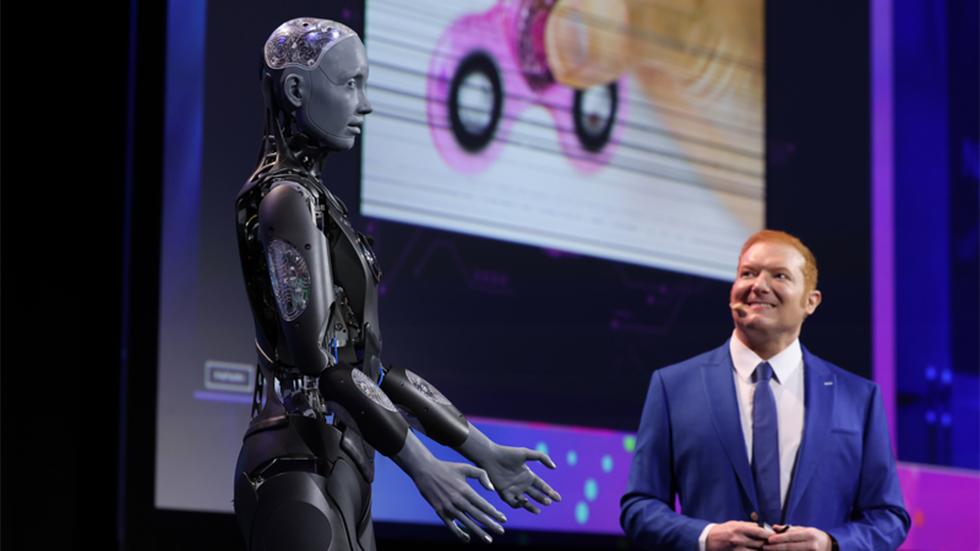

The ’Tudes

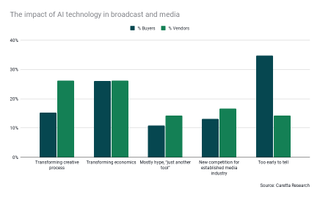

Enthusiasm over the possibilities of AI can often be overwhelmed by a sense of apprehension or dread over the unpredictability of its impact on the future of media. This was evident in a survey conducted by TVBEurope and TV Tech last summer on our readers’ attitudes towards AI.

The survey, carried out by Caretta Research, shows many broadcasters currently use AI in “back-office” processes, and 85% of respondents said they believe AI will have a “significant impact” on the media and broadcast industry, particularly by transforming the economics of their business.

Despite that cautious optimism, respondents, particularly those who buy technology, feel it is still “too early to tell” exactly how AI will affect the M&E industry. Many also believe AI is most effective where it has already proven itself in recent years: speech-to-text and the automation of software-based processing.

When asked what’s keeping them from adopting AI, 43% of respondents who buy technology cited a shortage of people who understand how to harness the technology, closely followed by 41% who said they were concerned about biased results.

(TV Tech and TVBEurope held a webinar with Caretta on the results last month.)

A similar study from Google showed that media companies are adopting AI first in back-office, HR, and sales and marketing tools, while taking a “wait and see” approach to incorporating it into media creation.

Among respondents who identified where gen AI is having the greatest effects, nearly two-thirds pointed to increased conversion (66%), the creation of new products and services (65%), improved leads and new customer acquisition (63%), and increased revenue (63%).

However, only 35% of the 169 respondents who answered the question reported using gen AI in the production process.

“Gen AI adoption has been much lower in production compared to customer service and marketing. This is because integrating gen AI into complex production processes might necessitate greater technical investment and a higher tolerance for disruption,” the report said.

In its 2024 Tech, Media & Telecom Report issued last month, Deloitte found that despite the ill will that lingers over the 2023 writers’ and actors’ strikes, particularly over AI, studios are taking a serious look at gen AI as pressure mounts for media companies to turn a profit on their streaming businesses.

“Revenues are high, but operating expenses and the costs of production, marketing, and advertising have typically become higher,” the company said in its report. “This is often true for many studio streamers that are funding their streaming services without profit while losing revenues from declining cable-TV subscriptions and advertising. Inflation, higher interest rates, and the impacts of the COVID-19 pandemic have further inflated costs, and studios now also compete with social media, user-generated content, and video games for consumer attention and revenues.”

In a blog post from the Brookings Institution last April, one showrunner perhaps best summed up Hollywood’s skepticism toward AI in 2024:

“If you were to take a technology like this and say, ‘We’re going to give this to artists and make their lives easier and make their artistic power even greater,’ I would say, ‘Oh, that’s really interesting,’ ” Raphael Bob-Waksberg, creator and showrunner of the Netflix series “BoJack Horseman,” was quoted as saying. “But I don’t trust the companies to do that … When you look at the larger applications of these technologies, companies and studios never want to use it to empower artists to make cooler stuff for the same amount of money. They want to make things cheaper, cut the artists out, pay people less and use these technologies in a way that doesn’t make the work better.”

The Rules

As any Beltway watcher will tell you, even-numbered years tend to produce the fewest legislative results in Washington, and 2024 was no different.

Despite predictions of “deepfake” videos flooding the politi-sphere during the 2024 campaign season, it’s hard to believe that the most publicized violation involved a batch of AI-generated Biden robocalls made in the lead-up to the New Hampshire primary last January.

And it wasn’t even the generative AI voice that landed the consultant (who worked for Dean Phillips, President Joe Biden’s Democratic primary opponent) in hot water; it was the transmission of inaccurate caller ID information. In an effort to show how serious it is about AI, however, the Federal Communications Commission came down hard, fining him $6 million.

Concerns over AI in political ads prompted FCC Chair Jessica Rosenworcel to propose rules that would require candidates and broadcasters to disclose when AI is used in political ads. Introduced in May, effectively the third quarter of a long political campaign, the proposal went nowhere fast.

It also drew the ire of Commissioner Brendan Carr, who has been nominated by President-elect Donald Trump for chair in the new year.

“There is no doubt that the increase in AI-generated political content presents complex questions, and there is bipartisan concern about the potential for misuse,” Carr said at the time. “But none of this vests the FCC with the authority it claims here. Indeed, the Federal Election Commission is actively considering these types of issues, and legislators in Congress are as well.

“But Congress has not given the FCC the type of freewheeling authority over these issues that would be necessary to turn this plan into law. And for good reason,” Carr added. “The FCC can only muddy the waters. AI-generated political ads that run on broadcast TV will come with a government-mandated disclaimer but the exact same or similar ad that runs on a streaming service or social media site will not?

“I am also concerned that it is part and parcel of a broader effort to control political speech,” Carr concluded.

Given Carr’s attitude, it’s unlikely AI regulation will be top of mind in the new administration. Nevertheless, organizations like the National Association of Broadcasters continue to lobby Congress for protections against the use of broadcasters’ content to train large language models without compensation, as well as against fake AI-generated images.

“I have seen the harm inflicted on local broadcasters and our audiences by Big Tech giants whose market power allows them to monetize our local content while siphoning away local ad dollars,” NAB President and CEO Curtis LeGeyt told TV Tech recently. “The sudden proliferation of generative AI tools risks exacerbating this harm. To address this, NAB is committed to protecting the unauthorized use of broadcast content and preventing the misuse of our local personalities by generative AI technologies.”

Rick Kaplan, the NAB’s chief lobbyist, echoed LeGeyt’s concerns in a blog post last fall, urging Congress to adopt rules that cover more than just broadcast.

“To truly tackle the issue of deepfakes and AI-driven misinformation, we need a solution that addresses all platforms, not just broadcast TV and radio,” Kaplan said. “Congress is the right body to create consistent rules that hold those who create and share misleading content accountable across both digital and broadcast platforms. Instead of the FCC attempting to shoehorn new rules that burden only broadcasters into a legal framework that doesn’t support the effort, Congress can develop fair and effective standards that apply to everyone and benefit the American public.”

Washington’s inaction didn’t stop one particularly important state from stepping in, though. In September, California Gov. Gavin Newsom signed a new law that would allow actors to refuse to honor contracts if studios don’t make specific commitments to prevent their likenesses from being duplicated by AI. The law is set to take effect in 2025 and has the support of the California Labor Federation and the Screen Actors Guild-American Federation of Television and Radio Artists, or SAG-AFTRA.

Newsom’s statement illustrated the importance the state places on Hollywood. “We continue to wade through uncharted territory when it comes to how AI and digital media is transforming the entertainment industry, but our North Star has always been to protect workers,” Newsom said. “This legislation ensures the industry can continue thriving while strengthening protections for workers and how their likeness can or cannot be used.”

Tom has covered the broadcast technology market for the past 25 years, including three years handling member communications for the National Association of Broadcasters followed by a year as editor of Video Technology News and DTV Business executive newsletters for Phillips Publishing. In 1999 he launched digitalbroadcasting.com for internet B2B portal Verticalnet. He is also a charter member of the CTA's Academy of Digital TV Pioneers. Since 2001, he has been editor-in-chief of TV Tech (www.tvtech.com), the leading source of news and information on broadcast and related media technology and is a frequent contributor and moderator to the brand’s Tech Leadership events.