Tech Gains Strengthen the Case for On-Set Virtual Production

The tech is there, but is the expertise?

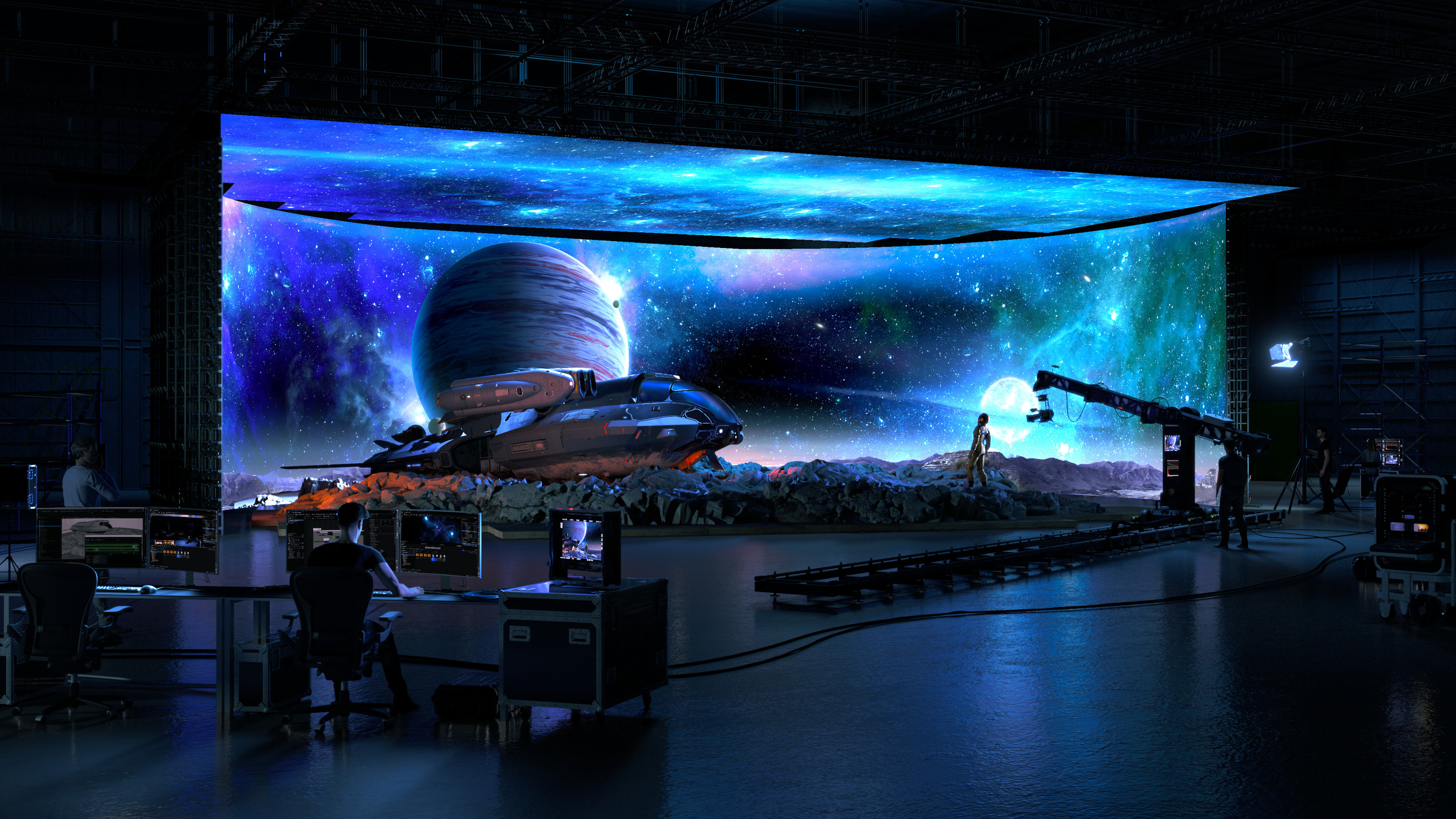

Recent innovations in live on-set virtual production (OSVP) have gone a long way toward addressing the concerns about workforce skillsets and costs that fuel producers’ lingering resistance to upending old ways of doing things.

As 2024 gets underway, the gains are impacting progress at both ends of the production spectrum and all points in between. At the high end, content producers now have access to an unprecedented array of purpose-built OSVP studios in the United States and beyond, many of which just came into operation over the past year or so. Featuring massively scalable LED volumes, these facilities are supporting projects that routinely set new OSVP benchmarks for use case versatility in film, broadcast, and ad productions.

Meanwhile, in-house productions are making use of LED wall building blocks linked to camera-to-computer rendering “cabinets” that can be stacked to support virtually any volume space. Workflow management systems harmonize dynamic elements across the physical and virtual spaces, including robotic cameras, 3D extended reality (XR) objects, lighting that illuminates the physical space in sync with on-screen luminosity, and much else.

Procedural and Cost Concerns

But, for all these gains, the next-gen approach to VP can still be a hard sell when it comes to convincing project managers to relinquish heavy reliance on postproduction in favor of spending upfront on expertise and technology that significantly reduces the need for location-based production.

The point is made by Gary Adcock, a leading voice in next-gen VP production who leverages expertise in lens and camera manufacturing to help producers navigate tough use cases.

Adcock says it’s often hard for people to embrace the idea of basing production on a roadmap that front ends preparations for dealing with all the nuances of visual effects, coloration, lighting, etc. that have traditionally been reserved for post.

“You have to think about what the end point is and work backwards to make sure what you’re setting up in VP will take you there,” Adcock explains. “For people who have spent a long time in film, that can be a hard transition to make.”

The transition is especially hard when they must get budgetary approval to cover the upfront costs. “Our rough planning is every three meters of wall has its own computer rendering system,” Adcock explains. “When you start getting into 100-meter walls and every three meters you have to do that, all of a sudden you have a tremendously expensive outlay for computer systems.”

Those devices can run $25,000 to $40,000 each or more, he notes. And then there’s the cost of licensing and running in-camera VFX software such as the commonly used Epic Games-supplied Unreal Engine, which combines, compresses, unifies frame rates, and parses out the moment-to-moment scene-specific raw CGI and camera-captured video footage for synchronized rendering on each wall segment.
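To put rough numbers on Adcock's rule of thumb, the short Python sketch below estimates the rendering hardware outlay for a wall of a given length. It uses only the figures quoted above (one rendering system per three meters, $25,000 to $40,000 per system). The function name `render_node_cost` and the choice to round partial segments up to a whole system are assumptions made for illustration, and licensing, software, and operating costs are left out.

```python
import math

def render_node_cost(wall_m, meters_per_node=3.0, unit_low=25_000, unit_high=40_000):
    """Estimate rendering-system count and hardware cost range for an LED wall.

    Assumption: a partial three-meter segment still needs its own system,
    so the count is rounded up.
    """
    nodes = math.ceil(wall_m / meters_per_node)
    return nodes, nodes * unit_low, nodes * unit_high

# The 100-meter wall from Adcock's example
nodes, low, high = render_node_cost(100)
print(f"{nodes} rendering systems: ${low:,} to ${high:,}")
# prints "34 rendering systems: $850,000 to $1,360,000"
```

Under these assumptions, a 100-meter wall implies roughly $850,000 to $1.36 million in rendering hardware alone, before any software or staffing costs.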

But such costs pale next to what it can cost for a location-based shoot, especially if the in-camera VFX approach can deliver truly realistic visuals that would otherwise be impossible. For example, Adcock notes, no one can tell there are no real cars involved in the climactic wild car chase at the end of “The Batman,” released in 2022. “We’ve gotten to the point where we can mimic the reality of the world in a way that’s necessary for the types of things that are done for filming and television,” he says.

Recruitment Challenges

On top of these incremental costs, there’s a need to hire people with new skillsets and/or retrain existing staff to work in this new environment. This is made harder by the difficulties the industry is having with bringing new people on board, notes Ray Ratliff, evangelist for Vizrt products utilizing extended reality (XR) that have helped propel the company to the forefront in next-gen VP.

“A significant challenge to the industry is the lack of new people joining the broadcast engineering workforce,” Ratliff says. He knows this from first-hand experience with customers whose leaders “share the challenges of recruiting new talent from universities and broadcast-adjacent technology fields.”

Vizrt is doing its part to remedy the situation, Ratliff adds. “Vizrt works with universities in many parts of the world to expose technology students to the broadcast field and nurture their interest in the field,” he says. “We also have opportunities for internships and development programs that we are also in discussions to expand to further support building the broadcast engineering talent pipeline.”

AI to the Rescue

The heavy lifting is getting less burdensome thanks to advances in the use of AI, which Ratliff says Vizrt is leveraging “to eliminate complexity and bring more value to our customers.” One of the more dramatic cases in point involves a solution to one of the most vexing issues with in-camera VFX, namely, enabling realistic immersion of on-screen personalities into the virtual environment.

Vizrt’s latest version of its Viz Engine real-time graphics rendering platform incorporates a new AI-driven function called “Reality Connect” into its workflows. Utilizing AI algorithms in conjunction with continuously updated 3D models of people on set enabled by Viz Engine’s integration with Unreal Engine, the solution delivers “significant improvements in reflections and shadow in the virtual environment,” Ratliff says.

AI is providing many other ways to save staff time and reduce the level of expertise required to execute tasks. For example, Vizrt is using the technology to track objects in a scene, obviating the need to implement multilayer tracking systems.

Ratliff also points to the recently introduced Adaptive Graphics tool, which eliminates the need to manually configure graphics for each type of display used in a production by allowing designers to build a graphic once and set parameters for automatic adaptation to different displays.

Building OSVP Expertise

But even with such advances there remains a big gap to be filled when it comes to much-needed OSVP expertise. Closing that gap is a front-burner priority at Sony Electronics (SE) and Sony Pictures Entertainment (SPE), according to Jamie Raffone, senior manager of cinematic production at SE.

The two divisions have teamed up, using SE components to support in-camera VFX on Sony Innovation Studio’s Stage 7, a giant volume facility at SPE’s Culver City, Calif. production lot under management of Sony’s Pixomondo (PXO) unit.

“Everybody [in production] wants to understand not just what the costs are but what it takes to make use of this technology,” Raffone says. It’s not just a matter of retraining personnel, which is essential; it’s also about educating decision makers, she notes.

“There’s not a single role that says ‘yes’ or ‘no’ to using volume facilities in production,” she says. There needs to be decision-making expertise at multiple levels of management, “from vice presidents to directors to producers to people overseeing corporate investments.” At the ground level, once a go decision is made, there’s a need for knowledgeable oversight that “starts with script evaluation and getting the staff together to execute the project.”

Sony is playing an active role in filling these knowledge gaps, Raffone adds. “Sony is aligning with specific workflow integrators, designers, and consultants, so that when a company wants to use the Sony ecosystem, they have a partner guiding them through the entire workflow. There’s so much more to this than buying equipment.”

Simplifying Broadcasters' Use of OSVP

Making the right equipment choices is fundamental to expanding OSVP adoption. Looking at how this point has resonated in the live broadcasting arena, Mike Paquin, senior product manager for virtual solutions at Ross Video, says use of the technology “is starting to become more mainstream, in part because we’re making it more user friendly.”

Automated orchestration of components is a big part of this, he adds. Under the command of Ross’s widely used Overdrive automated production control system, the company’s VP controller Lucid orchestrates every in-house or third-party component that’s been integrated to operate in the VP workflow, including switchers, robotic cameras, graphics platforms, and Ross’s Unreal-powered Voyager XR rendering system.

With its acquisition of G3 LED in 2021, Ross also became a leading supplier of LED panels that can be seamlessly stacked for use in any scenario, including giant volumes with pixel pitches as small as 1.56 mm encompassing floors, walls and ceilings. “We’re one stop,” Paquin says. “The only things we don’t provide are lenses and cameras.”

These integrated solutions have gone a long way toward simplifying OSVP for broadcasters, Paquin notes. “We’re helping people do stuff every day without an army of people,” he says. “Instead of needing one studio for the news set, another for sports, separate ones you have to set up for special events, you can flip a whole set from one use to another during a commercial break.”

Will in-camera VFX be the live broadcasting norm everywhere in two or three years? “That’s my goal,” Paquin says.

Fred Dawson, principal of the consulting firm Dawson Communications, has headed ventures tracking the technologies and trends shaping the evolution of electronic media and communications for over three decades. Prior to moving to full-time pursuit of his consulting business, Dawson served as CEO and editor of ScreenPlays Magazine, the trade publication he founded and ran from 2005 until it ceased publishing in 2021. At various points in his career he also served as vice president of editorial at Virgo Publishing, editorial director at Cahners, editor of Cablevision Magazine, and publisher of premium executive newsletters, including the Cable-Telco Report, the DBS Report, and Broadband Commerce & Technology.