Active Archives for the Future

Karl Paulsen

A lot of growth is happening in the storage space for media and entertainment. The broadcast central equipment room (CER) is bulging at the floor tiles and needs ever more space to house IT-centric equipment, including servers, core network switching equipment and storage sleds packed with disk drives. Conventional broadcast equipment, meanwhile, seems to keep shrinking in size, quite possibly to compensate for all the extra IT parts and pieces needed in the CER.

Besides the enduring question about how to handle the metadata, what we’ve been finding is that organizing storage systems for this industry is as much a random equation as it is a unique trial-by-fire approach. Product developments, technologies, and shifting facility decisions about which resolution to store and where to store it are all contributing to this moving target. When this column first started appearing in the early 1990s, we had simple sets of storage subsystems. There were the dedicated high-performance drives for storing full-bandwidth “D1” or “D2” signals on 25-second (NTSC) digital disk recorders; proprietary multiplatter Fujitsu drives (for the Quantel Harry); and arrays of 4 GB hard drives that kept motion-JPEG files on video servers with a capacity of 12 hours or less. In each case, drives were qualified and provided directly by the vendors. They worked on either product-specific operating systems or product-specific file systems. And we had no interoperability between one storage system and another.

WHAT A DIFFERENCE TWO DECADES MAKE

We’ve seen times change dramatically in just 20 years. With virtualization and cloud storage, we’re embarking on new directions for handling “big data,” at both the local facility level and the archive level. The term “active archive” is starting to emerge as a growing alternative to past generational attempts to manage data across many forms or tiers of storage. Disk storage systems for backups have successfully been implemented at facilities that need rapid, untethered accessibility to content at all times. Medium- and long-term storage of data, at a tier-3 or deeper level, still depends on magnetic tape, which costs the user much less than spinning magnetic media (disks) and allows for multiple copies to be stored at differing locations.

Tape users face challenges that include accessibility, media reliability, assurance of data integrity, and various management, security or protection costs (affecting the overall total cost of ownership, or TCO). Latency in retrieving the data can be cumbersome, especially when the archive is located in a salt mine or a secure vault managed by a third party. Refreshing the tape library before the media goes stale is an exhausting and never-ending challenge. Migrating from one’s legacy tape format to a current technology continually affects operating expenses, and can result in the updates being put off until it’s too late.

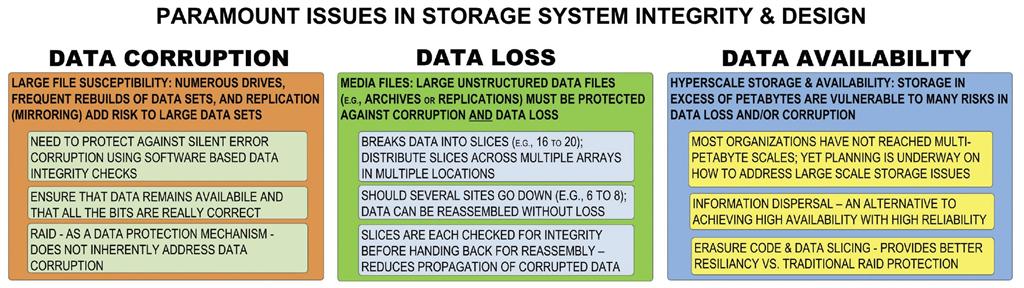

New storage practices go beyond traditional RAID and employ software-based storage management for handling unstructured data systems of petabyte or larger proportions. This table outlines three key issues surrounding data integrity for disk-based data storage systems.

What are the options when you need to maintain petabyte-scale archives on costly spinning disks? How does the operator validate and justify the costs so as to provide a highly reliable, long-term archive that can guarantee accessibility, integrity and scalability, and do so in an automated fashion? One way is to take a different approach from the traditional means of storing and replicating the data across multiple sets of mirrored RAID arrays.

RAID is costly. It requires a minimum set of drives to achieve “protection,” and those drives always have a finite life expectancy: they will need replacing, and each replacement cycle poses a risk to operations. As drives reach multiple-terabyte capacities, the rebuild time, and with it the potential for a secondary (or further) failure during the rebuild, grows to the point where all the data must also be replicated to another site, another mirror or another form factor such as the cloud, tape or both, posing yet another set of problems to absorb.
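
To put rough numbers on the rebuild problem, here is a minimal back-of-the-envelope sketch. It assumes the rebuild simply rewrites the whole replacement drive at one sustained rate; the 100 MB/s figure and the function name `rebuild_hours` are illustrative assumptions, not measurements from any particular array.

```python
def rebuild_hours(capacity_tb: float, rebuild_mb_per_s: float) -> float:
    """Hours needed to rewrite one full drive at a sustained rebuild rate."""
    capacity_mb = capacity_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return capacity_mb / rebuild_mb_per_s / 3600


# ASSUMPTION: 100 MB/s is an illustrative sustained rebuild rate, not a measured one.
ASSUMED_RATE_MB_PER_S = 100

for capacity_tb in (1, 4, 8):
    hours = rebuild_hours(capacity_tb, ASSUMED_RATE_MB_PER_S)
    print(f"{capacity_tb} TB drive: about {hours:.1f} hours of rebuild time")
```

Under this simple model the window of exposure grows linearly with drive capacity, and a busy array that throttles rebuild traffic would stretch it further.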

ON THE FLY

For a data archive system to be effective, it must be capable of scaling “on the fly.” This means it must be possible to add nodes seamlessly, through systems that support auto-detection without manual intervention. The systems (disks and nodes) must be self-healing and self-monitoring, so that the need for intervention shrinks rather than grows as the storage platform expands.

A properly configured archive should be engineered to run on low-power drives (some systems run on as little as 7 watts per terabyte). In the not-too-distant future, the drives or nodes may employ a power-down mode, further decreasing the power load, reducing the cooling requirement and extending the life of the drives. Current systems, employing object-based storage, spread data across 16 to 20 drives (per node) and allow for as many as 4 to 8 drive failures per node before there is a risk of data loss, and do so without using any form of conventional RAID configuration. These systems use a technology called erasure coding that provides the highest level of data durability (“10 to 15 nines” or beyond).
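
The trade-off behind those drive counts can be sketched with simple arithmetic. The example below assumes an idealized scheme in which an object is split into `n` fragments, one per drive, and survives the loss of any `m` of them. It also assumes drives fail independently with the same probability `p` during one repair window. The function names and the 1 percent figure are hypothetical, chosen only for illustration, and real products will differ in the details.

```python
from math import comb


def storage_overhead(n: int, m: int) -> float:
    """Raw capacity consumed per unit of user data when any m of n fragments may be lost."""
    return n / (n - m)


def loss_probability(n: int, m: int, p: float) -> float:
    """Chance that more than m of n drives fail, assuming independent failures."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(m + 1, n + 1))


# ASSUMPTION: a 1% chance that any one drive fails within a repair window.
P_FAIL = 0.01

# A mirrored pair is modeled here as 2 fragments, 1 tolerated failure.
for label, n, m in (("mirrored pair", 2, 1), ("16 drives, 4 failures", 16, 4),
                    ("20 drives, 8 failures", 20, 8)):
    print(f"{label}: overhead {storage_overhead(n, m):.2f}x, "
          f"loss probability {loss_probability(n, m, P_FAIL):.2e}")
```

Under these assumptions the 16-drive layout uses less raw capacity per stored byte than a mirror while being far less likely to lose data, which is the basic argument for spreading fragments widely instead of mirroring.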

What essentially happens in this relatively new archive model is that organizations can now build “active archives” on a single tier of reliable, highly efficient and easily accessible disks, while still keeping another backup set on physical removable media if so desired. However, we must clarify that this is an archive model and not the high-bandwidth “edit in place” model, serving dozens of workstations, that one might find in a file-based workflow environment with many users accessing the storage system simultaneously. For the latter form of storage, production will need high spindle counts working in parallel, which still remain the norm and should not be confused with the need for an archive.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his current book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.