Clearing video’s Internet path

Networks, content providers and production companies have long been interested in delivering high-quality, high-definition video over the Internet to PCs and mobile devices. However, technical issues have prevented the dream from becoming a reality. While the Internet is an effective means of transport for a range of applications and services, anyone who has used it to watch a YouTube video or an episode of their favorite television program can attest that it’s not always a great way to broadcast HDTV.

Internet video transport is unreliable and non-deterministic, which makes it largely unsuitable for broadcasters that need to deliver high-quality video. This is often blamed on a lack of bandwidth. In fact, today’s Internet has plenty of capacity to carry broadcast-quality live HD video; the problem lies with the existing Internet protocols. The bandwidth on “first mile” and “last mile” links is more than sufficient to carry broadcast-quality HD traffic. However, connecting the first and last miles via traditional Internet IP transport frequently results in service problems such as start-up delay, buffering and frame freeze. If a video stream doesn’t start within seconds, or if the picture freezes or the stream starts buffering, viewers quickly become frustrated and abandon the video.

Broadcasters that want to transport high-quality video over the Internet need a solution that uses the end-to-end bandwidth already available and delivers outstanding performance with high reliability. Specifically, the solution should achieve split-second latency and near-zero packet loss, and deliver the highest video quality (up to 1080p at 60 frames per second) without sacrificing delivery time or resolution.

The challenges

From a technical perspective, the challenges of delivering broadcast-quality HDTV over the Internet stem from the large amount of data involved; the way packet loss, latency and jitter are handled; the requirement for uninterrupted video streaming; and the way Internet transport protocols work.

The Internet’s TCP transport protocol was designed decades ago, when physical network links were far more prone to transmission errors, delivery delays and broken connections. TCP guarantees delivery of data over unreliable connections, but it is not time-sensitive, and it does not make full use of the network bandwidth. Tests reveal that with only 0.1 percent packet loss over the Internet, TCP-based video streaming solutions reduce the bandwidth available for video by 80 percent. In short, TCP was not designed for real-time video transport.
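The article does not say which tests produced the 80 percent figure. A widely cited back-of-the-envelope model from Mathis et al. shows the same effect qualitatively: a single TCP Reno-style flow is capped at roughly (MSS/RTT)·(C/√p), no matter how much capacity the links have. The sketch below uses that model as a stand-in; the segment size, round-trip time and loss rates are illustrative assumptions, not measurements.

```python
import math


def mathis_tcp_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate steady-state throughput of one TCP Reno-style flow.

    Mathis et al. model: rate ~ (MSS / RTT) * (C / sqrt(p)), with C = sqrt(3/2).
    It ignores timeouts and link capacity, so treat it as a rough ceiling.
    """
    if not 0 < loss_rate < 1:
        raise ValueError("loss_rate must be in (0, 1)")
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    c = math.sqrt(1.5)
    return (mss_bytes * 8 / rtt_s) * (c / math.sqrt(loss_rate))


if __name__ == "__main__":
    # Hypothetical path: 1460-byte segments and a 100 ms round trip.
    for p in (0.0001, 0.001, 0.01):
        mbps = mathis_tcp_throughput_bps(1460, 0.100, p) / 1e6
        print(f"loss {p:.2%}: about {mbps:.1f} Mbit/s per flow")
```

With a hypothetical 100 ms round trip and 0.1 percent loss, the model gives roughly 4.5 Mbit/s per flow, which can be far below the capacity of the underlying links, and each tenfold increase in loss cuts the ceiling by about a factor of three.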

Today, popular methods for streaming and transporting video over the Internet use only 15 to 50 percent of the available bandwidth for the user’s video. Live content and mobile access add further challenges that can make these streams unwatchable.

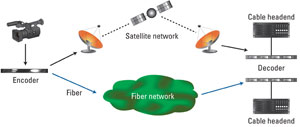

Figure 1. Even when using dedicated landlines, latency is still a major factor in delivering HD-quality video. This is even more the case when satellites are in play.

Choosing among existing Internet protocols to optimize reliability has meant increasing latency and adding overhead to the video stream. For example, the Real-Time Streaming Protocol (RTSP) does not define exactly how video can be streamed without startup delay, buffering or pauses, or without lowering the video resolution. Other TCP-based streaming protocols have struggled to use the available bandwidth efficiently amid constantly changing Internet conditions caused by varying degrees of packet loss, latency and jitter.

One result has been high latency: delivery delays as the video stream enters, travels through and exits the Internet. Some of this is unavoidable because of transit time and distance; even a signal traveling at the speed of light would experience it. Latency is a major factor even on conventional dedicated landlines, and even more so when satellite relays orbiting the Earth are used. (See Figure 1.)
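To put the speed-of-light floor in numbers, the sketch below estimates one-way propagation delay, assuming light in silica fiber travels at about c/1.47 and a best-case geostationary hop in which both earth stations sit directly beneath the satellite. The 5,000 km fiber path is a hypothetical example; real routes are longer than great-circle distances and add equipment delay on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum
FIBER_INDEX = 1.47                  # assumed refractive index of silica fiber
GEO_ALTITUDE_KM = 35_786            # geostationary orbit altitude above the equator


def fiber_delay_ms(path_km: float) -> float:
    """One-way propagation delay over fiber, excluding equipment delay."""
    speed_km_s = SPEED_OF_LIGHT_KM_S / FIBER_INDEX
    return path_km / speed_km_s * 1000


def geo_hop_delay_ms() -> float:
    """Best-case one-way ground-to-satellite-to-ground delay."""
    return 2 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_S * 1000


if __name__ == "__main__":
    print(f"5,000 km of fiber: {fiber_delay_ms(5000):.1f} ms one way")
    print(f"One GEO satellite hop: {geo_hop_delay_ms():.1f} ms one way")
```

Under these assumptions, the 5,000 km fiber path costs about 25 ms one way, while a single geostationary hop costs about 239 ms before any encoding, routing or buffering is added.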

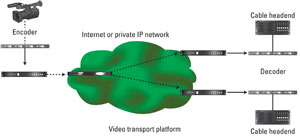

Some of the delay is caused by the processing required to push a signal through the Internet’s hardware. First, encoders compress SDI video into H.264 or MPEG-4 content and turn it into packets. Routers then switch the packets from one link in the Internet to the next, and decoders finally de-packetize the stream at the other end. (See Figure 2.) Network devices may also perform compression or add encryption and other security measures so that unauthorized users can’t watch the stream. All of this adds latency.
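The article does not specify how the encoded stream is packetized. One common convention for carrying H.264 over IP, assumed here, wraps the stream in 188-byte MPEG-2 transport stream (TS) packets and groups seven of them into each UDP datagram (1,316 bytes), which fits within a 1,500-byte Ethernet MTU. The sketch splits a TS byte stream into datagram payloads and estimates how many datagrams per second a given bit rate produces; the function names are hypothetical.

```python
TS_PACKET_SIZE = 188          # bytes per MPEG-2 TS packet
TS_SYNC_BYTE = 0x47           # every TS packet starts with this byte
TS_PER_DATAGRAM = 7           # 7 x 188 = 1,316 bytes per UDP payload


def packetize(ts_stream: bytes) -> list[bytes]:
    """Split a TS byte stream into UDP payloads of up to seven TS packets."""
    if len(ts_stream) % TS_PACKET_SIZE:
        raise ValueError("stream must be a whole number of 188-byte TS packets")
    for off in range(0, len(ts_stream), TS_PACKET_SIZE):
        if ts_stream[off] != TS_SYNC_BYTE:
            raise ValueError(f"missing sync byte at offset {off}")
    chunk = TS_PACKET_SIZE * TS_PER_DATAGRAM
    return [ts_stream[i:i + chunk] for i in range(0, len(ts_stream), chunk)]


def datagrams_per_second(bitrate_bps: float) -> float:
    """Datagram rate for a stream of the given bit rate, assuming full datagrams."""
    return bitrate_bps / (TS_PACKET_SIZE * TS_PER_DATAGRAM * 8)


if __name__ == "__main__":
    fake_ts = (bytes([TS_SYNC_BYTE]) + bytes(187)) * 20  # 20 dummy TS packets
    payloads = packetize(fake_ts)
    print([len(p) for p in payloads])
    print(f"{datagrams_per_second(10e6):.0f} datagrams/s at 10 Mbit/s")
```

For a 10 Mbit/s stream this works out to roughly 950 datagrams per second, each of which routers must forward and the decoder must reassemble.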

Figure 2. Another source of delay is a signal’s push through Internet hardware. Content has to be packetized, sent and then unpacked.

Another source of delay is buffering, which prevents video streams from starting quickly and can take anywhere from several seconds to minutes. Even the delay-before-play that occurs when a viewer selects an on-demand video through a cable box is shorter than the buffering needed to play H.264 content over the Internet.
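Part of the reason buffering takes seconds is that the receiver must hold back enough video to ride out jitter and, if it repairs losses by retransmission, to wait out the round trips those repair requests take. The simplified model below, an assumption for illustration rather than any particular product’s formula, sizes that playout buffer as a jitter allowance plus one round trip per retransmission attempt. The function name is hypothetical.

```python
def playout_buffer(rtt_s: float, max_retries: int, jitter_s: float,
                   bitrate_bps: float) -> tuple[float, int]:
    """Return (startup delay in seconds, buffer size in bytes).

    Simplified model (assumption): each retransmission attempt costs about one
    round trip, plus a fixed allowance for network jitter.
    """
    if rtt_s < 0 or jitter_s < 0 or max_retries < 0 or bitrate_bps < 0:
        raise ValueError("inputs must be non-negative")
    delay_s = jitter_s + max_retries * rtt_s
    # Bytes of video that arrive during the delay and must be held before play.
    size_bytes = round(bitrate_bps * delay_s / 8)
    return delay_s, size_bytes


if __name__ == "__main__":
    delay, size = playout_buffer(rtt_s=0.100, max_retries=3, jitter_s=0.050,
                                 bitrate_bps=10e6)
    print(f"startup delay {delay:.2f} s, buffer {size} bytes")
```

With a 100 ms round trip, three retransmission attempts and 50 ms of jitter allowance, playback cannot start for about 0.35 s, and a 10 Mbit/s stream needs roughly 437,500 bytes of buffer; longer paths and more attempts push startup delay higher.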

A third source of delay is packet loss. IP video streams need to be assembled and played in the right order at the right time. Some applications, like e-mail, web surfing, file transfers and peer-to-peer downloads, can tolerate packets that do not arrive in real time or that arrive in the wrong order. Multimedia applications can be designed to be “lossy,” tolerating some packet loss, or to require the entire original signal to be received with no packet loss. Even telephone calls can handle some packet loss while still providing good service. Broadcast-quality HDTV, however, needs the entire original stream with near-zero packet loss.

Packet loss creates overhead. The network has to re-request the missing packets, or send extra packets in advance, which forces buffering and holds up delivery of the video stream until the missing packets are accounted for. Real-time performance suffers in one of several ways. The application may need enough buffering time to accommodate packet loss, which could take up to several minutes. Or it needs more bandwidth from the end-to-end network to carry the extra recovery packets. Or it needs to be video-aware, ensuring that the more important packets, such as I-frames, always get through while some less-important frames may be missed. As a result, the video will be distorted periodically, creating a frustrating delivery and viewing experience.
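Putting packets back in order and spotting the gaps to re-request is typically done with sequence numbers. The sketch below is a minimal reorder buffer under stated simplifications: sequence numbers start at zero and never wrap (real protocols such as RTP use 16-bit counters that do), and there is no timeout for giving up on a lost packet. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ReorderBuffer:
    """Releases packets strictly in sequence order and reports gaps."""
    next_seq: int = 0                                   # next sequence number to play
    pending: dict[int, bytes] = field(default_factory=dict)  # out-of-order packets held

    def push(self, seq: int, payload: bytes) -> list[bytes]:
        """Accept one packet; return the payloads that are now playable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # late duplicate or already held: ignore it
        self.pending[seq] = payload
        ready = []
        # Drain every consecutive packet starting at next_seq.
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready

    def missing(self) -> list[int]:
        """Sequence numbers to re-request: gaps below the highest packet held."""
        if not self.pending:
            return []
        top = max(self.pending)
        return [s for s in range(self.next_seq, top) if s not in self.pending]


if __name__ == "__main__":
    buf = ReorderBuffer()
    print(buf.push(0, b"a"))   # in order, released immediately
    print(buf.push(2, b"c"))   # held: packet 1 has not arrived
    print(buf.missing())       # gap to re-request
    print(buf.push(1, b"b"))   # gap filled, both released
```

A receiver would call `missing()` periodically and send those sequence numbers back to the sender as retransmission requests; a single gap holds up every packet behind it, which is the delivery stall described above.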

The solution

To date, there have been interesting solutions to the problems noted above, yet none of them makes the most efficient use of available bandwidth. For example, common approaches such as forward error correction (FEC) and Scalable Video Coding (SVC) are designed for short video interviews and multi-way video conferencing, respectively. They minimize latency but add excessive overhead to the bandwidth available for video without ensuring near-zero packet loss. This overhead, which does not guarantee packet recovery, may also be unsuitable for continuous-play, broadcast-quality video where the video bit rate approaches the available bandwidth. Such alternative transport methods can provide low latency, but they cannot guarantee recovery from packet loss because of the network’s unpredictable behavior and the statistical nature of their packet-recovery mechanisms. These solutions are not designed for 24/7 continuous play or for viewing a two-hour movie.
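To see why FEC overhead does not guarantee recovery, consider the simplest scheme, assumed here for illustration: one XOR parity packet for every group of k equal-length data packets. It costs 1/k extra bandwidth and can rebuild exactly one lost packet per group, but a burst that removes two packets from the same group is unrecoverable. The function names below are hypothetical.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(group: list[bytes]) -> bytes:
    """Build one parity packet for a group of equal-length data packets."""
    if len({len(p) for p in group}) != 1:
        raise ValueError("group must be non-empty and equal-length (pad first)")
    return reduce(xor_bytes, group)


def recover(received: list[Optional[bytes]], parity: bytes) -> list[bytes]:
    """Rebuild at most one missing packet (marked None) from the parity packet."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError(f"{len(missing)} losses in one group; single parity cannot recover")
    out = list(received)
    if missing:
        # XOR of the parity with every surviving packet equals the lost packet.
        survivors = [p for p in received if p is not None]
        out[missing[0]] = reduce(xor_bytes, survivors, parity)
    return out


if __name__ == "__main__":
    group = [b"abcd", b"efgh", b"ijkl", b"mnop"]   # k = 4, so 25% overhead
    parity = make_parity(group)
    print(recover([group[0], None, group[2], group[3]], parity))
```

With k = 4 the overhead is 25 percent, yet two losses in one group still defeat it; whether recovery succeeds depends on how losses happen to fall, which is the statistical behavior the paragraph describes.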

What is needed is a platform that addresses different video applications, such as over-the-top HD video viewing, 24/7 video distribution as an alternative to satellite or fiber, low-latency field reporting, sports and mobile broadcasting. It needs to address the fundamental challenges of transmitting high-quality video over the Internet, whether the priority is low latency or flawless HD movie viewing. The optimal platform must be able to deliver pristine, uninterrupted, low-latency video despite the Internet’s inherently variable and nondeterministic network conditions.

Summary

Today, new technologies are emerging that address these needs. These revolutionary video transport platforms are based on the RTSP framework and use the efficiency of the standard UDP-over-IP protocol to maximize use of available bandwidth. In addition, methods are being designed to handle packet loss, latency and jitter to ensure on-time, reliable streaming and delivery of video over the Internet, resolving issues dynamically with sub-millisecond response times.

Without adding overhead to physical bandwidth, these new video transport methods provide low end-to-end latency, eliminate jitter, smooth the video flow, and recover and re-order packets so the video is restored to its original form before it is played. These solutions are enabling broadcasters to finally use the Internet to deliver video at the highest quality, more affordably and effectively than ever before.

—Israel Drori is founder and CEO of ZiXi.