Computers and Networks: Compression

Compression is one of the enabling technologies that allow computers to process video.

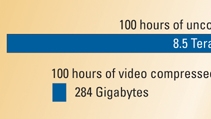

It is vital because it conserves precious storage space. Uncompressed video files are big. How big? Well, 100 hours of uncompressed video (the average size of an active commercial library in a local television affiliate) requires about 8.5 terabytes. That's a lot of storage.

Figure 1. A visual representation of 30:1 compression. One hundred hours of uncompressed video would occupy about 8.5 terabytes of storage. At 30:1 compression, the same 100 hours would occupy about 284 gigabytes.

But storage is only one dimension of the problem. Moving uncompressed files over conventional 10BaseT or even 100BaseT networks can take hours. Large video transfers can also crash other applications sharing the network: those applications may fail with timeout errors while they wait for a response from an application at the other end. As more video users are added to the network (instead of using simple point-to-point connections), conventional “dumb” hubs must give way to switching technology to increase the bandwidth available to each user. Gigabit Ethernet (Gig-E, or 1000BaseT) makes things more tolerable, but large transfers can still slow traffic on these networks to a crawl.
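To put "hours" in perspective, here is a back-of-the-envelope sketch of how long one hour of uncompressed video takes to cross each network. It assumes one hour is 1/100 of the article's 8.5-terabyte figure, treats a terabyte as 10^12 bytes, and uses the nominal line rate with no protocol overhead, so real transfers will be slower.

```python
def transfer_hours(size_bytes: float, link_bps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move size_bytes over a link of link_bps bits per second.

    efficiency < 1.0 is an assumed fudge factor for protocol overhead and contention.
    """
    return size_bytes * 8 / (link_bps * efficiency) / 3600

# Assumption: one hour of uncompressed video is 1/100 of 8.5 TB (decimal units).
ONE_HOUR_UNCOMPRESSED = 8.5e12 / 100

for name, bps in [("10BaseT", 10e6), ("100BaseT", 100e6), ("1000BaseT", 1e9)]:
    print(f"{name}: {transfer_hours(ONE_HOUR_UNCOMPRESSED, bps):.2f} h")
```

At full line rate this works out to roughly 18.89 hours on 10BaseT, 1.89 hours on 100BaseT and 0.19 hours (about 11 minutes) on Gig-E for a single hour of material.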

When computer scientists and video engineers first tackled the problem, they applied compression/decompression schemes, also called codecs, that had been used in the traditional IT sector. These early codecs achieved bit-rate reductions on the order of 50 percent, or 2:1, by looking for repeated sequences of bits. For example, a scene of a person sitting in front of a white background contains a lot of repetitive information. Instead of sending every bit, the codec says, in effect, “repeat this pattern of bits N times.” Such codecs are lossless.
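The simplest form of "repeat this pattern N times" is run-length encoding. The article does not say which algorithm the early IT codecs used, so treat this as an illustration of the idea rather than any particular product. The scan line of a white background with a small dark object is made-up data.

```python
from itertools import groupby

def rle_encode(data: bytes) -> list[tuple[int, int]]:
    """Collapse each run of identical bytes into a (byte value, run length) pair."""
    return [(value, len(list(run))) for value, run in groupby(data)]

def rle_decode(pairs: list[tuple[int, int]]) -> bytes:
    """Expand each (value, count) pair back into count copies of value."""
    return b"".join(bytes([value]) * count for value, count in pairs)

# Hypothetical scan line: white background (255) with a small dark object (20).
line = bytes([255] * 300 + [20] * 20 + [255] * 300)
pairs = rle_encode(line)
print(pairs)  # [(255, 300), (20, 20), (255, 300)]
assert rle_decode(pairs) == line  # lossless: an exact, bit-for-bit copy
```

Three pairs replace 620 bytes, and decoding reproduces the original exactly, which is what makes the scheme lossless.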

As the name implies, lossless codecs compress a file and then uncompress it so that the final result is an exact, bit-by-bit copy of the original. If you have an exact copy of the original, then by definition there are no compression artifacts. Unfortunately, the compression ratio of lossless codecs seems to be limited to somewhere between 2:1 and 3:1. Past this point, codecs must achieve some of the bit-rate reduction by “throwing out” bits that are deemed unnecessary. And as soon as you start throwing out bits, you no longer have a perfect, lossless copy.
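The sketch below shows why throwing out bits helps and what it costs. It makes a crude assumption: the discarded bits are simply the low-order bits of each 8-bit sample. Real codecs are far more selective about what they discard, but the trade-off is the same. The "white" background with slight sensor variation is hypothetical data.

```python
from itertools import groupby

def run_count(samples: list[int]) -> int:
    """Number of runs of identical values; fewer runs means RLE compresses better."""
    return sum(1 for _ in groupby(samples))

def quantize(samples: list[int], drop_bits: int) -> list[int]:
    """Throw out the drop_bits least significant bits of each 8-bit sample."""
    mask = 0xFF & ~((1 << drop_bits) - 1)
    return [s & mask for s in samples]

# Hypothetical "white" background with slight variation (values 249..255).
original = [255, 252, 254, 249, 253, 250, 255, 251] * 10
lossy = quantize(original, drop_bits=3)

print(run_count(original), run_count(lossy))  # 80 1
print(lossy == original)                      # False
```

Dropping three bits turns 80 distinct runs into a single run, so a run-length coder would shrink the line dramatically, but the decoded samples are no longer the originals: every value comes back as 248.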

Most users are willing to sacrifice the perfect copy at the receiving end in exchange for an additional 30 percent to 40 percent bit-rate reduction. However, as soon as you employ these schemes, you introduce artifacts. Lots of effort goes into hiding the artifacts from the end viewer. Engineers have engaged in a very interesting interplay between physics and biology to achieve this. Without straying too far from the subject, it is important to realize that the human being at the end of the chain is a vital part of the whole system. The eye and brain have certain characteristics that make them less sensitive to missing bits in some areas than in others. Engineers developed compression techniques to take advantage of these specific characteristics of the human visual system.
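One well-known example of this idea, not named in the article, is chroma subsampling: the eye resolves fine detail in brightness much better than fine detail in color, so the two color-difference planes can be averaged over 2×2 blocks with little visible effect. This sketch assumes a 720 × 486 standard-definition raster and full-resolution chroma as the starting point. Broadcast equipment does this in hardware with more careful filtering than a plain average.

```python
def subsample_chroma(plane: list[list[int]]) -> list[list[int]]:
    """Average each 2x2 block of a chroma plane (assumes even width and height)."""
    h, w = len(plane), len(plane[0])
    return [
        [
            # +2 rounds the integer average to the nearest value
            (plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1] + 2) // 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]

def samples_per_frame(width: int, height: int, subsampled: bool) -> int:
    """One luma plane plus two chroma planes, optionally at quarter resolution."""
    luma = width * height
    chroma_each = luma // 4 if subsampled else luma
    return luma + 2 * chroma_each

full = samples_per_frame(720, 486, subsampled=False)
reduced = samples_per_frame(720, 486, subsampled=True)
print(full, reduced, reduced / full)  # 1049760 524880 0.5
```

Halving the sample count this way yields a 2:1 reduction before any other compression stage runs, which is why techniques tuned to the visual system are so attractive.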


With the advent of more complex compression algorithms, bit-rate reductions of 100:1 and beyond are now possible, but users have to make tradeoffs between bit-rate reduction on one hand and artifacts, editability and delay on the other. A decision about compression quickly becomes a balancing act. One combination of compression choices might be acceptable for transmission from a station to its viewers, but unacceptable for transmission of a sporting event from the field to the station. To further complicate the discussion, there is the issue of time delay through the compression chain. As a general rule, the more aggressive the compression, the more delay involved. A large amount of delay may be acceptable for one-way transmission, but may make a circuit unusable for a two-way live interview. For example, in a live-interview situation, the average untrained person is able to communicate over a circuit with a delay of up to about 850 ms. Larger delays cause the person to repeat a question or reply before the person at the other end has had a chance to respond, so beyond this point trained on-camera talent is required.
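A simple way to reason about this is a delay budget: add up the delay of every stage in the chain and compare the total with the roughly 850 ms the article cites for untrained interviewees. The stage names and millisecond values below are hypothetical placeholders, not measurements of any real encoder, and the sketch compares the limit against total circuit delay exactly as the article phrases it.

```python
UNTRAINED_LIMIT_MS = 850  # from the article: approximate limit for untrained interviewees

def circuit_delay_ms(stages: dict[str, float]) -> float:
    """Total circuit delay: the sum of the delays of each stage in the chain."""
    return sum(stages.values())

def suitable_for_untrained_talent(stages: dict[str, float]) -> bool:
    """True if the whole chain stays within the article's ~850 ms guideline."""
    return circuit_delay_ms(stages) <= UNTRAINED_LIMIT_MS

# Hypothetical stage delays in ms; aggressive compression is assumed to add encoder/decoder delay.
aggressive = {"pre-filter": 40, "encoder": 500, "transmission": 250, "decoder": 200}
lighter = {"pre-filter": 40, "encoder": 150, "transmission": 250, "decoder": 80}

print(circuit_delay_ms(aggressive), suitable_for_untrained_talent(aggressive))  # 990 False
print(circuit_delay_ms(lighter), suitable_for_untrained_talent(lighter))        # 520 True
```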

Figure 2. Decisions made about pre-filtering can be as important as decisions made about compression.


So how much compression can you use and still have “acceptable” artifacts and delay? The answer, of course, depends on several questions. Are you designing a one-way circuit? Are you planning to edit the video at the receiving end? Are you planning to re-compress the video for onward transmission? What is the end-viewer environment? To give you a frame of reference, bit-rate reductions of about 30:1 result in what may be described as an acceptable combination of artifacts and delay for some applications. Going back to our original figure of about 8.5 terabytes for 100 hours of uncompressed video, if a 30:1 compression ratio is acceptable, total storage can be reduced to about 284 gigabytes. (See Figure 1.) For many applications, it may be much easier to justify the cost of the smaller system.
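The storage arithmetic generalizes to any ratio. This sketch starts from the article's 8.5 TB per 100 hours and assumes decimal units (1 TB = 1,000 GB); with those units 30:1 gives about 283 GB, in line with the article's "about 284 gigabytes" to within rounding.

```python
UNCOMPRESSED_TB_PER_100_HOURS = 8.5  # the article's figure

def compressed_storage_gb(hours: float, ratio: float) -> float:
    """Storage in GB for `hours` of video at a ratio:1 compression ratio (decimal units)."""
    uncompressed_gb = UNCOMPRESSED_TB_PER_100_HOURS * 1000 * hours / 100
    return uncompressed_gb / ratio

# 2:1 and 3:1 span the lossless range; 30:1 and 100:1 are lossy figures from the text.
for ratio in (2, 3, 30, 100):
    print(f"{ratio}:1 -> {compressed_storage_gb(100, ratio):,.0f} GB")
```

The output runs from 4,250 GB at 2:1 down to 85 GB at 100:1, which shows how much of the storage saving comes only once lossy compression is accepted.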

Decisions made about the video being fed into the compression system can be as important as decisions about the compression system itself. Compression schemes reduce repetition in the data. If a scene is highly repetitive (a plain background behind a stationary speaker), it can be highly compressed without generating observable artifacts. If a scene has very little repetition (sports sequences with lots of action), it will be more difficult to compress. Noisy video images are some of the most difficult to compress because noise is a random pattern with almost no repetition. For this reason, many compression systems use pre-filtering to increase performance. These pre-filters can be quite complex in their own right, using the characteristics of the human visual system to hide the fact that they are removing information. In addition to filtering noise, pre-filters may also reduce the bandwidth of the video signal fed to the compression engine so that the compressor has less information to compress. There is a direct interaction between choices made in pre-filtering and choices made in the compression engine itself, and it can take quite a bit of work to achieve the right combination of the two. Fortunately, most manufacturers have done a lot of research in this area. Researchers have learned that simple bandstop filters or other non-intelligent pre-filtering algorithms produce poor results. After several years of development, engineers have come up with a number of more complex filtering techniques that are almost imperceptible to the average viewer.
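The effect of noise is easy to demonstrate with a general-purpose lossless compressor. This sketch uses Python's standard `zlib` module as a stand-in for a video codec (an assumption; video codecs work very differently) and compares a flat gray frame with the same frame plus small random noise. The 720 × 486 frame size and the ±4-level noise are illustrative choices.

```python
import random
import zlib

def ratio(data: bytes) -> float:
    """Lossless compression ratio achieved by zlib at its highest level."""
    return len(data) / len(zlib.compress(data, 9))

rng = random.Random(42)   # fixed seed so the run is repeatable
WIDTH, HEIGHT = 720, 486  # assumed standard-definition luma raster

# A flat gray frame, like a plain backdrop behind a stationary speaker.
clean = bytes([128]) * (WIDTH * HEIGHT)
# The same frame with +/-4 levels of random noise added to every sample.
noisy = bytes(128 + rng.randint(-4, 4) for _ in range(WIDTH * HEIGHT))

print(f"clean: {ratio(clean):.0f}:1, noisy: {ratio(noisy):.1f}:1")
```

The clean frame compresses by hundreds to one, while the noisy frame cannot do much better than about 2.5:1, because nine equally likely values carry a little over 3 bits of information per 8-bit sample. Removing noise before the compressor, without visibly damaging the picture, is the job of the pre-filter.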

There are many excellent articles and texts on compression. Dave Fibush, Chairman of the SMPTE Technology Committee on Compression, has written an excellent overview of video compression, which is available at www.tektronix.com/Measurement/App_Notes/backgrounders/vidcomp.html. A much more technical paper on desktop compression systems is available at crl.research.compaq.com/who/people/ulichney/papers/swcodecs96.pdf.

What about more conventional computer compression methods? Here the list seems endless: Windows Media Format, TIF, GIF, MP3, ZIP and on and on. The best thing to say is that there are many codecs, new ones come out every day, and the situation is likely to stay this way. The good news is that vendors have developed plug-ins that allow their products to support a wide variety of codecs.
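A plug-in architecture typically boils down to a registry that maps a codec name to its encoder and decoder, so the host application never needs to know how a particular codec works. The registry, `register_codec` and `round_trip` below are hypothetical names for illustration, not any vendor's actual plug-in API; `zlib` stands in for a real codec.

```python
import zlib
from typing import Callable

Encoder = Callable[[bytes], bytes]
Decoder = Callable[[bytes], bytes]

# Hypothetical plug-in registry: codec name -> (encoder, decoder).
CODECS: dict[str, tuple[Encoder, Decoder]] = {}

def register_codec(name: str, encoder: Encoder, decoder: Decoder) -> None:
    """A plug-in calls this once to make its codec available to the host."""
    CODECS[name] = (encoder, decoder)

def round_trip(data: bytes, name: str) -> bytes:
    """Encode and then decode data with the named codec, as a host might."""
    encoder, decoder = CODECS[name]
    return decoder(encoder(data))

# Two example plug-ins: zlib (lossless) and a pass-through "uncompressed" codec.
register_codec("zlib", zlib.compress, zlib.decompress)
register_codec("none", lambda b: b, lambda b: b)

print(sorted(CODECS))  # ['none', 'zlib']
```

Adding support for a new format then means shipping one more `register_codec` call rather than changing the host application.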

But although compression has been one of the key enabling technologies, its importance may be waning. Imagine a time of infinite bandwidth and free disk drives. In such a world, compression becomes unimportant. You may think that this view is crazy, but a number of top CTOs believe that, while disk and bandwidth will not be free, eventually their cost will become so low that other factors will become much more important. As one CTO explained recently, “If you can build a multiple Gig-E network for several thousand dollars, and if you can get disk storage for $50 per gigabyte, why worry about compression? Just build a faster network with bigger servers.” We will see what the future holds. For now, it's hard to imagine a world where costs are so low that compression becomes unimportant.
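Plugging the CTO's $50-per-gigabyte figure into the 100-hour library shows why it is still hard to dismiss compression. The sketch uses the article's 8.5 TB and 30:1 figures and assumes decimal units; it ignores servers, redundancy and networking, all of which would scale with capacity.

```python
PRICE_PER_GB = 50.0  # dollars, the CTO's figure quoted in the article

def storage_cost(gigabytes: float) -> float:
    """Raw disk cost in dollars, ignoring servers, RAID overhead and backup."""
    return gigabytes * PRICE_PER_GB

uncompressed_gb = 8500               # about 8.5 TB for 100 hours
compressed_gb = uncompressed_gb / 30  # 30:1 compression

print(f"uncompressed: ${storage_cost(uncompressed_gb):,.0f}")   # uncompressed: $425,000
print(f"30:1 compressed: ${storage_cost(compressed_gb):,.0f}")  # 30:1 compressed: $14,167
```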

Brad Gilmer is president of Gilmer & Associates, executive director of the AAF Association and technical moderator of the Video Services Forum.

Send questions and comments to: brad_gilmer@primediabusiness.com