Considerations for IP storage technology

Clouds, and the services hosted in them, including archiving, come in three flavors: private, public and hybrid. A public cloud archive uses a third-party storage service: the provider stores and manages assets in its own remote storage centers, and supplies all of the security, failover and disaster-recovery facilities as part of the service. Data transfer can be arranged in a number of ways, depending on the bandwidth available. However, although these services can be customized per user, they tend to suit enterprise-level data storage, and broadcast-specific issues such as larger file sizes (and the associated transfer times) or content security may make them less attractive.

A private cloud archive, such as the one shown in Figure 1, is created and maintained within the organization that uses it. It can range from a simple, single-server, disk-only archive serving a single department to a network of globally linked archive centers with a central database, all sharing and managing assets within what remains essentially a single closed system.

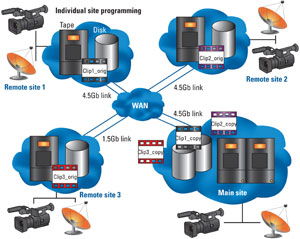

Figure 1. A private cloud archive provides standard archive functionality, as well as disaster-recovery facilities for multiple remote sites.

A hybrid archive is generally offered by a service provider (often as part of a public cloud archive service), but with a choice of storage locations: rapid-access content might be stored locally on the customer’s own servers, while material that is rarely used but must still be retained might be stored in the third party’s storage farms.

Using these definitions, private cloud archives are currently the most common form of broadcast archive, as the category covers all company-internal archives. There is no set rule on the size, schema or architecture of a private cloud archive. As with any broadcast system, the archive will be designed to meet the needs of each individual organization, and is likely to grow as the requirements placed upon it change. The service-level agreement (SLA) around which an archive is designed will differ from one organization to the next, which can produce radically different designs and interpretations. However, a number of considerations are common to all private cloud-based archives, and some of these are set out here.

Physical architecture

When building an archive within an existing operation containing standard broadcast systems (e.g. automation, asset management), the archive can generally operate across the same communications infrastructure as the rest of the organization, as long as this contains sufficient bandwidth to accommodate archive transfers.

A recent archive installed by a national broadcaster in Europe across four geographically separated sites used the existing WAN to replicate and centralize archive data.

A combination of 4.5Gb/s and 1.5Gb/s links carries a mixture of HD and SD live feeds and other content, along with an average of around 200TB per month of archived and restored data between the sites. The archive system can also schedule transfers, if required, so that they do not impinge on live content at busy times.
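A quick calculation shows what an average of 200TB per month means as a sustained link rate, and how much that rate rises if transfers are confined to an off-peak window. This is a minimal sketch, assuming decimal units (1TB = 10^12 bytes), a 30-day month and a hypothetical nightly window; the function name is illustrative only.

```python
# Sketch: sustained WAN rate implied by a monthly archive/restore volume.
# Assumptions (not from the article): decimal units (1 TB = 10**12 bytes),
# a 30-day month, and transfers optionally limited to a daily window.


def required_rate_gbps(tb_per_month: float, days: int = 30,
                       window_hours_per_day: float = 24.0) -> float:
    """Return the sustained rate in Gb/s needed to move tb_per_month
    when transfers only run for window_hours_per_day each day."""
    total_bits = tb_per_month * 10**12 * 8
    active_seconds = days * window_hours_per_day * 3600
    return total_bits / active_seconds / 10**9


if __name__ == "__main__":
    around_the_clock = required_rate_gbps(200)
    off_peak_only = required_rate_gbps(200, window_hours_per_day=8)
    print(f"24h transfers: {around_the_clock:.2f} Gb/s")
    print(f"8h nightly window: {off_peak_only:.2f} Gb/s")
```

Spread over the whole month, 200TB works out to roughly 0.62Gb/s; squeezed into an eight-hour nightly window it needs about 1.85Gb/s, which fits within a 4.5Gb/s link but would exceed a 1.5Gb/s link if all of that traffic crossed it.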


One point to note about transfer speeds across WANs is that, when archiving directly to tape, the data rate must be kept above a minimum to avoid undue stress on the tape drive’s write heads. If data arrives more slowly than a given drive’s optimum, the drive has to stop, reposition and restart repeatedly during the write process, a condition known as shoe-shining because the tape is drawn back and forth across the heads; this can reduce the quality and life span of both drive and tape. The minimum speed varies from drive to drive, but it is generally recommended to “feed” a drive at no less than 40MB/s.
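One common way to respect such a floor is to check the incoming rate before writing and, if it is too slow, land the data on disk first and write it to tape later at full speed. The sketch below assumes that staging policy (the article does not describe one); the function name and return labels are hypothetical, and only the 40MB/s guideline comes from the text.

```python
# Sketch: choose between writing straight to tape and staging on disk first.
# Assumptions: the 40 MB/s floor is the article's general guideline; the
# "stage-to-disk" fallback and all names here are hypothetical.

MIN_TAPE_FEED_MB_S = 40.0  # general recommendation quoted in the text


def choose_write_path(observed_rate_mb_s: float,
                      drive_min_mb_s: float = MIN_TAPE_FEED_MB_S) -> str:
    """Return 'direct-to-tape' if the incoming stream can keep the drive
    fed at or above its minimum rate, otherwise 'stage-to-disk'."""
    if observed_rate_mb_s >= drive_min_mb_s:
        return "direct-to-tape"
    return "stage-to-disk"


if __name__ == "__main__":
    for rate in (25.0, 40.0, 120.0):
        print(rate, choose_write_path(rate))
```

Because the minimum varies by drive, the floor is a parameter rather than a constant baked into the decision.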

Size — physical storage

The hardware required for the archive will depend on the agreed-upon levels of provision. Typically, both disk and tape storage will be used, and the total amount will, naturally, depend on the amount of data to be processed. However, the size of the archive will be defined not only by standard archive operation considerations (i.e., how much content is moved in a single operation from source to tape or disk), but also by supplemental services (e.g. disaster recovery, content migration) and retention policies.
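The factors listed above can be combined into a rough disk-tier sizing model. This is a minimal sketch with entirely hypothetical planning inputs (monthly ingest, how long content stays on disk, how many disk copies exist, and a headroom margin); it is not how the example broadcaster sized its system.

```python
# Sketch: a rough disk-tier sizing model. Every parameter here is a
# hypothetical planning input, not a figure from the article.
from dataclasses import dataclass


@dataclass(frozen=True)
class SizingInputs:
    ingest_tb_per_month: float    # content archived per month
    disk_retention_months: float  # how long content stays on the disk tier
    disk_copies: int = 1          # e.g. 2 if a DR site keeps its own disk copy
    headroom: float = 0.2         # spare fraction for migration and growth


def disk_tier_tb(s: SizingInputs) -> float:
    """Capacity in TB needed for the disk tier under the given policy."""
    working_set = s.ingest_tb_per_month * s.disk_retention_months
    return working_set * s.disk_copies * (1 + s.headroom)


if __name__ == "__main__":
    plan = SizingInputs(ingest_tb_per_month=50, disk_retention_months=3,
                        disk_copies=2)
    print(f"{disk_tier_tb(plan):.0f} TB of disk")
```

Retention policy and DR copies multiply the working set, which is why they can dominate the final figure as much as day-to-day archive traffic does.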

At our multi-site European broadcaster, the archive cloud handles 200TB per month of archive/restore transfers using 600TB of disk storage in total, backed by a disaster-recovery (DR)/deep-archive tape library of more than 3,000 LTO-5 tapes.
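For scale, the tape library’s raw capacity follows directly from its cartridge count. The sketch uses the LTO-5 native (uncompressed) capacity of 1.5TB per cartridge, which is a published format figure rather than one from the article; it ignores the 3TB “compressed” rating because broadcast video is usually already compressed, and it says nothing about how many tapes hold duplicate DR copies.

```python
# Sketch: native capacity of a tape library from its cartridge count.
# LTO-5 native (uncompressed) capacity is 1.5 TB per cartridge; the 2:1
# compressed rating is not assumed, since video is usually pre-compressed.

LTO5_NATIVE_TB = 1.5


def library_capacity_pb(cartridges: int,
                        tb_per_cartridge: float = LTO5_NATIVE_TB) -> float:
    """Native library capacity in petabytes (decimal units)."""
    return cartridges * tb_per_cartridge / 1000


if __name__ == "__main__":
    print(f"{library_capacity_pb(3000):.1f} PB native")
```

With 3,000 cartridges this gives 4.5PB of raw native capacity, a lower bound for a library described as holding more than 3,000 tapes.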

Size — storage control

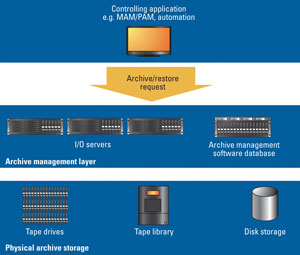

Figure 2. The more archive disk and tape storage devices that need to be driven, the more storage control servers are required to handle the data I/O.

The next consideration is the controlling application. In our private cloud archive, the content management application sits between the production/management application layer and the storage itself, as shown in Figure 2, and requires its own hardware. The amount of hardware the controlling application needs is directly related to the data throughput already calculated: depending on the software used, high-end servers may be able to drive two to five tape drives each, at up to 140MB/s per drive. This is not an exact science, however; concurrent disk and tape transfers, disk cache sizes per server and, of course, the ever-present spectre of bandwidth are all contributory factors that must be considered.
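The server-count relationship can be sketched as a ceiling division, with a second figure showing the peak tape I/O each server must then sustain. This assumes a fixed number of drives per server and the 140MB/s per-drive figure from the text; the 12-drive library is a hypothetical example, and the factors the paragraph warns about (concurrent disk transfers, cache size, bandwidth) are deliberately left out.

```python
# Sketch: rough count of storage control servers for a tape tier.
# Assumptions: a fixed number of drives per server (the text quotes two to
# five, depending on software) and up to 140 MB/s per drive. Concurrent disk
# transfers, cache size and bandwidth are deliberately ignored here.
import math

DRIVE_MAX_MB_S = 140.0  # per-drive figure quoted in the text


def servers_needed(tape_drives: int, drives_per_server: int) -> int:
    """Smallest number of servers that can host every tape drive."""
    if drives_per_server < 1:
        raise ValueError("drives_per_server must be at least 1")
    return math.ceil(tape_drives / drives_per_server)


def per_server_mb_s(drives_per_server: int,
                    drive_mb_s: float = DRIVE_MAX_MB_S) -> float:
    """Peak tape I/O one server must sustain if all its drives stream at once."""
    return drives_per_server * drive_mb_s


if __name__ == "__main__":
    drives = 12  # hypothetical library configuration
    for per_server in (2, 5):
        print(per_server, servers_needed(drives, per_server),
              per_server_mb_s(per_server))
```

At five drives per server, each server must move up to 700MB/s of tape traffic alone before any disk I/O is counted, which is one reason the drive-per-server figure depends so heavily on the software and hardware chosen.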

Content management software compatibility

When choosing archive management software, perhaps the prime consideration is its interoperability with the rest of the systems and hardware. Compatibility with the control application layer immediately above it is, of course, essential, particularly for managing metadata across multiple management systems and transfer media, but other implications may also need to be considered. For example, it may be advantageous for an organization to choose an archive management application that is built to support new overlying or supporting technologies as they appear. Most main archive management vendors support new hardware as a matter of course, but as IT plays an ever-increasing role in the broadcast environment, systems already in place may also need to support newly introduced technologies.

In a real-world example, a facility provides audio-visual services to other broadcast organizations nearby. It offers archive-as-a-service (in effect, a broadcast-centric, semi-public cloud archiving service), and to achieve this it uses archive management software that is compatible with several different types of control system, which initiate archive and restore operations directly from the (remote) client sites. The software also has to allow the facility to plug in its own billing software (in this instance, via a simple XML-based API), which automatically calculates monthly service fees from usage data extracted directly from the archive. Open communication interfaces such as this also allow facilities to build their own line-of-business (LOB) applications, so that they can fully customize their archive and restore operations and create unique workflows.
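The billing plug-in described above boils down to parsing a usage report and applying a tariff. The article does not document the XML format, so the element names, attributes and per-GB rates below are hypothetical; the sketch only shows the shape of such an integration, using Python’s standard `xml.etree.ElementTree` and `Decimal` for money.

```python
# Sketch: monthly fee from a usage report pulled from the archive.
# The XML layout, element names and tariff below are hypothetical; the
# article only says the billing plug-in uses a simple XML-based API.
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from decimal import Decimal

SAMPLE_USAGE = """
<usage client="client-a">
  <archived_gb>500</archived_gb>
  <restored_gb>120</restored_gb>
  <stored_gb>20000</stored_gb>
</usage>
"""


@dataclass(frozen=True)
class Tariff:
    per_gb_archived: Decimal
    per_gb_restored: Decimal
    per_gb_stored: Decimal


def monthly_fee(usage_xml: str, tariff: Tariff) -> Decimal:
    """Parse one client's usage report and return the fee, rounded to cents."""
    root = ET.fromstring(usage_xml.strip())

    def qty(tag: str) -> Decimal:
        # Missing elements count as zero usage.
        return Decimal(root.findtext(tag, default="0"))

    fee = (qty("archived_gb") * tariff.per_gb_archived
           + qty("restored_gb") * tariff.per_gb_restored
           + qty("stored_gb") * tariff.per_gb_stored)
    return fee.quantize(Decimal("0.01"))


if __name__ == "__main__":
    tariff = Tariff(Decimal("0.02"), Decimal("0.05"), Decimal("0.01"))
    print(monthly_fee(SAMPLE_USAGE, tariff))
```

`Decimal` is used instead of `float` so that per-GB rates multiply out to exact currency amounts.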

Archiving-as-a-service, as discussed above, begins to approach the definition of a public cloud archive service, and although our example is still broadcast-centric, taking the archive out of the private cloud means that wider frameworks may have to be considered. Public cloud storage in general is becoming increasingly standards-driven, and some customers may require their cloud storage applications to comply with those standards.

Mainstream storage bodies such as the Storage Networking Industry Association (SNIA) are defining cloud storage-specific standards, such as the Cloud Data Management Interface (CDMI), a Representational State Transfer (REST)-based interface, that seek to define common interfaces and transfer/management protocols for cloud storage applications. Although these standards are data-generic rather than broadcast-centric, as they become the norm in general IT, broadcast customers may also seek solutions that apply the same level of standardization to broadcast data and storage processes.
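To make the idea of a common interface concrete, the sketch below builds (but does not send) a CDMI-style HTTP PUT that creates a small data object with metadata, using the CDMI content type and version header. The endpoint URL, container and object names are hypothetical, and a real archive would typically move large media files with a plain (non-CDMI) content type rather than embedding them in a JSON body.

```python
# Sketch: build a CDMI "create data object" request without sending it.
# Assumptions: the endpoint, container and object names are hypothetical;
# this suits small objects such as metadata sidecars, not large media files.
import json
import urllib.request

CDMI_VERSION = "1.0.2"


def build_cdmi_put(base_url: str, container: str, name: str,
                   metadata: dict, value: str) -> urllib.request.Request:
    """Return a PUT request that would create <container>/<name> via CDMI."""
    body = json.dumps({
        "mimetype": "text/plain",
        "metadata": metadata,  # user metadata travels with the object
        "value": value,        # object content, carried inline as text
    }).encode("utf-8")
    url = f"{base_url.rstrip('/')}/{container}/{name}"
    return urllib.request.Request(
        url, data=body, method="PUT",
        headers={
            "Accept": "application/cdmi-object",
            "Content-Type": "application/cdmi-object",
            "X-CDMI-Specification-Version": CDMI_VERSION,
        },
    )


if __name__ == "__main__":
    req = build_cdmi_put("https://archive.example.com/cdmi/", "clips",
                         "news-0412.txt", {"title": "Evening news"},
                         "sidecar text")
    print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; that step is left out because the endpoint is illustrative.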

In addition, when disaster recovery is provided as a wider archive service, explicit targets such as the recovery time objective (RTO) and recovery point objective (RPO) must also form part of the service-level agreement, and the ability to meet them depends on the capability of the archive hardware and software.
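Whether a given archive can meet such targets can be estimated before they are written into an agreement. The sketch below is a simplified model under hypothetical assumptions: off-site copies are made at a fixed interval (bounding the RPO), restores run at a sustained rate plus a fixed overhead (giving the RTO), and decimal units apply. All names and figures are illustrative.

```python
# Sketch: check whether an archive's DR capability can meet SLA targets.
# Assumptions (hypothetical, not from the article): off-site copies are made
# at a fixed interval, restores run at a sustained rate, and decimal units
# apply (1 TB = 1,000,000 MB).
from dataclasses import dataclass


@dataclass(frozen=True)
class DrCapability:
    replication_interval_h: float  # time between off-site copies
    restore_rate_mb_s: float       # sustained restore throughput


def worst_case_rpo_h(cap: DrCapability) -> float:
    # Simplification: data written just after a copy is at risk until the
    # next one; the time taken to transfer that copy is ignored.
    return cap.replication_interval_h


def estimated_rto_h(cap: DrCapability, restore_tb: float,
                    fixed_overhead_h: float = 0.0) -> float:
    # Restore time = fixed overhead (e.g. failover steps) + data / rate.
    seconds = restore_tb * 1_000_000 / cap.restore_rate_mb_s
    return fixed_overhead_h + seconds / 3600


def meets_sla(cap: DrCapability, restore_tb: float, rpo_target_h: float,
              rto_target_h: float, fixed_overhead_h: float = 0.0) -> bool:
    """True if both the worst-case RPO and the estimated RTO are within target."""
    return (worst_case_rpo_h(cap) <= rpo_target_h and
            estimated_rto_h(cap, restore_tb, fixed_overhead_h) <= rto_target_h)


if __name__ == "__main__":
    cap = DrCapability(replication_interval_h=4, restore_rate_mb_s=280.0)
    print(f"RTO for 10 TB: {estimated_rto_h(cap, 10):.1f} h")
    print(meets_sla(cap, 10, rpo_target_h=4, rto_target_h=12))
```

Restoring 10TB at a sustained 280MB/s takes just under ten hours, so in this model a 12-hour RTO is achievable but an 8-hour one would need faster restore hardware or a smaller restore set.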

—Nik Forman is marketing and branding manager for SGL.