Defining Intelligent High-Performance Platforms

Last time we took a cursory look at high-performance storage systems, platforms that have emerged because the media and other industries have grown to depend on spinning disks as their principal means of short- and near-term digital storage. Spinning disks are replacing tape for nearly everything other than original acquisition or long-term, deep-archive purposes.

Traditionally, NAS and SAN were the two primary digital media storage architectures. Many of those storage systems, whether purchased discretely and integrated into a workstation environment or supplied as part of a total solution inside a videoserver and/or editing system, have sufficient capability to serve most of the needs of traditional broadcast and production services entities. However, that perspective is changing quickly. As stated previously, high-definition, 3D-stereoscopic and higher-resolution imaging are affecting all aspects of the media and entertainment industry. This kind of growth drives the deployment of newer, more sophisticated high-performance storage platforms, the kind necessary to develop and produce content in all its forms.

SAN OR NAS?

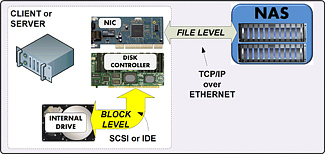

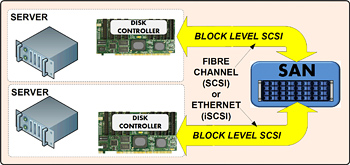

To briefly review the discussion points from last time, users must first determine if a SAN or a NAS solution is sufficient. SANs will utilize high-performance Fibre Channel RAID arrays with managed switches between array components. A NAS solution usually employs less costly SATA drives, and won't require the complications of a SAN architecture. Users may also employ a combination of SAN and NAS, depending upon the degree of deployment, requirements for storage tiering, and the overall mix of new or legacy components in the system.

Under the hood, these new platforms must support multiple file systems operating harmoniously on the same storage platform. New workflows require multiple simultaneous sessions that use the same files and data, and with them comes the expectation of multiple versions of files derived from the same original content.

This raises the probability of file corruption (e.g., lost pointers to some file locations) during exchanges, replication, mirroring, reads or writes. High-performance/high-accessibility storage platforms will utilize "Snap Shot" or "Checkerboarding" to act as a short-term backup. Snap shots are "images" of the most recent file activities, of portions of the entire store, or of file system metadata sets. They are duplicated so that the latest sets of transactions or processes can be recovered should metadata about those files be misplaced or some other unexpected event occur.

The snap shot frequency, i.e., how often and how large a file system image is captured, becomes an important feature to consider when the value of the assets and the deadlines for the delivery grow exponentially.
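To make the idea concrete, here is a minimal Python sketch of a metadata snap shot with rollback. It is an illustration only, not how any particular storage platform implements the feature: the `MetadataSnapshotter` class, its method names and the `max_snapshots` limit are all assumptions made for this example, and the file system metadata is reduced to a simple table of file names and block pointers.

```python
import copy
import time


class MetadataSnapshotter:
    """Toy model (hypothetical): metadata is a dict of file name -> block list."""

    def __init__(self, max_snapshots=3):
        if max_snapshots < 1:
            raise ValueError("max_snapshots must be at least 1")
        self.metadata = {}
        self.snapshots = []  # (timestamp, metadata image), oldest first
        self.max_snapshots = max_snapshots

    def write(self, name, blocks):
        """Record which blocks currently hold the named file."""
        self.metadata[name] = list(blocks)

    def snapshot(self, now=None):
        """Capture an image of the metadata and discard the oldest images."""
        stamp = time.time() if now is None else now
        self.snapshots.append((stamp, copy.deepcopy(self.metadata)))
        del self.snapshots[:-self.max_snapshots]
        return stamp

    def rollback(self):
        """Restore the metadata from the most recent image."""
        if not self.snapshots:
            raise RuntimeError("no snap shot available")
        stamp, image = self.snapshots[-1]
        self.metadata = copy.deepcopy(image)
        return stamp


store = MetadataSnapshotter(max_snapshots=2)
store.write("clip_001.mxf", [10, 11, 12])
store.snapshot(now=100.0)
store.write("clip_001.mxf", [])        # simulate lost block pointers
store.rollback()
print(store.metadata["clip_001.mxf"])  # [10, 11, 12]
```

In this sketch, anything written after the last image is lost on rollback, which is why the snap shot frequency matters: the interval between images bounds how much work can disappear.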

Replication—the duplication and/or scattering of the same file data multiple times across a store—is another protection scheme and bandwidth throughput accelerator employed when several users must perform different activities simultaneously.

Fig. 1: Network attached storage (NAS) access, i.e., the I/O requests, is at the FILE level.

Replication differs from conventional mirroring in that replication manages reads, writes, metadata and versioning based upon parameters set by the system administrator. Mirroring is generally just a complete copy of everything on the drive, so if the first set of data is bad, the second likely will be also.
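The difference can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a description of any vendor's replication engine: the `mirror` function, the `ReplicatedStore` class, the `replica_count` parameter and the use of SHA-256 checksums to detect a damaged copy are all hypothetical choices made for the example.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Fingerprint used in this sketch to detect a damaged copy."""
    return hashlib.sha256(data).hexdigest()


def mirror(primary: dict) -> dict:
    """Plain mirroring: copy everything as-is, damaged or not."""
    return dict(primary)


class ReplicatedStore:
    """Hypothetical replicated store that tracks versions and verifies reads."""

    def __init__(self, replica_count: int = 2):
        if replica_count < 1:
            raise ValueError("replica_count must be at least 1")
        self.replicas = [{} for _ in range(replica_count)]
        self.catalog = {}  # file name -> checksum of each version, newest last

    def write(self, name: str, data: bytes) -> None:
        """Store a new version of the file on every replica."""
        digest = checksum(data)
        self.catalog.setdefault(name, []).append(digest)
        for replica in self.replicas:
            replica[(name, digest)] = data

    def read(self, name: str, version: int = -1) -> bytes:
        """Return the first copy whose contents still match the catalog."""
        digest = self.catalog[name][version]
        for replica in self.replicas:
            data = replica.get((name, digest))
            if data is not None and checksum(data) == digest:
                return data
        raise IOError(f"no intact copy of {name!r}")


primary = {"promo.mov": b"damaged"}
backup = mirror(primary)  # the mirror now holds the same damaged bytes

store = ReplicatedStore(replica_count=2)
store.write("promo.mov", b"v1 essence")
store.write("promo.mov", b"v2 essence")
latest = store.catalog["promo.mov"][-1]
store.replicas[0][("promo.mov", latest)] = b"garbage"  # simulate corruption
print(store.read("promo.mov"))             # b'v2 essence'
print(store.read("promo.mov", version=0))  # b'v1 essence'
```

The mirror has no way to tell that its copy is bad, while the replicated store in this sketch skips the corrupted copy and can still serve an earlier version.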

Lastly, we discussed data migration and tiered storage, whereby short-term dormant material is moved from fast (expensive) storage to slower (less costly) storage via a policy engine or other software application. This is often where a combination of SAN and NAS might be employed.
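A policy engine of this kind can be reduced to a very small rule, sketched below in Python. The `Asset` record, the tier names "fast" and "slow", and the `dormant_after_s` threshold are assumptions made for illustration; a real policy engine would weigh many more factors, such as file size, project status or scheduled air dates.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    tier: str           # "fast" or "slow" (hypothetical tier names)
    last_access: float  # seconds on any consistent clock


def apply_tiering_policy(assets, now, dormant_after_s):
    """Move assets idle for at least dormant_after_s from fast to slow storage.

    Returns the names of the assets that were migrated.
    """
    moved = []
    for asset in assets:
        idle = now - asset.last_access
        if asset.tier == "fast" and idle >= dormant_after_s:
            asset.tier = "slow"
            moved.append(asset.name)
    return moved


library = [
    Asset("news_open.mov", "fast", last_access=9_000.0),
    Asset("promo_2009.mov", "fast", last_access=1_000.0),
]
one_hour = 3_600.0
print(apply_tiering_policy(library, now=10_000.0, dormant_after_s=one_hour))
# ['promo_2009.mov']
```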

ACTIVE OR INACTIVE?

To continue our discussion of high-performance storage from a life cycle perspective, data can be described as being in two general states—active or inactive.

Active data is any data that applications routinely use or access, or that is regularly read from or written to the storage platform. Inactive data is data that hasn't been addressed for some time or isn't expected to be accessed for a long period, and is sometimes referred to as archival data.

Another pair of terms used in describing the data life cycle is persistent and dynamic.

Dynamic data are those bits that are in a state of change. In a post-production environment, the data used in the processes of editing, compositing or audio sweetening is dynamic—changing nearly all the time. This regularly or constantly changing data poses risks to the users (or systems) should any of it get out of sync with the rest of the systems.

When protecting dynamic data, conventional straight "mirroring" of that data becomes of little value if the metadata or block location pointers in the file allocation table are misaligned or corrupted.

The other type, persistent data, is that set of bits that is non-changing. These might be the master files or the acquisition (original material) that is protected from being erased, lost or corrupted. It might also be the completed clip, such as that data on a commercial play-out server.

Again, if the file location pointers are damaged, this non-changing persistent data might as well be erased—so it is important to have a high-performance storage system that can effectively and efficiently manage active, non-active, persistent and dynamic data.

Next there is data that is online, which may include active dynamic or active persistent data, and data that is offline, for example, the copy on the disaster recovery site's storage platform or the data tape at the offsite ("deep") archive.

Offline data would be more routinely managed by asset management software that can place, create proxies, copy for protection or recover and then restore to the online, active state. The online data would be more properly handled by the storage platform management tools associated with the active dynamic or persistent data sets.
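The three pairs of terms (active or inactive, dynamic or persistent, online or offline) can be modeled as a small data structure. The Python sketch below is only one way to express them: the type names, the `classify` helper and the 90-day inactivity threshold are arbitrary assumptions for illustration, not industry definitions.

```python
from dataclasses import dataclass
from enum import Enum


class Activity(Enum):
    ACTIVE = "active"
    INACTIVE = "inactive"


class Mutability(Enum):
    DYNAMIC = "dynamic"        # still changing, e.g., an edit in progress
    PERSISTENT = "persistent"  # non-changing, e.g., a master file


@dataclass(frozen=True)
class DataState:
    activity: Activity
    mutability: Mutability
    online: bool


def classify(idle_days, still_changing, online, inactive_after_days=90):
    """Label data using an assumed idle-time threshold (90 days by default)."""
    activity = (Activity.INACTIVE if idle_days >= inactive_after_days
                else Activity.ACTIVE)
    mutability = Mutability.DYNAMIC if still_changing else Mutability.PERSISTENT
    return DataState(activity, mutability, online)


def managed_by(state):
    """Online data goes to the platform tools, offline data to asset management."""
    if state.online:
        return "storage platform management tools"
    return "asset management software"


edit_session = classify(idle_days=0, still_changing=True, online=True)
deep_archive = classify(idle_days=400, still_changing=False, online=False)
print(managed_by(edit_session))  # storage platform management tools
print(managed_by(deep_archive))  # asset management software
```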

Fig. 2: Storage area network (SAN) access is at the BLOCK level: faster access, more specific, no network overhead, better suited for direct reads or writes to/from the drive.

TAILOR-MADE

The elements that compose the intelligent storage platform consist of the hard drives, the array management structure, storage accelerators and the engines that handle replication or metadata, and the network subsystems that facilitate the data paths between the physical storage components (i.e., hard drives, tape drives).

Intelligent storage platforms isolate the functionality of the actual applications (e.g., ingest or play-out servers, workstations) from the storage platform activities. This concept mitigates bottlenecks such as diminished access or latency by allowing a secondary system to handle the traffic more efficiently.

The intelligence designed into a high-performance storage platform is often tailor-made to suit both the architecture of the stores themselves and the uses expected by the enterprise itself. If the enterprise needs remote mirroring of its data, the storage platform should be capable of handling those functions including automatic node fail-over and fail-back, rollback to the last checkpoint (through snap shot or checkerboarding), or resynchronization should a data link fail.

Intelligent storage platforms employ flexible policy- and priority-based user interfaces that allow the administrator to change designations, scale the storage and adjust data structures or replication settings as their workflows change, without any downtime.

A concluding thought reiterates what was mentioned last time. Making your storage solution extensible for the future takes careful upfront planning. Knowing what might be required of your storage platform, such as tiered storage, maximum volume capacities, file system addressing and allocation, antivirus protection and the like, is just as important as sizing the amount of storage required.

There are companies with simple storage solutions that can fill short-term needs and there are those with deep and complex capabilities that are just as simple to use, but are built for the future. Hopefully these last two installments have opened the doors to the future needs of your all digital, all file-based storage environment.

Karl Paulsen is chief technology officer for AZCAR Technologies, a provider of digital media solutions and systems integration for the moving media industry. Karl is a SMPTE Fellow and an SBE Life Certified Professional Broadcast Engineer. Contact him at karl.paulsen@azcar.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.