Exploring Storage System Efficiency

Media storage efficiency is a growing concern for many organizations, and it is especially important to those facing space, power and operating-cost constraints.

A recent project involving storage system migration to a new site found that while the IT servers, storage systems and network switches could fit into just a few racks, the ability to power and efficiently cool those few racks became a governing factor.

The facility was forced to increase the rack count and modify the physical layout of the new technical center to spread the cooling and power demands out accordingly.

Watts per cubic foot, or "power density," is becoming a very real concern for CapEx as well as OpEx, both initially during build-out and throughout the life of the facility.

LOOKING FOR SOLUTIONS

With storage requirements pushing the envelope, operators are looking outside the data center and to the cloud for their storage solutions. Depending solely on the cloud to meet all those requirements has its own set of issues, especially for media-related data with its enormous file sizes and substantial accessibility requirements.

Repeatedly transferring active data from cloud storage to temporary local storage for editing, and then putting it back into the cloud is not a practical solution unless you have large amounts of fiber-optic bandwidth and sufficient time in your production workflow for those transfer activities on a recurring basis.
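To put rough numbers on that round trip, here is a minimal Python sketch. The function names, the 80 percent link-efficiency default and the example figures are illustrative assumptions, not measurements from any particular facility or cloud provider.

```python
def transfer_hours(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to move size_gb (decimal gigabytes) one way.

    link_gbps is the nominal link speed in gigabits per second.
    efficiency is an assumed fraction of that speed actually achieved.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency in (0, 1]")
    size_gigabits = size_gb * 8                      # bytes -> bits
    seconds = size_gigabits / (link_gbps * efficiency)
    return seconds / 3600


def round_trip_hours(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Download for editing plus upload back to the cloud."""
    return 2 * transfer_hours(size_gb, link_gbps, efficiency)


if __name__ == "__main__":
    # Hypothetical project: 2 TB of active media over a 1 Gbit/s link.
    print(f"{round_trip_hours(2000, 1.0):.1f} hours round trip")
```

Even under these optimistic assumptions the transfer time is measured in hours per project, which has to be absorbed somewhere in the production schedule.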

Storing accessibility-sensitive media remotely, whether at a disaster recovery site or some other form of archive, will inhibit workflow and reduce productivity.

This is, in part, why organizations find they must continue to expand their local data center storage capacities as the volume of files continues to grow. When production and editorial staff insist on using higher-resolution, mezzanine-level compression (e.g., DNxHD or ProRes 422 HQ), file sizes will continue to increase well beyond those produced by the 35-to-50 Mbps bit rates many use for HD production.
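The effect of bit rate on storage is simple arithmetic, sketched below in Python. The 35 and 50 Mbps figures come from the text above; the 220 Mbps mezzanine rate is an assumed, illustrative value, and the calculation ignores audio, container overhead and variable-bit-rate behavior.

```python
def gigabytes_per_hour(video_mbps: float) -> float:
    """Storage consumed by one hour of video at a constant bit rate.

    video_mbps is in megabits per second; the result is in decimal gigabytes.
    """
    bits = video_mbps * 1_000_000 * 3600   # bits in one hour
    return bits / 8 / 1_000_000_000        # bits -> bytes -> GB


if __name__ == "__main__":
    # 220 Mbps is an assumed mezzanine rate used only for comparison.
    for rate in (35, 50, 220):
        print(f"{rate:>4} Mbps -> {gigabytes_per_hour(rate):6.2f} GB per hour")
```

Moving from a 50 Mbps production format to a mezzanine format several times that rate multiplies the storage per hour of content by the same factor.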

File-based workflows depend upon accessible, high-availability, disk-based storage. Such storage can be augmented by tape libraries, optical storage and even cloud storage. Still, most production needs storage that is "electrically" close to the action, which usually means physically close.

Storage solutions routinely need to access multi-hundred terabyte file caches as active storage. This drives the need for storage management efficiencies, not just for the content, but also for the methods of storage and its protection.

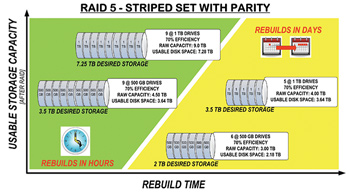

Fig. 1: Relative rebuild times, ranging from hours to days, needed to recover a single failed drive in a RAID 5 set, compared against the drive size and number of drives in the array.

The disk-drive data density explosion is not new; it is an evolution not without its own set of growing pains. Storing huge amounts of data on a single device becomes a double-edged sword.

With a terabyte now considered almost "minimal," economics dictate that drives of 1 TB and above are usually SATA, a less-expensive type that often sits at the lower end of the food chain in terms of reliability.

Compared with Fibre Channel and enterprise-class SAS hard drives, SATA drives exhibit higher error rates and are prone to shorter life spans. SATA drives are economical for PCs and smaller RAID sets, but that's where the enjoyment of these now generically large drives ends.

REBUILDING WITH RAID

RAID continues to serve the storage industry well. Built on the concept of assembling a group of drives so as to protect against data loss, RAID also yields improved performance. RAID has retained its position for storage systems, but as single drive capacities increase, RAID now comes with a price.

Protecting against a drive failure is one thing; today, however, the choice of RAID structure is just as important as the original benefits RAID provided.

When a drive fails in a RAID group, the RAID architecture's algorithms use the previously created parity information to reconstruct the lost data and write it to the replacement drive.
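The parity idea can be illustrated with a minimal Python sketch of single-parity (RAID 5 style) reconstruction using byte-wise XOR. This is a toy model under stated assumptions: the block contents and the helper name `xor_blocks` are hypothetical, and real controllers add striping, rotating parity placement and, for RAID 6, a second independent parity calculation.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Return the byte-wise XOR of equal-length blocks."""
    length = len(blocks[0])
    if any(len(block) != length for block in blocks):
        raise ValueError("all blocks must be the same length")
    # zip(*blocks) walks the blocks column by column, one byte from each.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data "drives" holding one block each (made-up contents).
data = [b"MEDIA001", b"MEDIA002", b"MEDIA003"]

# Parity is computed when the data is written.
parity = xor_blocks(data)

# Simulate losing the second drive: XOR the survivors with the parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[1])
```

Because every surviving block must be read to regenerate the missing one, the work grows with both the drive capacity and the number of drives in the set.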

When earlier RAID sets employed smaller per-disk capacities, recovery times were measured in terms of hours. With today's high-capacity drives, RAID rebuild times are now being counted in terms of days.
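A back-of-the-envelope estimate shows why, sketched here in Python. It assumes the rebuild is limited by a sustained rate at which the replacement drive can be written; the function name, capacities and rates are illustrative assumptions rather than vendor figures, and real rebuild times also depend on array width, controller design and foreground load.

```python
def rebuild_hours(drive_tb: float, rebuild_mb_per_s: float) -> float:
    """Estimate hours to rewrite one whole drive at a sustained rate.

    drive_tb is the drive capacity in decimal terabytes.
    rebuild_mb_per_s is the assumed effective rebuild rate in MB/s.
    """
    if rebuild_mb_per_s <= 0:
        raise ValueError("rebuild rate must be positive")
    total_bytes = drive_tb * 1e12
    seconds = total_bytes / (rebuild_mb_per_s * 1e6)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical cases: an idle array versus one throttled by production I/O.
    for tb, rate in ((0.25, 50), (1, 50), (4, 50), (4, 20)):
        hours = rebuild_hours(tb, rate)
        print(f"{tb:>5} TB at {rate} MB/s -> {hours:5.1f} h ({hours / 24:.1f} days)")
```

In this simple model rebuild time scales linearly with capacity, so a drive 16 times larger takes 16 times longer at the same rate, and any throttling to protect application performance stretches it further.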

RAID rebuild times create data risk because the system is no longer fully protected during this period. Dual parity (i.e., RAID 6) reduces the risk but does not entirely eliminate it. Should another drive fail during a single-parity rebuild, the impact affects all the data on the store (note that a RAID 6 set tolerates two concurrent drive failures, so a third failure is required for a total loss of data).
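The exposure during a rebuild can be approximated with a simple probability model, sketched below in Python. It assumes independent drive failures at a constant rate derived from an annualized failure rate (AFR); the AFR value and array size are hypothetical, and the model ignores unrecoverable read errors and correlated failures (same batch, same heat, same vibration), both of which would raise the real-world risk.

```python
import math

HOURS_PER_YEAR = 8760.0


def p_additional_failure(surviving_drives: int, rebuild_hours: float, afr: float) -> float:
    """Probability that at least one surviving drive fails during the rebuild.

    afr is the assumed annualized failure rate per drive, as a fraction
    (for example 0.03 for 3 percent).
    """
    if not 0 <= afr < 1:
        raise ValueError("afr must be in [0, 1)")
    # Convert the annual failure probability to a constant hourly hazard rate.
    hourly_rate = -math.log(1.0 - afr) / HOURS_PER_YEAR
    return 1.0 - math.exp(-surviving_drives * hourly_rate * rebuild_hours)


if __name__ == "__main__":
    # Hypothetical 12-drive set (11 survivors), 3 percent AFR.
    for hours in (4, 24, 72):
        risk = p_additional_failure(11, hours, 0.03)
        print(f"{hours:>3} h rebuild -> {risk:.3%} chance of another failure")
```

The absolute numbers depend entirely on the assumed inputs, but the trend is the point: the window of exposure grows with every additional hour the rebuild takes and with every additional drive in the set.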

So the problem worsens not just in terms of time and potential data-loss risk; in most cases, degraded storage performance during RAID rebuild periods can also significantly impact applications and users.

With today's larger disk-drive capacities, manufacturers have ways to mitigate these RAID issues through erasure-coding, wide-striping and more. Adding a solid-state cache (SSD) helps toward faster rebuild times along with other degrees of protection and performance improvements.

Storage systems configured specifically for media-centric, file-based workflows provide extra protective measures, ensuring performance sustainability by managing drives as subsets through replication and by altering the size and number of drives per RAID set.

These principles help to alleviate secondary performance reduction impacts. Nonetheless, users should be cautious of storage that is not specifically tuned for media applications or for sustained high performance during rebuild or failover periods.

Karl Paulsen, CPBE, is Diversified System's senior technologist, a SMPTE Fellow, IEEE Member and an SBE Life Member. Contact him at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.