In this second installment on aspects of file-based workflow, the topic turns to the human factors affecting implementation and to how software systems can be implemented in hardware-based broadcast solutions. (Check out the first part of this article series, "File-based workflow: Bits and bytes made into programs," in the April issue of Broadcast Engineering.) Make no mistake: the differences between the two approaches are substantial, and the learning curve is steep.

There are aspects of file-based workflow that make it quite different from analog or tape-based workflows at every level. The business itself is also directly affected by the decision to move to the new technology. Business systems that previously could often get by with paper-based processes can no longer avoid being tightly integrated with the technical systems.

PBS solution

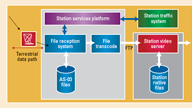

A case in point is the implementation of "NRT," non-real-time transfers of content from Alexandria, VA, to affiliated stations, now under way at PBS. (See Figure 1.) Files are "pitched" to the stations over an IT-based satellite file transfer system. Once received, the files are cached on IT servers that are part of PBS’ Station Services Platform (SSP) before they are moved to the station’s video servers. The more interesting part of the technology is the movement of metadata. The metadata, including accurate start of message (SOM), duration and program details such as series and episode number, are distributed with the content, then parsed and passed from the SSP directly to the local traffic system. Upon receiving the metadata, traffic can mark the content for movement to the air server and send a "dub list" directly to the SSP, which executes an FTP transfer of the content to the station’s video server. Because the metadata flows with the content, none of this requires time in master control (MCR) to find and mark the content.
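
The metadata-driven handoff from cache to traffic to air server can be sketched as a few small functions. This is a minimal illustration under stated assumptions, not the SSP's actual interface: the field names, file names, the `airserver.example` host and all function names are hypothetical, and the FTP step is represented only by the list of transfers it would perform.

```python
from dataclasses import dataclass


@dataclass
class ProgramMetadata:
    # Hypothetical fields mirroring what the article says travels with each file.
    file_name: str
    som: str        # start of message (SOM) timecode
    duration: str   # program duration timecode
    series: str
    episode: int


def parse_into_traffic(received: list[ProgramMetadata]) -> dict[str, ProgramMetadata]:
    """Index the metadata delivered with the files so traffic can look it up."""
    return {m.file_name: m for m in received}


def build_dub_list(traffic: dict[str, ProgramMetadata], scheduled: set[str]) -> list[str]:
    """Traffic marks only the received files it has scheduled for air."""
    return sorted(name for name in traffic if name in scheduled)


def execute_dub_list(dub_list: list[str], air_server: str) -> list[tuple[str, str]]:
    """Stand-in for the SSP's FTP step: list (file, destination) transfers."""
    return [(name, f"ftp://{air_server}/{name}") for name in dub_list]


# Usage: two files arrive in the cache; traffic schedules only one of them.
cache = [
    ProgramMetadata("showA_ep101.mxf", "00:00:00;00", "00:56:46;00", "Show A", 101),
    ProgramMetadata("showB_ep205.mxf", "00:00:00;00", "00:26:46;00", "Show B", 205),
]
traffic = parse_into_traffic(cache)
dubs = build_dub_list(traffic, scheduled={"showA_ep101.mxf"})
print(execute_dub_list(dubs, "airserver.example"))
# [('showA_ep101.mxf', 'ftp://airserver.example/showA_ep101.mxf')]
```

The point of the sketch is that no step needs an operator to look at the content: everything traffic needs is already in the metadata that arrived with the file.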

It is important to note that the SSP contains a critical piece of technology that allows seamless interoperation between PBS and all of the stations: a transcoding engine that takes AMWA AS-03-compliant files and makes them compatible with each station’s local video server. No action is required by the station, and no special versions are needed for each station. This tight integration of several processes (file transmission, local caching, transcoding and FTP) constitutes an automated workflow that materially affects PBS’ business operation. It saves labor, and by shifting distribution to non-real-time file transfers, it will eventually enable PBS to cut the satellite bandwidth needed to move real-time content.
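
One way to picture "no special versions are needed for each station" is a per-station lookup applied to a single AS-03 master. The station IDs, wrappers, codecs and bit rates below are hypothetical placeholders; the article does not describe how the SSP transcoder is actually configured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetProfile:
    # Hypothetical description of what one station's video server ingests.
    wrapper: str
    codec: str
    bitrate_mbps: int


# Hypothetical per-station settings held locally; values are placeholders.
STATION_PROFILES = {
    "station_a": TargetProfile("MXF OP1a", "MPEG-2 long GOP", 15),
    "station_b": TargetProfile("GXF", "MPEG-2 I-frame", 50),
}


def plan_transcode(master: str, station: str) -> dict:
    """Build the job that turns the one AS-03 master into a file
    this station's server can play."""
    profile = STATION_PROFILES[station]
    return {
        "source": master,  # the same master is distributed to every station
        "wrapper": profile.wrapper,
        "codec": profile.codec,
        "bitrate_mbps": profile.bitrate_mbps,
    }


jobs = [plan_transcode("showA_ep101.mxf", s) for s in STATION_PROFILES]
for job in jobs:
    print(job["wrapper"], job["codec"], job["bitrate_mbps"])
```

Keeping the profile at the receiving end is what lets the distributor send one version of every file.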

A consortium including Warner Brothers, CBS and Ascent Media is doing something similar for long-form commercial content with a system it calls "Pitch Blue." Though the details differ, the operation is largely the same. Content is sent as real-time MPEG streams to a local cache server. No record list is needed; content destined for each station is simply delivered. This resembles various commercial delivery services, except that the end-to-end workflow was taken into account and no ingest operation is required.

Pitch Blue uses transport stream recorders, whereas PBS uses true file transfer hardware. Both have self-healing capabilities should packets be lost in transmission, and both use terrestrial data paths to request lost packets and to let the distant operators check the status of content and the health of the transmission system. In both cases, forward error correction (FEC) allows some packet errors to be corrected without retransmission requests. Both systems are ultimately backed up by the ability to send the content in real time, either live or for local recording by existing methods.
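
The division of labor between FEC and retransmission requests can be illustrated with the simplest FEC scheme there is: one XOR parity packet per group of data packets. One loss per group is repaired at the receiver; anything more becomes a request over the terrestrial return path. Neither system's actual FEC code is specified in the article, so XOR parity, the four-packet group and the function names here are assumptions chosen for clarity.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(group: list[bytes]) -> bytes:
    """Sender side: parity packet is the XOR of equal-length data packets."""
    return reduce(xor_bytes, group)


def receive_group(received: dict[int, bytes], group_size: int,
                  parity: bytes) -> tuple[Optional[list[bytes]], list[int]]:
    """Receiver side: repair what FEC can, return indices to re-request.

    `received` maps packet index -> payload for the packets that arrived.
    """
    missing = [i for i in range(group_size) if i not in received]
    if len(missing) > 1:
        # Beyond what one parity packet can fix: request over the return path.
        return None, missing
    repaired = dict(received)
    if missing:
        # XOR of the parity with every surviving packet rebuilds the lost one.
        repaired[missing[0]] = reduce(xor_bytes, received.values(), parity)
    return [repaired[i] for i in range(group_size)], []


packets = [b"AAAA", b"BBBB", b"CCCC", b"DDDD"]
parity = make_parity(packets)

# One packet lost: corrected locally, no retransmission request.
data, nack = receive_group({0: packets[0], 1: packets[1], 3: packets[3]}, 4, parity)
print(data == packets, nack)   # True []

# Two packets lost: FEC cannot help, so packets 1 and 2 are requested again.
data, nack = receive_group({0: packets[0], 3: packets[3]}, 4, parity)
print(data, nack)              # None [1, 2]
```

Production systems use much stronger codes that recover several losses per block, but the principle is the same: FEC absorbs routine errors, and the return path handles the rest.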

This kind of holistic integration of the file-based approach with existing business operations is important. As the number of transmitted streams increases, it will become harder to run efficient operations using old methods. Adoption of file-based methods is inevitable, in part because the hardware used for tape-based approaches is slowly beginning to disappear. At NAB this year, one recorder on display used LTO-4 tapes and an internal MPEG-2 encoder to record files directly to tape, and those tapes may be directly usable in some archive implementations.

One thing should be clear: File-based workflow is quite distinct from tape-based approaches because it uses an IT infrastructure. Designing, operating and maintaining the system therefore becomes an IT issue, subject to the constraints real-time video places on network topology, bandwidth and security. For instance, a single uncompressed SMPTE 292 (1.485Gb/s) signal requires at least 10GigE. Television files, by contrast, seldom need more than 440Mb/s, so common GigE hardware suffices for faster-than-real-time file transfers. Because broadcast files are considerably skinnier than uncompressed signals, the headroom is more than adequate to move files several times faster than real time or to move multiple files over one link at the same time.
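
The arithmetic behind these claims is easy to check: divide the link capacity by the file's bit rate to get the multiple of real time. The sketch uses nominal line rates and ignores protocol overhead unless an `efficiency` factor (an assumption) is supplied; the 50Mb/s file is an illustrative rate, not a figure from the article.

```python
def realtime_multiple(link_gbps: float, file_mbps: float,
                      efficiency: float = 1.0) -> float:
    """How many times faster than real time a file moves over one link.

    efficiency < 1.0 can stand in for protocol overhead (assumed, not measured).
    """
    return (link_gbps * 1000 * efficiency) / file_mbps


# A 440Mb/s file on GigE: roughly 2.3x real time at the nominal line rate.
print(round(realtime_multiple(1, 440), 2))   # 2.27
# A 50Mb/s file on GigE: 20x real time, or several such files at once.
print(realtime_multiple(1, 50))              # 20.0
# Uncompressed SMPTE 292 (1.485Gb/s) does not even fit in real time on GigE.
print(realtime_multiple(1, 1485) < 1)        # True
```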

Securing content is critical. Systems connected to outside networks, especially ones that are not tightly locked down, are dangerous at best and should be avoided. Explain to IT designers that all aspects of topology and security need to be vetted before a complex file-based workflow design begins; this avoids later arguments over common IT security tactics that don’t work well with real-time video services.

In an ideal world, the storage system would be infinite in size and speed and never require upgrading. In reality, economics require tiered storage.

Video server storage systems are expensive because they must support many simultaneous I/O ports at high bandwidth. A better approach is to keep on the server only the content that needs that performance, and to use nearline storage (spinning disks of a less expensive, lower-performance type) and a deeper archive on removable media such as LTO tapes for the rest. While this approach lowers costs, it also introduces storage management issues and complexity. The result is a need for a system that manages the tiered storage, including content held offline on shelves or in remote locations. An archive manager product therefore becomes a critical element of a file-based workflow implementation.
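
The core decision an archive manager makes, which tier a piece of content belongs on, can be sketched as a simple policy keyed to the content's next air date. The thresholds (on the server within two days of air, nearline within 30, otherwise LTO) and all names are hypothetical; real archive managers expose their own product-specific rules.

```python
from datetime import date
from enum import Enum
from typing import Optional


class Tier(Enum):
    SERVER = "video server"     # fast, many simultaneous I/O ports, costly
    NEARLINE = "nearline disk"  # cheaper, lower-performance spinning disk
    ARCHIVE = "LTO archive"     # removable media, on shelves or off-site


def choose_tier(next_air: Optional[date], today: date,
                server_days: int = 2, nearline_days: int = 30) -> Tier:
    """Place content by how soon it is next scheduled to air.

    The two thresholds are hypothetical defaults, not an industry rule.
    """
    if next_air is None or next_air < today:
        return Tier.ARCHIVE  # nothing scheduled ahead
    days_out = (next_air - today).days
    if days_out <= server_days:
        return Tier.SERVER
    if days_out <= nearline_days:
        return Tier.NEARLINE
    return Tier.ARCHIVE


today = date(2024, 1, 1)
for air in (date(2024, 1, 2), date(2024, 1, 20), date(2024, 6, 1), None):
    print(air, choose_tier(air, today).value)
```

Running such a policy daily and moving files whose tier has changed is, in miniature, the storage management burden the paragraph describes.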

An important part of file management is managing the metadata. The system must keep track of the content itself, the metadata describing it and its heritage, when and how it has been used, and the expiration dates of its rights. Modifying content in one database often requires changes in another, related database. Keeping them all in sync requires good planning at implementation and a locked-down approach to operational details.
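
To make the bookkeeping concrete, the sketch below keeps assets, rights expiration and usage history in one SQLite database, and logs each airing inside a single transaction so a use cannot be recorded against lapsed rights. The schema and function names are hypothetical. In a real facility these records are usually spread across automation, traffic and asset-management systems, which is exactly why synchronization needs planning.

```python
import sqlite3


def open_catalog() -> sqlite3.Connection:
    """Hypothetical schema: one table for assets, one for their airings."""
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE asset (id TEXT PRIMARY KEY, title TEXT, rights_expire TEXT);
        CREATE TABLE airing (asset_id TEXT REFERENCES asset(id), aired_on TEXT);
    """)
    return db


def record_airing(db: sqlite3.Connection, asset_id: str, aired_on: str) -> None:
    """Log a use only while rights are valid, as one all-or-nothing transaction."""
    with db:  # commits if the block succeeds, rolls back if it raises
        row = db.execute("SELECT rights_expire FROM asset WHERE id = ?",
                         (asset_id,)).fetchone()
        if row is None:
            raise KeyError(asset_id)
        if aired_on > row[0]:  # ISO 8601 date strings compare chronologically
            raise ValueError(f"rights for {asset_id} expired {row[0]}")
        db.execute("INSERT INTO airing VALUES (?, ?)", (asset_id, aired_on))


def expired_assets(db: sqlite3.Connection, today: str) -> list[str]:
    """Assets whose rights have lapsed and should be pulled from playout."""
    rows = db.execute("SELECT id FROM asset WHERE rights_expire < ? ORDER BY id",
                      (today,))
    return [r[0] for r in rows]


db = open_catalog()
with db:
    db.executemany("INSERT INTO asset VALUES (?, ?, ?)", [
        ("A1", "Program one", "2024-06-30"),
        ("A2", "Program two", "2023-12-31"),
    ])
record_airing(db, "A1", "2024-01-15")
print(expired_assets(db, "2024-01-15"))  # ['A2']
```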

Though these systems are complex, they have become more affordable. The metadata also deserves the same protection as the content itself. The Advanced Media Workflow Association (AMWA) is working to add standardized data protection to files, covering the essence, the metadata and the entire file wrapper. (See Figure 2.)
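
The article does not say how AMWA's protection will work, so the following is only a generic illustration of protecting the three parts separately: a SHA-256 digest for the essence, the metadata and the whole wrapper, recorded at creation and rechecked after each transfer, shows which part changed. Real MXF files interleave essence and metadata in KLV structures, so the clean byte-level split and the `HDR` placeholder used here are simplifying assumptions.

```python
import hashlib


def digests(essence: bytes, metadata: bytes, wrapper: bytes) -> dict[str, str]:
    """SHA-256 of each protected part (the three-way split is an assumption)."""
    return {
        "essence": hashlib.sha256(essence).hexdigest(),
        "metadata": hashlib.sha256(metadata).hexdigest(),
        "wrapper": hashlib.sha256(wrapper).hexdigest(),
    }


def changed_parts(recorded: dict[str, str], current: dict[str, str]) -> list[str]:
    """Names of the parts whose digest no longer matches the recorded one."""
    return [part for part in recorded if recorded[part] != current.get(part)]


essence, meta = b"...video...", b"<title>Program one</title>"
before = digests(essence, meta, b"HDR" + essence + meta)  # "HDR": placeholder header

# Someone edits only the metadata in transit.
meta2 = b"<title>Program 1</title>"
after = digests(essence, meta2, b"HDR" + essence + meta2)
print(changed_parts(before, after))  # ['metadata', 'wrapper']
```

Checking the parts separately distinguishes a metadata-only change from corruption of the essence itself.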

Let’s assume you are tasked with investigating and implementing a file-based workflow. Common sense should prevail, but first you should admit that implementing software systems is not like hanging gear in a rack and running a bunch of coax. It requires meticulous planning of the systems and a clear understanding of what needs to be done. Defining a new system as a replacement for an old one may lead to the conclusion that you must find a file-based equivalent for each step in the tape-based workflow. Instead, the first task should be to understand the steps in the existing workflow and what drives them. In the earlier PBS example, the old workflow was to issue a record log, record a show from a satellite receiver, ingest the tape into the server (or at least mark it for playback by automation) and report any errors back to traffic on paper. In a file-based workflow, the equivalent may happen entirely in traffic.

The people factor

It is likely that any new implementation plans will include changes in how people work. Make no mistake: managing people’s expectations is a critical part of any system plan. People resist change. Any significant new process, especially one not easily understood, is hard to accomplish without conflict. It is critical to involve everyone who has a stake in the outcome early, when defining in complete detail what the system should do.

Equally important is the need to involve potential partners supplying software to the project. Set up a clear and direct line of communication with the people who will actually be doing the implementation. Assemble them all in a room and review the plans in detail. Articulate not how you want it wired, but rather how you need it to work. Use clear language to discover all of the interface points between software systems. Get everyone to understand what communications pass across every interface, especially security and topology issues. Each problem needs to be assigned to someone to solve. The entire team, including vendors, needs to communicate regularly and adhere to a schedule for completion.

One project I have been involved in recently included moving content from an old server system to a new one. The complications seemed easy to overcome, but issues such as updating the automation database on the new system and the interfaces to traffic and archive management (on both the old and new systems) had to be worked out in detail. Most importantly, the essence and metadata needed to move seamlessly from one system to the other. Every step turned out to be complicated, with multiple vendors interfacing at several critical points to ensure the content moved efficiently and was transcoded into MXF. We were fortunate to have an SQL database expert on staff during the project, and we had the luxury of a test system on which we could check the transcoded essence as we tried different settings in the transcode process.

SOA

IT has also given us a tool that is particularly well suited to developing workflows. Service-oriented architecture (SOA) is not itself a workflow; rather, it allows individual processes to be "plugged in" using Web services interfaces. Tasks and metadata are passed over standardized protocols from one process to the next. An SOA management plane lets operators monitor processes and define the workflow across multiple applications. For instance, content may need to be moved from an archive, analyzed for file structure, moved to the appropriate transcoder drop folder, transcoded to iPhone format and delivered to a Web server for consumer access. One might manage all of these steps manually, or define the workflow in SOA and let the content move from one application to the next without intervention, with each step reporting any errors back to the SOA system and updating metadata in the relevant databases along the way.
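
The archive-to-iPhone example can be sketched as a chain of services driven by a workflow definition, with every step reporting its status and enriching the job's metadata as it goes. In a real SOA deployment each step would be a Web service call coordinated by an orchestration engine; here each service is a plain Python function, and every step name, path and URL is hypothetical.

```python
from typing import Callable, Optional

Job = dict  # the task plus its metadata, handed from service to service


def restore_from_archive(job: Job) -> Job:
    job["path"] = f"/nearline/{job['asset_id']}.mxf"
    return job


def analyze_structure(job: Job) -> Job:
    if not job["path"].endswith(".mxf"):
        raise ValueError("unsupported wrapper")
    job["wrapper"] = "MXF"
    return job


def move_to_drop_folder(job: Job) -> Job:
    job["path"] = "/transcode/iphone_in/" + job["path"].rsplit("/", 1)[-1]
    return job


def transcode_for_iphone(job: Job) -> Job:
    job["rendition"] = job["path"].replace(".mxf", "_iphone.mp4")
    return job


def publish_to_web(job: Job) -> Job:
    job["url"] = "https://media.example/" + job["rendition"].rsplit("/", 1)[-1]
    return job


def run_workflow(steps: list[Callable[[Job], Job]], job: Job,
                 log: list[str]) -> Optional[Job]:
    """Pass the job through each service in order, reporting every step."""
    for step in steps:
        try:
            job = step(job)
            log.append(f"{step.__name__}: ok")
        except Exception as err:  # report the failure instead of continuing
            log.append(f"{step.__name__}: failed ({err})")
            return None
    return job


log: list[str] = []
steps = [restore_from_archive, analyze_structure, move_to_drop_folder,
         transcode_for_iphone, publish_to_web]
result = run_workflow(steps, {"asset_id": "A1"}, log)
print(result["url"])  # https://media.example/A1_iphone.mp4
print(log[-1])        # publish_to_web: ok
```

Because the workflow is just an ordered list of services, adding another delivery format means adding a step, not rewiring the services themselves.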

The AMWA and the EBU recently issued a Request for Technology to standardize the essence and metadata interfaces between components in a workflow system. The project, "Joint EBU – AMWA Task Force on Frameworks for Interoperable Media Services (FIMS)," seeks not to redefine basic SOA technology but to define how messages are passed, so that vendors can use common APIs and the implementation of SOA in many facilities is simplified.

New channels

One of the strongest drivers for file-based workflow is the explosion in content delivery methods and formats. If a station has to deliver to multiple destinations, the job is much more complicated when content is not moved as files. Transcoding content to many output formats can be done in one holistic workflow rather than as separate serial processes. Consider for a moment the problem of deleting media along with all of its metadata, or of keeping proxy files up to date when new versions are created. Metadata must link to all of the relevant content without error, and reconstructing the correct relationships after the fact can be difficult. Complex file-based workflow systems therefore require careful planning and implementation to ensure the integrity of the whole system is not compromised.
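
The deletion and proxy problems both come down to keeping the links between a master, its renditions and its metadata consistent. The minimal in-memory catalog below assumes a hypothetical `Catalog` class; it shows one master fanned out to several delivery formats, a delete that removes every dependent record with it, and a check for renditions left pointing at nothing.

```python
from dataclasses import dataclass, field


@dataclass
class Catalog:
    masters: dict[str, dict] = field(default_factory=dict)    # id -> metadata
    renditions: dict[str, str] = field(default_factory=dict)  # rendition -> master

    def add_master(self, master_id: str, metadata: dict) -> None:
        self.masters[master_id] = metadata

    def add_renditions(self, master_id: str, formats: list[str]) -> list[str]:
        """Fan one master out to several delivery formats in one pass."""
        if master_id not in self.masters:
            raise KeyError(master_id)
        ids = [f"{master_id}:{fmt}" for fmt in formats]
        for rid in ids:
            self.renditions[rid] = master_id
        return ids

    def delete_master(self, master_id: str) -> None:
        """Remove the master, its metadata and every rendition derived from it."""
        self.masters.pop(master_id)
        self.renditions = {r: m for r, m in self.renditions.items()
                           if m != master_id}

    def dangling(self) -> list[str]:
        """Renditions whose master no longer exists (should always be empty)."""
        return [r for r, m in self.renditions.items() if m not in self.masters]


cat = Catalog()
cat.add_master("A1", {"title": "Program one"})
print(cat.add_renditions("A1", ["proxy", "web", "iphone"]))
# ['A1:proxy', 'A1:web', 'A1:iphone']
cat.delete_master("A1")
print(cat.renditions, cat.dangling())  # {} []
```

Deleting a master by any route other than `delete_master` is precisely what leaves the orphaned links that are hard to reconstruct later; `dangling()` is the audit that catches them.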

Broadcasters recognize the need to move to file-based workflows, which represent an effective and cost-efficient way of doing business. Making the move requires good planning, flawless execution and great attention to detail in operation.

John Luff is a broadcast technology consultant.