How Access Time Affects System Performance

System performance depends on the components, software and applications that make up a system. When those components relate properly to one another, a tightly integrated solution becomes more achievable. As with MTBF (mean time between failures), system performance is only as good as its weakest link: if one component performs poorly, the overall system is dragged down to that lowest common denominator.
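The weakest-link idea can be sketched for a serial media path. Because every byte must pass through every stage, sustained end-to-end throughput cannot exceed that of the slowest stage. The stage names and MB/s figures below are hypothetical and were chosen only for illustration.

```python
from typing import Dict, Tuple


def chain_throughput(stages: Dict[str, float]) -> Tuple[str, float]:
    """Return (slowest_stage, rate) for a serial chain of components.

    Every unit of data passes through every stage in turn, so the sustained
    end-to-end rate is capped by the slowest stage.
    """
    if not stages:
        raise ValueError("at least one stage is required")
    slowest = min(stages, key=stages.get)
    return slowest, stages[slowest]


if __name__ == "__main__":
    # Hypothetical sustained rates in MB/s; not measurements of any real system.
    media_path = {
        "disk array": 400.0,
        "storage network": 1000.0,
        "server bus": 4000.0,
        "decoder": 250.0,
    }
    stage, rate = chain_throughput(media_path)
    print(f"bottleneck: {stage} at {rate} MB/s")
```

In this sketch, doubling the disk array's rate would leave end-to-end throughput unchanged, because the decoder remains the limit.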

When video servers were introduced, one of the performance questions became "Can the server play back-to-back spots of short duration?" For the few forms of video compression available at the time, two-to-three-second segments could be handled because each server channel actually processed the MPEG streams separately, toggling sequentially between one and the other. The clean "switch" from one clip to the next was handled at the video level, not at the file level. Splicing did not yet exist.

Video servers used devices called charge pumps that preloaded the first few frames of MPEG video into a buffer, priming them for decode with a minimal amount of latency. The next clip in the segment would be primed into the other charge pump. The two video clips were then synchronized so that a clean video switch would occur on playout.
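The alternating preload described above resembles a ping-pong (double) buffer. What follows is a minimal simulation. It assumes two prime buffers standing in for the charge pumps and a hypothetical `PRIME_FRAMES` count of preloaded frames. It models only the ordering of events, not real MPEG decoding or timing.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import List, Optional

PRIME_FRAMES = 3  # hypothetical number of frames preloaded per clip


@dataclass
class Clip:
    name: str
    frames: List[str]


@dataclass
class PrimeBuffer:
    """Stand-in for one charge pump: holds the opening frames of one clip."""
    clip: Optional[Clip] = None
    primed: List[str] = field(default_factory=list)

    def prime(self, clip: Clip) -> None:
        # Preload only the first few frames so decode can start with minimal latency.
        self.clip = clip
        self.primed = clip.frames[:PRIME_FRAMES]

    def release(self) -> None:
        self.clip = None
        self.primed = []


def play_back_to_back(playlist: List[Clip]) -> List[str]:
    """Return the frame sequence produced by alternating between two buffers."""
    pending = deque(playlist)
    buffers = [PrimeBuffer(), PrimeBuffer()]
    active = 0
    output: List[str] = []
    if pending:
        buffers[active].prime(pending.popleft())
    while buffers[active].clip is not None:
        current, standby = buffers[active], buffers[1 - active]
        # While the current clip plays out, prime the standby buffer with the next clip.
        if pending:
            standby.prime(pending.popleft())
        # Primed frames come from the buffer; the remainder streams from storage.
        output.extend(current.primed)
        output.extend(current.clip.frames[PRIME_FRAMES:])
        # Switch buffers at the clip boundary so the next clip starts on the next frame.
        current.release()
        active = 1 - active
    return output


if __name__ == "__main__":
    spots = [Clip("A", ["A0", "A1", "A2", "A3"]), Clip("B", ["B0", "B1"])]
    print(play_back_to_back(spots))
```

In this model, the standby buffer is always primed before the active clip ends, so the switch never waits on a storage read. In a real server, that guarantee would depend on access time being shorter than the playing clip's duration.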

LAG IN SYSTEM ACCESS TIME

The time to get the file from the hard drive to the charge pumps could be termed the "access time." For two channels of MPEG-2 playback, disk access was not a serious performance factor. This held even with older 4 GB Fibre Channel drives spinning at around 5,000 to 7,200 RPM in a modest RAID 3 or RAID 5 configuration. Since then, drive sizes have grown to 3 TB, buffer memory has grown from a few hundred megabytes to dozens of gigabytes, and arrays have grown from a few drives to hundreds per system. What we have not seen is a proportionate reduction in system access time. That performance issue plagues systems whether they are confined locally or extended geographically across networks, including the cloud.

File-based media is not the only thing affected; the performance of the applications that handle the media suffers as well. System performance can vary with day-of-week and time-of-day loading. Systems can slow down when the network is overloaded by the number and types of services it carries. Processor limitations can also reduce throughput. Even when these issues are handled properly, I/O bottlenecks can appear as more calls are made to the applications, forcing the components to work harder to let the application run faster.

Finding an appropriate resolution to performance or I/O bottlenecks can be a perplexing task, and the fixes often add costs whose benefits cannot be fully realized. Striking the right balance of components to achieve the best performance within the available budget can be even more challenging. Unfortunately, the physics of hard disk drives also limits how far access time can be reduced.

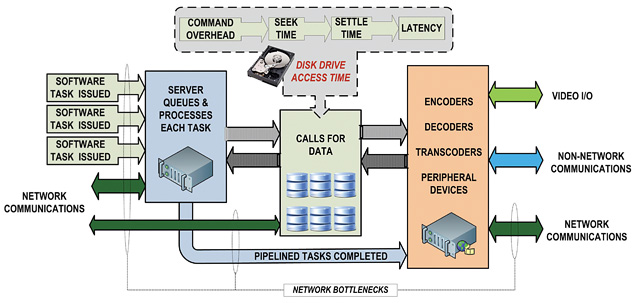

Fig. 1: Performance efficiency: the actual output of a system compared with the desired or planned output, usually expressed as a percentage.

Improving I/O performance without bottlenecks requires a high-performance server with adequate cache memory and processor cores. It further requires a high-bandwidth network and storage devices that offer high bandwidth with minimal latency.
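The performance-efficiency ratio in the Fig. 1 caption reduces to a one-line calculation. In this sketch, the function name and the example figures (45 of 60 planned streams) are hypothetical.

```python
def performance_efficiency(actual: float, planned: float) -> float:
    """Actual output as a percentage of the desired or planned output."""
    if planned <= 0:
        raise ValueError("planned output must be positive")
    return 100.0 * actual / planned


if __name__ == "__main__":
    # Hypothetical example: a server planned for 60 streams sustains only 45.
    print(f"{performance_efficiency(45, 60):.1f}%")
```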

For hard drives, total access time is the sum of command overhead time, seek time, settle time and rotational latency. Command overhead is the time between issuing a command and its actual execution, and it is continually being improved through predictive algorithms. For example, predictive head positioning based on previous event sequences can reduce seek and positioning times dramatically. Retrieving a contiguous set of video frames from a video file is one such sequence.
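The sum described above can be written out directly. The millisecond values in this sketch are hypothetical placeholders rather than figures for any particular drive. The sequential case simply assumes that predictive positioning leaves the head on track, so seek and settle drop to zero.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskAccess:
    """Components of a single hard-drive access, in milliseconds."""
    command_overhead_ms: float
    seek_ms: float
    settle_ms: float
    rotational_latency_ms: float

    def total_ms(self) -> float:
        # Total access time = command overhead + seek + settle + rotational latency.
        return (self.command_overhead_ms + self.seek_ms
                + self.settle_ms + self.rotational_latency_ms)


if __name__ == "__main__":
    # Hypothetical values for a random read versus a predicted sequential read.
    random_read = DiskAccess(command_overhead_ms=0.2, seek_ms=4.0,
                             settle_ms=0.1, rotational_latency_ms=2.0)
    sequential_read = DiskAccess(command_overhead_ms=0.2, seek_ms=0.0,
                                 settle_ms=0.0, rotational_latency_ms=2.0)
    print(f"random:     {random_read.total_ms():.1f} ms")
    print(f"sequential: {sequential_read.total_ms():.1f} ms")
```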

HARDWARE & SILICON ISSUES

Latency and seek time are the biggest sources of inconsistency in a physical hard drive, because the heads cannot know in advance where to move to read the next data from the platter. Drive latency is a huge factor, even though the times appear "small." It is the time spent waiting for the platter to rotate from the head's current position to where the next data can be read. Access time, typically in the 2-to-5 millisecond range, varies with the rotational speed of the platter itself. Physics will not allow access time to drop significantly anytime soon. However, solid-state flash drives can mitigate access time, a topic for a later column.
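The dependence on rotational speed can be checked with a short calculation. On average, the platter must turn half a revolution before the target sector reaches the head, so average rotational latency is half the revolution period. This sketch covers only the rotational component; seek and settle times add to it.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one revolution, in milliseconds."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms per minute
    return ms_per_revolution / 2.0


if __name__ == "__main__":
    for rpm in (5_400, 7_200, 10_000, 15_000):
        print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

A 15,000 RPM drive averages 2 ms and a 7,200 RPM drive about 4.17 ms, both within the range quoted above. Because latency is inversely proportional to spindle speed, halving it requires doubling the RPM.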

Core processor throughput, another access issue, is impacted by drive latency. A processor can perform millions of operations during the hard disk's 2 to 5 milliseconds of access time. IT servers build up long queues of disk requests but must throttle back their processing while the other I/O operations in the queue complete. The result is called "I/O wait time": the processor must wait for data to return from the drive before performing its next set of operations. Unable to move on to other calculations until the data arrives, the processor sits idle, and that wait time drives down overall system performance.
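To put "millions of operations" in perspective, the sketch below makes two counts. It counts the clock cycles that elapse on one core during a single disk wait, and it computes the fraction of time a thread spends waiting. The 3 GHz clock and the 0.5 ms of compute per request are assumptions for illustration, and real instruction throughput per cycle varies by processor.

```python
def cycles_during_wait(clock_ghz: float, wait_ms: float) -> float:
    """Clock cycles that elapse on one core while it waits wait_ms for data."""
    cycles_per_ms = clock_ghz * 1e9 / 1_000
    return cycles_per_ms * wait_ms


def io_wait_fraction(compute_ms: float, wait_ms: float) -> float:
    """Share of a thread's elapsed time spent waiting on I/O, assuming
    compute and I/O for each request run one after the other (no overlap)."""
    return wait_ms / (compute_ms + wait_ms)


if __name__ == "__main__":
    clock_ghz = 3.0  # assumed core clock
    for wait_ms in (2.0, 5.0):
        cycles = cycles_during_wait(clock_ghz, wait_ms)
        print(f"{wait_ms} ms wait = {cycles / 1e6:.0f} million cycles")
    # Assumed 0.5 ms of processing per request followed by a 4 ms disk access.
    print(f"I/O-wait share: {io_wait_fraction(0.5, 4.0):.0%}")
```

At the assumed 3 GHz, a 2 to 5 ms wait spans roughly 6 to 15 million cycles. A thread that computes for 0.5 ms and then waits 4 ms spends about 89 percent of its time waiting.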

Tiny fragments of wait time may not have a monumental impact on a single thread of processing. Multiplied by hundreds to thousands of processes per frame of video, however, they show why another philosophy is needed, one that balances the performance equation in a cost-effective and reliable way. We await the next evolution of storage technology to address this issue.
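The multiplication argument can be made concrete by comparing accumulated wait per frame with the frame period. This sketch assumes 29.97 frames/s (30000/1001) and a hypothetical 50-microsecond wait per operation. It treats every wait as serialized, which is a worst case; overlapping or parallel I/O would reduce the total.

```python
FRAME_RATE = 30_000 / 1_001  # 29.97 frames/s, assumed for illustration


def frame_budget_ms(frame_rate: float = FRAME_RATE) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1_000.0 / frame_rate


def serialized_wait_ms(waits_per_frame: int, wait_us: float) -> float:
    """Total wait per frame if every small wait is serialized (worst case)."""
    return waits_per_frame * wait_us / 1_000.0


if __name__ == "__main__":
    budget = frame_budget_ms()
    for waits in (100, 500, 1_000):
        total = serialized_wait_ms(waits, wait_us=50.0)
        verdict = "within" if total < budget else "exceeds"
        print(f"{waits:>5} waits x 50 us = {total:.1f} ms ({verdict} {budget:.2f} ms budget)")
```

Under these assumptions, 100 or 500 waits per frame fit inside the roughly 33.37 ms frame period. However, 1,000 waits add up to 50 ms and overrun it.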

Karl Paulsen, SMPTE Fellow and SBE CPBE, is Diversified Systems' chief technologist. Read more about other storage topics in his recent book "Moving Media Storage Technologies." Contact Karl at kpaulsen@divsystems.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.