JPEG 2000 over IP

Last month, we talked about Ethernet and IP and the advantages of a layered network stack. Let’s look at an application that uses these layers — the streaming of JPEG 2000 over IP.

JPEG 2000

JPEG 2000 (J2K) is one of a number of compression formats that professional media companies use every day all over the world. This article does not go into detail about how J2K works (there are many excellent tutorials and books on the subject), but there are still a few things you should know.

First, J2K is generally used when high quality is required, such as for backhaul of national sporting events or for transfer of content between production facilities. Second, it can be configured for lossless compression, meaning that it is possible to prove that the video, after a compression/decompression cycle, is mathematically identical to the video prior to compression. Finally, the J2K specification does not cover audio; it only tells you how to compress video images.
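Lossless operation is possible because J2K's lossless path uses integer transforms that can be undone exactly. As a small illustration, here is a Python sketch of the reversible color transform (RCT) from JPEG 2000 Part 1. It is only one stage of the codec (the reversible 5/3 wavelet and the entropy coder are not shown), and the function names are our own.

```python
from itertools import product


def rct_forward(r: int, g: int, b: int) -> tuple[int, int, int]:
    """JPEG 2000 reversible color transform: integers in, integers out."""
    y = (r + 2 * g + b) // 4  # floor division, matching the floor in the standard
    u = b - g
    v = r - g
    return y, u, v


def rct_inverse(y: int, u: int, v: int) -> tuple[int, int, int]:
    """Exact inverse of rct_forward (Python's // floors negative values too)."""
    g = y - (u + v) // 4
    r = v + g
    b = u + g
    return r, g, b


# Spot-check a grid of 8-bit values, including the extremes 0 and 255.
samples = list(range(0, 256, 15))  # 0, 15, ..., 255
mismatches = [rgb for rgb in product(samples, repeat=3)
              if rct_inverse(*rct_forward(*rgb)) != rgb]
print("mismatches:", len(mismatches))  # prints "mismatches: 0"
```

The round trip is exact for any integers, not just the sampled grid: since r + 2g + b equals (r + b - 2g) + 4g, the floor in the inverse recovers g exactly, and r and b follow from the differences.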

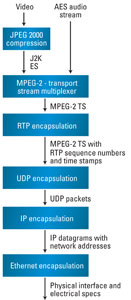

Figure 1. This shows the transport of J2K with audio over IP, illustrating how a layered approach is applied in a working scenario.

In the 1950s, AT&T (note the capital letters: we are talking about the old telephone company) built a nationwide terrestrial video network for the big three television networks. This was an RF-based analog system that remained in place for many years. In the 1960s, AT&T launched communications satellites, and over time AT&T and other satellite operators added video capability to these platforms. As a result, in the 1980s, satellite became the dominant long-haul technology. During the dot-com boom, tens of thousands of miles of fiber optic cable were installed all over the country. The boom was followed by a bust, but the fiber was already in the ground. Thanks to this, megabit and now gigabit networking has become available on long-haul networks, in some cases at surprisingly reasonable prices.

If you remember from last month’s article, we said that one of the keys to networking is layering and encapsulation. Packetized networks use packets composed of a header and a payload. The header contains the information needed to perform the functions of that particular layer, and the payload contains the information we want to transport across the network. Each layer performs a specific function. Let’s look at a specific example, the transport of J2K with audio over IP, to see how a layered approach is applied in a working scenario.


Figure 1 shows the protocol stack used in this case. We start with live professional video and audio, perhaps the output of a sports production truck. The video out of the truck is compressed using J2K, resulting in a JPEG 2000 Elementary Stream (ES). The audio coming out of the truck is already an AES stream.

Using MPEG-2

As mentioned earlier, the J2K standard says nothing about audio. Fortunately, we can use a portion of the MPEG-2 specification to multiplex the J2K ES and the AES audio into a single MPEG-2 Transport Stream (TS) in a standardized way. This is an important point: The MPEG-2 specification covers all sorts of things besides compression. So, even though we feed this J2K video through equipment that follows the MPEG-2 specification, the video is still J2K-compressed; the MPEG-2 multiplexer simply combines it with the AES audio. The result is a single MPEG-2 TS.
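To make the multiplexing idea concrete, the Python sketch below builds fixed-size 188-byte TS packets and interleaves two streams by giving each its own packet identifier (PID). The PID values and the round-robin scheduling are hypothetical simplifications; a real multiplexer also wraps each stream in PES packets, emits PAT/PMT tables so receivers can find the PIDs, sets the payload-unit-start flag at PES boundaries, and schedules packets according to bit rate and timing.

```python
TS_PACKET_SIZE = 188
TS_HEADER_SIZE = 4
TS_PAYLOAD_SIZE = TS_PACKET_SIZE - TS_HEADER_SIZE  # 184 bytes
SYNC_BYTE = 0x47


def ts_packet(pid: int, continuity_counter: int, payload: bytes,
              payload_unit_start: bool = False) -> bytes:
    """Build one payload-only MPEG-2 TS packet (no adaptation field)."""
    if not 0 <= pid <= 0x1FFF:
        raise ValueError("PID is a 13-bit field")
    if len(payload) != TS_PAYLOAD_SIZE:
        raise ValueError("payload-only packets carry exactly 184 bytes")
    header = bytes([
        SYNC_BYTE,
        (0x40 if payload_unit_start else 0x00) | (pid >> 8),  # TEI=0, PUSI, priority=0, PID high 5 bits
        pid & 0xFF,                                           # PID low 8 bits
        0x10 | (continuity_counter & 0x0F),                   # not scrambled, payload only, 4-bit counter
    ])
    return header + payload


def multiplex(streams: dict[int, list[bytes]]) -> list[bytes]:
    """Interleave 184-byte chunks from several PIDs round-robin into one TS."""
    queues = {pid: list(chunks) for pid, chunks in streams.items()}
    counters = {pid: 0 for pid in streams}
    out = []
    while any(queues.values()):
        for pid, queue in queues.items():
            if queue:
                out.append(ts_packet(pid, counters[pid], queue.pop(0)))
                counters[pid] = (counters[pid] + 1) % 16  # continuity counter wraps at 16
    return out


VIDEO_PID, AUDIO_PID = 0x100, 0x101  # hypothetical PID assignments
ts = multiplex({VIDEO_PID: [b"\xAA" * 184] * 3, AUDIO_PID: [b"\xBB" * 184] * 2})
print([hex(((p[1] & 0x1F) << 8) | p[2]) for p in ts])
```

The receiver's demultiplexer does the reverse: it reads the 13-bit PID from each header and routes the payload to the video decoder or the audio output.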

The MPEG-2 TS contains information that helps receivers reconstruct timing between video and audio streams. While this is vital to reproducing video and audio, these timestamps do not provide everything we need in order to deal with what happens in the real world on long-haul IP networks. Let’s look at some of these networks’ characteristics.
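The main timing reference inside the TS is the Program Clock Reference (PCR), a sample of the encoder's 27 MHz system clock carried in the adaptation field of some TS packets; the decoder uses it to lock its own clock. The Python sketch below, with hypothetical function names, packs and unpacks the 6-byte PCR field laid out in ISO/IEC 13818-1: a 33-bit base in 90 kHz units, 6 reserved bits, and a 9-bit extension counting the remaining 27 MHz ticks.

```python
def encode_pcr(base: int, extension: int) -> bytes:
    """Pack a PCR into its 6-byte layout: 33-bit base, 6 reserved bits, 9-bit extension."""
    if not (0 <= base < 2**33 and 0 <= extension < 300):
        raise ValueError("base is 33 bits; extension runs 0..299")
    raw = (base << 15) | (0x3F << 9) | extension  # reserved bits set to 1
    return raw.to_bytes(6, "big")


def decode_pcr(field: bytes) -> int:
    """Return the PCR value in 27 MHz clock ticks."""
    if len(field) != 6:
        raise ValueError("the PCR field is 6 bytes")
    raw = int.from_bytes(field, "big")
    base = raw >> 15         # top 33 bits, 90 kHz units
    extension = raw & 0x1FF  # low 9 bits, 27 MHz remainder
    return base * 300 + extension


def pcr_seconds(ticks: int) -> float:
    return ticks / 27_000_000


print(pcr_seconds(decode_pcr(encode_pcr(90_000, 0))))  # prints 1.0
```

Note what the PCR does not tell you: it describes the encoder's clock, not when a packet left the sender or what happened to it in transit.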

As IP packets travel over a network, they can take different paths from sender to receiver, and even on a single path the queueing delay in each router varies from packet to packet. As a result, the time between packet arrivals varies; this variation is called jitter. In some cases, packets can arrive out of order or even be duplicated within the network. Information about what has happened to packets as they transit the network allows smart receiver manufacturers to do all sorts of things to ensure that the video and audio at the receive end are presented as a smooth stream. What we need is a way to embed information in the packets when they are transmitted, so that the receiver can adjust for network behavior.
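If each packet carries the time it was sent, the receiver can put a number on this arrival-time variation. One widely used measure is the interarrival jitter estimator defined for RTP in RFC 3550, sketched below in Python. As a simplifying assumption, both send and arrival times are given in seconds; RFC 3550 itself works in RTP timestamp units.

```python
def interarrival_jitter(send_times: list[float], arrival_times: list[float]) -> float:
    """Running jitter estimate in the style of RFC 3550, same units as the inputs."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in one-way transit time between consecutive packets.
        d = (arrival_times[i] - arrival_times[i - 1]) - (send_times[i] - send_times[i - 1])
        # Exponential smoothing with gain 1/16, as RFC 3550 specifies.
        jitter += (abs(d) - jitter) / 16.0
    return jitter


print(interarrival_jitter([0, 1, 2, 3], [5, 6, 7, 8]))  # constant delay: prints 0.0
print(interarrival_jitter([0, 1], [5, 7]))              # one packet delayed 1 s: prints 0.0625
```

A constant network delay, however large, produces zero jitter; only changes in delay show up, which is exactly what a receive buffer has to absorb.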

RTP

Real-time Transport Protocol (RTP) allows manufacturers to insert precise time stamps and sequence numbers into packets at the transmitter. If we use these time stamps to indicate the precise time when the packets were launched, then at the receiver we can see trends across the network. Is network delay increasing? What are the implications for buffer management at the receiver? This information allows receivers to adjust in order to produce a continuous stream at the output. RTP sequence numbers are simply 16-bit counters in the RTP header that increase by one with each packet sent, wrapping back to 0 after 65535. At a receiver, if you receive a packet stream in the order [1], [2], [4], [3], you know immediately that you need to reorder packets 3 and 4 in order to present the information to the MPEG-2 TS demultiplexer in the order in which it was transmitted.
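The reordering step can be sketched as a small buffer keyed by sequence number. This Python class is a hypothetical illustration, not any particular receiver's design: it assumes the first packet it sees starts the stream, drops duplicates and late arrivals, and waits indefinitely for a missing packet, whereas a real receiver would give up after a timeout or try to recover the packet (for example with FEC).

```python
from typing import Dict, List, Optional

SEQ_MODULO = 65536  # RTP sequence numbers are 16 bits


class ReorderBuffer:
    """Release packets in RTP sequence-number order, dropping duplicates."""

    def __init__(self) -> None:
        self.expected: Optional[int] = None
        self.pending: Dict[int, bytes] = {}

    def push(self, seq: int, payload: bytes) -> List[bytes]:
        if self.expected is None:
            self.expected = seq  # assume the first packet seen starts the stream
        # How far ahead of the next expected number this packet is, allowing for wraparound.
        ahead = (seq - self.expected) % SEQ_MODULO
        if ahead >= SEQ_MODULO // 2 or seq in self.pending:
            return []  # late packet (already released) or a duplicate still queued
        self.pending[seq] = payload
        released = []
        while self.expected in self.pending:
            released.append(self.pending.pop(self.expected))
            self.expected = (self.expected + 1) % SEQ_MODULO
        return released


buf = ReorderBuffer()
for seq in [1, 2, 4, 3]:
    print(seq, buf.push(seq, f"p{seq}".encode()))
```

Packet 4 is held until packet 3 arrives, and then both are released in order, which is the [1], [2], [4], [3] case described above.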

The next layer is User Datagram Protocol (UDP) encapsulation. MPEG-2 TS packets are 188 bytes long, and they need to be mapped into larger packets for network transmission; the SMPTE 2022-2 standard describes how to do this. UDP is designed to provide a simple scheme for building packets for network transmission. Transmission Control Protocol (TCP) is an alternative at this layer, but TCP is a much heavier protocol that is not well suited to professional live video transmission, largely because it retransmits lost packets and slows its sending rate when it detects congestion, both of which add unpredictable delay to a live stream.
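Here is a Python sketch of that mapping under a few stated assumptions: seven TS packets (7 × 188 = 1,316 bytes) per datagram, which is a common choice rather than a requirement; the static RTP payload type 33 for MPEG-2 TS from RFC 3551; a single fixed RTP timestamp for simplicity; and hypothetical port numbers. The UDP checksum is set to zero, which over IPv4 means "no checksum computed."

```python
import struct

RTP_VERSION = 2
PT_MP2T = 33                 # static RTP payload type for MPEG-2 TS (RFC 3551)
TS_PACKETS_PER_DATAGRAM = 7  # assumed grouping: 7 x 188 = 1316 bytes


def rtp_packet(seq: int, timestamp: int, ssrc: int, payload: bytes) -> bytes:
    """Prepend a 12-byte RTP fixed header (no padding, extension, CSRCs or marker)."""
    first_byte = RTP_VERSION << 6   # V=2, P=0, X=0, CC=0
    second_byte = PT_MP2T & 0x7F    # M=0, PT=33
    header = struct.pack("!BBHII", first_byte, second_byte,
                         seq & 0xFFFF, timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
    return header + payload


def packetize(ts_packets: list[bytes], first_seq: int, timestamp: int, ssrc: int) -> list[bytes]:
    """Group TS packets seven at a time into RTP packets with consecutive sequence numbers."""
    out = []
    for n, i in enumerate(range(0, len(ts_packets), TS_PACKETS_PER_DATAGRAM)):
        chunk = b"".join(ts_packets[i:i + TS_PACKETS_PER_DATAGRAM])
        out.append(rtp_packet(first_seq + n, timestamp, ssrc, chunk))
    return out


def udp_datagram(src_port: int, dst_port: int, payload: bytes) -> bytes:
    """Prepend an 8-byte UDP header; checksum 0 means 'not computed' over IPv4."""
    return struct.pack("!HHHH", src_port, dst_port, 8 + len(payload), 0) + payload


ts = [b"\x47" + bytes(187)] * 14  # 14 dummy TS packets
rtp = packetize(ts, first_seq=100, timestamp=0, ssrc=0x1234)
print([len(p) for p in rtp], len(udp_datagram(5004, 5004, rtp[0])))  # hypothetical ports
```

In a real application the operating system builds the UDP header for you: you would hand each RTP packet to a UDP socket with `socket.sendto`. The header is built by hand here only to show what that layer adds.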

UDP datagrams are then encapsulated in IP datagrams, and the IP layer adds network source and destination addresses. These addresses allow the network to route data from one location to another without the use of external routing control logic.
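The Python sketch below builds a minimal 20-byte IPv4 header around a UDP payload to show where those addresses sit. The addresses are from the documentation ranges reserved by RFC 5737, the TTL and "don't fragment" flag are assumed choices, and the checksum is the standard Internet checksum from RFC 1071. In practice the operating system builds this header.

```python
import ipaddress
import struct


def ipv4_checksum(header: bytes) -> int:
    """Internet checksum: ones' complement of the ones' complement sum of 16-bit words."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(word for (word,) in struct.iter_unpack("!H", header))
    while total > 0xFFFF:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def ipv4_header(src: str, dst: str, payload_len: int, ttl: int = 64) -> bytes:
    """Build a 20-byte IPv4 header (no options) for a UDP payload."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5, 0, 20 + payload_len,  # version 4, 5 x 32-bit words; TOS; total length
        0, 0x4000,                          # identification; flags = don't fragment
        ttl, 17, 0,                         # protocol 17 = UDP; checksum placeholder
        ipaddress.IPv4Address(src).packed,  # source address
        ipaddress.IPv4Address(dst).packed,  # destination address
    )
    return header[:10] + struct.pack("!H", ipv4_checksum(header)) + header[12:]


hdr = ipv4_header("192.0.2.1", "198.51.100.7", payload_len=8 + 12 + 1316)
print(len(hdr), ipv4_checksum(hdr))  # a valid header re-checksums to 0
```

Every router along the way reads the destination address in this header and forwards the datagram one hop closer, which is why no external routing control is needed.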

Finally, the IP datagrams are encapsulated in Ethernet frames. The Ethernet layer specifies the electrical and physical interfaces, and it adds Ethernet (MAC) addressing, which ties an address to a specific physical device, something IP addressing does not do.
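Adding up the headers shows what each layer costs. This Python arithmetic assumes seven TS packets per datagram, IPv4 without options, and untagged Ethernet (no VLAN). It ignores the Ethernet preamble and inter-frame gap, and any FEC stream that might be sent alongside.

```python
# Per-layer sizes in bytes (assumptions as stated above).
TS_PACKET = 188
TS_PER_DATAGRAM = 7
RTP_HEADER = 12
UDP_HEADER = 8
IPV4_HEADER = 20
ETH_HEADER = 14  # destination MAC, source MAC, EtherType
ETH_FCS = 4      # frame check sequence

payload = TS_PACKET * TS_PER_DATAGRAM                          # 1316 bytes of TS
ip_datagram = payload + RTP_HEADER + UDP_HEADER + IPV4_HEADER  # 1356, under the 1500-byte MTU
frame = ip_datagram + ETH_HEADER + ETH_FCS                     # 1374 bytes per Ethernet frame


def ethernet_rate(ts_bps: float) -> float:
    """Ethernet frame bit rate needed to carry a TS of the given bit rate."""
    return ts_bps * frame / payload


print(payload, ip_datagram, frame, f"{payload / frame:.1%} of each frame is TS")
```

With these assumptions, roughly 96 percent of each frame is TS data, so the layering costs only a few percent of link capacity.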

Hopefully, this real-world example helps you to understand that layered systems are critical to the success of modern networked professional video, and that each layer adds something unique to the system.

Brad Gilmer is executive director of the Video Services Forum, executive director of the Advanced Media Workflow Association and president of Gilmer & Associates.