Managing Valuable Space Through Provisioning

Karl Paulsen

You’re just about to do a software update or start a new project and you find you’re too close to the storage limits to continue. The data you’re about to use won’t fit on the local drive, a transportable drive or even the allocated space on the video server’s storage array.

Now you must begin the process of purging data, the one task you dread and have procrastinated about until it’s now too late. It happens to all of us, but in some cases, it could have been prevented.

Storage management or under-provisioning should never be a workflow stopper, but it often is. When storage saturation occurs you can either buy more storage or you can free space by dumping files. From a cost containment perspective, deleting unnecessary, outdated or unwanted files continues to be the most effective means of controlling storage space.

However, manually deleting files is time-consuming and unproductive, and it often leads to the wrong files being deleted unintentionally and unnoticed.

Depending upon the type of data stored, administrators can turn to technologies that IT departments often use, such as data compression or data deduplication, or head off the problem by using “thin-provisioning.”

Thin-provisioning essentially virtualizes storage capacity, much like the virtualization of server capacity. Operationally, an administrator creates a pool of virtual storage, which is then doled out to users based upon their needs, the project or the available storage. The storage allocation may also support both the working applications and the data content.

Storage provisioning may be simple or complex in its capabilities. Even the simplest of media asset workflow managers can include provisioning, which is advantageous in environments where there are multiple workstations that would be shared with many more potential users.

The concept of storage provisioning is somewhat psychological. Users know they only have so much space, so they will inherently manage that space more efficiently. Administrators may allocate only a small pool of actual storage, often less than what is probably needed. This drives users to be cognizant of the unnecessary files, duplicates, versions and the like that they retain.

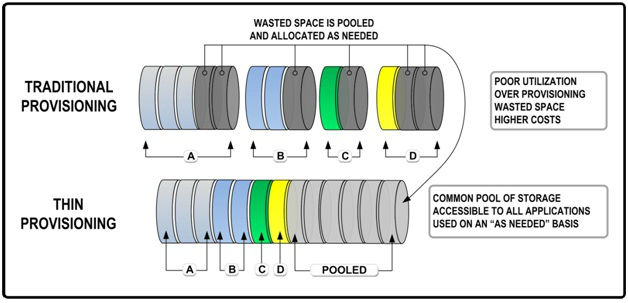

Thin-provisioning works in a collaborative system where shared (pooled) storage supports several users on any number of workstations (see Fig.1).

Fig. 1: Thin provisioning reduces storage waste by pooling unused storage so that it can be allocated on an as-needed basis. Thin provisioning should support file-based volumes and block-based LUNs; as well as provide the ability to dynamically resize to support storage growth on a “just-in-time” basis. Workflow-wise, as one person completes their task, they then free up their storage so the provisioning agent can then recapture the user’s storage into the overall pool, restoring its available to others almost immediately.
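The allocate-and-reclaim cycle described here can be sketched as a toy model. This is only an illustration under stated assumptions: the `ThinPool` class, its method names, the user names and the capacity figures are all hypothetical and do not correspond to any particular storage product.

```python
class ThinPool:
    """Toy model of a thin-provisioned pool (hypothetical, for illustration)."""

    def __init__(self, physical_gb):
        self.physical_gb = physical_gb  # real capacity backing the pool
        self.quota_gb = {}              # virtual size promised to each user
        self.used_gb = {}               # physical space each user has consumed

    def provision(self, user, virtual_gb):
        # Promising space consumes nothing physical until data is written.
        self.quota_gb[user] = virtual_gb
        self.used_gb[user] = 0

    def free_gb(self):
        return self.physical_gb - sum(self.used_gb.values())

    def write(self, user, gb):
        if self.used_gb[user] + gb > self.quota_gb[user]:
            raise ValueError(f"{user} would exceed the virtual quota")
        if gb > self.free_gb():
            raise RuntimeError("pool exhausted: no user can write")
        self.used_gb[user] += gb

    def release(self, user):
        # The user has finished; the space returns to the shared pool.
        reclaimed = self.used_gb.pop(user)
        del self.quota_gb[user]
        return reclaimed


pool = ThinPool(physical_gb=1000)
pool.provision("edit_bay_1", 600)
pool.provision("edit_bay_2", 600)  # 1200 GB promised against 1000 GB real
pool.write("edit_bay_1", 500)
pool.write("edit_bay_2", 400)
free_before = pool.free_gb()
pool.release("edit_bay_1")         # task complete, storage recaptured
free_after = pool.free_gb()
print(free_before, free_after)
```

In this sketch the two users are promised more space than physically exists, which works only as long as their combined real usage stays under the physical limit. Releasing one user's space makes it available to the other at once.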

Managing storage through thin-provisioning does require a conscious level of tracking, alerting and sometimes even direct human interaction. The drawback comes when the thinly provisioned system simply runs out of storage, for whatever reason.
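As a rough illustration of the tracking and alerting involved, a monitoring script might classify how full the pool is and escalate accordingly. The function name `pool_status` and the 80 percent and 95 percent thresholds below are assumptions chosen for the example, not recommended values.

```python
def pool_status(used_gb, physical_gb, warn_at=0.80, critical_at=0.95):
    """Classify pool fill level; thresholds are illustrative assumptions."""
    fill = used_gb / physical_gb
    if fill >= critical_at:
        return "critical"  # e.g. page an administrator
    if fill >= warn_at:
        return "warning"   # e.g. ask users to purge or archive
    return "ok"


for used in (500, 850, 990):
    print(used, pool_status(used, physical_gb=1000))
```

A real deployment would take the usage figures from the storage system's own reporting rather than from hard-coded numbers.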

At this point the entire workflow may stop, not just for one server or user, but for everyone. Administrators often have another storage pool hidden away, which can then be allocated until the overall situation becomes manageable.

When a system is thinly provisioned to manage both raw workspace storage and the storage necessary for the actual applications, there can be risks to everyone on the system should a drive failure occur.

BRINGING DOWN THE HOUSE

Typically, in conventional disk arrays, if the capacity on one or even two disks is exceeded, the remaining disks may be able to pick up the load, allowing the applications to run unimpeded. Because thinly provisioned systems simultaneously pool storage from multiple disks while managing multiple applications, there is a risk (without careful monitoring) that one failure could bring down the whole house.

Planning is essential when looking to include the benefits of thin-provisioning, where different scales will apply to each particular application. For example, in craft editing that occurs on a regular schedule, it’s possible to predict and allocate storage based upon routine workloads from the workgroup.

However, in a pure project environment, more care must be given to how much is allocated, for what period and under which circumstances. For the latter case, this is where an integrated asset management and workflow manager component makes good sense.

One caveat applies less to media-centric workflows than to research or statistical analysis: filling a thinly provisioned array with rapidly accumulating or consolidating data negates the benefits of this technology, mainly because pre-allocating that data space is impractical.

There are other data reduction techniques that support efficient storage management; however, for media applications, these technologies are not as practical as the forms of provisioning just outlined. Data deduplication is the task of removing duplicate files from a system and using pointers to reference the single instance of that unaltered file. Most media application managers found in professional NLEs and MAMs handle this process internally. When a third-party storage pool is in use, there might be merit to having data deduplication storage management available.
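The pointer-based approach to deduplication can be sketched in a few lines. This is a minimal in-memory illustration, assuming whole-file duplicates identified by a SHA-256 content hash. The `DedupStore` class and the file names are hypothetical, and real systems typically work at block level and persist their indexes.

```python
import hashlib


class DedupStore:
    """Keeps one copy of each unique file body; names are just pointers."""

    def __init__(self):
        self.blobs = {}     # content digest -> bytes (the single instance)
        self.pointers = {}  # file name -> content digest

    def put(self, name, data):
        digest = hashlib.sha256(data).hexdigest()
        self.blobs.setdefault(digest, data)  # store only if not seen before
        self.pointers[name] = digest

    def get(self, name):
        return self.blobs[self.pointers[name]]

    def stored_bytes(self):
        return sum(len(b) for b in self.blobs.values())


store = DedupStore()
clip = b"A" * 1000
store.put("promo_v1.mxf", clip)
store.put("promo_copy.mxf", clip)      # duplicate: adds a pointer only
store.put("bumper.mxf", b"B" * 500)
print(store.stored_bytes())            # bytes held for three named files
```

Three file names are visible to users, but only two bodies occupy space because the duplicate resolves to the same stored instance.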

Compression is the other technology typically used to increase storage space. In this context, compression refers to “data compression” and not the media industry’s understanding of “video compression.” Fortunately, or unfortunately depending upon your perspective, most media files are already in a compressed “video state,” so taking advantage of data compression techniques will not make any serious difference in the space availability of video or moving media storage systems.
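A quick experiment shows why data compression gains little on such files. The sketch below uses Python's standard `zlib` module and compares a highly repetitive text payload with random bytes. The random bytes are only a stand-in, an assumption of this example, for the high-entropy output of a video codec; no real media file is involved.

```python
import os
import zlib


def compression_ratio(data):
    """Compressed size divided by original size (lower means more savings)."""
    return len(zlib.compress(data, 9)) / len(data)


# Repetitive, log-like text compresses very well.
text_like = b"scene 12, take 3, camera A\n" * 40000

# Random bytes approximate already-compressed media (assumption for this demo).
video_like = os.urandom(1_000_000)

text_ratio = compression_ratio(text_like)
video_ratio = compression_ratio(video_like)
print(f"text-like:  {text_ratio:.4f}")
print(f"video-like: {video_ratio:.4f}")
```

The text-like payload shrinks to a small fraction of its size, while the video-like payload stays at essentially its original size, which is the behavior the paragraph above describes.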

In most cases, the users of digital editing and media asset management systems already enjoy the benefits of storage management afforded them by virtue of the actual platforms or applications that support their NLEs or MAMs. For those without a MAM who use a variety of storage arrays and systems for all their media applications, the topics presented here have merit and may be worthy of further exploration.

The bottom line is that effective management of the storage pool requires long-term planning and short-term awareness in order to avoid the traps of over-provisioning a storage system.

Karl Paulsen (CPBE) is a SMPTE Fellow and chief technology officer at Diversified Systems. Read more about these and other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.