Planning MAM Architectures

Media asset management (MAM) systems derive data from many different activities within the digital workflow. For MAM consistency it is imperative to track what happens each time a file is generated, manipulated and moved through the workflow.

Those paths, functions and activities—elements of the overall system specification—define storage capacity, ancillary components and the performance of the media processing devices such as render engines, transcoders and the like.

In planning an enterprise MAM, it is critical to detail which activities require resources from servers, storage systems and applications. For example, during live ingest from SDI video sources, every device that touches the SDI stream needs to be accounted for. If a PC workstation holds a video capture card, the card will most likely come with a MAM software plug-in that exports the metadata the card captures and/or generates.

This card can also create a proxy, which the MAM system treats as a separate file with separate, but linked, metadata. Each element is tracked in the MAM database and later used for search and file retrieval purposes.
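As a rough illustration of "separate, but linked" metadata, the following Python sketch keeps essence and proxy as individual records, each with its own metadata, and ties the proxy back to its source through a parent reference. The class names, field names, paths and the in-memory catalog are all hypothetical stand-ins for whatever database a given MAM actually uses.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class AssetRecord:
    """One tracked file (essence or proxy) carrying its own metadata."""
    asset_id: str
    role: str                         # "essence" or "proxy" (labels assumed here)
    path: str
    metadata: Dict[str, str] = field(default_factory=dict)
    parent_id: Optional[str] = None   # link from a proxy back to its essence


class AssetCatalog:
    """In-memory stand-in for the MAM database (illustrative only)."""

    def __init__(self) -> None:
        self._records: Dict[str, AssetRecord] = {}

    def register(self, record: AssetRecord) -> None:
        self._records[record.asset_id] = record

    def proxies_of(self, asset_id: str) -> List[AssetRecord]:
        """Follow the link from an essence file to its proxies."""
        return [r for r in self._records.values() if r.parent_id == asset_id]

    def search(self, key: str, value: str) -> List[AssetRecord]:
        """Find every tracked file whose metadata matches key == value."""
        return [r for r in self._records.values() if r.metadata.get(key) == value]


catalog = AssetCatalog()
catalog.register(AssetRecord("A001", "essence", "/ingest/A001.mxf",
                             {"source": "SDI-1", "title": "Evening News"}))
catalog.register(AssetRecord("A001-P", "proxy", "/proxy/A001.mp4",
                             {"title": "Evening News", "codec": "H.264"},
                             parent_id="A001"))
```

A search on a shared field such as the title returns both records, while `proxies_of("A001")` follows the link from the essence to its proxy.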

FILE ALLOCATION

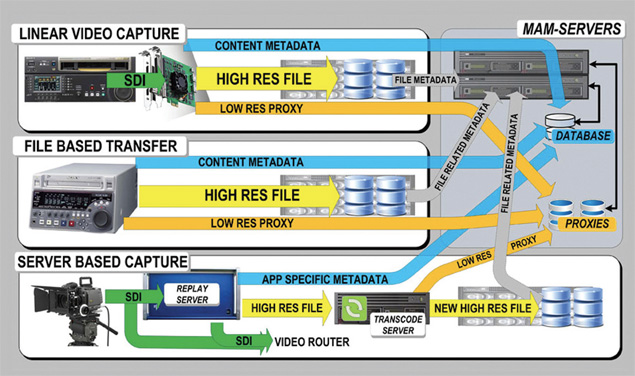

When ingest occurs on an intelligent device such as a replay or video server, there are usually MAM elements integral to that device that would be useful to the enterprise MAM. Should external disk caches be employed, they too will have a file system with a file allocation table, which knows the details of the file and associated metadata. This is also linked to the enterprise MAM. Fig. 1 shows a sampling of the proxy, essence and metadata paths typical of a MAM ingest architecture.

Fig. 1: Details of a typical set of ingest processes showing SDI and file-based ingest and proxy generation with content and file-related metadata paths between the ingest components and the storage subsystems.

Ingest processes may use outboard transcode engines to convert incoming files to a native house format, generate a low-resolution proxy or wrap the file in an alternate container format (such as MXF, GXF or QT). A transcode engine will often use watch folders to receive files and replace them once its operations are completed. Media-aware MAMs may not employ watch folders; they know from metadata (e.g., carried in MXF as XML) what they are supposed to do with each file, based on predefined workflow profiles.
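The profile-driven approach can be pictured as a simple lookup: the file's metadata names a workflow profile, and the profile lists the steps to run. The Python sketch below is only an illustration under that assumption. The profile names, the step names and the `workflow_profile` metadata key are invented for the example and do not come from any particular MAM product or from the MXF specification.

```python
from typing import Dict, List

# Hypothetical workflow profiles: profile name -> ordered processing steps.
WORKFLOW_PROFILES: Dict[str, List[str]] = {
    "news_ingest": ["unwrap", "transcode_house", "make_proxy", "rewrap_mxf"],
    "archive_only": ["make_proxy"],
}


def plan_steps(file_metadata: Dict[str, str]) -> List[str]:
    """Return the processing steps for a file, chosen by the profile
    named in its metadata rather than by the folder it landed in."""
    profile = file_metadata.get("workflow_profile")
    if profile not in WORKFLOW_PROFILES:
        raise KeyError(f"no workflow profile defined for {profile!r}")
    # Return a copy so callers cannot alter the shared profile table.
    return list(WORKFLOW_PROFILES[profile])


steps = plan_steps({"title": "Evening News", "workflow_profile": "news_ingest"})
```

Here the decision travels with the file's metadata, so no folder needs to be polled to find out what should happen next.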

When the MAM is responsible for capturing the original file, creating a working EDL file, generating a proxy or transcoded file and more, each set of processes (unwrap, transcode and rewrap) is an activity that requires computer resources. Each activity is accounted for in the MAM server and at the enterprise (also known as "central") storage level to assess overall bandwidth and performance requirements.

Each path the files traverse across each processing resource is defined in the MAM architecture. Average and peak data volumes between resources (ingest servers, transcoders, processors, storage, etc.) are added up.
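A minimal sketch of that tally, in Python, might look like the following. The resource names and the Mbps figures are made-up sample values, not measurements, and simply adding the peaks assumes the worst case in which every path peaks at the same moment.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Path:
    """One file path between two resources, with its expected data rates."""
    source: str
    destination: str
    avg_mbps: float
    peak_mbps: float


def load_per_resource(paths: List[Path]) -> Dict[str, Tuple[float, float]]:
    """Sum (average, peak) Mbps over every path that touches each resource."""
    totals = defaultdict(lambda: [0.0, 0.0])
    for p in paths:
        # A path loads both of its endpoints.
        for resource in (p.source, p.destination):
            totals[resource][0] += p.avg_mbps
            totals[resource][1] += p.peak_mbps
    return {name: (avg, peak) for name, (avg, peak) in totals.items()}


# Illustrative values only.
paths = [
    Path("ingest_server", "central_storage", 100.0, 200.0),
    Path("central_storage", "transcoder", 50.0, 150.0),
    Path("transcoder", "central_storage", 25.0, 50.0),
]
loads = load_per_resource(paths)
```

With these sample numbers, central storage carries the largest total because every path in the list either starts or ends there, which is usually where a bottleneck shows up first.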

The type of transfer and its interconnect topology are defined relative to the number of primary (and redundant) Fibre Channel ports, GigE and 10 GigE ports, or any other control paths necessary. All expected Fibre Channel bandwidths (2, 4 or 8 Gbps) are allocated so network switches can be properly sized and distributed.
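To show the kind of arithmetic involved, here is a hedged Python sketch that turns a required bandwidth into a port count. The `utilization` derating factor and the rule of doubling the count for redundancy are assumptions made for illustration; real designs should use the usable throughput and redundancy scheme specified for the actual switches and host adapters.

```python
import math


def ports_needed(required_gbps: float, port_gbps: float,
                 utilization: float = 0.7, redundant: bool = True) -> int:
    """Estimate how many ports of a given nominal speed carry a load.

    utilization is an assumed planning factor (the fraction of nominal
    port speed counted as usable); redundant doubles the primary count.
    """
    if required_gbps < 0 or port_gbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("bandwidths must be positive and 0 < utilization <= 1")
    primary = math.ceil(required_gbps / (port_gbps * utilization))
    return primary * 2 if redundant else primary


# Illustrative case: 10 Gbps of aggregate traffic over 4 Gbps ports,
# planned at 50 percent utilization, with a redundant set of ports.
fc_ports = ports_needed(10, 4, utilization=0.5, redundant=True)
```

Running the same calculation for each port speed under consideration gives a quick feel for how the choice of speed changes the size of the switch.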

PRODUCTION CLASS STORAGE

MAM systems charged with handling production workflows and managing those assets must integrate with "production class" storage. In one operational model, editing may be confined to local storage housed in or adjacent to each edit workstation. Another operation may equip the edit platform with self-contained high-performance storage arrays rather than use an "edit-in-place" method based on a central storage pool.

Self-contained subsystems will have their own "mini-MAM" that tracks edit-specific activities, file content or information and application-specific metadata. The enterprise MAM parses the mini-MAM metadata and ensures that it remains linked, through some common definition, to the central file system's master database. Architecturally, multiple MAM servers built upon IT-class servers will handle these activities. MAM servers, composed of combinations of render engines, database servers (such as SQL), client interfaces, etc., collectively enable these activities in series, in the background or concurrently with other operations. Fig. 2 provides a representation of an enterprise MAM system.

Fig. 2: High-level example of a complete IT-centric, enterprise-class media asset management (MAM) system.

The throughput of the MAM/workflow depends on the number of independent or concurrent operational functions, the makeup of the files and the volume of data that moves stage-to-stage throughout the content life cycle. The number of streams handled is also identified in the workflow specification so that servers, storage and applications can manage them in a timely manner without bottlenecks. Planning-wise, when activities must occur in real time, make that a clear priority. Should ancillary functions, such as backup or transcoding for archive or browse, occur in other than real time, make those needs unambiguous.

These functions and factors apply equally to transmission and live play-out operations.

Figuring this all out is not an easy task for the novice. Users should work with experienced systems engineers who know how to clearly establish the operating parameters and expectations as part of defining not only MAM functionality, but also storage and server performance requirements.

In a future article, we'll reverse engineer how a MAM/storage architecture works and look at what impacts occur when changing from one coding format to another, including where it affects the physical requirements of a complete solution.

Karl Paulsen, CPBE, is a SMPTE Fellow and senior technologist at Diversified Systems. Read more about other storage topics in his latest book "Moving Media Storage Technologies." Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.