Preparing for Data Migration, Part 2

We continue from last month's introduction to data migration. We observed that as media organizations evolve, they often find their storage environment inadequate to support their workflows. Sometimes their first approach is to add more storage; in other cases they look to replace the storage platform altogether.

When a facility has a modest amount of physical storage, a moderate level of work activity, or only simple direct-attached storage (DAS), moving the data to new storage won't present much of a challenge, either logistically or financially. However, once you reach the scale of a few hundred to many hundreds of terabytes of storage, or 50 or more concurrent users, these decisions take on an entirely different character.
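To put that scale in perspective, a back-of-the-envelope estimate of bulk copy time helps. This is a minimal Python sketch; the throughput and the "efficiency" derating factor (covering small files, verification passes and competing production traffic) are hypothetical planning inputs, not measured or vendor figures.

```python
# Hypothetical planning helper: rough wall-clock time to copy a storage pool,
# assuming one sustained throughput figure and a derating factor.


def migration_hours(capacity_tb: float, throughput_gb_per_s: float,
                    efficiency: float = 1.0) -> float:
    """Return estimated hours to move capacity_tb terabytes (decimal TB).

    efficiency scales the nominal throughput down to account for small files,
    verification passes and production traffic (an assumed value, 0 < e <= 1).
    """
    if throughput_gb_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("throughput must be positive and 0 < efficiency <= 1")
    total_bytes = capacity_tb * 1e12                     # decimal terabytes
    bytes_per_s = throughput_gb_per_s * 1e9 * efficiency  # effective rate
    return total_bytes / bytes_per_s / 3600


if __name__ == "__main__":
    # Example capacities and rates are illustrative only.
    for tb in (10, 300, 800):
        hours = migration_hours(tb, throughput_gb_per_s=1.0, efficiency=0.6)
        print(f"{tb:>4} TB -> {hours:7.1f} h ({hours / 24:.1f} days)")
```

At an assumed 1 GB/s with 60 percent efficiency, 300 TB works out to roughly 139 hours, close to six days of continuous copying before any verification or cutover work.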

WHAT TO CONSIDER

Driven by collaborative working environments and by ever-larger quantities and sizes of files, data migration today involves a broader set of considerations than it did even five years ago. A data migration usually coincides with a technology refresh aimed at improving efficiency as well as increasing capacity or changing platforms. Because this opportunity doesn't come often, it can be fraught with risk if every aspect is not considered.

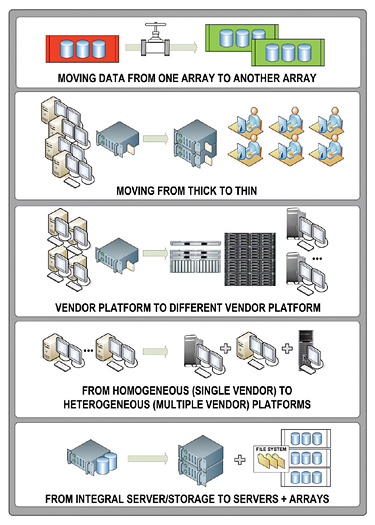

Fig. 1: Possible migration environments that facilities may face as they grow or as their production and distribution needs expand.

As Fig. 1 suggests, these changes will affect different levels of the system whenever data is moved onto augmented storage, storage is replaced altogether, or operating systems and hardware platforms change.

As these media-centric systems expand, not only does the program content grow, but so do the databases that support it. It is important to understand not only the audio and video nature of these files, but also the interrelationships these systems have with metadata, clip lists, EDLs, business processes and the like.

One check that is often overlooked is how efficiently storage is being managed. Examine this long before deciding to replace your storage platform wholesale simply because it lacks capacity. Be certain your current workflow practices are not exacerbating the capacity problem. Look at storage management practices that can eliminate extraneous and duplicated sets of large files. Understand how versioning of your content creates duplicate file sets. Look for places where sets of clips differ by only a few seconds of content, such as a tag at the end or a graphic overlay in the middle.

If there is a separate file for each rough-cut version, it is likely that multiple copies of the "final" release version are also being kept. Production workflows often create multiple copies of the same content without anyone realizing it, taxing storage systems tremendously. Software tools that help manage these inefficiencies should be considered as part of any storage update.
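No specific tool is named here, but the simplest form of this check can be sketched. The Python below is a hypothetical helper (the names `find_duplicates` and `file_digest` are illustrative) that groups byte-identical files by SHA-256, hashing only files that share a size. It catches exact copies only; versions that differ by a tag or an overlay are different files and need version-aware asset management to reconcile.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks so large media files don't fill RAM."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: str) -> dict[str, list[str]]:
    """Hypothetical helper: group byte-identical regular files under root.

    Files are bucketed by size first (cheap); only same-size files are hashed,
    because files of different sizes cannot be identical. Symlinks are skipped.
    """
    by_size: dict[int, list[str]] = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    by_hash: dict[str, list[str]] = defaultdict(list)
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a unique size means no possible duplicate
        for path in paths:
            by_hash[file_digest(path)].append(path)

    # Keep only digests shared by two or more files.
    return {d: sorted(p) for d, p in by_hash.items() if len(p) > 1}
```

For example, `find_duplicates("/mnt/media")` (a hypothetical mount point) returns a dict mapping each digest to the list of paths holding that identical content.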

OUT WITH THE OLD

If you're going to replace your current storage system, don't migrate your old, often bad, practices along with it. Bad habits generally stem from poor system management: the installation looked fine at first, but over time shortcuts crept in and data management suffered. A data migration is the time to fix the issues that may have forced you to replace, rather than simply augment, your current storage environment.

Remediate orphaned storage, and look for ways to gain efficiency, optimize performance and make the best use of the new storage platform. Automate whatever tasks you can to eliminate the manual processes previously used for data migration, production or system reconciliation. Look for tools that accelerate the movement of data. Such tools and practices will help not only with the current migration but also with future migrations as storage continues to expand.
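As one illustration of automated reconciliation, assume "orphaned" means media files on disk that no catalog or asset-management record references. The sketch below compares a directory tree with a set of referenced paths, for instance exported from the asset database (that export and the `reconcile` name are assumptions), and reports both orphans and broken references.

```python
import os


def reconcile(root: str, catalog_paths: set[str]) -> tuple[set[str], set[str]]:
    """Hypothetical helper: compare files on disk with paths a catalog references.

    Returns (orphans, missing):
      orphans - files on disk that the catalog does not reference
      missing - catalog references with no file on disk
    All paths are relative to root and normalized with os.path.normpath.
    """
    on_disk: set[str] = set()
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            on_disk.add(os.path.normpath(os.path.relpath(full, root)))

    referenced = {os.path.normpath(p) for p in catalog_paths}
    orphans = on_disk - referenced
    missing = referenced - on_disk
    return orphans, missing
```

Orphans are candidates for review or archiving before the move rather than automatic deletion; the missing set flags catalog entries that will break no matter which platform the data lands on.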

Managing risks, such as security leaks and extended downtime, should be part of the migration plan. Take care when migrating data between arrays from different vendors: in the process, permissions and security settings (whether temporary or legacy) are often left behind, leaving your data open to theft or corruption.

If you're changing operating systems, moving data between file systems (e.g., from NTFS to NFS-exported storage) can also strip permission and security settings in ways that stay hidden from the casual user but are quite obvious to the skilled hacker. At the other extreme, permissions could be lost entirely, preventing access to the migrated data later on.
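A simple post-copy audit can surface some of this drift. The following sketch, built around a hypothetical `permission_drift` helper, walks a source tree and compares POSIX mode bits and owner/group IDs against the copy. It is deliberately limited: it does not read NTFS or NFSv4 ACLs or extended attributes, which need platform-specific tools, so a clean result is necessary rather than sufficient.

```python
import os
import stat


def permission_drift(src_root: str, dst_root: str) -> list[tuple[str, str]]:
    """Hypothetical helper: list files whose POSIX metadata changed in a copy.

    Checks only classic POSIX metadata (permission bits, uid, gid). NTFS ACLs,
    NFSv4/SMB ACLs and extended attributes are NOT examined.
    """
    problems: list[tuple[str, str]] = []
    for dirpath, _dirs, files in os.walk(src_root):
        for name in files:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            dst = os.path.join(dst_root, rel)
            if not os.path.exists(dst):
                problems.append((rel, "missing at destination"))
                continue
            s, d = os.stat(src), os.stat(dst)
            s_mode, d_mode = stat.S_IMODE(s.st_mode), stat.S_IMODE(d.st_mode)
            if s_mode != d_mode:
                problems.append((rel, f"mode {oct(s_mode)} -> {oct(d_mode)}"))
            if (s.st_uid, s.st_gid) != (d.st_uid, d.st_gid):
                problems.append(
                    (rel, f"owner {s.st_uid}:{s.st_gid} -> {d.st_uid}:{d.st_gid}"))
    return sorted(problems)
```

Each entry pairs a relative path with a short description, such as a file that was `0o640` on the old array arriving as `0o644` on the new one.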

Make certain a complete backup to an archive system is made before the data migration begins. The archive provides a layer of insulation, allowing data to be recovered later if anything is lost or corrupted during the move.
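One way to confirm that the backup, and later the migrated copy, really is complete is to record a checksum manifest of the source before anything moves, then verify each copy against it. This is a minimal sketch under that assumption; the function names are illustrative, and in practice the manifest would be saved alongside the archive, for example with `json.dump`.

```python
import hashlib
import os


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of one file, streamed in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: str) -> dict[str, str]:
    """Hypothetical helper: map each file's path (relative to root) to its digest."""
    manifest: dict[str, str] = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            manifest[os.path.relpath(full, root)] = sha256_of(full)
    return manifest


def verify_copy(manifest: dict[str, str], copy_root: str) -> dict[str, list[str]]:
    """Hypothetical helper: check a backup or migrated tree against a source manifest."""
    result: dict[str, list[str]] = {"missing": [], "mismatched": []}
    for rel, digest in sorted(manifest.items()):
        path = os.path.join(copy_root, rel)
        if not os.path.isfile(path):
            result["missing"].append(rel)
        elif sha256_of(path) != digest:
            result["mismatched"].append(rel)
    return result
```

Taking the manifest once, before migration, means the same record can verify the archive, the new storage and any later restore.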

Don't neglect the importance of sufficient training on the new procedures, software and systems. Use all of the ramp-up and transition time to get everyone familiar with the new systems. Be certain you've allowed enough time for debugging before abandoning the previous storage infrastructure.

Lastly, look at the total picture. Start at the front end of the process and move through each and every component, clear to the back end. Data migration needs to be viewed as an end-to-end solution, encompassing the network infrastructure, software and workflow models, so as to get the best value from the new components.

Karl Paulsen, CPBE, is a SMPTE Fellow, technologist and consultant to the digital media and entertainment industry with Diversified Systems. Contact him at kpaulsen@divsystems.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.