Selecting Storage Solutions of the Future

The expanding roles that high-definition, 3D-stereoscopic and higher-resolution images play are impacting many aspects of the media and entertainment industry. One change is in the high-performance storage platform. Previously reserved for major film and animation productions, these platforms are taking on new requirements and dimensions, requiring new thinking with careful selection criteria.

From the roots of the simple digital disk recorder, videoservers, data servers and storage platforms now need to address a growing multi-gigabyte file domain. A crucial element in digital production, the storage platform exists to help produce content more efficiently and on time. Today, when an organization embarks on a new production or business opportunity, it no longer has the luxury of spending cubic dollars on new technology in the hope that the opportunity will be fully successful. In short, it generally starts out with just enough resources to provide the services required and, if successful, expands those resources to handle the growth.

FUTURE NEEDS

Selecting a storage platform that will meet the needs of the future can be a daunting task. Several decisions must be made, and most of them can be fraught with questions or confusion.

In the earlier days of digital media storage, the limitations centered on the storage capacity that the system’s architecture could handle, counterbalanced by the cost of that storage. Early videoserver systems had 4 GB drives, used motion JPEG encoding, and, if you were lucky, held 12–20 hours of standard-definition video. Sufficient for the early adopters’ commercial and promotional libraries, these systems required constant management and purging to keep up with increasing demand.
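As a rough illustration of the capacity arithmetic behind figures like these, the Python sketch below converts array capacity and encoding bit rate into recording hours. The drive count and the 8 Mb/s bit rate are assumed values chosen only for the example; they do not describe any particular videoserver, and the calculation ignores RAID parity and file system overhead.

```python
def recording_hours(capacity_gb: float, bitrate_mbps: float) -> float:
    """Hours of video that fit in capacity_gb at a constant bitrate_mbps.

    Assumes decimal units (1 GB = 10**9 bytes, 1 Mb = 10**6 bits) and
    no allowance for RAID parity or file system overhead.
    """
    capacity_bits = capacity_gb * 1e9 * 8
    seconds = capacity_bits / (bitrate_mbps * 1e6)
    return seconds / 3600


# Hypothetical configuration: 16 drives of 4 GB each, 8 Mb/s encoding.
DRIVES = 16            # assumed drive count
DRIVE_GB = 4           # drive size mentioned in the text
BITRATE_MBPS = 8       # assumed motion JPEG bit rate

hours = recording_hours(DRIVES * DRIVE_GB, BITRATE_MBPS)
print(f"{hours:.1f} hours")  # prints "17.8 hours"
```

Doubling the bit rate halves the hours, which is why early systems needed such frequent purging as picture-quality expectations rose.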

Growth was always a problem. Increasing the capacity of a self-contained videoserver platform meant shelving the hard drives, adding secondary array chassis or even performing a wholesale “forklift upgrade” to a system that could support larger drives, wider bandwidth and higher throughput. Much of this is old history.

Videoservers today employ a more modular and convertible approach to storage, codecs and I/O ports. On the other side of the fence, however, is an entirely different perspective, one where live play-to-air capability is not the main requirement.

Modern digital production processes manipulate, process and manage huge volumes of very large, full-bandwidth files. They serve dozens or hundreds of workstations running multiple sessions on a grueling schedule. These systems can grow to gigantic proportions and must protect the assets they house without fail.

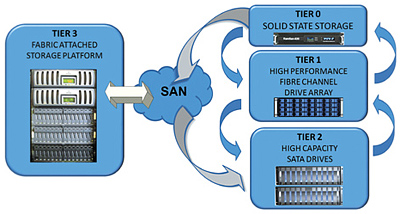

Data Migration: The manual or automated movement of data from costly, high-performance storage platforms to lower-cost storage tiers.

Players in this marketplace had mainly relied on traditional NAS or SAN storage for digital media storage. The high-profile digital media-storage providers, those capable of addressing high-volume production needs, also provide storage to industries that routinely handle hundreds of gigabytes of data, in particular the medical, financial and scientific communities. When these “data” system stores are used in the media and entertainment space, the rules often change. These storage systems must be adapted to properly handle those differences as well as protect the investments the users have in those media assets.

Nonetheless, there are baseline performance issues common to storage solutions for financial data and for media files. How those issues are engineered, and the methodologies one supplier uses compared with another, are the “secret sauce” that separates one platform from another.

In recent times, the continued emphasis on open systems built from commercial off-the-shelf (COTS) components, as a means to control costs and avoid proprietary solutions, has been modestly satisfactory. But buyer beware: understand the tradeoffs in those solutions. The technologies and applications that company A promotes over company B can make or break the performance of their respective products when (and not “if”) you need to expand or extend that storage architecture.

PLATFORM REQUIREMENTS

To get a handle on why you’d select one storage platform (i.e., “solution”) over another, we’ll look at the requirements placed on a storage platform.

First, determine whether you need a SAN or a NAS solution. If high performance, high availability and high throughput are required 110 percent of the time, a SAN is most likely the right choice. SANs utilize high-performance Fibre Channel drives, incorporate sophisticated RAID protection in hardware or software, and may need managed switches to fan out the data buses between array components. The NAS, on the other hand, is simpler to implement, usually uses less-costly SATA drives, is a direct replacement for DAS (direct attached storage) and is expandable, to a degree, without the complications of the SAN architecture.

Next, the file system or systems you plan to employ today, and those you may need tomorrow, should be able to operate harmoniously on the storage platform. You may be happy with CIFS today but find you need NFS tomorrow. Be certain you won’t run into a roadblock or have to build out a separate store (SAN or NAS) to support both.

High-performance, high-accessibility stores are necessary for multiple sessions. These systems may encounter cases where a file is corrupted, or is improperly or incompletely written or read. Advanced stores have a feature called “Snap Shot” or “Checkerboarding” whereby an image of the previous actions or transactions is cached to another portion of the store as a short-term backup. These activities may need to happen quite frequently. When looking at protection schemes in a high-performance storage system, investigate how often a Snap Shot can be made and how large it can be.
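To put numbers on those two questions, a back-of-the-envelope sketch such as the one below can help when comparing vendor answers. It assumes each Snap Shot stores only the data changed since the previous one, which is an assumption for illustration and not a description of how any given platform works; the function name, interval, retention window and change rate are all hypothetical.

```python
def snapshot_plan(interval_min: int, retention_hours: int,
                  change_gb_per_hour: float) -> dict:
    """Estimate snapshot count and reserve space for a simple schedule.

    Assumption: every snapshot holds only the data changed during one
    interval (an incremental scheme), and the change rate is constant.
    """
    snapshots_kept = int(retention_hours * 60 // interval_min)
    gb_per_snapshot = change_gb_per_hour * interval_min / 60
    return {
        "snapshots_kept": snapshots_kept,
        "gb_per_snapshot": gb_per_snapshot,
        "reserve_gb": snapshots_kept * gb_per_snapshot,
        # Work done since the last snapshot is what could be lost.
        "max_loss_window_min": interval_min,
    }


# Hypothetical workload: a snapshot every 15 minutes, kept for 24 hours,
# with 200 GB of changed data per hour across all sessions.
plan = snapshot_plan(interval_min=15, retention_hours=24,
                     change_gb_per_hour=200)
for key, value in plan.items():
    print(f"{key}: {value}")
```

A shorter interval narrows the window of work at risk but raises the number of snapshots the platform must create and track, which is the tradeoff to ask each supplier about.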

LARGE PROJECTS

When working on a large project with thousands of very large individual files, such as a motion picture or long-form editorial, it becomes necessary to parcel out the same files to multiple users for different post-production activities.

One methodology for this distribution is to replicate these files two or more times on the same store, as opposed to simple mirroring. Replication requires managing all the related activities: reads, writes, metadata and versioning. It is a complicated task, governed by the editing systems, by the file management systems on the store, or by both. If you anticipate this level of activity, or want additional protection against data loss, understand how the potential storage platform handles replication and be sure it is part of the specification.

Data migration is the process of taking short-term dormant material and moving it off fast storage to slower nearline stores (or eventually to archive). The policy engine that handles this may be internal to the storage platform or managed by another software application. If it is important to your workflow to have migration managed by your asset management system or workflow applications, be sure the storage platform can interface accordingly without incurring performance degradations.
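The kind of rule a migration policy engine applies can be sketched in a few lines of Python. This is a minimal illustration only: the tier names, the 30-day and 365-day idle thresholds, and the `Asset` record are all hypothetical, and a real policy engine would also weigh factors such as project status and available capacity.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Asset:
    """Hypothetical record for one media file on the fast store."""
    path: str
    size_gb: float
    last_access: datetime


def target_tier(asset: Asset, now: datetime,
                nearline_after: timedelta = timedelta(days=30),
                archive_after: timedelta = timedelta(days=365)) -> str:
    """Pick a storage tier from how long the asset has sat idle.

    The thresholds are assumed defaults for illustration only.
    """
    idle = now - asset.last_access
    if idle >= archive_after:
        return "archive"
    if idle >= nearline_after:
        return "nearline"
    return "online"


# Example run against three made-up assets.
now = datetime(2024, 6, 1)
assets = [
    Asset("/projects/feature/reel1.mxf", 850.0, datetime(2024, 5, 28)),
    Asset("/projects/promo/spot_a.mov", 40.0, datetime(2024, 3, 1)),
    Asset("/projects/old/doc_master.mxf", 1200.0, datetime(2022, 11, 15)),
]
for a in assets:
    print(f"{a.path} -> {target_tier(a, now)}")
```

Whether a rule like this runs inside the storage platform or in the asset management system, the point to verify is that evaluating and executing it does not take throughput away from active sessions.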

Making your storage solution extensible for the future takes careful upfront planning. There are additional sets of requirements that need consideration such as tiered storage, maximum capacities of the storage array or volume, file system addressing and allocation, antivirus protection and the like.

In a future installment, we’ll examine these parameters and give some added pointers on how to choose or specify high-performance storage.

Karl Paulsen is chief technology officer for AZCAR Technologies, a provider of digital media solutions and systems integration for the moving media industry. Karl is a SMPTE Fellow and an SBE Life Certified Professional Broadcast Engineer. Contact him at karl.paulsen@azcar.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.