The advantages of object-based storage

The criteria for data storage are scalability, security, permanence and availability. Making an acronym, we get PASS:

- Permanence means that no data is ever lost;

- Availability means that the user/application requirements for access/performance are met;

- Scalability defines the ease of meeting changing requirements;

- Security defines the granularity and durability of access privileges.

Traditionally, storage has been block-based direct attached storage (DAS)/SAN or file-based via NAS or SAN file sharing (SFS) with some kind of metadata controller. Applications can be designed to work with or without a file system. Simply put, block-based applications are faster; file-based are more flexible.

In the past, certain functions (multitrack audio, grading, etc.) required dedicated block storage. Increasing disk, controller and interconnect speeds have decreased the overhead that the file system adds to the aggregate access speeds; thus, block-based storage is no longer required to meet the needs of production.

The evolution of media storage has gone from achieving the required speed, to making it available to multiple users, to ensuring permanence. First we had block-based storage incorporated into applications. Then we had SAN with direct attached client shared access. Various parity systems were incorporated for redundancy, and different backup schemes were implemented.

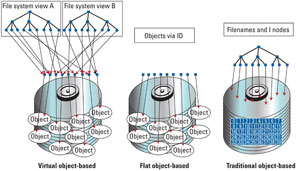

Figure 1. A collection of objects within an object storage device forms a flat file space. File structures are abstracted and access the objects via pointers.

Today, these methods have reached a limit. A major cause is that the current architecture can require rebuild times greater than the mean time between failures (MTBF). In other words, if you lose a disk on a petabyte storage system, the rebuild may not be completed before you lose another disk. Current methods for avoiding this require compromises in PASS. Object-based storage reduces rebuild time dramatically and negates the performance hit. (See Figure 1.)
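A rough way to see why rebuild time matters is to put numbers on it. The Python sketch below is illustrative only: the disk size, the rebuild rate and the number of parallel streams are assumed values, not figures from any product. It also assumes that an object store shortens the rebuild by re-creating the lost objects from many disks at once, which is one common explanation rather than something stated above.

```python
def rebuild_hours(data_tb: float, rate_mb_per_s: float, parallel_streams: int = 1) -> float:
    """Hours needed to re-create data_tb terabytes of lost data.

    rate_mb_per_s is the sustained rebuild rate of one stream (assumed value);
    parallel_streams is how many disks share the rebuild work.
    """
    total_mb = data_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    seconds = total_mb / (rate_mb_per_s * parallel_streams)
    return seconds / 3600


# Hypothetical figures: a 4 TB disk rebuilt at 100 MB/s per stream.
single_spare = rebuild_hours(4, 100)                      # one stream into one spare disk
spread_out = rebuild_hours(4, 100, parallel_streams=40)   # work shared by 40 disks

print(f"single spare: {single_spare:.1f} h, spread over 40 disks: {spread_out:.2f} h")
```

The point of the sketch is the ratio, not the absolute values: with a fixed per-stream rate, the rebuild window shrinks in proportion to the number of disks that take part.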

Object storage device

Replication is performed by the object storage device (OSD). Clients submit a single write operation to the first primary OSD, which is responsible for consistently and safely updating all replicas. This shifts the replication-related bandwidth to the storage cluster’s internal network. There is a lot of discussion about the net results of this architecture change. One point is that no matter how many copies of the data are distributed across how many disks, there is always a residual risk. Object-based storage can limit that risk to less than 2 percent of the data on the failed disk instead of the 100 percent in traditional parity systems. This is because replicas are stored at the object level, allowing for two copies with the same net loss of capacity as a single parity drive.
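The write path described above can be sketched in a few lines of Python. This is a minimal in-memory model, not a real OSD protocol: the `OSD` class, the `replicated_write` function and the object name are hypothetical, and the "cluster network" is simply a loop over the replicas.

```python
from dataclasses import dataclass, field


@dataclass
class OSD:
    """Hypothetical in-memory stand-in for one object storage device."""
    name: str
    objects: dict = field(default_factory=dict)

    def store(self, object_id: str, data: bytes) -> None:
        self.objects[object_id] = data


def replicated_write(primary: OSD, replicas: list, object_id: str, data: bytes) -> int:
    """Handle one client write on the primary OSD.

    The client sends the data once, to the primary. The primary stores the
    object, forwards it to every replica, and returns (acknowledges) only
    after all copies exist. The return value is the number of copies written.
    """
    primary.store(object_id, data)
    for osd in replicas:
        osd.store(object_id, data)  # traffic stays inside the storage cluster
    return 1 + len(replicas)


primary = OSD("osd-0")
replicas = [OSD("osd-1"), OSD("osd-2")]
copies = replicated_write(primary, replicas, "clip-0001", b"essence bytes")
```

From the client's point of view there is a single write call, whatever the replica count.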

Systems using object storage provide the following benefits: data-aware prefetching and caching; intelligent space management in the storage layer; shared access by multiple clients; scalable performance using an offloaded data path; and reliable security.

Usage

Let’s look at some media use cases and see why an OSD is preferred.

Archiving is the easy case: there is substantial agreement within the industry that the two main requirements, scalability and permanence, can best be met by object-based storage systems.

Acquisition can take advantage of object-based storage because the nature of the object is stored in the object metadata. This will ensure that the object is always stored in a manner suited to the application. An essence requiring a continuous data rate of 150 Mb/s will automatically be stored where this data rate can be provided. Yes, there are other ways to do this, but they are all add-ons that introduce administrative as well as performance overhead.
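As an illustration of metadata-driven placement, the sketch below picks a storage pool from the data rate carried in the object's metadata. The pool names, their sustained rates and the "slowest pool that still fits" policy are assumptions made for the example; only the 150 Mb/s requirement comes from the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StoragePool:
    name: str
    sustained_mbit_per_s: float  # rate the pool can deliver continuously (assumed)


@dataclass
class MediaObject:
    object_id: str
    required_mbit_per_s: float  # continuous data rate stored in the object metadata


def place(obj: MediaObject, pools: list) -> Optional[StoragePool]:
    """Return the slowest pool that still meets the object's required rate.

    Choosing the slowest sufficient pool keeps faster pools free for more
    demanding objects. Returns None if no pool is fast enough.
    """
    candidates = [p for p in pools if p.sustained_mbit_per_s >= obj.required_mbit_per_s]
    return min(candidates, key=lambda p: p.sustained_mbit_per_s, default=None)


pools = [
    StoragePool("nearline", 100),
    StoragePool("production", 400),
    StoragePool("finishing", 1600),
]
essence = MediaObject("ingest-0007", required_mbit_per_s=150)
chosen = place(essence, pools)
```

No administrator decides where the essence goes; the requirement travels with the object and the placement follows from it.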

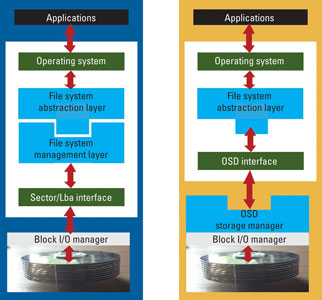

Figure 2. Separating the file system abstraction layer from the storage management component makes many of the problems related to device sharing go away.

Post production requires real-time shared access to the assets. In the OSD model, the protocol is system-agnostic and therefore system-heterogeneous by nature. Since the OSD is the storage device, and the underlying protocol is supported on either a SAN (SCSI) or a LAN (iSCSI), device sharing is simple. (See Figure 2.) Data sharing is accomplished the same way. The objects on an OSD are available to any system that has permission to access them.

Performance

The OSD model is designed to allow performance characteristics of objects to be attributes of the object itself and independent of the OSD where it resides. An HD video file on an OSD may have an attribute that specifies an 80MB/s delivery rate as well as a certain quality of service (i.e. a consistent 80MB/s). Similarly, there could be different attributes for the same object that describe delivery performance for editing rather than playback. In editing mode, the OSD may skip around to many different frames, thus changing the way the OSD does caching and read-ahead. Similarly, for latency and transaction rates, an OSD can manage these more effectively than DAS and SAN because it has implicit and explicit knowledge of the objects it is managing.
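One way to picture performance as an attribute of the object is a small set of delivery profiles stored with it, one per access mode. The Python sketch below is hypothetical throughout: the class and field names are invented for illustration, and the read-ahead depths are assumed values. Only the 80 MB/s rate is taken from the text.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DeliveryProfile:
    """Delivery attributes for one way of using an object (hypothetical fields)."""
    rate_mb_per_s: int       # sustained delivery rate the object asks for
    guaranteed: bool         # True if the rate must be held consistently
    read_ahead_frames: int   # how far the device should prefetch sequentially


@dataclass
class VideoObject:
    object_id: str
    profiles: dict = field(default_factory=dict)  # mode name -> DeliveryProfile

    def profile_for(self, mode: str) -> DeliveryProfile:
        """Return the attributes the OSD should honor for this access mode."""
        return self.profiles[mode]


clip = VideoObject(
    "hd-clip-0042",
    profiles={
        # 80 MB/s comes from the text; the read-ahead depths are assumed values.
        "playback": DeliveryProfile(rate_mb_per_s=80, guaranteed=True, read_ahead_frames=50),
        "editing": DeliveryProfile(rate_mb_per_s=80, guaranteed=False, read_ahead_frames=2),
    },
)

playback = clip.profile_for("playback")
editing = clip.profile_for("editing")
```

A device holding this object could read far ahead during playback and keep prefetching shallow while an editor jumps between frames, without the client having to say so on every request.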

Just to put the performance question to bed, one of the cool things about OSD is how it handles zone bit recording. Because disks spin at a constant rate, the transfer rate more than doubles from short inner tracks to long outer tracks. OSD provides a simple method of offering the fastest part of the disk to the data that needs it most while still making the rest of the disk available for other data. In addition, the inherent drawback/advantage that an object cannot be overwritten makes versioning automatic and provides a consistent data state.
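The versioning remark can be made concrete with a toy write-once store. This is a sketch under simple assumptions (an in-memory dictionary, 1-based version numbers, a hypothetical `WriteOnceStore` class), not a description of how any particular OSD implements it.

```python
class WriteOnceStore:
    """Toy object store in which stored data is never overwritten."""

    def __init__(self):
        self._versions = {}  # object name -> list of immutable payloads

    def put(self, name: str, data: bytes) -> int:
        """Store data as a new version and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(bytes(data))  # append only; earlier versions stay intact
        return len(versions)

    def get(self, name: str, version: int = None) -> bytes:
        """Return the requested version, or the latest one if none is given."""
        versions = self._versions[name]
        return versions[-1] if version is None else versions[version - 1]


store = WriteOnceStore()
v1 = store.put("promo", b"cut A")
v2 = store.put("promo", b"cut B")
latest = store.get("promo")
first = store.get("promo", version=1)
```

Because a "change" is always a new object, a reader holding version 1 keeps seeing a consistent state while version 2 is being written.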

Transcoding and packaging are both compute-bound, highly automated processes. The ease with which OSD provides access to distributed objects can only improve these workflows.

Playout can certainly profit from the abstraction of the storage from the application, allowing for automated staging of items in the playout list with a minimum of overhead. As the location of all assets is automatically tracked, a misapplied file pointer will become a thing of the past.

One of the major hurdles to monetizing existing content is the tremendous amount of storage required to keep that content online. Estimates show that the cost of managing storage resources is at least seven times the cost of the actual hardware over the operational life of the storage subsystems. This is independent of the type of storage (i.e. DAS/SAN/NAS). The illusion that falling disk prices will actually reduce costs in mission-critical applications ignores the cost of keeping the data available for on-demand applications.

Management

Storage resource management has been identified as the most important problem to address in the coming decade. The DAS and SAN architectures rely on external storage resource management that is not always entirely effective and has never been standardized. The NAS model has some management built in, but it too lacks standards. The OSD management model relies on self-managed, policy-driven storage devices that can be centrally managed and locally administered. The execution of the management functions (e.g. backup, restore and mirroring) can be carried out locally by each of the OSDs without having to move data through an external managing device.

DAS was designed for direct attachment to a single system. All the management functions are done from the single system to which these devices are attached. The difficulty arises when more systems attempt to address the same storage. Because the management is distributed among all the systems that the storage devices are attached to, complicated coordination is required, and there is no central management instance.

A SAN system has access to all of the storage devices and thus management can be centralized on any one of the hosts. However, implementing self-management in a heterogeneous environment has proved difficult. NAS devices have more “intelligence” built into them by their very nature (i.e. there is an OS with a file system, a communications stack, etc.). This extra intelligence lends itself to the idea of self-managed storage, making the overall task of managing storage resources somewhat easier. However, this architecture implies increased complexity when more granular performance requirements are to be met or increased performance achieved.

To achieve the requirements for PASS while at the same time reducing total cost of ownership (TCO), the OSD architecture is designed to be self-managed using the intelligence built into each OSD. Devices know how to manage each of several resources individually or through an aggregation of OSDs. These resources include availability of capacity, requested bandwidth, latency requirements per session, IOPS and user-definable PASS requirements.

Finally, OSD defines the concept of “object aggregation” whereby a hierarchy of OSDs can be made to appear as a single larger OSD. The resource management of this large aggregated OSD is handled either by one or more redundant OSDs at the top of the aggregation, or it can be assigned to each of the individual OSDs to achieve maximum resource management flexibility.
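Aggregation of this kind maps naturally onto a composite structure in which a group answers the same questions as a single device. The Python sketch below is a hypothetical model: the class names, the `free_tb` method and the capacities are invented for illustration, and only free capacity is aggregated.

```python
class LeafOSD:
    """A single device reporting its own capacity (hypothetical model)."""

    def __init__(self, name: str, capacity_tb: int, used_tb: int = 0):
        self.name = name
        self.capacity_tb = capacity_tb
        self.used_tb = used_tb

    def free_tb(self) -> int:
        return self.capacity_tb - self.used_tb


class AggregateOSD:
    """A group of OSDs (leaves or other aggregates) presented as one device."""

    def __init__(self, name: str, members: list):
        self.name = name
        self.members = members

    def free_tb(self) -> int:
        # Same method as a leaf, so callers need not know which one they hold.
        return sum(member.free_tb() for member in self.members)


rack_a = AggregateOSD("rack-a", [LeafOSD("a1", 100, 40), LeafOSD("a2", 100, 10)])
rack_b = AggregateOSD("rack-b", [LeafOSD("b1", 200, 50)])
site = AggregateOSD("site", [rack_a, rack_b])

site_free = site.free_tb()
```

A client or management tool can query `site` exactly as it would query one device, while each rack and each leaf still answers for its own resources.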

Don’t object; get objective.

—Christopher Walker is a consulting engineer for Sony DADC Austria.