Understanding Storage Efficiency Metrics

Enterprise-level storage requirements, including how that storage is managed for the broadcast, media and entertainment industries, have evolved from a purely linear approach to content storage into a random, nonlinear and dynamic set of workflow processes that keep changing as technology and service demands expand.

In an era where the financial bottom line drives most decisions, one area that is often misunderstood, and in some cases never addressed, is the efficiency of a data storage system. Understanding the closely coupled parameters (metrics, if you will) that describe that efficiency helps validate choices and justify ongoing expenditures.

IN THE PAST

To place this in perspective: in the 1990s post-production world, as digital replaced analog, owner-operators often found it difficult to justify installing a new digital video router. As the production world would learn, video routers would gradually be supplanted by network routers.

Today network routers are merely components of a much greater entity, networked storage, which has become the central repository and single most-relied-upon system in the production chain.

Operators should have a set of performance metrics by which to judge the effectiveness of their resources: the enterprise data storage systems that make up their operations. Specific measurements focused on the efficiency of each component in the system then make targeted optimization possible.

Storage metrics combine manufacturer specifications with utilization measurements based on the configuration the facility established during storage system design. The system metrics cover both physical hardware (e.g., drives, controllers, servers) and software (e.g., embedded firmware, operating systems and management tools).

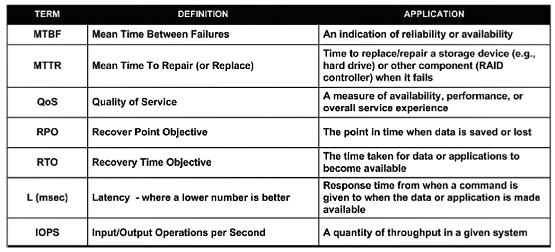

Examples of some common storage metrics are shown in Fig. 1.

Fig. 1: Individual Storage Metrics

Storage metrics include efficiency measurement parameters ranging from the "macro" level, such as the effective use of the power consumed or the volume of cooling required in a full-load state, to the "micro" end, such as component- or device-level performance.
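As a rough illustration of the "macro" end of that range, the sketch below turns full-load power draw into a few efficiency ratios. The field names and the example figures are hypothetical, chosen only for illustration; the one fixed value is the standard conversion of roughly 3.412 BTU/hr of heat per watt of electrical load, used here to estimate cooling demand.

```python
from dataclasses import dataclass

# 1 W of electrical load dissipates roughly 3.412 BTU/hr of heat.
WATTS_TO_BTU_PER_HR = 3.412


@dataclass
class ArrayFigures:
    usable_tb: float        # usable (post-RAID) capacity, terabytes
    peak_iops: float        # IOPS measured at full load
    full_load_watts: float  # power drawn at full load, watts


def macro_efficiency(a: ArrayFigures) -> dict:
    """Return simple power and cooling efficiency ratios for one array."""
    return {
        "tb_per_kw": a.usable_tb / (a.full_load_watts / 1000.0),
        "iops_per_watt": a.peak_iops / a.full_load_watts,
        "cooling_btu_per_hr": a.full_load_watts * WATTS_TO_BTU_PER_HR,
    }


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    array = ArrayFigures(usable_tb=48.0, peak_iops=12000.0, full_load_watts=2400.0)
    for name, value in macro_efficiency(array).items():
        print(f"{name}: {value:,.1f}")
```

Computing the same ratios for a legacy array and a candidate replacement, both measured at full load, gives a like-for-like basis for comparison.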

In a time-perspective metric, the amount of activity or the performance level of a system is compared against both the availability of that system and the space or capacity of the elements in the overall storage system.

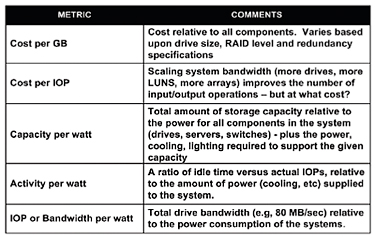

This kind of analysis is used when deciding whether to keep a legacy storage array or replace it. For example, compare performance (access time or activity), physical and data storage space requirements (capacity), and availability (bandwidth) between 300 GB drives in a high-performance Fibre Channel SAN and the same storage capacity built from new 15,000 RPM, 1 TB eSATA drives in a NAS configuration. Metrics can also be compounded (see Fig. 2) to take into account components outside the storage system itself.
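The capacity side of such a comparison can be roughed out with simple arithmetic. The sketch below assumes RAID 6 groups of eight drives (six data, two parity), a 24 TB usable target and hypothetical per-drive IOPS figures; none of these come from the article, and the summed IOPS is only a crude read ceiling that ignores RAID write penalties and controller limits.

```python
from dataclasses import dataclass


@dataclass
class DriveOption:
    label: str
    drive_gb: int        # raw capacity per drive, GB
    iops_per_drive: int  # hypothetical random-read IOPS per drive


def drives_needed(target_gb: int, drive_gb: int, group_size: int = 8, parity: int = 2) -> int:
    """Total drives to reach target_gb usable, built from RAID groups of group_size drives."""
    usable_per_group = (group_size - parity) * drive_gb
    groups = -(-target_gb // usable_per_group)  # ceiling division on integers
    return groups * group_size


def compare(options, target_gb: int):
    """Yield (label, drive count, crude aggregate read-IOPS ceiling) per option."""
    for opt in options:
        n = drives_needed(target_gb, opt.drive_gb)
        # Spindle count x per-drive IOPS; ignores RAID write penalty and controller limits.
        yield opt.label, n, n * opt.iops_per_drive


if __name__ == "__main__":
    legacy = DriveOption("300 GB FC SAN", 300, 180)
    candidate = DriveOption("1 TB eSATA NAS", 1000, 80)
    for label, n, iops in compare([legacy, candidate], target_gb=24000):
        print(f"{label}: {n} drives, ~{iops} read IOPS ceiling")
```

Even with made-up per-drive numbers, the pattern is the useful part: fewer, larger drives save space and power but leave fewer spindles to service random I/O.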

Fig. 2: Compound Metrics

Storage system performance adds another level of measurement. Here users are concerned with the number of input/output operations per second (IOPS); the bandwidth of the system (the number of simultaneous streams at a given data rate); and the system response time, from when a command is issued to when data is available to the application.
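These three compound measures reduce to straightforward arithmetic once raw operation counts and timestamps have been captured. In this sketch the function names, the 20 percent bandwidth headroom and the sample numbers are assumptions for illustration; real inputs would come from whatever monitoring tool the facility uses.

```python
import math
from statistics import mean


def iops(completed_ops: int, elapsed_s: float) -> float:
    """Input/output operations per second over a measurement window."""
    return completed_ops / elapsed_s


def max_streams(sustained_mbps: float, stream_mbps: float, headroom: float = 0.2) -> int:
    """Simultaneous streams at one data rate, keeping a fraction of bandwidth in reserve."""
    usable_mbps = sustained_mbps * (1.0 - headroom)
    return math.floor(usable_mbps / stream_mbps)


def mean_response_ms(samples) -> float:
    """Mean time from command issued to data available, in milliseconds.

    samples: iterable of (issued_s, data_ready_s) timestamp pairs.
    """
    return mean((ready - issued) * 1000.0 for issued, ready in samples)


if __name__ == "__main__":
    # Hypothetical measurements.
    print(iops(60_000, 30.0))          # operations per second over a 30 s window
    print(max_streams(6400.0, 50.0))   # 50 Mb/s streams on a 6.4 Gb/s path
    print(mean_response_ms([(0.0, 0.004), (1.0, 1.006)]))
```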

Additional performance metrics relate to integral components of the storage platform, such as HDD microcode, the RAID controller or an FTP offload engine. Here the measurements include the number of reads and writes across the entire data store; how well the system handles random vs. contiguous sequential reads or writes (important in nonlinear editing vs. transmission playout servers); and the size of the I/O (as in long-form media files vs. clips or essence contained in an MXF wrapper).
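Two of these quantities, the random-versus-sequential mix and the effect of I/O size, can be derived from a simple request trace. The sketch assumes a hypothetical trace format of (offset, length) pairs in issue order; it counts a request as sequential when it starts exactly where the previous one ended, and converts an IOPS rate at a given I/O size into throughput.

```python
def sequential_fraction(trace) -> float:
    """Share of requests that begin exactly where the previous request ended.

    trace: list of (offset_bytes, length_bytes) tuples in issue order.
    """
    if len(trace) < 2:
        return 0.0
    sequential = sum(
        1
        for (prev_off, prev_len), (off, _) in zip(trace, trace[1:])
        if off == prev_off + prev_len
    )
    return sequential / (len(trace) - 1)


def throughput_mib_s(iops: float, io_size_kib: float) -> float:
    """Throughput in MiB/s for a given IOPS rate and I/O size in KiB."""
    return iops * io_size_kib / 1024.0


if __name__ == "__main__":
    # Hypothetical trace: three back-to-back 4 KiB reads, then a jump elsewhere.
    trace = [(0, 4096), (4096, 4096), (8192, 4096), (1_000_000, 4096)]
    print(f"sequential: {sequential_fraction(trace):.0%}")
    # Small clip-sized I/O vs. large long-form I/O at hypothetical IOPS rates.
    print(throughput_mib_s(2000, 4), throughput_mib_s(400, 1024))
```

With these hypothetical rates, 2,000 small 4 KiB operations per second move under 8 MiB/s, while 400 large 1 MiB operations move 400 MiB/s, which is why I/O size matters as much as the raw operation count.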

Many measurement tools, such as operating system performance testers, are available free from third parties, and in-house or vendor-provided tools are often built into server storage arrays. Video servers provide a full gamut of diagnostic monitoring that continually captures data, automatically tracks trends in operating performance, such as failures to complete reads or writes (which incur rewrites or rereads), and notifies operators of potential problems before a failure occurs.
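A trend check of the kind these diagnostics perform can be sketched in a few lines. This version assumes the monitoring tool can export a daily count of rereads and rewrites; the seven-day window, the three-standard-deviation threshold and the one-count floor on the deviation are arbitrary choices for illustration, not settings from any particular product.

```python
from statistics import mean, stdev


def retry_alert(daily_retries, window: int = 7, k: float = 3.0) -> bool:
    """Flag the latest day's reread/rewrite count if it sits well above the recent baseline.

    daily_retries: counts in date order, oldest first. window must be at least 2.
    """
    if len(daily_retries) <= window:
        return False  # not enough history to form a baseline yet
    baseline = daily_retries[-window - 1:-1]
    mu = mean(baseline)
    # Floor the deviation at 1 so a perfectly flat history doesn't alert on a +1 blip.
    sigma = max(stdev(baseline), 1.0)
    return daily_retries[-1] > mu + k * sigma


if __name__ == "__main__":
    history = [2, 3, 2, 4, 3, 2, 3]
    print(retry_alert(history + [3]))   # within normal variation
    print(retry_alert(history + [12]))  # sharp rise worth investigating
```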

EVALUATING PERFORMANCE

When evaluating system performance metrics, users should establish a set of stress tests covering idle-time measurements, average (usual) operations, peak loads, and abnormal conditions such as those that might occur during national election coverage or breaking news events. As a routine practice, log these parameters per activity, and then recheck them on a regular basis. This is not unlike what a good doctor or a high-performance auto repair shop might do.
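One simple way to keep a per-activity log is a plain CSV file with one row per test run. The column names, the four condition labels and the metrics recorded are assumptions made for this sketch; a facility would substitute whatever its own test tools report.

```python
import csv
import io
from datetime import date

CONDITIONS = ("idle", "average", "peak", "abnormal")
FIELDS = ["date", "condition", "iops", "throughput_mb_s", "latency_ms"]


def write_log(out, runs) -> None:
    """Write a header and one row per stress-test run to a text stream."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    for run in runs:
        if run["condition"] not in CONDITIONS:
            raise ValueError(f"unknown test condition: {run['condition']}")
        writer.writerow(run)


if __name__ == "__main__":
    today = date.today().isoformat()
    # Hypothetical measurements from one round of tests.
    runs = [
        {"date": today, "condition": "idle", "iops": 150, "throughput_mb_s": 5, "latency_ms": 1.2},
        {"date": today, "condition": "peak", "iops": 9800, "throughput_mb_s": 610, "latency_ms": 7.9},
    ]
    buf = io.StringIO()  # in practice, e.g. open("storage_baseline.csv", "w", newline="")
    write_log(buf, runs)
    print(buf.getvalue())
```

Keeping the condition labels fixed makes it easy to pull out, say, every "peak" row over the past year when rechecking.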

When updates or significant changes occur (such as a RAID failure), recheck performance and confirm that it is consistent with previous operations.
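Confirming consistency after a change amounts to comparing each metric against its recorded baseline. The 10 percent tolerance, the metric names and the convention that latency should fall while IOPS and throughput should rise are assumptions for this sketch.

```python
# Direction of "good" for each tracked metric (assumed metric names).
HIGHER_IS_BETTER = {"iops": True, "throughput_mb_s": True, "latency_ms": False}


def check_against_baseline(baseline: dict, current: dict, tolerance: float = 0.10) -> dict:
    """Return metrics that moved more than `tolerance` in the bad direction, as percent change."""
    regressions = {}
    for metric, better_high in HIGHER_IS_BETTER.items():
        base, now = baseline[metric], current[metric]
        change = (now - base) / base
        worse = change < -tolerance if better_high else change > tolerance
        if worse:
            regressions[metric] = round(change * 100, 1)
    return regressions


if __name__ == "__main__":
    # Hypothetical before/after figures around a RAID rebuild.
    before = {"iops": 2000, "throughput_mb_s": 400, "latency_ms": 5.0}
    after = {"iops": 1700, "throughput_mb_s": 395, "latency_ms": 6.5}
    print(check_against_baseline(before, after))
```

An empty result means every metric stayed within tolerance of the baseline.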

Poor performance accumulates over time. Bad numbers may not show up for a while, but performance can gradually decrease until users find themselves wondering what happened. Much like the buildup of DLLs or leftover registry entries in older Windows operating systems, the storage system may eventually no longer function as needed, resulting in a total rebuild or system replacement.
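Slow decline of this kind is easier to catch when the periodic rechecks are viewed as a trend rather than one reading at a time. The sketch fits a least-squares line to a series of throughput readings and flags the series if the fitted loss per period exceeds a chosen percentage of the first reading; the 0.5 percent threshold and the sample series are hypothetical.

```python
def ls_slope(values) -> float:
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two readings")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def gradual_decline(values, max_drop_pct_per_period: float = 0.5) -> bool:
    """True if the fitted trend loses more than the given percent of the first reading per period."""
    pct_per_period = ls_slope(values) / values[0] * 100.0
    return pct_per_period < -max_drop_pct_per_period


if __name__ == "__main__":
    # Hypothetical weekly throughput readings, MB/s.
    steady = [400, 401, 399, 400, 400, 400]
    sagging = [400, 396, 392, 388, 384, 380]
    print(gradual_decline(steady), gradual_decline(sagging))
```

In the sagging series each weekly reading is only 1 percent below the last, easy to dismiss individually, but the fitted trend makes the cumulative loss visible.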

Karl Paulsen is a technologist and consultant to the digital media and entertainment industry and recently joined Diversified Systems as a senior engineer. He is a SMPTE Fellow, member of IEEE, SBE Life Member and Certified Professional Broadcast Engineer. Contact him at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.