AI Carves an Easier Path for Media Creators

Tackling the impossible is the goal of artificial intelligence and machine learning

WASHINGTON—Like any other technology, artificial intelligence and machine learning for video production and distribution came about in an effort to build a better mousetrap. Any product that does more with less effort has an advantage over products that don’t make the leap to the latest technology—that’s why self-driving car technology is often in the news.

There is no self-driving product for the television industry… no editing device that will automatically assemble a program, no camera that will point and adjust itself perfectly without human intervention, and no transmitter or distribution chain that will perfectly adjust itself to changing conditions and signal anomalies.

In Post

Those things are coming, however. There is no way to say exactly when, but my bet is that we are closer today to amazing artificial intelligence technology in the television industry than we are to the DTV transition in 2009. (And that seems like just a couple years ago!)

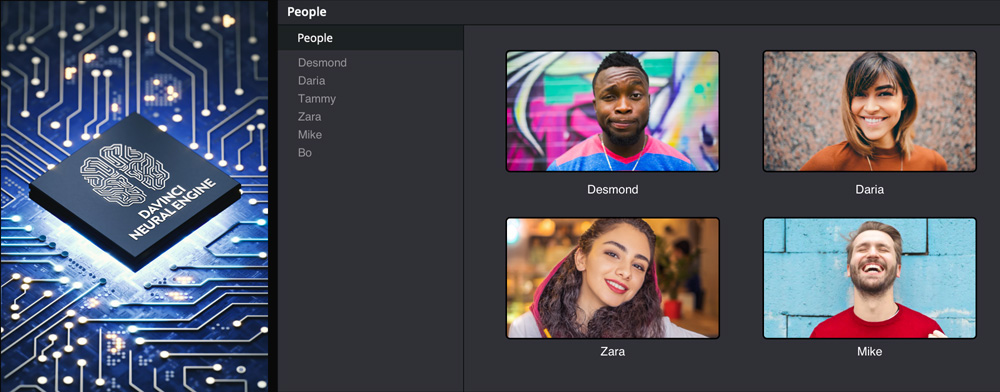

Meanwhile, there are several companies pioneering artificial intelligence and machine learning products that target a range of television applications. One of those is Blackmagic Design, which has AI functions in its DaVinci Resolve editing software.

“DaVinci Resolve Studio’s Magic Mask uses the DaVinci Neural Engine to automatically create masks for an entire person, object or specific feature, such as face or arms,” said Shawn Carlson, product specialist for DaVinci Resolve at Blackmagic Design. “DaVinci Neural Engine functions in Magic Mask offers specific human feature recognition for difficult isolation needs, like hair with bangs and exposed skin on a bearded face.”

Removing an object in a video shot can be difficult, especially if there is a lot of movement. DaVinci Resolve Studio users can remove unwanted objects using a combination of Power Windows, tracking and the object removal plug-in. The DaVinci Neural Engine analyzes the shot using machine learning and AI, and determines how to remove the object from the scene. Users can adjust various settings until the object disappears.

Carlson said that the DaVinci Neural Engine is for visual elements only at this time, and does not have any role with audio.

Streaming Data

The transport of streaming data is another function that benefits from AI and machine learning. With so much data moving so quickly, there is no way that human observers can watch it all and compensate as necessary. It’s the perfect job for artificial intelligence.

Zixi is one company that uses AI to monitor streaming data and provide alerts and adjustments in the event of signal degradation. “We use AI/ML in two areas: video transport and video content analysis,” said Andrew Broadstone, senior director of product management at Zixi. “Low-level protocol measurements, such as round-trip time, network congestion and retransmission rate, are used to determine link quality and to anticipate upcoming signal degradation.”

Much of what Zixi tests is the transport stream quality, but the company’s quality measurements also drill down into the video encoding to ensure image and sound quality are maintained.

“Certain kinds of content analysis are independent of codec,” Broadstone said. “We also use AI and ML to estimate the perceptual quality of live video [VMAF] without a reference, but for this the video must be H.264/AVC transport stream format. In general, our customers overwhelmingly use H.264 since it is the most compatible format across devices.”

Broadstone said that Zixi’s IDP product uses tens of measurements collected every few seconds across all participants in a video workflow to determine what the company calls its “health score.” This health score lets IDP predict signal path quality and degradation.

“Zixi Health Score is the output of multiple models trained using gradient boosting across the entire Zixi data set with many months of data,” he added. “Zixi Health Score therefore is not a simple set of rules. However, it is typical to see the Health Score drop significantly when there is a sudden change in packet round-trip time, or if raw packet loss steadily increases.”
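The two warning patterns Broadstone mentions, a sudden change in round-trip time and steadily rising packet loss, can be illustrated with a few lines of Python. This is only a toy sketch and not Zixi's method: the Health Score comes from trained gradient-boosting models, not fixed rules, and the function names, window sizes and thresholds below are assumptions chosen for illustration.

```python
from statistics import mean


def sudden_rtt_change(rtt_ms, window=5, rel_change=0.5):
    """True if the latest RTT differs from the mean of the previous
    `window` samples by at least `rel_change` (0.5 = 50 percent)."""
    if len(rtt_ms) <= window:
        return False  # not enough history to form a baseline
    baseline = mean(rtt_ms[-window - 1:-1])
    if baseline <= 0:
        return False
    return abs(rtt_ms[-1] - baseline) >= rel_change * baseline


def steadily_increasing(loss_pct, run=4):
    """True if each of the last `run` samples is higher than the one before."""
    if len(loss_pct) < run:
        return False
    recent = loss_pct[-run:]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))


def warning_signs(rtt_ms, loss_pct):
    """Collect the hypothetical warning labels that apply to the latest samples."""
    signs = []
    if sudden_rtt_change(rtt_ms):
        signs.append("sudden RTT change")
    if steadily_increasing(loss_pct):
        signs.append("rising packet loss")
    return signs


if __name__ == "__main__":
    rtt = [20, 21, 19, 20, 22, 60]      # milliseconds, made-up samples
    loss = [0.1, 0.1, 0.2, 0.4, 0.9]    # percent, made-up samples
    print(warning_signs(rtt, loss))
```

A trained model would weigh many such measurements together instead of checking each against a hand-picked threshold, which is the distinction Broadstone draws.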

The aim of Zixi IDP is to anticipate problems and alert operators to the root cause, Broadstone said.

Cloud-Ready Monitoring

Monitoring data streams is also at the forefront of Interra Systems’ ORION, a real-time, software-based, cloud-ready content monitoring system that enables service providers to deliver clean video.

ORION provides real-time monitoring of IP-, SDI- and SDIoIP-based infrastructures. It looks at all aspects of video streams, including QoS, QoE, closed captions and ad-insertion verification, and supports reporting and troubleshooting.

According to Ramandeep Singh Sandhu, senior management staff member at Interra Systems, ORION performs monitoring functions on hundreds of services simultaneously from a single platform, providing an operator with a single point of visibility and access to information such as status, alerts, alarms, visible impairments, error reports and triggered captures.

What are the typical anomalies that trigger an alert in ORION? “A total signal loss condition will typically be preceded by continuity counter errors, substantial reduction in program bitrates, high network jitter and packet drops,” Sandhu said. “QoS/QoE scores computed by ORION will also show significant dips in such cases.”
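Continuity counter errors, the first symptom Sandhu lists, are simple to illustrate. Each MPEG transport stream packet carries a 4-bit counter that should advance by one, wrapping from 15 to 0, for every payload-carrying packet on a given PID. The sketch below counts breaks in that sequence. It is a simplified illustration, not ORION's implementation: it ignores the duplicate-packet and discontinuity-indicator cases the standard allows, and `make_packet` is a hypothetical helper that exists only to build test data.

```python
TS_PACKET_SIZE = 188   # bytes per transport stream packet
SYNC_BYTE = 0x47       # first byte of every packet
NULL_PID = 0x1FFF      # stuffing packets; their counter is not meaningful


def count_continuity_errors(data: bytes) -> int:
    """Count continuity counter breaks per PID in a buffer of TS packets."""
    last_cc = {}  # PID -> last continuity counter seen
    errors = 0
    for offset in range(0, len(data) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        packet = data[offset:offset + TS_PACKET_SIZE]
        if packet[0] != SYNC_BYTE:
            continue  # a real monitor would resynchronize; this sketch skips
        pid = ((packet[1] & 0x1F) << 8) | packet[2]
        has_payload = bool(packet[3] & 0x10)  # adaptation_field_control bit
        cc = packet[3] & 0x0F
        if pid == NULL_PID or not has_payload:
            continue  # the counter only advances on payload-carrying packets
        previous = last_cc.get(pid)
        if previous is not None and cc != (previous + 1) % 16:
            errors += 1
        last_cc[pid] = cc
    return errors


def make_packet(pid: int, cc: int) -> bytes:
    """Hypothetical helper: build a payload-only packet with a zeroed payload."""
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10 | (cc & 0x0F)])
    return header + bytes(TS_PACKET_SIZE - len(header))


if __name__ == "__main__":
    # Counter jumps from 2 to 4 on PID 0x100, so one packet went missing.
    stream = b"".join(make_packet(0x100, cc) for cc in (0, 1, 2, 4))
    print(count_continuity_errors(stream))  # prints 1
```

A rising count of such errors on a service is the kind of early signal that, per Sandhu, tends to precede a total signal loss.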

Identifying On-Screen Objects

As you might imagine, web-streaming specialist Amazon Web Services uses AI/ML for a range of applications. One of them gives customers flexibility and precision when identifying on-screen objects. For example, AWS’s Media2Cloud can use Amazon Rekognition to identify whether an on-screen object is a dog or something else.
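For readers curious what the dog check looks like in code, here is a minimal sketch that calls Rekognition's `detect_labels` operation directly, not through Media2Cloud. The function name `find_label` and the `FakeRekognition` stand-in are assumptions made for illustration; the stand-in returns canned labels so the example runs without an AWS account.

```python
def find_label(rekognition_client, image_bytes, wanted, min_confidence=80.0):
    """Return Rekognition's confidence that `wanted` appears in the image,
    or None if that label is not among the results."""
    response = rekognition_client.detect_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    for label in response.get("Labels", []):
        if label["Name"].lower() == wanted.lower():
            return label["Confidence"]
    return None


class FakeRekognition:
    """Stand-in for the real client, returning canned labels (illustration only)."""

    def detect_labels(self, Image, MinConfidence=0.0):
        canned = [
            {"Name": "Dog", "Confidence": 97.5},
            {"Name": "Grass", "Confidence": 62.0},
        ]
        return {"Labels": [l for l in canned if l["Confidence"] >= MinConfidence]}


if __name__ == "__main__":
    frame = b"placeholder image bytes"  # a real call needs JPEG or PNG data
    print(find_label(FakeRekognition(), frame, "dog"))
```

Against the real service, the client would come from `boto3.client("rekognition")`, and the image bytes would be a JPEG or PNG frame pulled from the video.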

According to Alex Burkleaux and Evan Statton of AWS, Rekognition is constantly adding new names, objects and other features it can detect. For example, if a customer requires identification of specific kinds of dogs, Rekognition’s Custom Labels can be trained to differentiate among images of different kinds of dogs, such as “labrador,” “terrier” or “boxer.”

Of course, recognizing faces is a frequent job for AWS Rekognition, and the faces of many celebrities are already in the database. Rekognition Face Search provides a mechanism for detecting people who are not part of the Celebrity Detection data set.

Where does all this AI power come from? Is it more processor power or better programming?

“They go hand in hand,” Burkleaux and Statton said. “Processing power and data are required to build and train machine-learning models. When you’re using AWS AI Services such as Amazon Rekognition and Amazon Transcribe, this is part handled by the managed service provided by AWS.”

AI/ML products may seem almost magical at times today, but they are only going to get more capable over time. Eventually, we may get to a Siri- or Alexa-like interface where you can simply describe what you want and have the service do the heavy lifting.

For example, you might say something like, “Analyze these six video clips and identify if any of the cars in them are Chevrolets” or “Monitor this data stream and report any conditions that either exceed standard parameters or consistently get close to a fault situation.” Of course, you might have an additional conversation to ensure the AI assistant understands what you are asking—that’s understandable.

It’s hard to say whether that is five or 10 years in the future. Then again, it might be shown at next year’s NAB Show.

You can be sure that change is coming, and that there will be more AI/ML in the future.

Bob Kovacs is the former Technology Editor for TV Tech and editor of Government Video. He is a long-time video engineer and writer, who now works as a video producer for a government agency. In 2020, Kovacs won several awards as the editor and co-producer of the short film "Rendezvous."