Closed Captioning Mandate Spurs Business For Vendors

Broadcasters and video distributors are finding new ways to leverage the financial and operational benefits of supporting viewers with hearing disabilities.

New York—With the deadline for the government mandate to add closed captions to all video content, whether delivered on television or online, now past, TV stations and video distributors have been working hard to comply. The rules apply to HD and SD TV shows, films, commercials, live news broadcasts, and Video on Demand (VOD) libraries. Failure to comply with the new captioning regulations may result in fines, penalties, and/or rejection of a show by a broadcast facility or MVPD operator.

In fact, the FCC has required most television programming to be captioned since 1997. In 2010, this requirement was extended to all programming delivered online that was also being delivered over traditional TV platforms.

The new rules, adopted in 2014, modify these earlier mandates to address closed caption quality. The FCC breaks quality down into the basic components that make captioning understandable and useful to the audience: 1) word accuracy and completeness with respect to the audio program; 2) accuracy of synchronization with the audio program; and 3) placement on the screen to maximize readability and minimize disruption to the visual program.

Bill McLaughlin, Vice President of Product Development at EEG Enterprises, in Farmingdale, N.Y., said the new Report and Order defines the criteria for acceptable closed caption quality in much more detail than any previous document, and includes a detailed set of "Best Practices" to ensure that the highest possible level of captioning is achieved on both live and pre-recorded programming.

Video Distributors Have Options

Suppliers of the required software and hardware interviewed for this article all report increased business due to the new rules, and say their customers are finding new ways to leverage the financial and operational benefits of supporting viewers with hearing disabilities. Most stations follow local regulations on closed captioning as a public service condition of their license and can face sanctions (fines, loss of operating license) for failing to do so.


"Adding closed captioning to programming is not just a regulatory issue; it also can engage and attract new audiences and help monetize content across various platforms," said Mohammad Shihadah, CEO of AppTek, headquartered in McLean, Virginia. The company offers a full range of automated transcription and translation software for closed captioning, subtitling and metadata creation for broadcast and web use, helping stations around the world comply with differing, though similar, government mandates. "Additionally, AppTek's closed captioning creates rich metadata for media monitoring and search engine optimization for archives."

AppTek

One product designed for live closed captioning is AppTek's fully automated Omnifluent Live Closed Captioning Appliance, a hardware unit running Omnifluent Media software. The company said it delivers same-language captions for live content with accuracy and speed that match or exceed those of a human captioner, at a fraction of the cost.

Using Omnifluent Media software, AppTek customers can create fully automated, same-language captions for offline content in 11 languages in near real-time. "AppTek designed this Appliance to reside at the broadcaster's facility and easily integrate with their existing production workflow," Shihadah said. "Through customization for speakers and language, AppTek's Appliance can deliver captions with an average accuracy of 90 percent or higher and an average latency of six seconds."
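AppTek did not say how it measures its "90 percent" accuracy figure. A common convention in speech recognition is word accuracy, defined as 1 minus the word error rate (WER), where WER is the number of substitutions, deletions and insertions needed to turn the caption text into a reference transcript, divided by the number of words in the reference. The Python sketch below assumes that definition and a simplified text normalization (lowercasing and splitting on whitespace); the function name `word_accuracy` is hypothetical, and neither AppTek nor the FCC rules are known to prescribe this exact formula.

```python
def word_accuracy(reference: str, hypothesis: str) -> float:
    """Return 1 - WER using a word-level Levenshtein (edit) distance.

    Assumption: simple normalization (lowercase, whitespace split); real
    caption-quality tools usually also strip punctuation and normalize numbers.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] = edit distance between the first i-1 reference words and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from captions
                curr[j - 1] + 1,     # insertion: extra word in captions
                prev[j - 1] + cost,  # substitution (or exact match when cost is 0)
            )
        prev = curr

    wer = prev[-1] / len(ref)
    # Note: many insertions can push WER above 1, making this value negative.
    return 1.0 - wer
```

For example, comparing the reference "the storm will reach the coast tonight" with the caption "the storm will reach a coast tonight" gives one substitution out of seven reference words, so `word_accuracy` returns 6/7, about 0.857. A word-level score like this says nothing about non-verbal cues, synchronization or placement, which the FCC also counts toward caption quality.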

Indeed, accuracy and speed are critical elements of captioning any event, live or otherwise. So, as a whole, have most stations complied with the new mandates? According to the vendors we spoke to, it's complicated. Doing it right takes planning, time and money.

EEG

"This is a difficult question to answer, because many of the Best Practices are qualitative in nature, and so compliance isn't a simple yes-or-no in all cases," EEG's McLaughlin said. "The FCC Report & Order did not mandate quantitative targets for word accuracy or caption latency, though they did issue a FNPRM (Further Notice of Proposed Rulemaking) indicating that this option was on the table for the future if stakeholders, including consumer advocacy groups, did not believe significant improvements were being made through the existing guidelines."

He said there are definitely some aspects of the mandate that require changes at many stations, particularly those outside of the top 25 markets that use teleprompters/newsroom computers for most of their live captioning. "The new mandate is very specific that all segments of newscasts must be captioned, including weather and field reports, which in most cases are not in the teleprompting system," McLaughlin said. "This is going to require stations to use scarce existing staff to create transcripts and enter them into the system, or to develop relationships with professional captioning companies to get the segments done."

EEG offers cloud computing as part of its captioning and subtitling portfolio. Another important new requirement, McLaughlin said, is that the "accuracy" category defined by the FCC specifically includes captioning of non-verbal audio cues such as sound effects, music, and off-screen speaker identification. This is a big problem for any station attempting to create live captions with operator-less voice recognition systems. Even if such a system meets accuracy targets for spoken words, it won't help at all with describing non-verbal sounds; only a human operator can do that.

"New requirements for latency, accuracy, and caption positioning are going to nudge a lot of stations that haven't yet done so to adopt IP-based live closed captioning connections to their transcribers," McLaughlin said. "Non-IP based systems tend to have problems with one or more of these requirements. With a dial-up audio coupler, the audio quality is not high enough to get optimal transcription accuracy, plus captioners can't see video feedback to check the caption positioning; meanwhile, with a satellite return, the captioning delay is about double what it needs to be due to the compression and delivery time. The modern IP systems like [EEG's] iCap resolve all these problems, and the new FCC rules are going to continue to increase adoption."

EEG's live CC encoders, the HD490 series, include software for connecting to captioners through the iCap network. iCap provides high-quality, low-latency, secure audio feedback to a remote captioner, as well as video feedback to control positioning. iCap also logs all active connections, and has data archive features, which can be an important part of a broadcaster's responsibility to document and address any on-air caption discrepancy, either for technical reasons or due to a human error. iCap is an expandable software system that offers a lot more control over the live captioning process than point-to-point dial-up connections.

For stations that have a new need to caption segments of their news programming that they may have been previously leaving un-captioned because they weren't available in the teleprompter script, EEG offers iCap VC, which is a "re-speaking" voice recognition system that can be used with an iCap-capable caption encoder either remotely or within the plant. iCap VC works with PC-based speech-to-text software to generate real-time transcripts with only minimal user voice training. This, the company said, can save a lot of time over entering transcripts into the teleprompter system by hand.

Avoiding Fines

Stations or program providers that don't comply face fines, but only if a complaint is logged with the FCC. The Commission is responsible for investigating these complaints, and giving the broadcaster a chance to respond with tapes, logs, process documentation, and any other relevant information. There is a basic allowance for "de minimis" (trivial) errors, meaning isolated mistakes that were corrected reasonably quickly and not indicative of a systemic violation of the Best Practice standards. If there is a systemic or egregious problem, fines can result for the broadcaster.

Therefore, vendors say, it's important that broadcasters document what is being done to address each item. This will be very useful in the event of a complaint, for the station to be able to point to specific actions being taken to ensure that the caption quality is as good as it can be.

"It is expected that any violations or complaints regarding captioning will be submitted by viewers to the video programming distributor (VPD), which includes cable operators, broadcasters, satellite distributors and other multi-channel video programming distributors, or directly to the FCC," said John La, Product Manager for Modular Products at Evertz Microsystems. Evertz is based in Burlington, Ontario, Canada. "The FCC will provide the VPD with 30 days to resolve the complaint before imposing penalties."

Evertz Microsystems

Evertz has been providing captioning equipment for over 14 years. Many customers use products such as the 7825CCE-3G and HD9084 captioning encoders as the interface for inserting captions into video for live broadcasting. These encoders provide the monitoring and control mechanisms that let TV stations and VPDs make the adjustments needed to conform to the new FCC mandates.

Functions of the 7825CCE-3G and HD9084 include: switching between live captioning and captioning from a teleprompter; live captioning over a remote IP interface; saving and inserting captions directly to or from a file stored on a local Compact Flash card; moving captions up and down based on user or automation inputs; and on-screen display for real-time decoding of the encoded captions.

I-Yuno

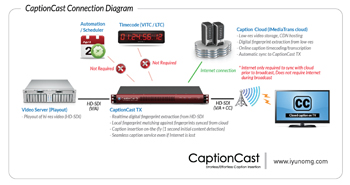

I-Yuno, a South Korean company with new offices in Burbank, Calif., offers a system called CaptionCast that provides subtitling, captioning and dubbing services through a cloud-based service, special software, and on-site support. It recently introduced a fully automated "fingerprint triggered" broadcast caption inserter. The single-channel CaptionCast inserter listens to incoming audio streams and triggers captions using digital audio fingerprints. TV stations have the option to revise inaccurate live captions using I-Yuno's captioning cloud service, iMediaTrans.

I-Yuno's Lee said this reduces the need for extra equipment and manpower while delivering highly accurate captions sooner. The company gives customers access to high-quality captioning and subtitling services, requiring only an on-site server, for $4 per minute. It also offers a free lease of a single-channel CaptionCast inserter to customers who commit to 1,000 hours of closed caption services with I-Yuno over a five-year period.

Planning and Compliance Are Critical

At the end of the day, stations have to spend time researching where their content comes from and whether it properly complies with the captioning rules, because, remember, it's the distributor, not the content creator, that gets fined by the FCC.

"I think stations are still trying to understand the rules," said EEG's McLaughlin. "They are still trying to figure out whether they need to dedicate more staff hours to captioning, and whether they need to upgrade equipment or change workflows. Again, many of the Best Practices are qualitative; the compliance issues are pretty complex and not as binary as I'm sure many of the people responsible for implementing them would like them to be."

Editor's Note: Other companies serving the space include CPC and Grass Valley, which both offer a variety of closed captioning and subtitling software options.