Closed-Captioning Technology Evolves

ALEXANDRIA, VA.—With new FCC rules on closed captioning recently taking effect (see sidebar), the biggest frontier for accessibility is live streaming of content. But tighter budgets, shorter deadlines and issues of accuracy remain hurdles. Fortunately new technologies including machine learning and speech recognition are helping broadcasters and programmers overcome these obstacles.

WHO’S RESPONSIBLE?

In 2016, the FCC split the responsibility for closed captioning between content creators and the multichannel video programming distributors (MVPDs) that distribute the content. Programmers, however, have been seen as primarily responsible, at least up to the point of hand-off to the MVPDs, for ensuring that captioning is accurate, synchronous and complete, and that it is placed so that it does not block other important visual content.

Additionally, the issue of accuracy goes back to 2014, when the FCC issued standards for the accuracy, timing, completeness and placement of captioning. At that time, the FCC determined that captioning must match the program audio (including any slang) and must convey nonverbal information, including speaker identification, descriptions of music and sound effects, and even the attitudes and emotions of the speakers, along with audience reaction.

“In fact, the FCC said that the captions should be as accurate to ‘the best of your ability,’ but there have been no hard or fixed rules on what is actually considered ‘best,’” said Ralph King, president of Comprompter News and Automation of La Crosse, Wis. “The standards that the FCC are requiring are somewhat nebulous.”

There are other gray areas too. MVPDs are, in essence, responsible for ensuring that captions are passed through intact and, more importantly, in a format that can be recovered and displayed by decoders.

Although similar regulations existed before, the difference now, according to Giovanni Galvez, product manager at Telestream in Nevada City, Calif., is that “content providers have less time to ensure that the captioning is where it needs to be.”

Bottom line: as programming moves from on-air to online, captions must be present and accurate.

“If the caption does get lost there isn’t an easy way to get that back, so what we are looking at already is a way to provide captioning at the source and use digital fingerprinting technology that can allow these to be reconnected together,” said Hiren Hindocha, CEO of Digital Nirvana in Fremont, Calif.

CAPTIONING AS META TAG

A major advantage of captioning content is that, once the content is uploaded online, the caption text can help it reach a particular audience.

“Captions are being uploaded to social media, and while it presents a challenge as some outlets like Twitter don’t currently support captions, it is another way for content discovery,” explained Galvez. “It is now seamless, scalable and compatible with various delivery mechanisms, but there is still the 8–12 hour deadline [according to new FCC rules] and there needs to be the horsepower to handle that demand.”

The possibilities this can offer for content providers have yet to be seen.

“Even now, closed captioning is a bit of an afterthought,” said Juan Mario Agudelo, vice president for sales and marketing at the National Captioning Institute, a non-profit advocacy group based in Chantilly, Va. “Right now the biggest problem is that there isn’t a set number of services that everyone can use equally.”

Moreover, the need for live captioning will only increase, especially as much of the demand is now driven by content producers who are working with smaller budgets than traditional broadcasters. This is where the use of technology, notably speech recognition, will likely increase.

“There’s a lot of interest in automatic speech recognition, which operates at a fraction of the cost of live human transcription,” said Bill McLaughlin, vice president of product development for EEG in Farmingdale, N.Y. “This is why EEG has become a direct provider in that market with its Lexi Automatic Captioning service, and why it also has customers utilizing third-party speech engines to feed our caption inserters. This Lexi model further can offer value because it is a pay-as-you-go cloud-hosted service with continuous automatic updates based on global learning, not just a local software package maintained by a single content provider.”

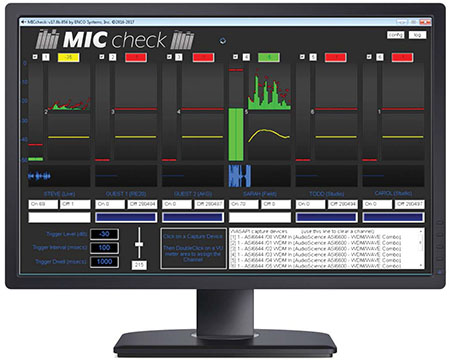

ENCO’s enCaption 3R4 produces real-time captions without any advance preparation work, and without the expense of live captioners or signers.

Telestream captioning solutions include its Vantage Timed Text Flip, Lightspeed Live Capture and Vidchecker, which allow for completely automated conversion and delivery of FCC-compliant captioned video files for the internet. These tools allow broadcasters to capture and begin processing the captioning from live programs during the original broadcast. Telestream software can also export embedded captioning into .mp4 files for OTT delivery.

ENCO, a broadcast systems provider in Southfield, Mich., has also replaced the traditional video stenographer with live automated captioning to serve hearing-impaired audiences at a lower cost.

“We turn audio into captions through live speech detection, and our enCaption3 systems can communicate those captions to any IP or traditional captioning encoder,” said Ken Frommert, general manager for ENCO. “The technical requirements are straightforward for any live captioning environment at the input and output of our device, whether IP, serial, or file-based. This is a concern that we can alleviate for our customers specifically related to how our technology is applied.”

According to Frommert, enCaption 3R4—which debuted at the 2017 NAB Show—produces real-time captions without any advance preparation work, and without the expense of live captioners or signers. It also utilizes ENCO’s latest enhanced speaker-independent deep neural network-based speech recognition engine to closely inspect and transcribe audio in near real time.

LANGUAGE HURDLES

The English language presents numerous challenges, and this is where context is important. Machine learning can aid understanding by predicting which words will be said, and can do so much better than a human stenographer.

“A stenographer has to review a sentence to understand a context, and that makes it easier to understand homonyms—words that sound the same but that have different spellings and meanings,” said Agudelo. “There is still a long way to go with automated speech recognition. It is a process that is in flux and change, and it started about three years ago and will continue to be improved.”

The big leap in technology has actually been something used daily by literally billions of people—namely the smartphone.

“It is improving year on year, and [as] machine learning is coming into play, closed captioning will be closer to fully automated within two to five years,” said Digital Nirvana’s Hindocha. “Statistical data can determine what was said before and along with context, can make this technology much more accurate. The failing is still with proper nouns—such as names and places—but speech recognition tools can be preloaded with those words.”

New FCC CC Rules Tighten Ties Between On-Air, Online

ALEXANDRIA, VA.— Effective July 1, new FCC captioning rules require the captioning of online video clips from live and near-live TV programming. For live content, captioning must be completed within 12 hours from when the clip first airs on TV; for near-live, the allowable delay is 8 hours. This applies to all programming that previously aired on TV with captions, but the FCC regulations specifically exclude consumer-generated media.
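The deadline arithmetic described above can be sketched in a few lines of Python. This is only an illustration: the function names and the `"live"` / `"near-live"` labels are assumptions made for the example, and the 12-hour and 8-hour figures are simply the ones quoted in this sidebar, not a reading of the rule text itself.

```python
from datetime import datetime, timedelta

# Allowable delays, in hours after the clip first airs on TV,
# as summarized in the sidebar (illustrative labels, not FCC terminology).
CAPTION_DELAY_HOURS = {"live": 12, "near-live": 8}


def caption_deadline(aired_at: datetime, program_type: str) -> datetime:
    """Return the latest time by which the online clip should carry captions."""
    if program_type not in CAPTION_DELAY_HOURS:
        raise ValueError(f"unknown program type: {program_type!r}")
    return aired_at + timedelta(hours=CAPTION_DELAY_HOURS[program_type])


def is_overdue(aired_at: datetime, program_type: str, now: datetime) -> bool:
    """True if the captioning window for this clip has already closed."""
    return now > caption_deadline(aired_at, program_type)


if __name__ == "__main__":
    aired = datetime(2017, 7, 1, 18, 0)  # hypothetical 6 p.m. newscast
    print(caption_deadline(aired, "live"))
    print(caption_deadline(aired, "near-live"))
```

A scheduler built on something like this would presumably flag clips as they approach the cutoff rather than after it has passed.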

These new requirements date back to 2012, when the FCC first began requiring that all IP-delivered TV programs be captioned, regardless of whether the content was full-length episodes or short clips. These requirements were phased in based on how the content was created: whether it was pre-recorded, live or near-live.

The regulations were further updated in 2014, when the FCC determined that clips of programming must also be captioned when delivered via IP. There were some exclusions to the rule: third-party platforms including Hulu, YouTube and iTunes were exempt. The clips mandate took effect in January 2016 and applied specifically to direct-lift clips of all pre-recorded content, while in January of this year montage clips were also required to be delivered with captions.

Not all stations have been compliant, however.

“Given how long this has been coming I’m amazed at how so many smaller broadcast stations claimed to be unaware and therefore unprepared for these ‘new’ FCC rules,” said Ralph King, president of Comprompter News and Automation of La Crosse, Wis.

The agenda for the FCC should have been clear enough too.

“In 2014, the FCC set out a three-year agenda requiring all fully captioned traditional broadcast programming to also appear fully captioned when delivered through web and OTT services,” said Bill McLaughlin, vice president of product development for EEG in Farmingdale, N.Y. “The good news is that the technology to meet this latest deadline has been in place with most broadcasters for some time already, since all the infrastructure supporting the conversion of the broadcast captioning to web is already in place.”

Peter Suciu

This is why there will remain a balance that includes both speech recognition tools and stenographers. In some cases, for those with higher budgets and larger audiences, it may pay, at least in the short term, to secure the increased accuracy of a human captioner overseeing the process.

“As organizations grapple with the new mandate, their demand for highly skilled professional captioners and transcribers will most likely rise to meet it,” wrote Heather York, vice president of government affairs for captioning service provider Vitac, in a recent blog on tvtechnology.com. “After all, only humans can balance both the speed and accuracy needed to properly transcribe and sync captions to videos in compliance with the regulations. Along with this rising call for human capital comes a demand for quality workflow automation technology to keep captioners on track, operating at the highest quality, and ensuring timely delivery and coordination between all parties.”

Improved accuracy could also come about with better direct interoperability with newsroom systems, according to ENCO’s Frommert. “This includes interoperable newsroom systems to automatically add proper nouns, such as names and places that would come across inaccurately in less intelligent workflows,” he said. “As we continue to improve the speech recognition engine with subsequent software updates, the already highly accurate output coming from enCaption3 will only get better.”
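As a rough illustration of how a list of names from a newsroom system might be used to clean up a transcript, the sketch below fuzzy-matches each transcribed word against a known-names list using Python's standard `difflib` module. This is an assumption-laden stand-in, not ENCO's method: it corrects words after transcription rather than loading them into a speech engine, and the function name, the `cutoff` value and the sample names are all hypothetical.

```python
import difflib


def correct_proper_nouns(transcript_words, known_names, cutoff=0.8):
    """Replace words that closely resemble a known name with that name.

    transcript_words: list of words as produced by a speech engine.
    known_names: proper nouns supplied by, say, a newsroom rundown (assumed input).
    cutoff: similarity threshold between 0 and 1; higher means stricter matching.
    """
    # Compare in lowercase, but restore the canonical capitalization on a match.
    lookup = {name.lower(): name for name in known_names}
    candidates = list(lookup)
    corrected = []
    for word in transcript_words:
        match = difflib.get_close_matches(word.lower(), candidates, n=1, cutoff=cutoff)
        corrected.append(lookup[match[0]] if match else word)
    return corrected


if __name__ == "__main__":
    words = ["said", "hindoca", "of", "digital", "nirvana"]
    names = ["Hindocha", "Galvez", "Frommert"]
    print(" ".join(correct_proper_nouns(words, names)))
```

A threshold that is too low would start rewriting ordinary words, so any real workflow would need to tune it against actual transcripts.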

This can address issues such as crosstalk, which remains a problem for live stenographers, as a crowded stage can quickly lead to confusion about who is talking and what each individual is saying.

This is where automated tools such as enCaption3 can determine who is speaking by correlating each microphone with a specific speaker, which immediately improves accuracy in live multispeaker productions.
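The general idea of tying a microphone to a speaker can be sketched as follows. The example assumes each speaker has a dedicated microphone and simply labels a short audio window with whoever is on the loudest channel; it is a simplified illustration, not a description of how enCaption3 works, and the function names, threshold and sample values are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of one audio window (0.0 for an empty window)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def active_speaker(window_by_mic, mic_to_speaker, silence_threshold=0.01):
    """Return the speaker assigned to the loudest microphone in this window.

    window_by_mic: dict of mic id -> list of samples for the same time window.
    mic_to_speaker: dict of mic id -> speaker name (assumed one mic per speaker).
    Returns None when every channel is below the silence threshold.
    """
    levels = {mic: rms(samples) for mic, samples in window_by_mic.items()}
    if not levels:
        return None
    loudest = max(levels, key=levels.get)
    if levels[loudest] < silence_threshold:
        return None
    return mic_to_speaker.get(loudest, "Unknown speaker")


if __name__ == "__main__":
    window = {"mic1": [0.5, -0.4, 0.45], "mic2": [0.02, -0.01, 0.0]}
    assignment = {"mic1": "Anchor", "mic2": "Guest"}
    print(active_speaker(window, assignment))
```

Picking only the loudest channel ignores overlapping speech; handling true crosstalk would require labeling more than one channel per window.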

“We’ve seen a lot of progress in the past 10 years since the first voice recognition technology was starting to be adopted, and it has really only been in the past three to four years that it has held its own,” said King. “Today’s technology can handle multiple English accents so it can address one speaker with a Spanish accent and another with say, a French or German accent, and it can handle regional pronunciations too.”

With the increasing use of graphics and split-screens comes more potential for closed captions to block onscreen tickers and other information.

“We think it is important to note this so that the captioning does not cover up the information already on the screen,” added King.

It is just one part of a very complicated set of issues that will need to be addressed in the rapidly changing environment of technology for media accessibility.