Embedded audio

The evolution from analog to digital signal distribution in broadcast television facilities has brought about a number of significant changes in routing and distribution system design. One of the biggest has been in how audio is handled. As recently as five years ago, a state-of-the-art facility would include several layers of audio routing, usually a mix of analog and digital, along with the video signal distribution layer.

With the widespread adoption of embedded audio, these separate audio systems have shrunk to islands in production and some master control areas, while the main video routing system transports audio as embedded audio signals. Eliminating audio routing and distribution equipment along with patch panels, cabling and support gear saves money and reduces complexity. The embedded audio world, however, also presents new challenges that must be addressed if desired flexibility, cost-effectiveness and efficiency are to be achieved.

Challenges

The first problem posed by embedded audio is how to reconfigure the audio channels embedded in the digital signal. This can be as simple as adding a second language track or swapping left and right channels to maintain a consistent channel assignment pattern. In extreme cases, it may be necessary to rearrange channel positions completely. This function, generally known as shuffling, can be handled when material is ingested into the facility, in which case dedicated equipment in the ingest system performs the shuffling.
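To make shuffling concrete, here is a minimal Python sketch that treats a signal's 16 embedded channels as a list of sample blocks and rebuilds them from a routing table. The function name `shuffle` and the mapping format (output position index to input channel index, or `None` for silence) are hypothetical choices for illustration, not the interface of any real shuffler.

```python
from typing import List, Optional, Sequence

NUM_CHANNELS = 16  # HD-SDI embedded audio: 16 channels (eight AES pairs)


def shuffle(channels: Sequence[Sequence[int]],
            mapping: Sequence[Optional[int]]) -> List[List[int]]:
    """Rebuild a 16-channel set so that output position i carries input channel mapping[i].

    A mapping entry of None mutes that position (fills it with zero-valued samples).
    """
    if len(channels) != NUM_CHANNELS or len(mapping) != NUM_CHANNELS:
        raise ValueError("expected one entry per embedded audio channel")
    block_len = len(channels[0])
    shuffled = []
    for source in mapping:
        if source is None:
            shuffled.append([0] * block_len)          # silent position
        else:
            shuffled.append(list(channels[source]))   # copy the chosen input channel
    return shuffled


# Example: channel k carries the constant value k + 1 so the result is easy to read.
chans = [[k + 1] * 4 for k in range(NUM_CHANNELS)]
swapped = shuffle(chans, [1, 0] + list(range(2, NUM_CHANNELS)))
print(swapped[0], swapped[1])  # [2, 2, 2, 2] [1, 1, 1, 1]
```

The mapping in the example swaps left and right on the first AES pair and passes the other 14 channels through unchanged; a second language track would simply be another entry pointing at a different input channel.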

Effective stand-alone audio shufflers are also available, but they may be difficult to incorporate into a large, integrated facility where quick changes to live feeds from news or sports events are common. In a facility like this, the idea of including audio processing within the video router is particularly attractive.

Any manipulation of the content within an SDI data stream first requires that the signal be deserialized and decoded into its component data streams. Some video signal processing equipment already does this, but video routers traditionally do not. Instead, they output data that is a faithful copy of what was received at the input.

Incorporating deserializing and decoding capabilities into a video router is a relatively new idea. It has been made possible by improvements in large-scale field-programmable gate arrays (FPGAs), which have become smaller, more powerful and more energy efficient. Using these components, it is now practical to incorporate signal processing into a video router's circuitry without compromising its operational reliability. (See Figure 1.)

But this functionality comes at a cost, and a literal one: an I/O card with serializing and/or deserializing capabilities is several times more expensive than one that simply passes SDI signals through.

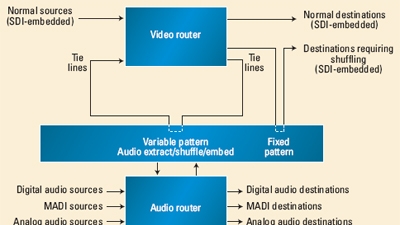

Modern routing switchers are typically based on a structure that divides the circuitry into three parts: an input card that accepts a number of signals; a central crosspoint card, usually with a redundant card in standby; and an output card, which drives a number of output ports. A basic shuffling capability could be implemented on either the input or the output card. Both approaches have their advantages, but the output card option offers more flexibility because it enables signals from the router's other input cards to be brought in and embedded into the output streams. When signals from multiple sources are being combined to create new ones, an audio subrouter must be included in the video router. (See Figure 2.)

Let's consider how large this audio router needs to be. Each HD-SDI input to the video router can carry 16 embedded audio channels (eight AES pairs). In a midsize routing switcher with 128 inputs, this works out to 2,048 individual audio streams, and if the router also has 128 outputs, those outputs present 2,048 audio positions to fill. Because every input must be available to every output without blocking, the internal audio subrouter must be designed with a capacity of at least 2K × 2K. That is as big as some of the largest dedicated audio routers in existence, and it serves only a midsize video router.
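The sizing arithmetic is easy to check. This short Python sketch assumes a square router (128 outputs as well as 128 inputs) and 16 channels per HD-SDI signal; `audio_subrouter_size` is a hypothetical helper name. It also counts the crosspoints a conventional matrix of that size would need, which shows why a crosspoint design becomes unwieldy at this scale.

```python
CHANNELS_PER_HD_SDI = 16  # eight AES pairs per HD-SDI signal


def audio_subrouter_size(video_inputs: int, video_outputs: int,
                         channels: int = CHANNELS_PER_HD_SDI):
    """Return (audio sources, audio destinations) for a fully non-blocking subrouter."""
    return video_inputs * channels, video_outputs * channels


sources, destinations = audio_subrouter_size(128, 128)
print(f"{sources} x {destinations}")              # 2048 x 2048
# A conventional crosspoint matrix needs one crosspoint per source/destination pair:
print(f"{sources * destinations:,} crosspoints")  # 4,194,304 crosspoints
```

Doubling the video router to 256 × 256 doubles each audio dimension to 4,096 and quadruples the crosspoint count.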

Given the large number of audio streams within the router, this function is generally performed by a time-division multiplexing (TDM) system rather than a crosspoint matrix. In a TDM system, each audio sample occupies a time slot within a frame, and each destination reads the slot (or slots) assigned to it, so no physical crosspoint is needed for every source/destination pair. The TDM router can be implemented in an FPGA, reducing the number of components required. A TDM subrouter can also be extended to provide digital signal processing (DSP) functions such as mixing, phase reversal and gain adjustment, giving the router full control over audio signal characteristics.
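The time-slot idea can be sketched in a few lines of Python. This is a simplified software model, assuming that one frame holds one sample from every source slot and that each destination is described by a hypothetical `OutputSlot` record listing the source slots to mix, a linear gain and a polarity flag. A real FPGA implementation works on fixed-point samples in a hardware pipeline; floating point is used here only for readability.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OutputSlot:
    """Hypothetical per-destination settings held by the TDM subrouter."""
    sources: List[int]       # source time slots to read; more than one means a mix
    gain: float = 1.0        # linear gain applied after mixing
    invert: bool = False     # polarity (phase) reversal


def tdm_frame(input_slots: List[float], config: List[OutputSlot]) -> List[float]:
    """Process one sample period of a time-slot-interchange switch.

    input_slots[i] is the sample written into slot i during this frame;
    each destination reads (and optionally sums) only the slots assigned to it.
    """
    outputs = []
    for slot in config:
        value = sum(input_slots[s] for s in slot.sources) * slot.gain
        outputs.append(-value if slot.invert else value)
    return outputs


frame = [1.0, 2.0, 3.0, 4.0]          # one sample from each of four source slots
routes = [
    OutputSlot([2]),                   # plain route: destination 0 <- slot 2
    OutputSlot([0, 1], gain=0.5),      # mix slots 0 and 1 at half level
    OutputSlot([3], invert=True),      # slot 3 with polarity reversed
]
print(tdm_frame(frame, routes))        # [3.0, 1.5, -4.0]
```

In the 128-input example, a frame would hold 2,048 source slots and the table would have one entry per destination slot; changing a route only means rewriting that entry.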

To increase the flexibility of the combined audio/video routing system, it should also be possible to make direct connections both to external audio signals and to the audio signals extracted from the video. Audio input cards can handle this job by presenting their signals to the internal TDM matrix and, if necessary, to the crosspoint matrix as well. Analog-to-digital conversion can also be added to these cards, so that analog inputs are presented as digital streams to either or both of the submatrices. On the output side, audio cards can deliver the audio streams either as AES pairs or as stereo analog pairs for monitoring or for connection to legacy equipment.

Handling MADI (Multichannel Audio Digital Interface) streams the same way simplifies connection to audio mixing consoles and other equipment. It also provides a means of connecting the audio signals within the router to an external audio router, should system requirements exceed the capacity of the internal audio subrouter.

Because a TDM matrix is inherently synchronous, all of its input signals must either be synchronous already or be synchronized within the router. To provide maximum flexibility in handling “wild” (unsynchronized) audio inputs, the synchronization process should include sample-rate conversion, so that digital audio signals from the widest possible range of sources can be brought into the system.
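Sample-rate conversion amounts to estimating the input waveform at the instants defined by the house clock. The Python sketch below shows only that principle, using plain linear interpolation and a fixed, known rate ratio; both are simplifying assumptions, and `resample_linear` is a hypothetical name. A converter for wild inputs must measure the incoming rate continuously and use far better interpolation filters to avoid audible aliasing.

```python
from typing import List


def resample_linear(samples: List[float], src_rate: float, dst_rate: float) -> List[float]:
    """Resample one block from src_rate to dst_rate using linear interpolation.

    Illustration only: broadcast-grade sample-rate converters use band-limited
    (e.g. polyphase FIR) interpolation and continuously track the input clock.
    """
    if not samples:
        return []
    step = src_rate / dst_rate                      # input samples advanced per output sample
    last = len(samples) - 1
    n_out = int(last / step) + 1                    # stay within the input block
    out = []
    for i in range(n_out):
        pos = i * step                              # fractional position in the input
        k = min(int(pos), last)
        frac = pos - k
        nxt = samples[min(k + 1, last)]
        out.append(samples[k] * (1.0 - frac) + nxt * frac)
    return out


print(resample_linear([0.0, 1.0, 2.0, 3.0], 1.0, 2.0))
# [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
```

With `src_rate=44100` and `dst_rate=48000`, for instance, the function produces roughly 48 output samples for every 44.1 input samples, which is the kind of conversion needed to bring a 44.1 kHz source onto a 48 kHz house reference.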

Controlling the combined video/audio router also presents new challenges in the design of user interface devices. Most routing switcher control panels provide basic audio/video breakaway functionality carried over from the days before embedded audio was the norm. Now that each destination has not only a video signal but also 16 audio positions to control, even the most powerful hardware panels may fall short. A more effective approach is a virtual control panel that displays the video signal and its associated audio positions, so that the operator can choose the audio signal to be dropped into each position. A GUI also makes DSP functions and other signal configuration settings easier to control than a traditional hardware control panel does. (See Figure 3.)
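Behind such a panel sits a simple per-destination model: one video source plus 16 independently assignable audio positions. The Python sketch below is a hypothetical data structure, not any vendor's control protocol; `Destination`, `take_with_audio` and `drop_audio` are invented names. It distinguishes an ordinary audio-follows-video take from a channel-level breakaway.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

AUDIO_POSITIONS = 16

# (source input number, channel on that input), or None for an empty position
AudioAssignment = Optional[Tuple[int, int]]


@dataclass
class Destination:
    """Hypothetical model of one router output as a virtual panel might display it."""
    video_source: int
    audio: List[AudioAssignment] = field(
        default_factory=lambda: [None] * AUDIO_POSITIONS)

    def take_with_audio(self, source: int) -> None:
        """Audio-follows-video take: the source's own channels fill the positions in order."""
        self.video_source = source
        self.audio = [(source, ch) for ch in range(AUDIO_POSITIONS)]

    def drop_audio(self, position: int, source: int, channel: int) -> None:
        """Channel-level breakaway: put one channel from any input into one position."""
        self.audio[position] = (source, channel)


out = Destination(video_source=0)
out.take_with_audio(5)        # video and all 16 channels from input 5
out.drop_audio(2, 12, 0)      # position index 2 now carries channel 0 of input 12
print(out.audio[:4])          # [(5, 0), (5, 1), (12, 0), (5, 3)]
```

A hardware panel with a single audio breakaway level cannot express the second operation directly, which is the gap a virtual panel fills.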

Conclusion

While adding audio processing to video routing brings facility-wide advantages, the equipment is undeniably expensive. When deciding how much audio capability a router needs, it is therefore important to analyze requirements carefully and to consider alternatives such as external processing equipment or router re-entry paths. In many cases, it is sufficient to add a relatively small audio-enabled section to the video router and then use the router control system's pathfinding and tie-line management facilities to route audio-embedded signals to their destinations.
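Pathfinding over tie lines is essentially a graph search. The sketch below is a hypothetical Python model, not any router control system's actual logic: router sections are graph nodes, `tie_lines[a][b]` counts the free tie lines from section `a` to section `b`, and a breadth-first search finds the shortest chain of free lines. A signal that needs audio processing is routed from its source section to the audio-enabled section and then on to its destination. For brevity, the sketch does not reserve the tie lines it uses, which a real control system would have to do.

```python
from collections import deque
from typing import Dict, List, Optional

TieLines = Dict[str, Dict[str, int]]  # tie_lines[a][b] = free tie lines from section a to b


def find_path(tie_lines: TieLines, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the shortest chain of free tie lines from start to goal."""
    previous: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path: List[str] = []
            step: Optional[str] = node
            while step is not None:              # walk back to the start
                path.append(step)
                step = previous[step]
            return path[::-1]
        for neighbor, free in tie_lines.get(node, {}).items():
            if free > 0 and neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


def route_via_audio(tie_lines: TieLines, src: str, audio_section: str,
                    dst: str) -> Optional[List[str]]:
    """Route a signal through the audio-enabled section on its way to dst."""
    to_audio = find_path(tie_lines, src, audio_section)
    from_audio = find_path(tie_lines, audio_section, dst)
    if to_audio is None or from_audio is None:
        return None
    return to_audio + from_audio[1:]             # do not list the audio section twice


ties = {
    "studio": {"main": 4},
    "main": {"audio": 2, "studio": 4},
    "audio": {"main": 2},
}
print(route_via_audio(ties, "studio", "audio", "studio"))
# ['studio', 'main', 'audio', 'main', 'studio']
```

Breadth-first search is used here because it returns the path with the fewest tie lines, which keeps scarce re-entry paths free for other signals.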

In summary, it is possible to take cost-effective advantage of the flexibility and operational benefits of embedded audio, but to do so requires careful assessment of need and evaluation of the available technology, as well as solid planning and system design.

Scott Bosen is the director of marketing for Utah Scientific.