Fox Sports, EVS Partner on ‘Xtramotion’ Super Slow-Motion

Cloud virtualization brings super slow-motion within reach for many applications

LOS ANGELES—A new approach to super slow-motion replay deployed to great effect during Fox Sports’ production of the Daytona 500 in February promises to bring this staple of high-end sports coverage within reach for more productions.

What changes the economics of super slow-motion replay is moving the process into the cloud, where the extra frames required are synthesized by interpolating between frames of video shot by conventional cameras at normal frame rates.

The technique, called “Xtramotion,” is the product of a collaboration between Fox Sports and slow-motion replay specialist EVS.

“EVS had this in their back room for quite some time,” says Michael Davies, Fox Sports senior vice president, Field Operations. “When we saw EVS was actually interested in doing this, we partnered up and collaborated to make it a product.”

What’s game-changing about Xtramotion is that it enables producers to generate super slow-motion replays from video shot with any camera, not just high-frame-rate models. For Fox Sports, that means applying the technique to a variety of cameras that never before would have sourced super slow-motion footage, such as in-car cameras for Daytona and pylon cams and referee cams for football.

For producers covering smaller events with slimmer budgets, Xtramotion can make super slow-motion replay a reality without its typical expense.

“Combined with replay, super slow-motion is one of the most compelling storytelling aspects of our generation watching live sports,” says Dave Pinkel, vice president, Key Accounts, at EVS. “Today, the value of Xtramotion is in augmenting and adding details to big events. But tomorrow, it could be the tier two and three market where the value [of Xtramotion] could be interesting.”

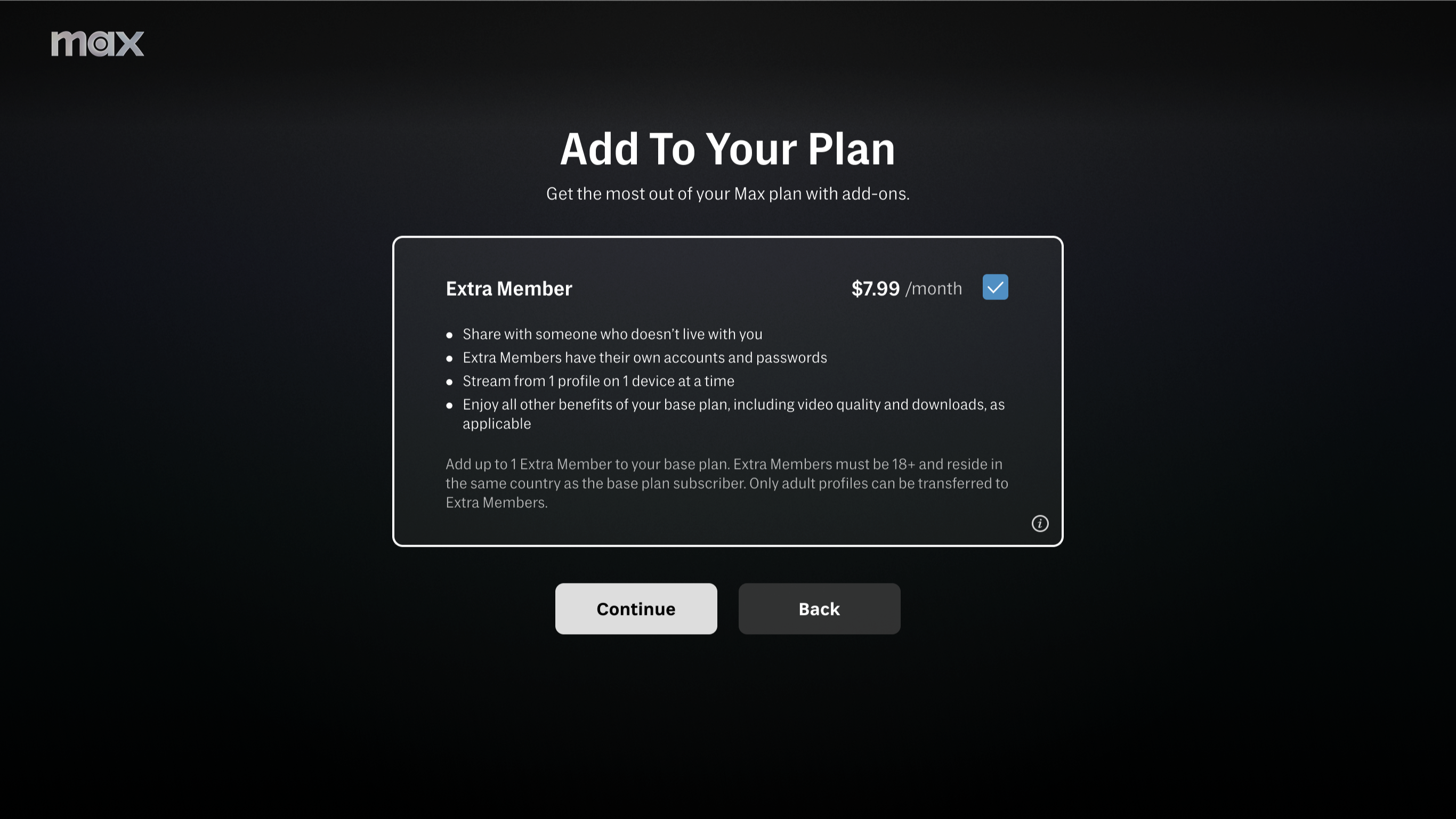

“For less than $1,000 a game, you can have this type of technology available to operators,” he adds.

SLOW-MOTION FROM THE CLOUD

The interpolation that creates Xtramotion frames is done in the cloud, where multiple CPUs and GPUs can be assigned to run the AI- and machine learning-powered process. Because processing power can be scaled up in the cloud, interpolating frames goes quickly.

“It’s just a matter of how many resources that you want to get from whatever cloud service you end up using—and really it can be any of them,” says Davies. “That’s something you actually have control over.”
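To make the idea of interpolating between frames concrete, here is a minimal sketch in Python. It is not EVS’s method: Xtramotion relies on machine learning, while this example uses a plain linear blend between neighboring frames, the simplest possible form of interpolation. The names `blend` and `synthesize_slow_motion`, and the representation of a frame as rows of grayscale pixel values, are assumptions made only for illustration.

```python
from typing import List

# Assumption for illustration: a frame is a grid of grayscale pixel values.
Frame = List[List[float]]


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly mix two same-sized frames; t=0 returns a, t=1 returns b."""
    return [
        [(1.0 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def synthesize_slow_motion(frames: List[Frame], factor: int) -> List[Frame]:
    """Insert factor - 1 blended frames between each pair of source frames.

    Played back at the original frame rate, the result runs roughly
    `factor` times slower than the source footage.
    """
    if factor < 1:
        raise ValueError("factor must be at least 1")
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)  # keep the real frame
        for k in range(1, factor):
            out.append(blend(a, b, k / factor))  # synthetic in-between frame
    if frames:
        out.append(frames[-1])  # the final real frame has no successor
    return out


# Two one-pixel frames stretched by a factor of 4.
source = [[[0.0]], [[4.0]]]
slow = synthesize_slow_motion(source, 4)
print(len(slow))  # 5 frames: 2 real plus 3 synthetic
```

A straight blend like this tends to produce ghosting on fast-moving objects, which is one reason production systems turn to motion-aware or learned interpolation instead.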

More difficult than scaling the processing is dealing with the time it takes to transfer video to the cloud and then back for integration into a production. During a NASCAR race like the Daytona 500, there are breaks in the action, such as an accident and a yellow flag, that offer a pause during which Xtramotion processing and transport can occur and footage can be integrated into the production in a natural manner, says Davies.

However, there may be a technology solution on the horizon that could reduce transport time, making it easier to add Xtramotion clips to other sports. “One of the things we’re thinking about is MEC multi-edge computing. Companies are experimenting with this concept of putting the cloud—like a mini data center—closer to consumers to reduce latency,” says Davies.

Davies envisions spinning up Xtramotion in MEC data centers within relatively close geographical proximity to a sports venue to reduce video transport time and ultimately make integrating the synthetic slow-motion into game production easier and timelier.

BETTER WITH AGE

Applying machine learning to Xtramotion means that interpolation of frames should get better over time as the AI algorithms gain experience creating frames.

“Think about baseball, for instance. I’m particularly interested in giving this a good go in baseball with some of the POV cameras that aren’t typically super slow-motion,” says Davies.

“The computer is going to be getting better at making the ball crisper in super slow-motion replays over time. That’s the machine learning supplying the artificial intelligence with more information to make a better product.”

While Xtramotion continues to gain experience interpolating frames over the next few years, Davies sees AI beginning to pop up elsewhere in sports production. For example, artificial intelligence could be used to suggest various statistical graphics to a coordinator, who ultimately would decide whether or not to use them.

AI also could assist with analysis of plays and other game action, such as baseball pitches, to generate new graphical looks. “I know AI can definitely start learning how to be a relevant supplier of contextual information that can then be turned into graphics,” he says.

But for now, Davies looks forward to leveraging Xtramotion to create striking new looks for game coverage. Already, he’s run archived footage from college football referee cams through Xtramotion and likes what he’s seen.

“Xtramotion makes that video look amazing. I think you’re going to see quite a lot of it this fall.”

Phil Kurz is a contributing editor to TV Tech. He has written about TV and video technology for more than 30 years and served as editor of three leading industry magazines. He earned a Bachelor of Journalism and a Master’s Degree in Journalism from the University of Missouri-Columbia School of Journalism.