‘It All Starts With Public Trust’: Scripps’ New AI VP Discusses Company’s Approach

Kerry Oslund shares his team’s strategies for integrating artificial intelligence

The E.W. Scripps broadcast group recently appointed Kerry Oslund and Christina Hartman to lead the company’s artificial intelligence (AI) strategies. Oslund is the group’s new vice president of AI strategy, and Hartman was named vice president of emerging technology operations. Both positions are new, and both executives will report to Chief Transformation Officer Laura Tomlin.

In addition, Keith St. Peter, the new director of newsroom artificial intelligence, will lead AI strategy for news and report to Hartman.

TV Tech recently sat down with Oslund to discuss Scripps’ approach to AI.

TV Tech: Congratulations, Kerry, on the new appointment. Were you asked, or did you lobby for this position?

Kerry Oslund: Both, actually. I’ve been training for this position for over two years now through self-study and by being an evangelist and advocate for where we are moving right now in our company’s AI journey. So yeah, I raised my hand, jumped up and down, and finally I was given the opportunity, along with a couple of other peers.

TVT: This is the first C-level-type appointment focused on AI leadership that we’ve seen at a station group. What do you hope to accomplish?

KO: This is about everything everywhere all at once, but doing everything everywhere all at once at different speeds with different guardrails. Depending on what you do, your guardrails are probably going to be a little bit different, and the speed at which we attack the opportunity will differ as well. And obviously, the biggest guardrails are going to be around our journalism, our trust and our brand.

TVT: How would you describe how Scripps is using AI right now?

KO: Well, sure, AI is a pretty big, all-encompassing word, like physics, right? What broadcasters haven’t been using is generative AI as part of their everyday business, but that’s changing now. Certainly AI and machine learning have been part of what we’ve been doing for a long time.

Now we’re moving to generative AI, and things are changing. Obviously, these tools are just remarkable, and the speed at which they are emerging makes your head spin.

TVT: A lot of people characterize generative AI as being in its ‘Wild West’ phase. How is Scripps getting out in front of this?

KO: Some would say, ‘Yeah, they’re getting out ahead,’ and others would say we’re way behind, and both would be right, depending on what we’re talking about. Scripps has always been an innovative company, and we certainly pride ourselves on being on the leading edge. When it comes to the responsible use of AI tools, I think you’re going to see us on the leading edge of that as well.

TVT: Does Scripps have a mission statement to describe its approach to AI?

KO: Yes, sort of. We started first with guardrails and guidelines so there wouldn’t be any missteps, so that good people didn’t make bad decisions because the guardrails hadn’t been fully explained.

But it all starts with public trust, and we’re doing nothing to diminish what we have earned over 140 years. That applies to intellectual property, both ours and that of others, and to accuracy; I don’t think you can have public trust without being accurate.

So obviously, there are some challenges, and we are fully behind keeping humans in the loop to make sure the output is accurate, and the humans in the loop are accountable for what happens. Those things are protected; they don’t change. Obviously, we also care deeply about the privacy of our customers and about proprietary information. These are important virtues and values.

Has that manifested itself in a strategy statement yet? No, but I’m working on it. I’ve got a lot of help, and we do have the bones of a strategy shaping up that I think will be pretty easy to understand and will help us move both swiftly and responsibly.

TVT: How are Scripps employees reacting to the company’s approach to AI, especially in terms of job security?

KO: Everybody is super-sensitive to it, but the truth of the matter is that AI is going to affect everybody’s job, whether they lose it or not. Losing a job is pretty extreme, in my mind, but when we bring in tools that will certainly help with speed and quantity, as long as they don’t impact quality, we’re going to free up time. And what are we going to do with that time? We can either use it to create topside opportunities, which is our preference, taking this new time and these new tools to create new products, businesses and services, or we can find efficiencies in other ways.

And that’s the part that scares everybody: we don’t initially think we can create new products, services and revenue streams. We don’t go there automatically; we automatically go to the other extreme. But the bottom line is the bottom line, and we’re responsible to it. It would be super irresponsible not to embrace these tools and see what we can do with them in a way that fits our mission and our brands.

TVT: Do you think broadcasters should work together as an industry in their approach to AI? Or is it really up to each broadcaster?

KO: It doesn’t always have to be broadcasters, right? I mean, sure, we have some of the same challenges, and it makes sense to work together. We’re working together differently and better than we probably have in the past, and there will be opportunities with AI that we can collaborate on.

Beyond my role at Scripps, I am stepping down from the board of Pearl TV (an advocacy group for NextGen TV) to make more time for the ATSC board. AI is now a healthy new part of the discussion at ATSC, where we’re talking about how we can use that standards body to make things easier for creators and manufacturers, especially when we combine artificial intelligence and ATSC 3.0 and all of the magic that is newly enabled. Some of this we could have done a year or two ago, but we couldn’t afford the content expense.

Take translation services, for example. We can do Spanish translation, and we know there’s a large market, which means there’s probably a large financial opportunity. But did we do Mandarin? No. Did we do Italian? No. Did we do Polish? No. From here, though, we can start thinking about that, because that content is now readily available to us through AI translation services. That creates really interesting accessibility opportunities for us and, I believe, new revenue opportunities as well.

TVT: Companies like OpenAI are starting to partner with publishers, including Future US, our parent company. Broadcasters have expressed concerns about not being compensated when their intellectual property is used to train large language models. What’s your response?

KO: That horse has left the barn. The same groups that were asking us to do content deals six to eight months ago now all of a sudden say they don’t need it. Why? Well, I think it’s because they already have it. That’s what I think.

Look, we’ve had great conversations with some of the large players in the AI space, and I do think there is an opportunity for us. With GPT Search, I would assume, they’ve promised that they want a new deal with publishers. We assume that new deal would take the form of remuneration, but we’ll see.

We’ll talk to consortiums when they want to talk, but we want to be good listeners and seek first to understand and then to be understood. I just don’t think you can start with a knee-jerk opinion that our content is everything and they can’t live without us. They’ve done a pretty good job so far, and they can live without us.

So I think we have to be realistic: We’ve got great content, it’s worth a bunch, and I do think we’ll have more opportunities when it comes to search and surfacing fresh content than we perhaps will with surfacing our archives. That said, I do believe there’s a great opportunity to use AI to surface the content in our archives and license it to those who want to use it for whatever purpose, as long as it meets our terms of service. Then we do a handshake and everybody wins.

TVT: Last year the FCC attempted to require that broadcast political ads using some form of AI be labeled as such. Where does Scripps come down on this policy?

KO: I don’t know if we’ve put out a policy or a guideline, but I think clear labeling and transparency are great for trust, and therefore we would be fine with that. It’s something we would do without being forced to, and I think it’s something we will do. When it comes to our local news and the trust we’ve gained over the decades, we’re not ceding that by being stupid with our use of AI. Those guardrails are going to be super high, and we’re going to be super careful.

Now, where it gets fuzzy is with our entertainment products, such as networks like Court TV that do documentaries. In documentaries, particularly in dramatizations where you’re doing graphic recreations, AI does that for us in a better, cheaper way, and it’s clearly labeled. When I just want to watch a TV show, that’s all right. But when I’m watching my local news, my guardrails are different; I have a different expectation of my local news operation than I do of one of our entertainment networks.

TVT: Can you tell us how Scripps is implementing AI within its internal operations?

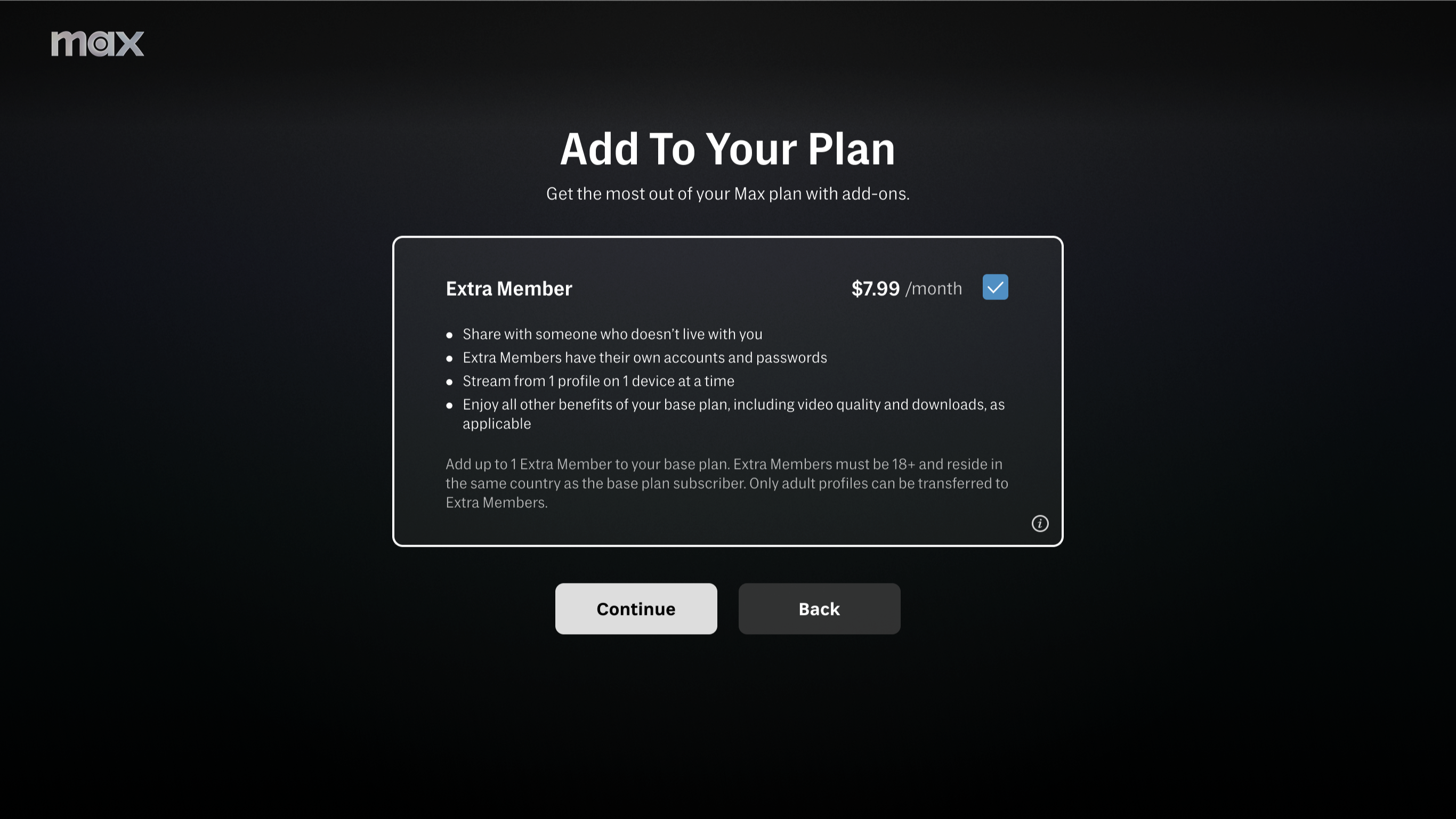

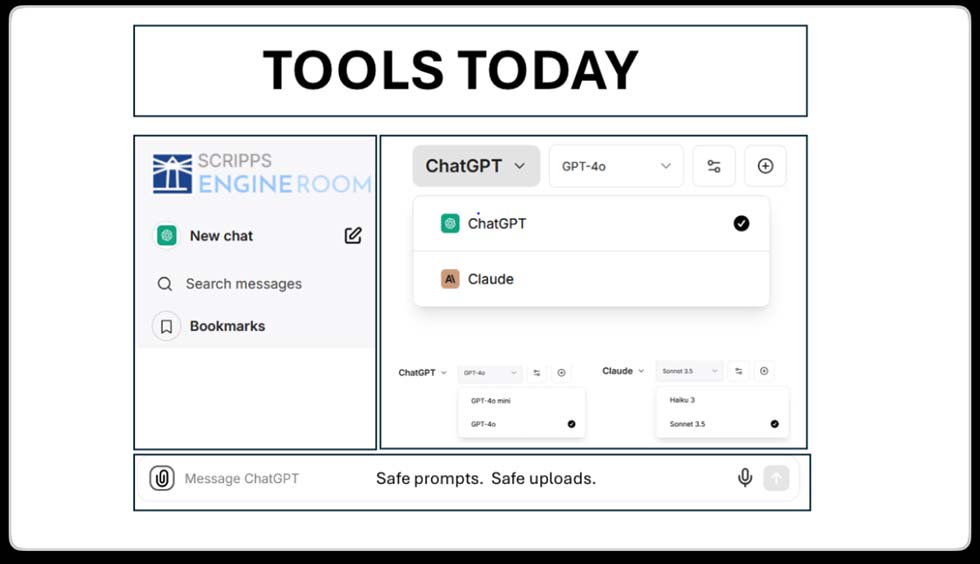

KO: We’ve created something called “The Engine Room,” which basically allows our employees to pick a model, either ChatGPT or Claude, from OpenAI or Anthropic. We’ve created our own environment where an employee can go in, write prompts and get responses, and none of that goes out to the LLMs or is in any way leaked outside our company.

So we can upload our proprietary documents and then cross-examine our own work using the AI tools in a very safe environment. But here’s the good and bad news in a company like ours with over 5,000 employees: Most of them are really curious, and they’ve already been out there banging on the tools, right? So we’d rather give them a safe environment to bang on the tools than have them say, “Oh man, I could take a shortcut here. I could take this proprietary document, run it through my personal version of ChatGPT, get the answers, get my work done, blah, blah, blah.”

This was released to fewer than 200 people over the last few months so we could get a feel for it, and today we’re putting it in the wild for the entire company. It’s a great first move for us, but it’s not our last move in terms of tool sets, because the tool sets we add in 2025 will lean more toward agentic AI: human assistance, pre-prompt-populated zones and even some automation. But right now it’s about getting hands on keyboards. If I could do one thing in my first year on this job, it would be moving those who express skepticism to curiosity. I think that would be a huge win. And by the way, skeptics live everywhere, including in the C-suite.

The one thing I think happens when you give people the tools is that they start experimenting on their own and become better prompt engineers. They have a ‘light-bulb’ moment, and when that light-bulb moment happens, human curiosity is just unleashed.

So the goal is to take skeptics and turn them into novices. Novices then turn into practitioners, and practitioners then turn into experts. And if we have any experts in our company right now, my job will be to re-recruit them, because I’m quite certain we already have too few.

And by the way, we can’t let our customers get out ahead of us when it comes to knowledge, or else we’re going to look like fools. We know they’re using AI, and they know we’re using AI. We’re already using it in sales and production, for example to create spec ads to take out on a first sales call. I don’t think we’re being silly or stupid there; we’re not suggesting that we put days and days or months and months of work into production. We’re basically saying, ‘This is a rough draft of what your message might look like.’

So I think you’ll see a lot more on the sales side. I think every salesperson is going to have an AI sales assistant, and that is going to be a high priority for us. When it comes to our highest-value content and those revenue pillars, you’re probably going to see us focusing on AI first.

Tom has covered the broadcast technology market for the past 25 years, including three years handling member communications for the National Association of Broadcasters followed by a year as editor of Video Technology News and DTV Business executive newsletters for Phillips Publishing. In 1999 he launched digitalbroadcasting.com for internet B2B portal Verticalnet. He is also a charter member of the CTA's Academy of Digital TV Pioneers. Since 2001, he has been editor-in-chief of TV Tech (www.tvtech.com), the leading source of news and information on broadcast and related media technology and is a frequent contributor and moderator to the brand’s Tech Leadership events.