Mass Storage Elements in IT-Class Media Servers

Karl Paulsen

Broadcast central equipment rooms (CERs) now house anywhere from a couple dozen to well over a hundred computers and servers similar to those used in corporate or enterprise data centers. The selection and configuration of these servers depend on the services or activities they support. Such services now encompass databases, media processing, editorial and graphics, command and control, and overall system management.

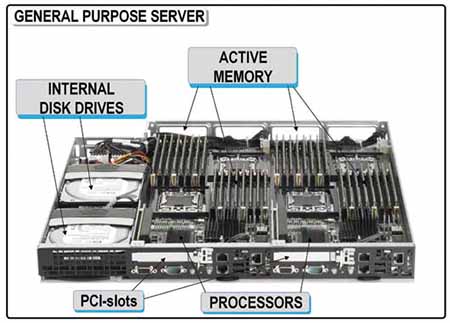

Server configurations range from basic units with a single processor and modest storage through high-performance systems with two (or more) multicore processors, significant active memory capacity (64 GB and beyond) and either integral or separate mass storage systems built on rotational magnetic media, silicon media or both.

Besides core processors and memory components, selecting the proper mass storage elements (i.e., the hard disk or solid-state disk drives) is essential to ensuring each server achieves the performance necessary for the supported applications. In my previous column (“Elements of Enterprise COTS Servers,” Oct. 1, 2014), we looked into two of the three core elements usually found in the enterprise-class “commercial off the shelf” (COTS) servers often deployed in the CER. In review, these elements were characterized by the number and type of core processors, and by the amount and type of main memory (e.g., DRAM, VRAM, burst EDO (BEDO) RAM, SDRAM, etc.).

We’ll now look at the third element—the mass storage components often used to support enterprise-class server systems.

SELECTING MASS STORAGE

In most x86 servers used for mission-critical applications, two basic storage groups are necessary for the systems to function: one is the operating system (OS) storage, and the other is the mass storage that contains the user’s data.

For some of the simpler applications, OS storage might share the same drives as the user data or the data generated by the server’s specific application (e.g., databases, media cache, etc.). However, in high-performance computing (HPC) applications, the OS drive is usually separate from the mass storage used for general user data.


Mass user data storage typically resides on spinning magnetic disk drives. With the growth of solid-state (flash) storage media, also known as solid-state drives or disks (SSDs), OS storage began shifting to SSDs in single or mirrored configurations. Keeping the OS on its own SSD protects the primary operating services against corruption and other issues that might arise in the user’s mass storage data sets.

Putting the OS data onto nonvolatile SSD memory significantly reduces the risk of a disaster should the spinning hard drive become compromised. SSD also allows fast boot times and rapid accessibility to commonly used routines, assuming those applications are stored on the system drive.

Flash drives are also used ahead of magnetic spinning disks as caches (buffers) or for the storage of frequently accessed data and metadata, repetitive applications or other commonly called routines.

For mass storage, users have choices that can fit a broad set of economic and performance criteria. In many cases, the local drive sets resident in the server’s frame will more than suffice for the operational needs found in many media applications. The exceptions are cases that call for shared storage, such as a central SAN or NAS system, where high bandwidth and high availability are required to support multiple craft edit workstations or mass processing with continual reads and writes of hundreds of data sets.

Storage may remain resident in the server for such functions as search engines, data movers, proxy generators, single-thread transcoders or compression format rewrappers. Drive selection for these operations is governed by the volume of storage needed for interim/short-term operations and by the number of threads and processes that run during peak periods. When the operational expectation is for a modest amount of transcoding or proxy generation, the drive specifications (i.e., capacity, format and rotational speed) can be more modest than when large activity volumes occur. Large activity volumes include situations where many simultaneous high-resolution operations also generate H.264 proxies on growing files (live recordings without a finite endpoint), as well as conversion to multiple formats, such as the creation of a ProRes 422 file, an AVC-Intra 100 file and an XDCAM HD 50 file from a single DVCPRO100HD original.
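To get a feel for how peak activity translates into drive throughput, the multi-format example above can be roughed out in a few lines of Python. This is only a sketch under stated assumptions: the DVCPRO HD, AVC-Intra 100 and XDCAM HD 50 rates are the nominal video rates implied by the format names, the ProRes 422 rate is an assumed approximate figure for 1080-line 29.97 fps material, and audio, wrapper overhead and file system behavior are ignored. The function names and the `speed_factor` parameter are hypothetical.

```python
# Nominal video bit rates in megabits per second (Mbps).
SOURCE_MBPS = 100.0  # DVCPRO HD original (read from disk)
OUTPUT_MBPS = {
    "ProRes 422": 147.0,     # ASSUMPTION: approximate rate, varies with raster and frame rate
    "AVC-Intra 100": 100.0,  # nominal rate from the format name
    "XDCAM HD 50": 50.0,     # nominal rate from the format name
}


def job_mbytes_per_s(source_mbps, output_rates_mbps, speed_factor=1.0):
    """Combined read + write load of one transcode job, in megabytes per second.

    speed_factor is how many times faster than real time the job runs
    (1.0 = real time). It is a hypothetical tuning parameter.
    """
    total_mbps = (source_mbps + sum(output_rates_mbps)) * speed_factor
    return total_mbps / 8.0  # 8 bits per byte


def peak_mbytes_per_s(concurrent_jobs, speed_factor=1.0):
    """Aggregate drive load when several identical jobs run at once."""
    per_job = job_mbytes_per_s(SOURCE_MBPS, OUTPUT_MBPS.values(), speed_factor)
    return concurrent_jobs * per_job


one_job = peak_mbytes_per_s(1)   # a single real-time job
ten_jobs = peak_mbytes_per_s(10)  # ten simultaneous real-time jobs
print(f"1 job:   {one_job:.1f} MB/s")
print(f"10 jobs: {ten_jobs:.1f} MB/s")
```

Under these assumptions a single real-time job asks the drives for roughly 50 MB/s of mixed reads and writes, and ten of them about 500 MB/s, which is where local drive sets start to need careful specification.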

SELECTING DRIVE TYPES

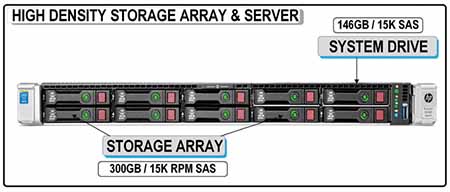

In a mid-scale news or production facility that includes editing, production and media asset management, archive, file-based QC, graphics and transcoding, the CER may need upwards of 120 individual COTS servers, plus 30–35 high-end user workstations for graphics generation, processing and delivery. Storage integrated into these servers may be a mix of 7,200 RPM and 10,000 RPM drives appropriately configured for the OS or the data they process. SQL database servers, Web services and streaming encoders are also found, each function requiring one to many dedicated servers with moderate-capacity drive requirements.

Most enterprise-class servers use Serial Attached SCSI (SAS) drives. Some machines may use SATA drives to save cost or when higher performance isn’t necessary. Today, server drive capacities typically range from 300 GB to 450 GB each. Occasionally there will be 1 TB SAS drives, and in rarer instances you might find 3 TB (7.2K RPM) SAS drives, depending upon the application and usage. As drives increase in capacity, rotational speeds tend to decrease. For example, 300 GB and 450 GB drives will be 10K RPM, while 1 TB and 3 TB drives will be 7.2K RPM.

For each selected HDD, bandwidth (the number of operations the drive can complete per second) and transfer speed (how much data per second can be written to or read from the drive) will in turn impact server performance and potentially affect the applications deployed.

Transfer speed specifications (i.e., for moving data on and off the drive) may be written in terms of megabits per second (Mbps) or megabytes per second (MBps). Be conscious of this specification: the capital “B” vs. lowercase “b” makes a factor-of-eight difference, nearly an order of magnitude. Furthermore, “raw bandwidth” versus true “transfer speeds” can be misleading, as can inconsistencies in how manufacturers state these figures.
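A quick calculation shows why the bits-versus-bytes distinction matters. The sketch below converts between the two units and compares how long the same file would take to move under each reading of a spec sheet number. The quoted figure of 600 and the 50 GB file size are hypothetical values chosen only for illustration, and decimal units (1 GB = 1,000 MB) are assumed.

```python
def mbps_to_mbytes_per_s(megabits_per_second: float) -> float:
    """Convert megabits per second (Mbps) to megabytes per second (MBps)."""
    return megabits_per_second / 8.0  # 8 bits per byte


def transfer_seconds(file_gigabytes: float, rate_mbytes_per_s: float) -> float:
    """Time to move a file at a sustained rate, using decimal units."""
    return file_gigabytes * 1000.0 / rate_mbytes_per_s


QUOTED_RATE = 600.0  # hypothetical spec sheet number with an ambiguous unit
FILE_GB = 50.0       # hypothetical media file size

seconds_if_mbytes = transfer_seconds(FILE_GB, QUOTED_RATE)
seconds_if_mbits = transfer_seconds(FILE_GB, mbps_to_mbytes_per_s(QUOTED_RATE))

print(f"Read as MBps: {seconds_if_mbytes:.0f} s")
print(f"Read as Mbps: {seconds_if_mbits:.0f} s")
```

The same number read as megabits rather than megabytes makes the transfer take eight times longer, which is the gap between a drive that keeps up with an application and one that does not.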

COMPARING SAS VS. SATA

Figure: Three form factors of typical IT-class server components found in broadcast central equipment rooms.

Metrics for SAS and SATA drives can be misleading. SATA stands for Serial Advanced Technology Attachment, and drives in this class are sometimes described as “Nearline SAS” (NL-SAS). For this discussion, we’ll only use the term SATA, which, from the market perspective, remains the largest category in terms of pure volume of storage devices deployed. SATA drives have the lowest cost per gigabyte and the highest storage density when compared with SAS.

Seek times differ dramatically between SAS and SATA, with SAS seek times as little as one-third those of SATA (the lower this number, the better). Another metric for comparing drive performance is cost per IOPS (input/output operations per second), where SAS offers the better value.
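The relationship between seek time, rotational speed and IOPS can be approximated with simple arithmetic. The sketch below uses a common rule of thumb, in which one random I/O costs an average seek plus half a disk revolution, and then divides a drive price by the result. Every input here is a hypothetical placeholder: the seek times merely mirror the one-third ratio mentioned above, and the prices are not market data. Transfer time, caching and command queuing are ignored.

```python
def rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    return 0.5 * 60_000.0 / rpm


def estimated_random_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rule-of-thumb random IOPS: one seek plus half a rotation per operation."""
    return 1000.0 / (avg_seek_ms + rotational_latency_ms(rpm))


def cost_per_iops(drive_price: float, iops: float) -> float:
    """Purchase price divided by estimated IOPS."""
    return drive_price / iops


# HYPOTHETICAL inputs for illustration only.
sas_iops = estimated_random_iops(rpm=10_000, avg_seek_ms=3.0)
sata_iops = estimated_random_iops(rpm=7_200, avg_seek_ms=9.0)

sas_cost = cost_per_iops(drive_price=300.0, iops=sas_iops)    # placeholder price
sata_cost = cost_per_iops(drive_price=200.0, iops=sata_iops)  # placeholder price

print(f"SAS:  {sas_iops:.0f} IOPS, {sas_cost:.2f} per IOPS")
print(f"SATA: {sata_iops:.0f} IOPS, {sata_cost:.2f} per IOPS")
```

With these placeholder inputs the faster drive costs more to buy yet less per operation delivered, which is the sense in which SAS can be the better value for I/O-heavy applications.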

The next drive selection metric is reliability, which is expressed in two terms: mean time between failures (MTBF) and bit error rate (BER). Many external factors affect the overall MTBF of a system, too many to cover here. For the comparison of SAS vs. SATA, SATA (NL-SAS) drives generally have an MTBF of around 1.2 million hours, while SAS drives exhibit an MTBF of around 1.6 million hours, a 28-percent improvement (per 2009 statistics).
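MTBF figures in the millions of hours are easier to reason about when converted to an annualized failure rate. The sketch below does that conversion under two assumptions that are not from the drive makers: drives run continuously (8,760 hours per year) and failures follow a constant-rate (exponential) model. The fleet size of 240 drives is a hypothetical example.

```python
import math

HOURS_PER_YEAR = 8760  # 24 hours x 365 days, assuming continuous operation


def annualized_failure_rate(mtbf_hours: float) -> float:
    """Probability that a drive fails within one year of continuous use,
    assuming a constant failure rate of 1 / MTBF (exponential model)."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def expected_failures_per_year(mtbf_hours: float, drive_count: int) -> float:
    """Expected number of drive failures per year across a fleet."""
    return drive_count * annualized_failure_rate(mtbf_hours)


SATA_MTBF = 1.2e6  # hours, figure cited in the text
SAS_MTBF = 1.6e6   # hours, figure cited in the text
FLEET = 240        # HYPOTHETICAL number of drives in the facility

for name, mtbf in (("SATA", SATA_MTBF), ("SAS", SAS_MTBF)):
    afr = annualized_failure_rate(mtbf)
    failures = expected_failures_per_year(mtbf, FLEET)
    print(f"{name}: AFR {afr:.2%}, about {failures:.1f} failures/year in {FLEET} drives")
```

Under these assumptions the two MTBF figures work out to annualized failure rates of roughly 0.7 percent and 0.5 percent. Across a fleet of a few hundred drives, that is a difference of a fraction of a drive per year, which helps put the cost premium in perspective.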

For bit error rates, SAS drives are 10 times more reliable for read errors: SATA drives exhibit one read error in 10^15 bits read, while SAS drives have a BER of one read error in 10^16 bits read. Of course, other factors such as RAID, flash drive caching, redundancy and replication help to mitigate overall errors, but they operate at the system level and are not part of a drive’s BER description.
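To put those exponents in practical terms, the following sketch estimates the expected number of unrecoverable read errors when a given volume of data is read, treating the BER figures above as simple averages. The 3 TB volume matches the largest drive mentioned earlier; the function name and the use of decimal terabytes are illustrative choices.

```python
TB = 1e12  # bytes in a decimal terabyte


def expected_read_errors(bytes_read: float, bits_per_error: float) -> float:
    """Expected read errors for a given volume, at one error per bits_per_error bits."""
    return bytes_read * 8 / bits_per_error


SATA_BITS_PER_ERROR = 1e15  # one read error per 10^15 bits, as cited in the text
SAS_BITS_PER_ERROR = 1e16   # one read error per 10^16 bits, as cited in the text

full_drive_read = 3 * TB  # reading a 3 TB drive end to end once

sata_errors = expected_read_errors(full_drive_read, SATA_BITS_PER_ERROR)
sas_errors = expected_read_errors(full_drive_read, SAS_BITS_PER_ERROR)

print(f"SATA: {sata_errors:.4f} expected errors per full 3 TB read")
print(f"SAS:  {sas_errors:.4f} expected errors per full 3 TB read")
```

Put another way, one error in 10^15 bits corresponds to about 125 TB read per expected error, and one in 10^16 bits to about 1.25 PB. A server that continually rereads large media files can reach the lower figure sooner than the raw exponent suggests.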

These brief descriptions are by no means the only things to consider when building or specifying commercial IT servers for media applications. The important point is to understand why there are cost differentials among these components and what those differences mean for performance, reliability and usability.

If you’re budget-constrained or can’t provide for duplication (i.e., mirrored systems) as protection, make certain your one (and possibly only) server is top grade and uses the better-performing, longer-life components sized and specified for the application it will serve.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.