Metadata for File-Based Workflows Becomes Core Component

Media management, storage systems and video servers all employ varying degrees of metadata to keep track of the assets stored on their systems. Metadata is essential to the success of file-based workflows in MAM or in the cataloging process for active or archived media content.

In past columns, we’ve identified the various types of structural, descriptive or dynamic metadata from a technical and descriptive viewpoint. We’ve discussed how metadata linked to media assets provides a much richer value to those assets when used as historical information or as keys and pointers for searches.

Metadata on a traditional video server platform is primarily used to support and identify important relationships such as file location, coding types, file numbering schemes and pointers, audio channelization and descriptive nomenclatures that aid in associating the file with external applications. At the disk drive level, metadata provides locators to tracks, sectors or segments on the hard drive, which in turn organizes where the parts and pieces of the actual content are kept.

In the context of media asset management, metadata provides a deep and broadening set of values to the users of those assets. This happens not just at the technical file-system level, but more importantly, at an interactive level, which supports users needing to manipulate or find content for any number of reasons and activities. And because of how the metadata information is conveyed, users don’t realize it’s the metadata that literally provides these virtual links to the descriptive information about those assets.

AN EVOLUTION

Metadata has truly evolved over the past two decades. In the early days of video servers for broadcast play-to-air or transmission purposes, metadata had a distant and sometimes concealed relationship to the outside world. Before the term became what it is today, metadata was used to track the asset for activities such as server record or playout or cataloging.

Typically this early metadata was only passed to outside services, such as broadcast automation or clip-list players. For these functions, most metadata was contained on the video server’s “system disk” (i.e., the “C-drive”). The drive was often mirrored and carried the server’s applications and file system descriptors alongside the metadata itself: the user-generated names or numbers for the video files, plus each clip’s start of message (SOM), end of message (EOM) or duration in either timecode or “counter” time.
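The relationship among SOM, EOM and duration is simple frame arithmetic. The following is a minimal sketch only, assuming non-drop-frame timecode at an integer frame rate (25 fps here) and treating EOM as an exclusive out point; the function names are illustrative and do not come from any particular server's software.

```python
def timecode_to_frames(tc: str, fps: int = 25) -> int:
    """Convert non-drop-frame HH:MM:SS:FF timecode to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frames_to_timecode(total: int, fps: int = 25) -> str:
    """Convert a frame count back to HH:MM:SS:FF."""
    seconds, frames = divmod(total, fps)
    minutes, seconds = divmod(seconds, 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def clip_duration(som: str, eom: str, fps: int = 25) -> str:
    """Duration = EOM - SOM, with EOM assumed to be an exclusive out point."""
    length = timecode_to_frames(eom, fps) - timecode_to_frames(som, fps)
    if length < 0:
        raise ValueError("EOM precedes SOM")
    return frames_to_timecode(length, fps)
```

Under these assumptions, a clip with an SOM of 01:00:00:00 and an EOM of 01:00:30:12 has a duration of 00:00:30:12. Drop-frame timecode, as used with 29.97 fps material, needs additional handling that this sketch omits.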

Today, metadata—traditionally thought of as “the bits about the bits”—takes on dimensions that seem unbounded. It no longer references just the files on the video server. It is seldom carried on the system disk unless the platform is relatively small or used in clip-player-only functionality.

A CORE COMPONENT

Instead, in the world of file-based workflows, metadata is frequently managed on a discrete set of protected or clustered servers, and it has increasingly become a core component of asset management and IT-based “video” server architectures.

Metadata systems are essentially databases that tie information about media-centric files either to a closed system (for example, only the video server itself) or, in most cases, to a much broader asset management system. In a simple, somewhat closed video-server environment, metadata is structured to identify clip numbers, SOM/EOM, timecode or duration, and creation or modification data.

In this model, metadata links the file system (e.g., a file allocation table record set) to human-readable text fields that extend pertinent information about the files for use in location, search and the like.
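As a rough illustration of this closed-system model, the sketch below pairs each clip's storage location with a few human-readable fields and supports lookup and text search. The class names, field names and in-memory dictionary are all assumptions made for the example; a real video server would keep these records in its own database.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class ClipRecord:
    """One metadata record for a clip (hypothetical field set)."""
    clip_id: str            # user-generated clip name or number
    storage_path: str       # where the file system keeps the essence
    som_frames: int         # start of message, as a frame count
    duration_frames: int    # clip length in frames
    created: datetime
    description: str = ""
    keywords: list[str] = field(default_factory=list)


class ClipCatalog:
    """Links file locations to human-readable text for location and search."""

    def __init__(self) -> None:
        self._records: dict[str, ClipRecord] = {}

    def add(self, record: ClipRecord) -> None:
        self._records[record.clip_id] = record

    def locate(self, clip_id: str) -> str:
        """Return the storage location for a clip ID."""
        return self._records[clip_id].storage_path

    def search(self, term: str) -> list[str]:
        """Return sorted clip IDs whose description or keywords contain term."""
        needle = term.lower()
        return sorted(
            rec.clip_id
            for rec in self._records.values()
            if needle in rec.description.lower()
            or any(needle in kw.lower() for kw in rec.keywords)
        )
```

A playout application would call `locate()` with a clip ID, while an operator looking for content would use `search()` against the descriptive fields; both paths reach the same file through its metadata record.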

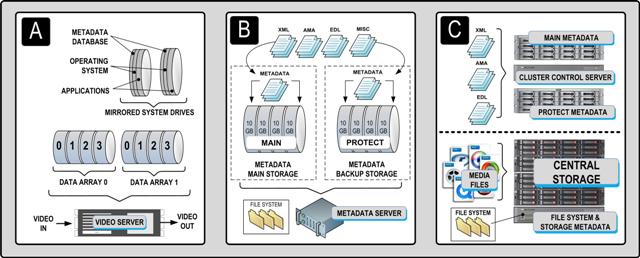

Metadata that is stored in larger, more complex databases is tailored to support a multitude of activities on various systems. It will be structured to support the closed activities within the MAM and other external activities associated with peripheral components. For example, edit decision lists (EDLs) in a production asset management (PAM) system provide links to records that describe the in-out points, element descriptions, ownership or control of the asset and hundreds of other data sets used in the post-production process. Fig. 1 shows three scales of metadata server architecture, ranging from simple through complex, enterprise-class systems.

Fig. 1: Examples of metadata architecture. Section A shows a simplified, traditional video or clip server where a mirrored system disk contains the file system and operating system, applications and metadata, accompanied by a mirrored RAID storage system. Section B shows a dedicated metadata server, with main and protected storage, contained in a single chassis. Section C shows an enterprise-class media asset management system’s clustered control server (databases including metadata) and mirrored storage in the top of the diagram; and a high-performance central storage system with its integral file system and storage metadata server at the bottom.

In a PAM/MAM, additional text fields used to describe the content of the essence are layered into the metadata databases. As child assets are created during production workflows, the child metadata remains associated with the parent asset’s metadata sets, so pertinent information about the asset is retained throughout the asset’s entire media life cycle.

Even if the file is transcoded, archived, used in another production activity, etc., the information about that asset is preserved through the database links originally given to the asset when it was created or ingested into the system.
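One way to picture this parent-child association is a record that stores only what changed for the child and resolves everything else through its link to the parent. This is a sketch under that assumption, not a description of how any specific PAM or MAM product stores lineage; the `Asset` class and its method names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Asset:
    """An asset whose metadata falls back to its parent's (hypothetical model)."""
    asset_id: str
    metadata: dict[str, str] = field(default_factory=dict)
    parent: Optional[Asset] = None

    def derive(self, asset_id: str, **overrides: str) -> Asset:
        """Create a child asset (e.g., a transcode) linked to this one."""
        return Asset(asset_id, dict(overrides), parent=self)

    def resolve(self) -> dict[str, str]:
        """Merge metadata up the chain; the child's own values take precedence."""
        inherited = self.parent.resolve() if self.parent else {}
        return {**inherited, **self.metadata}

    def lineage(self) -> list[str]:
        """Return asset IDs from this asset back to the original parent."""
        chain = []
        node: Optional[Asset] = self
        while node is not None:
            chain.append(node.asset_id)
            node = node.parent
        return chain
```

For example, a proxy derived from a master with only a new `format` value still resolves the master's title and rights information, and its lineage leads back to the asset that was originally ingested.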

One trend in asset management is capturing relevant metadata and then preserving it directly in the compressed bit stream, in the baseband SDI video (in VANC, the vertical ancillary data space) or in a real-time stream. Given the power and capabilities of modern databases and broadband interconnectivity, it is indeed possible that all of an asset’s metadata sets could be kept in the cloud and linked to the asset through an encrypted key, so that the metadata could be recovered anywhere, regardless of where the actual media was created, used, modified, displayed or stored.

For the future, system architectures must address the creation, retention and manipulation of metadata with as much integrity and protection as the video asset itself.

Karl Paulsen (CPBE) is a SMPTE Fellow and chief technologist at Diversified Systems. Read more about other storage topics in his latest book, “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.