QoS vs. QoE

Since the beginning of television broadcasting, engineers have been obsessed with improving quality. One does not have to look hard to find a long list of technological advancements, all focused on improving the quality of images delivered to the home. Early on, many measurements focused on legal aspects of transmitted signals: maximum white level, sync amplitude and horizontal blanking width, for those of you who remember all the fuss. These measurements not only ensured that the emitted signal was legal, they also helped to ensure that viewers received good pictures and sound.

Quality of Service

As broadcasters began to interact with satellite and terrestrial transport vendors, we began to look for tools that would allow us to set measurable criteria for the performance of the video links. Specifically, we were looking to establish criteria that could be tied to a contract for carriage. These criteria were collectively referred to as Quality of Service or QoS.

Over time, QoS has evolved significantly, especially in relation to the carriage of professional video over IP networks. These days, QoS measurements might include things like IP loss ratio and cumulative jitter. (For more information on QoS in media applications, I would recommend a book by Al Kovalick called Video Systems in an IT Environment, published by Focal Press.) QoS is a valuable tool to assess whether a system meets pre-established technical criteria. Most transport contracts these days contain QoS provisions.

But QoS has a significant problem: it does not take into account the interaction between the technology and the viewer. For example, I have seen demonstrations where, after MPEG compression, a particular scene had a good Peak Signal-to-Noise Ratio (PSNR) score, yet the scene was obviously and seriously degraded when viewed.
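To make the PSNR point concrete, here is a minimal Python sketch. It is not the demonstration described above; the 16-pixel "frames" and the function name `psnr` are hypothetical, chosen only to show that PSNR depends on the total squared error and not on how that error is distributed across the picture.

```python
import math

def psnr(reference, degraded, peak=255):
    """PSNR in dB between two equal-length sequences of pixel values."""
    if len(reference) != len(degraded):
        raise ValueError("frames must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - d) ** 2 for r, d in zip(reference, degraded)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(peak ** 2 / mse)

# Hypothetical flat gray frame and two degraded versions of it.
reference = [128] * 16
spread = [130] * 16               # every pixel off by 2 (squared error 4 each)
localized = [128] * 15 + [136]    # one pixel off by 8 (squared error 64)

print(psnr(reference, spread))
print(psnr(reference, localized))
```

Both degraded frames have the same mean squared error and therefore the same PSNR. The score cannot distinguish between them, even though a small error spread everywhere and a larger error concentrated in one spot may look quite different to a viewer.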

QoS also does not take into account other factors that might affect the viewing experience, such as large audio level variations between program audio and commercials. So while QoS is an effective tool for assessing technical performance, broadcasters realized that another tool was needed to assess how consumers were reacting to the total viewing experience.

Not long ago, a group of industry experts focused on the way video and audio content interact with the transport medium, e.g. an IP network. The Video Services Forum created a Quality of Experience Metrics Activity group to identify and describe metrics required to accurately characterize professional video and audio quality for streaming video transport over IP.

Some of the objectives of the group were to encourage development of test devices that could measure the selected metrics, to speed troubleshooting of problems affecting video quality, and to facilitate problem resolution between customers and service providers by defining standard metrics that could be monitored at the demarcation point. The group also tackled the challenging task of creating standard metrics that work well in correlating network impairments with the visual experience for different codecs.

Importantly, the group did not tackle the issue of unambiguously defining Quality of Experience (QoE) or quality, but instead focused on identifying a set of metrics that contribute to the quality of professional video transported over IP networks. Also, the work did not identify metrics that would require complete decoding of the video/audio bit streams since the goal was to provide monitoring that could be used as the signals were in flight, meaning quality could be predicted at a number of places along the transmission path.

Specifically, the metrics that were selected were chosen because they provide assistance in monitoring, troubleshooting, verifying delivered service statistics, designing new equipment and ultimately, improving the overall QoE for end users.

QoE metrics

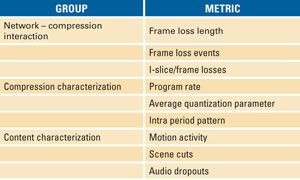

Several different metrics were identified, falling into three groups. The first group consists of metrics that describe the relationship between transport losses and the impact of those losses on video/audio payloads. The second group consists of metrics that characterize the compression used. The third group helps to characterize the content.

It is important to understand why different groups were needed. In the first case, the impact of a bit error on the observed video differs greatly depending on whether the loss affects an intra- or inter-predicted macroblock in an MPEG-compressed stream or a B-frame, whose loss might have a much less observable effect. In the second case, it is important to know the quantization and video bit rate to understand the potential impact of errors on video and audio. In the third case, we know that high-motion content is much more stressful on compression systems. Identifying this content is important when studying the impact of transmission errors on a system that is already stressed to the limit by high-motion content.

One thing to remember is that the QoE metrics described in this article were designed to be used for analyzing professional video transported over private IP networks; this is a far different application from transporting consumer video over the public Internet. The group also focused on the use of private networks to transmit video over cable systems to the home. The group determined that, except in extreme cases such as breaking news, professional video is almost always transmitted over private IP networks, so this constraint seemed reasonable. The group also looked at a particular protocol stack, basically consisting of either MPEG-2 or H.264 compressed video transported over UDP and IP.

Table 1. Shown here are representative metrics from the VSF report.

Another important consideration in the development of these metrics is that many of them were designed to be measured over time. A metric may be useful when monitored instantaneously, but it may also convey important trend information when it is sampled repeatedly and the results are compared. Trend information may be used to identify problems before they significantly impact video/audio transmission.
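As a rough illustration of trend monitoring, the sketch below fits a least-squares line to repeated samples of a metric and reports its slope. The function name `trend_slope` and the hourly sample values are hypothetical, and the VSF report does not prescribe this (or any particular) trending method; it is simply one common way to turn repeated samples into a trend figure.

```python
def trend_slope(samples):
    """Least-squares slope of samples taken at equal intervals.

    The result is in metric units per sampling interval; a positive
    value means the metric is rising over the window.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    mean_x = (n - 1) / 2          # sample indices are 0, 1, ..., n-1
    mean_y = sum(samples) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den

# Hypothetical counts of loss events in six consecutive hourly intervals.
hourly_loss_events = [0, 1, 1, 2, 4, 5]
print(trend_slope(hourly_loss_events))
```

No single hourly sample here is alarming on its own, but a steadily positive slope could prompt an operator to investigate the link before losses become visible on air.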

A sample of the metrics defined in the VSF report is shown in Table 1. Note that this is not an exhaustive list of metrics contained in the report.

A closer look

There is not enough space to cover all of these metrics in detail, but take frame loss length as an example. The report defines loss length as the maximum number of consecutive slices/frames lost in a single loss event in a measurement interval. The report goes on to state that the measurement is important because IP losses typically happen in bursts. Together with the number of loss events, loss length is a major factor in determining the severity of a bursty loss. The longer the loss, the more it will affect the quality of decoded video.
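The definition above can be sketched in a few lines of Python. This assumes a monitoring device has already decided, for each frame in the measurement interval, whether it arrived; the function name `loss_stats` and the sample interval are hypothetical and are not taken from the VSF report.

```python
def loss_stats(received):
    """Return (loss_events, max_loss_length) for one measurement interval.

    `received` holds one boolean per frame: True if the frame arrived,
    False if it was lost. A loss event is a run of consecutive lost frames.
    """
    events = 0
    longest = 0
    run = 0  # length of the loss run currently in progress
    for ok in received:
        if ok:
            run = 0
        else:
            if run == 0:
                events += 1  # a new loss event starts here
            run += 1
            longest = max(longest, run)
    return events, longest

# Hypothetical interval: losses of 2, 3 and 1 frames.
interval = [True, False, False, True, True, False, False, False, True, False]
print(loss_stats(interval))
```

Reporting both numbers together reflects the point made in the report: six lost frames spread over three short events and six lost in one burst are different impairments, even though the raw loss count is the same.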

Let’s look at another metric: motion activity. Motion activity is the average length of motion vectors in a measurement interval. It matters because the amount of motion in a video determines its encoding complexity to a large degree. Video with a lot of motion is generally more difficult to encode than content with low motion.
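Taking "average length of motion vectors" literally, a minimal sketch might look like the following. It assumes the motion vectors have already been extracted from the bit stream as (dx, dy) pairs and uses Euclidean length; the function name `motion_activity`, the vector units and the sample values are assumptions, and the report may define the details differently.

```python
import math

def motion_activity(motion_vectors):
    """Average Euclidean length of (dx, dy) motion vectors in an interval."""
    if not motion_vectors:
        return 0.0  # no vectors observed, treat as no motion
    total = sum(math.hypot(dx, dy) for dx, dy in motion_vectors)
    return total / len(motion_vectors)

# Hypothetical vectors collected over one measurement interval.
vectors = [(3, 4), (0, 0), (6, 8)]
print(motion_activity(vectors))
```

Because motion vectors are carried in the compressed stream itself, a figure like this can in principle be gathered without fully decoding the pictures, which fits the group's goal of monitoring signals in flight.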

Motion activity can help in estimating the base quality of the video stream as long as network losses are not present since, generally speaking, the higher the motion, the more difficult the content is to encode, and the more observable any compression artifacts are. The presence of network losses only makes a bad situation worse, and a high loss rate combined with a high motion activity metric is predictive of a lower QoE.

Perception matters

I should note that much of the material for this article came from the VSF report, and is the work of Pierre Costa, Stephan Winkler and other members of the Activity Group.

QoE is a topic that should be studied by broadcast engineers. While QoS is important, we should remember that perception matters, and that gets to the heart of QoE. These metrics try to quantify the impact of measurable impairments on the perceived quality of video and audio delivered to the end viewer.

—Brad Gilmer is executive director of the Video Services Forum, executive director of the Advanced Media Workflow Association and president of Gilmer & Associates.