Seeking Universal Knowledge Through Archiving

Data is archived on custom-designed Petabox servers, proprietary systems built by the Internet Archive staff.

SAN FRANCISCO – If there was ever a scenario that would put the demands of long-term storage to the test, it’s this one.

There’s an enterprising, determined individual whose goal seems almost overwhelming: collect every piece of text, music and video that has been produced by the human race and gather it all in an enormous archive for the world to peruse.

This grand venture has been spearheaded by the Internet Archive, a nonprofit organization based in this tech-savvy city that has spent the last 15 years digitizing millions of books and collecting data from thousands of websites, attempting to capture everything published on every Web page over the last 25 years – as well as the entirety of the written word in the thousands of years that mankind has been putting pen to paper.

OVERWHELMING GOAL

Insurmountable as it may seem, the goal is twofold: to provide universal access to researchers and give regular citizens unfettered access to archived information on everything from the current elections to the long-ago political banterings of ancient Greece.

“We want to provide universal access to all knowledge,” said founder Brewster Kahle, stating an overwhelming goal with such earnest alacrity that the idea begins to seem quite feasible.

His nonprofit library was founded in 1996, when “we started with Web pages and books and television, and then moved on to amass a large audio and moving images collection.” It was a cumbersome and solitary process in the beginning, with slow digitization of books and the painstaking harvesting of websites.

Now the Internet Archive has built itself into a staff of 150, with the ability to digitize up to 1,000 books a day at 32 scanning centers in eight countries. The organization has also linked hands with the biggest archive and research organizations in the nation, including the Library of Congress and the National Archives and Records Administration. Outside individuals upload clips and documents as well, at www.archive.org and to the video portion of the archive at www.archive.org/tv.

“The charge at our organization [the National Archives] is to preserve all the permanent valuable records of the three branches of the government for the life of the Republic,” said Bob Spangler, acting director of the electronic records section in Research Services at the National Archives. “The Internet Archive was the only organization that was capable of doing it the way we needed it to be done – meaning they understand the intricacies of formats on the Web.

“One thing that’s really important to us is knowing how to harvest and gather information into a format that is correct for archiving,” Spangler said.

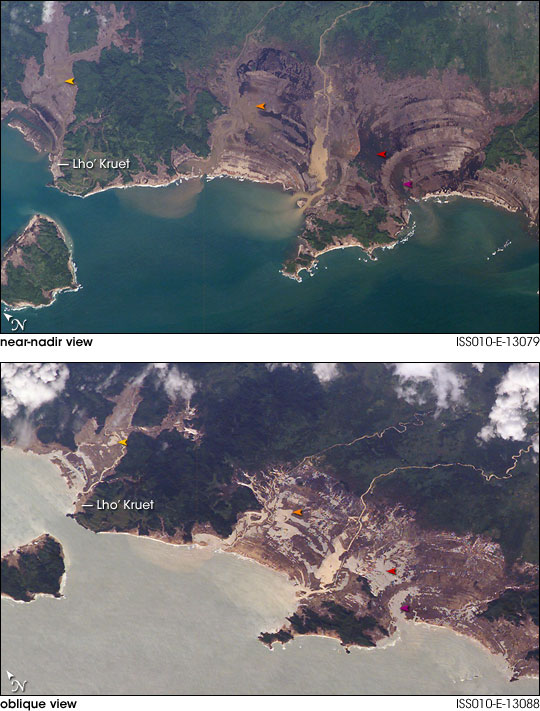

Preserving images of both mundane and life-altering events is one goal of the Internet Archive, such as this image of a road damaged during the 1989 Loma Prieta earthquake in California.

That format – known as WARC, short for the Web archive file format – has been key to correctly organizing content and packing it together in a bubble with corresponding metadata. “The problem with Web crawls and the gathering of Web content in the past is that sites use different clients and servers and browsers,” Spangler said. “All those inconsistencies create a jumble of information.”

The problem is then trying to correctly catalog that jumbled mess. “This is called the Deep Web problem, something that crawling technology can’t [resolve],” Spangler said. “It’s well understood. The key with [the Internet Archive] is that their method creates a coherence we can use.”

The organization uses the BitTorrent protocol to distribute large amounts of data over the Internet, and it stores the massive amount of data on custom-designed Petabox servers, proprietary systems built by the Internet Archive staff to store and process the gigabytes of information being archived. The fourth-generation Petabox has 650 TB of data storage capacity per rack.

Kahle has been working to develop technologies for information discovery and digital libraries since the 1980s, and helped develop one of the Internet’s first publishing systems, WAIS, or Wide Area Information Server, in 1989.

LIBRARY OF ALEXANDRIA 2

The organization’s current setup is an amalgam of computers and servers that are stored in four different locations: a primary copy in San Francisco, a mirrored copy in Silicon Valley, a partial copy in Amsterdam and a partial copy in Alexandria, Egypt. That locale is the ancestral home of the original library – the Ancient Library of Alexandria – which is well known as the largest and most significant library of the ancient world, founded by a student of Aristotle. Built with the overwhelming goal of collecting all of the world’s knowledge, the library gathered a serious collection of books from beyond its country’s borders, even establishing a policy of pulling the books off every ship that came into port. Centuries later it succumbed to the worst fate that can befall a collection: destruction by fire.

Astronaut photos archived at the Internet Archive show the effects of the tsunami that hit Indonesia in 2004.

“We call [this archive] the Library of Alexandria Version 2,” Kahle said. “The first one was best known for burning down – so we want to ensure [that doesn’t happen again].”

Last September, the Internet Archive hit a milestone: it had collected every bit of news produced over the previous three years by 20 different channels. That means the Internet Archive has been recording news material from CNN, Fox, PBS and NBC, among others, each minute of every day. The collection now contains 2 million books, 150 billion Web pages, 1 million videos and 350,000 news programs collected over three years from national U.S. networks.

The organization also made headlines last year after it launched TV News Search & Borrow, a service designed to help engaged citizens better understand the issues surrounding the 2012 presidential elections. Users search for phrases that would have appeared in the closed captions of a video program, and the service returns a batch of matching news clips; researchers who want long-form programs can have DVDs sent out on loan. The organization has also found success with the Open Library, which houses about 200,000 ebooks that users can digitally borrow.

The organization has also immersed itself in unique pet projects, such as working with the Library of Congress’ Audio-Visual Conservation Center in Virginia to digitize the 60,000 films of the Prelinger Archive, a bountiful mix of educational and amateur films collected by archivist Rick Prelinger. Films range from obscure movies on American advertising to urban history movies known as the “Lost Landscapes.”

“We acquired the Prelinger Archive eight years ago, and Brewster has been digitizing the original material ever since,” said Gregory Lukow, chief of the Audio-Visual Conservation Center of the Library of Congress.

“The goal of the library is preservation and access. Access has gotten to be much easier; preservation is much more difficult,” Kahle said. Like other organizations tasked with storing vast amounts of data, the Internet Archive has debated the merits of formats like AXF, LTO and LTFS and tried to determine which format and storage device will last for decades. “You either have to write on a very durable medium or make copies,” Kahle said. “We’ve made copies.”

One of the classic roles of an archive organization is to determine what to keep and what to toss, and with the surplus of information on the Web, there aren’t enough eyeballs in the world to keep up with that task. A growing reliance on automated technologies is allowing that selectivity to continue.

And there’s a comfort in realizing that an organization is working to see that this continues. “Having a permanent preservation like this is obscure to most people,” said Spangler from the National Archives. “They don’t think about that, about having material from 30 or 50 or 200 years from now. The Internet Archive are leading-edge technology people, but they are also really able to understand the usefulness of what’s in our past.”


Susan Ashworth is the former editor of TV Technology. In addition to her work covering the broadcast television industry, she has served as editor of two housing finance magazines and written about topics as varied as education, radio, chess, music and sports. Outside of her life as a writer, she recently served as president of a local nonprofit organization supporting girls in baseball.