The Role of Full-Reference Monitoring

In a perfect world, systems that are used to deliver video content to viewers could simply be installed once and left to run continuously without human intervention. Unfortunately, service requirements change due to government regulation, the introduction of new technologies, and evolving viewer expectations due to competitive pressures.

As a result, video delivery systems are constantly in flux, with new hardware and software being introduced and new capabilities rolled out. Plus, equipment failures can happen at any time, and rarely occur when it is convenient. To help ensure system integrity in the face of this changing environment, video service providers frequently use system and signal monitoring tools to perform three main functions: service assurance, error detection, and fault isolation.

Service Assurance involves verifying that viewers receive intact delivery of all their requested audio and video content. This includes validating that the proper content has been delivered, and testing to see if it is presented to the users correctly.

[Figure caption: Video Clarity’s RTM Player product allows side-by-side observation of reference and processed content, enabling detection and analysis of transmission path errors.]

Error Detection focuses on capturing system defects in real time, or gathering data about errors from system logs. The main use of this data is to search for patterns in the defects, such as correlations between errors in one segment of the network and faults that are detected in another segment of the network.

Fault Isolation is primarily used by service personnel to identify malfunctioning system elements that need to be replaced or upgraded. These tools are particularly useful when new system features are being introduced, allowing service personnel to pinpoint software or hardware modules that might need design modification or reconfiguration in order to properly fulfill their roles in an end-to-end system.

Poor delivered signal quality can have two main financial impacts on broadcasters. First, poor signal quality and service outages will drive viewers to other channels. Second, dropping or altering even as little as one frame of an advertisement can force broadcasters to “make good” and rebroadcast the advertisement at their own expense. With proper monitoring, these costly problems can be reduced or avoided by performing thorough validation of end-to-end network performance on a recurring basis. Since the ultimate goal of a broadcaster is to attract viewers and thereby advertising revenue, it makes sense to verify the quality of the product that is being delivered to viewers: the actual video and audio streams.

Monitoring Techniques

Video service providers have used a variety of test and measurement tools to identify problems and to help diagnose their causes. These tools, which can be hardware, software, or, most often, a combination of both, operate using techniques that can be grouped into four major categories:

Transmission path analyzers focus on the circuit that is used to transmit the video signal, whether packet-based, satellite, broadcast or telecom circuit.

MPEG stream analyzers verify the logical structure of the compressed video stream, checking for compliance with the well-defined syntax rules.

Baseband video measurement systems come in two main varieties – those that use a copy of the source signal for comparison (called Full Reference analyzers) and those that don’t (called No Reference analyzers). Each of these four categories is discussed in greater detail in the following sections.

Transmission Path Analyzers

Devices that analyze transmission paths observe and record various types of errors that can occur in data communications, such as lost packets or bit errors. Such data can be gathered either by capturing and analyzing live streams or by extracting information from the error registers of the transmission equipment.

The primary benefit of transmission path analyzers is their ability to look at signals at any point along the path from source to destination. These devices do not need to understand the detailed internal structure of the payloads being carried on a circuit; they simply look at the performance of the basic bit stream. Errors that can be detected typically fall into the categories of bit errors, lost or delayed packets, transmission delay variation, and other parameters that are closely associated with the physical transport medium. Many modern analyzers can look at various steps along the transmission path, including both packet-based and circuit switched network segments.
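As a rough illustration of the kind of transport-level measurement described above, the following sketch counts lost packets from gaps in a wrapping sequence counter. The function name `count_lost_packets` and the 16-bit counter width are assumptions made for this example; real analyzers also handle reordering, duplicates, and delay variation, which this sketch does not.

```python
def count_lost_packets(seq_numbers, modulus=65536):
    """Count packets missing from an in-order stream of sequence numbers.

    Assumption: the counter wraps at `modulus` (16 bits here) and packets
    arrive in order. Reordered packets would be miscounted.
    """
    lost = 0
    for prev, curr in zip(seq_numbers, seq_numbers[1:]):
        # Forward distance from prev to curr, allowing for counter wraparound.
        step = (curr - prev) % modulus
        if step > 1:
            lost += step - 1
    return lost


# Packet 65535 and packets 2-3 never arrived.
received = [65533, 65534, 0, 1, 4]
print(count_lost_packets(received))  # prints 3
```

Note that the count says nothing about what was inside the missing packets, which is the limitation discussed next.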

Unfortunately, the very flexibility of path analyzers limits their usefulness. Because they look at the transport signals and not the payload, they can diagnose a circuit as “no trouble found” even if the payload signal is completely unusable. These kinds of errors can occur if, for example, the source and destination devices are configured to use different versions of a protocol or incompatible compression parameters.

Overall, transmission path analyzers are quite effective as diagnostic tools for troubleshooting specific segments of a network path. As a tool for assessing end-to-end video signal quality, they are of minimal value.

MPEG Stream Analyzers

The syntax rules that define a compliant MPEG stream are complex – they are detailed in hundreds of pages of standards. Any violations of these rules can cause video image errors at the decoder’s output. The exact nature of the error depends on the specific syntax violation and on the mechanism used by the decoder to handle these errors.

Stream analyzers are quite valuable for software developers, since they can capture specific instances of improper MPEG data generation. These units can also help identify problems occurring at the interfaces between devices from different manufacturers, who may not have interpreted the many pages of specifications in the same way.

These devices often demand skilled operators who are deeply familiar with the subtleties of MPEG stream structure. Some of these devices are capable of decoding and displaying a video image; however, it is hard for a user to tell if the signal that is being displayed is actually what was intended by the content creator.

Baseband Video Monitors – No Reference

Baseband video, whether SD or HD, is produced at the output of the final devices before consumer television displays, such as cable or satellite set-top boxes or at the output of ATSC tuners. At this point, any compression has been removed, the video and audio signals should be aligned, and any data that isn’t to be shown to the viewer should have been removed.

Monitoring devices that operate without a reference analyze the video and audio signals to determine if significant defects have been introduced into the video stream. Major errors, such as a loss of video framing, are simple to detect and log, as are long periods with no audio signal. Other errors, such as audio noise spikes (clicks and pops) and dropouts, or video anomalies such as black screens, test patterns, and “postage stamp” video (images that are both letterboxed and side cut), can also be detected and logged.

Non-reference monitoring is performed in many video transmission facilities, by using automated tools or by way of multi-image monitors observed by humans. The primary advantage of this testing methodology is that it is fairly inexpensive on a per-channel basis, and can be implemented without requiring access to the original signal source.

This type of monitoring is subject to certain limitations. False positives are one issue, where errors are reported that didn’t actually happen, such as a reported frame freeze when the image was simply a long still title scene. Another problem is false negatives, where actual errors are not reported, such as frame skips or very brief audio dropouts. Without a reference signal, it can be very difficult to determine if an all-black screen is an error or simply an artistic technique used for dramatic effect.
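The false-positive problem can be seen in a minimal no-reference detector. The sketch below treats each frame as a flat list of 8-bit luma samples and flags frames that look black or frozen; the function names and both thresholds are illustrative assumptions, not values taken from any particular product.

```python
def mean_luma(frame):
    """Average brightness of a frame given as a flat list of luma samples."""
    return sum(frame) / len(frame)


def mean_abs_diff(a, b):
    """Average per-sample difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def flag_frames(frames, black_level=16.0, freeze_diff=0.5):
    """Return (index, label) pairs for frames that look black or frozen.

    Assumption: black_level and freeze_diff are hypothetical thresholds
    chosen only for this example.
    """
    flags = []
    for i, frame in enumerate(frames):
        if mean_luma(frame) <= black_level:
            flags.append((i, "black"))
        elif i > 0 and mean_abs_diff(frame, frames[i - 1]) <= freeze_diff:
            flags.append((i, "freeze"))
    return flags


title_card = [120, 130, 125, 128]  # a still title held for three frames
frames = [[10, 200, 90, 40], title_card, title_card, title_card, [0, 0, 0, 0]]
print(flag_frames(frames))  # prints [(2, 'freeze'), (3, 'freeze'), (4, 'black')]
```

Every flag raised here could be intentional content: the detector has no way to know that the repeated frames are a title card or that the black frame is a fade.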

Baseband Video Monitors – Full Reference

When a full reference is used, the test system is able to access the original source material for comparison. This allows a direct comparison to be made between the intended video and audio programming and what is actually delivered to the viewer. A wide variety of errors can be captured and analyzed, so that a more realistic analysis can be performed of what a viewer will actually see.
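One widely used way to express such a comparison as a number is peak signal-to-noise ratio (PSNR), computed from the mean squared error between a reference frame and the delivered frame. The sketch below is only an illustration of the idea; the source does not state which metrics any given full-reference product uses, and the function name `psnr` and the flat-list frame representation are assumptions.

```python
import math


def psnr(reference, delivered, peak=255):
    """Peak signal-to-noise ratio in dB between two equally sized frames.

    Frames are flat lists of sample values; `peak` is the maximum possible
    sample value (255 for 8-bit video).
    """
    if len(reference) != len(delivered):
        raise ValueError("frames must contain the same number of samples")
    mse = sum((r - d) ** 2 for r, d in zip(reference, delivered)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(peak ** 2 / mse)


reference = [100, 150, 200, 250]
delivered = [101, 149, 202, 248]
print(round(psnr(reference, delivered), 2))
```

Higher values indicate a delivered frame that is closer to the source; identical frames yield infinity.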

One big advantage of a full reference system is in the almost complete elimination of false positives. This can be a great boon to technicians and other users, who can confidently rely on the information that is being reported, and not spend time troubleshooting problems that don’t exist.

Full reference monitoring can be used whenever the test set has access to both the original source material and the signal that is to be tested. This can either be done in real time using live signals (with appropriate allowances for network delay) or by comparing recordings of the source and the video signal under test. Either way, many different defects can be captured and analyzed.
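The allowance for network delay mentioned above amounts to aligning the two signals in time before comparing them. A minimal sketch, assuming each frame has already been reduced to a single numeric signature (for example its average brightness), searches for the frame offset that gives the best match. The function name `estimate_delay` and the signature approach are assumptions for illustration only.

```python
def estimate_delay(ref_sig, test_sig, max_delay):
    """Return the delay, in frames, at which test_sig best matches ref_sig.

    ref_sig and test_sig are lists of per-frame signatures (hypothetical
    representation). Delays from 0 to max_delay are tried.
    """
    best_delay, best_cost = 0, float("inf")
    for delay in range(max_delay + 1):
        # Reference frame i is compared with test frame i + delay.
        pairs = list(zip(ref_sig, test_sig[delay:]))
        if not pairs:
            break
        cost = sum(abs(r - t) for r, t in pairs) / len(pairs)
        if cost < best_cost:
            best_delay, best_cost = delay, cost
    return best_delay


ref = [10, 50, 90, 30, 70, 20, 60, 80]
test = [0, 0, 0] + ref  # delivered signal arrives three frames late
print(estimate_delay(ref, test, max_delay=5))  # prints 3
```

Once the offset is known, frame-by-frame comparison can proceed on the aligned sequences.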

Types of Video and Audio Defects

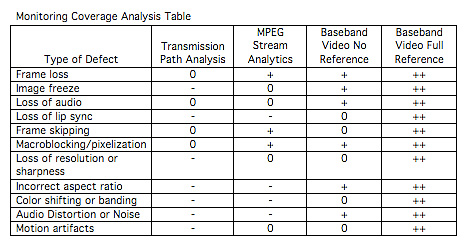

The following list of video and audio defects provides a brief description of some of the most common types of errors that occur in daily transmissions. These defects are then summarized in a table that also shows how well the various types of test equipment perform in detecting these defects.

Frame loss: One or more video frames are missing during a video sequence, which can cause a frozen image or blank screen, depending on decoder configuration. Very easy to detect using full reference; more difficult with no reference; can usually be detected by stream analyzers and may be caused by packet loss.

Image freeze: Image remains fixed and unchanging for more than one frame. Can be caused by frame loss or loss of signal, but may also be intentional (such as in a title sequence). Fairly easy to detect with baseband video analyzers, but can be very tricky to verify as true errors without a full reference source.

Loss of audio: When the soundtrack of a program goes completely silent, it is usually for one of two reasons: either something is wrong with the audio signal transmission, or the program’s creator is using silence for dramatic effect. Unfortunately, it is difficult to determine which situation exists without access to the original source material for reference.

Loss of lip sync: This occurs when the timing relationship between the audio output signal and the video output signal changes so that the sounds no longer correspond to the images. While this defect can be caused by transport errors or MPEG syntax violations, it is not directly observable with transmission path or MPEG stream analyzers. Non-reference baseband video test sets are essentially powerless to determine if an audio signal is synchronized with its corresponding video signal.

Frame skipping: The deliberate deletion of frames from the video sequence, which can occur when a decoder is trying to compensate for timing errors caused by stream errors or mismatched clocks. Skipping is very hard to detect in the output signal without a reference.

Macroblocking/pixelization: Most noticeably, the appearance of squares or rectangles within the displayed image, typically caused by loss of data in the compressed video bit stream. In some cases, these defects can persist for several frames until the buffers in the decoder are re-loaded with fresh data (as occurs when a purely intra-coded frame is received). All of the different categories of test equipment are capable of detecting errors that can cause this problem; however, only full reference test sets can measure the impact that these defects have on the images presented to viewers.

Loss of resolution or sharpness: Images that have lost some of their fine details or definition of the edges in the image space; can be caused by excessive compression or removal of high-frequency image data. This type of degradation is extremely difficult to detect except when using full reference monitoring.

Incorrect aspect ratio: When the metadata is removed that describes the proper aspect ratio of a video signal (e.g. 4:3 or 16:9), some devices will deliberately expand or squeeze the video image to completely fill a specific frame size. These distortions can propagate through the video delivery system without causing any transport alarms or syntax violations.

Color Shifting or Banding: While it is unlikely that a simple network fault could cause a shift in an image’s color, it is certainly possible for an incorrectly configured device in the signal path to cause a change. Similarly, color bands can appear in scenes with gradual transitions from one color to another (such as a sunset scene that shows both light and dark portions of the sky); the banding is caused when compression has reduced the number of colors that are able to be represented in the image. Since the impact of these defects depends greatly on the specific material being shown, a full reference test set will provide the most useful troubleshooting information.

Audio Distortion or Noise: Essentially, anything that has been introduced into the sound track that is not present in the original signal, including pops, clicks, tones, or clipping. Due to the lack of synchronization pulses or other reference signals in a continuous tone audio signal, it can be very difficult to detect changes in an audio signal without reference to the source.

Motion Artifacts: There are a variety of methods that are used to compress the images of objects as they move across a video image. Errors in these algorithms can cause unnatural motion, image blurring, or even complete removal of an object’s image for one or more frames. Because only a small portion of the overall image is typically affected by these anomalies, full-reference testing is one of the only ways that these types of errors can be captured and analyzed.

Table entries indicate the likelihood of detection of each type of defect by each category of test set, according to the following key: ++ = Very Likely; + = Probable; 0 = Possible; - = Not Likely.
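The image freeze entry in the list above notes that a freeze may be either an error or an intentional still. With a time-aligned reference, the two cases can be separated by checking whether the source itself repeats at the same point. The sketch below assumes each frame has been reduced to a numeric signature and that the two sequences are already aligned; the function names are hypothetical.

```python
def find_freezes(sig, tolerance=0.0):
    """Return the set of indices whose signature repeats the previous frame's."""
    return {i for i in range(1, len(sig)) if abs(sig[i] - sig[i - 1]) <= tolerance}


def classify_freezes(ref_sig, test_sig):
    """Split freezes in the delivered signal into intentional ones and errors.

    Assumption: ref_sig and test_sig are time-aligned lists of per-frame
    signatures of equal length.
    """
    ref_freezes = find_freezes(ref_sig)
    test_freezes = find_freezes(test_sig)
    return {
        "intentional": sorted(test_freezes & ref_freezes),
        "errors": sorted(test_freezes - ref_freezes),
    }


ref = [40, 40, 40, 55, 63, 71, 80]   # frames 1-2 hold a still title card
test = [40, 40, 40, 55, 55, 55, 80]  # frames 4-5 repeat frame 3 after a loss
print(classify_freezes(ref, test))  # prints {'intentional': [1, 2], 'errors': [4, 5]}
```

A no-reference detector would report all four repeated frames; only the comparison against the source shows that two of them were in the original program.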

The Transcoding Gap

Video signals are frequently encoded and decoded at multiple points along the transmission path from source to viewer. Different compression technologies and different bit rates can be used depending on the specific requirements for each link in the chain. Whenever the signal is transcoded from one compression system to another, data about stream errors or other picture defects can be lost. This transcoding gap can remove much or all of the information used by some types of test and monitoring equipment, making their stream analysis results incomplete or downright misleading.

For example, video feeds from a television network to a CATV facility may use a compression system such as MPEG2, because it is the standard format used by their local affiliates for over-the-air broadcast. To save bandwidth on their distribution network, the CATV provider will often transcode (i.e. decompress and then re-compress) the signals using a lower bit rate compression system such as H.264 before delivery. Any errors that affect the video or audio signals in the MPEG2 bit stream may still be visible in the signals that are delivered to viewers, but any information about the cause of the defect will not be present in the end stream. In other words, the H.264 bit stream syntax may be perfect in every way when it reaches the viewer, but video and audio data may still have errors that have been introduced well upstream of the H.264 encoder. This gap in information can occur any time a signal is transcoded along its journey from the source to the viewer.

The end result of these successive transcoding gaps is a bit stream that is delivered with video or audio errors but no evidence for what caused them. If the video image has been distorted due to packet loss or unsynchronized clocks that are upstream of the last compression device, the stream could arrive at a viewer’s device without a single error that could be found using a transmission path or MPEG stream analyzer. This is probably the most compelling reason to use a baseband video monitor.

Conclusion

Real-time, full-reference signal monitoring is capable of detecting and logging a wide range of defects that can affect the viewer’s experience of video and audio content. This technology’s ability to capture errors such as poor lip sync, image freezing, motion distortions and other subtle mistakes in displayed images and sounds makes it a powerful tool for accurately assessing the performance of an end-to-end transport system. As one of the few tools that can accurately measure network performance across multiple transcoding gaps and data format changes, full-reference monitoring allows network operators to get a total understanding of how well a delivery system actually performs from source to viewer.

Wes Simpson is President of Telecom Product Consulting, an independent consulting firm that focuses on video and telecommunications products. He has 30 years of experience in the design, development and marketing of products for telecommunication applications. He is a frequent speaker at industry events such as IBC, NAB and VidTrans, the author of the book Video Over IP, and a frequent contributor to TV Tech. Wes is a founding member of the Video Services Forum.