Time to Check on the Machines

AI/ML tools are improving and increasingly used, but they are not yet, and perhaps never will be, the magic bullet for every media use case

LONDON—Media and entertainment enterprises are seeking automation to drive efficiencies, with artificial intelligence and machine learning as the key technologies. In a post-pandemic world where a remote, distributed work model is the new norm, AI engines could come into their own.

“We’re at the beginning of a golden age of AI and ML,” says Hiren Hindocha, cofounder, president & CEO at Digital Nirvana, a Fremont, Calif.-based developer of media compliance technology. “The use in media is tremendous. It makes content searchable and translatable into multiple languages, allowing content to be consumed by users anywhere.”

Others strike a note of caution. “AI/ML is working and has not led to the mass layoffs that some feared,” says Tom Sahara, former vice president of operations and technology for Turner Sports. “But nor has it reduced budgets by a huge percentage.”

Julian Fernandez-Campon, CTO of Tedial, agrees: “AI/ML has become a ‘must-have’ feature across all technologies, but we have to be quite cautious about their practical application. They’ve produced good results in some scenarios, but in reality broadcasters are not getting big benefits right now. Being able to test and select the proper AI/ML tool quickly and cost-effectively will definitely help adoption.”

Roy Burns, vice president of media solutions at Integrated Media Technologies, a Los Angeles-based systems integrator, says customers are confused about what AI/ML is and what they want to do with it. “We have to explain, it’s not a magic bullet.”

SPEECH TO TEXT

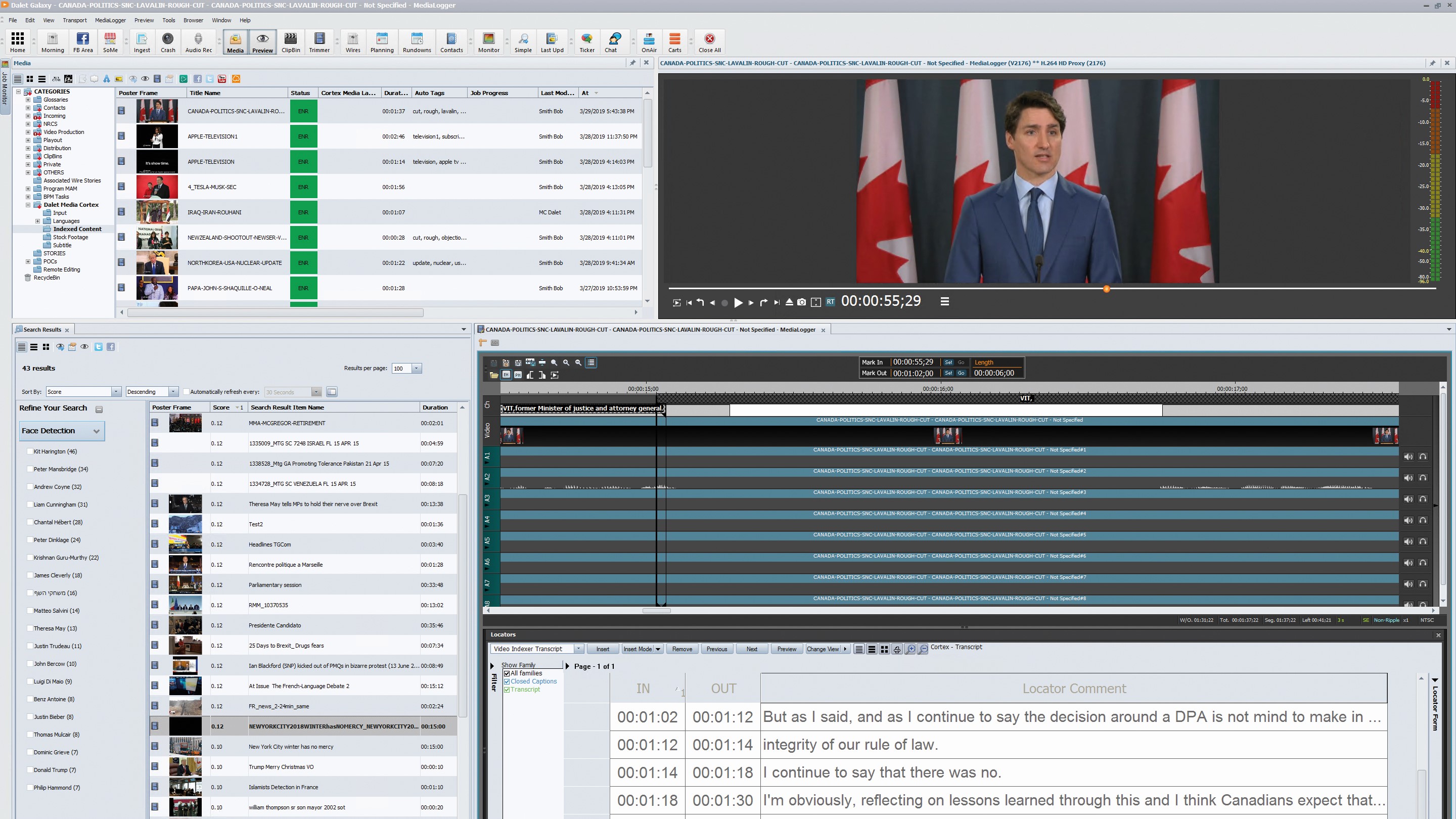

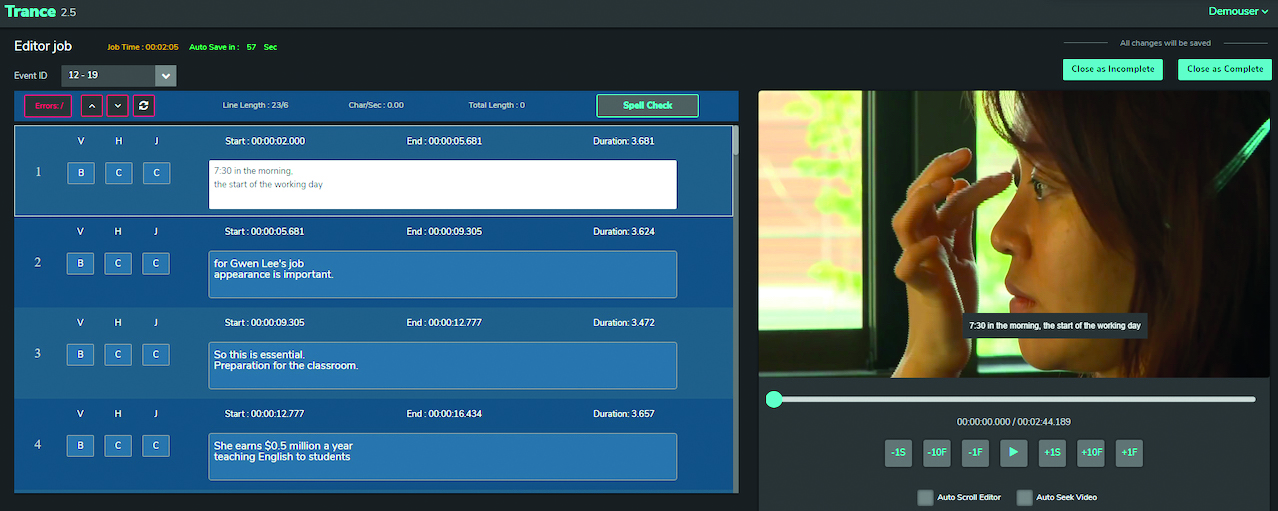

The key benefit, and one ready for general operation today, is captioning. Live speech-to-text quality is improving rapidly, opening the possibility of fully automatic, low-latency subtitle creation. Dalet reports time savings of up to 80% in delivering subtitled content for news and digital publishing. Additional ML capabilities allow systems to segment and lay out captions properly, improving readability and compliance with subtitling guidelines.
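
To make the layout step concrete, here is a minimal Python sketch of rule-based caption segmentation. It assumes a limit of 42 characters per line and two lines per caption (hypothetical values chosen for illustration; actual subtitling guidelines differ by broadcaster and platform) and uses plain greedy word wrapping, not the ML segmentation Dalet describes.

```python
# Hypothetical limits for illustration; real subtitling guidelines vary.
MAX_CHARS_PER_LINE = 42
MAX_LINES_PER_CAPTION = 2


def wrap_words(words, max_chars=MAX_CHARS_PER_LINE):
    """Greedily pack words into lines of at most max_chars characters.

    A single word longer than max_chars is placed on its own line.
    """
    lines, current = [], ""
    for word in words:
        candidate = f"{current} {word}" if current else word
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            lines.append(current)
            current = word
    if current:
        lines.append(current)
    return lines


def segment_captions(text, max_chars=MAX_CHARS_PER_LINE, max_lines=MAX_LINES_PER_CAPTION):
    """Split transcript text into captions, each a list of at most max_lines lines."""
    lines = wrap_words(text.split(), max_chars)
    return [lines[i:i + max_lines] for i in range(0, len(lines), max_lines)]


if __name__ == "__main__":
    for caption in segment_captions("the quick brown fox jumps over the lazy dog", max_chars=15):
        print(caption)
```

A learned segmenter would go further, preferring breaks at clause and phrase boundaries rather than simply filling each line.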

“Speech-to-text translation is probably most mature, where there’s about a 90% confidence rate,” says Burns. “For some people that’s good enough, but for others making an embarrassing mistake is still too risky.”

“It’s important to understand that the output of object or facial recognition tools are not human readable,” he adds. “They are designed to give a ton of metadata about the asset but to correlate it against your media you need a MAM [media asset management]. That’s what I try to explain—if you can ingest AI outputs to a MAM and correlate against a central database of record that is what is going to make it searchable.”
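
One way to picture correlating AI outputs against a database of record is an inverted index that maps each detected tag to the asset and time range where it occurs, then resolves asset IDs through the MAM's catalog. The sketch below assumes a simplified, hypothetical record shape (`asset_id`, `start_s`, `end_s`, `tag`); real recognition engines emit richer, vendor-specific output, and a production MAM would do this inside its own database.

```python
from collections import defaultdict


def build_index(ai_outputs):
    """Map each lower-cased tag to a list of (asset_id, start_s, end_s) hits.

    ai_outputs: iterable of dicts in a hypothetical simplified shape, e.g.
        {"asset_id": "A123", "start_s": 12.0, "end_s": 15.5, "tag": "goal"}
    """
    index = defaultdict(list)
    for rec in ai_outputs:
        index[rec["tag"].lower()].append((rec["asset_id"], rec["start_s"], rec["end_s"]))
    return index


def search(index, catalog, tag):
    """Resolve hits for a tag against the catalog (asset_id -> title).

    The catalog stands in for the MAM's central database of record;
    hits whose asset is not in the catalog are skipped.
    """
    return [
        (catalog[asset_id], start, end)
        for asset_id, start, end in index.get(tag.lower(), [])
        if asset_id in catalog
    ]


if __name__ == "__main__":
    outputs = [
        {"asset_id": "A1", "start_s": 12.0, "end_s": 15.5, "tag": "Goal"},
        {"asset_id": "A2", "start_s": 3.0, "end_s": 4.0, "tag": "crowd"},
    ]
    catalog = {"A1": "Cup final highlights", "A2": "Press conference"}
    print(search(build_index(outputs), catalog, "goal"))
```

The raw engine output alone only says "goal at 12 seconds in A1"; the join against the catalog is what turns it into a searchable, human-meaningful result.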

Hearst Television has worked with Prime Focus to adapt its MAM to automate file management of commercial spots. Joe Addalia, director of technology projects for the station group, explains, “We have hundreds of commercials coming into our systems every day. If we have to mark in and out each time and do a quality check we are not being efficient. Instead, the MAM automates this, harvests necessary metadata and supplies it downstream to playout. There’s no reason for an operator to go into the item.”

Addalia emphasizes the importance of metadata. “You can have the most glorious 4K image you want but if you can’t find it you may as well not have it. AI/ML is about being able to find what you need as you need it.”

Hearst’s internal term for this is “enabled media”: metadata-enriched video, audio or stills content that Addalia says will open up possibilities for new workflows and products.

“On top of speech-to-text, automatic machine translation is nearing maturity to enable multilingual captioning scenarios,” says Michael Elhadad, director of R&D for Dalet. “The main obstacle is that standard machine translation models are not fully ready to translate captions out-of-the-box. Automatic machine translation is trained on fluent text, and when translating each segment into individual subtitles, the text is not fluent enough; standard models fail to produce adequate text.”

The alternative method, which consists of merging caption segments into longer chunks, leads to another challenge: how to split the translated chunk back into aligned, well-timed segments.

“A specialized ML method must be developed to address this challenge and produce high-quality translated captions,” says Elhadad. “This remains an open challenge for the industry and something that we’re working on at our research lab.”
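
The re-segmentation problem can be illustrated with a deliberately naive baseline: merge the timed source captions, translate the merged chunk, then spread the translated words back over the original time slots in proportion to each source segment's character length. This is a sketch for illustration only, not Dalet's method; it ignores word-order differences between languages, which is exactly why a specialized ML approach is needed. The translation step itself is assumed to happen elsewhere.

```python
def resegment(source_segments, translated_text):
    """Distribute a translated chunk across the original caption timings.

    source_segments: list of (start_s, end_s, text) tuples for the source captions.
    translated_text: translation of the merged source text.
    Returns (start_s, end_s, translated_text) tuples, one per source segment.
    Words are allotted in proportion to each segment's share of source
    characters, so a segment may come out empty when there are few words.
    """
    words = translated_text.split()
    total_chars = max(sum(len(text) for _, _, text in source_segments), 1)
    result, used, cumulative = [], 0, 0
    for i, (start, end, text) in enumerate(source_segments):
        cumulative += len(text)
        if i == len(source_segments) - 1:
            # The last segment takes whatever words remain, avoiding rounding loss.
            cut = len(words)
        else:
            cut = round(len(words) * cumulative / total_chars)
        result.append((start, end, " ".join(words[used:cut])))
        used = cut
    return result


if __name__ == "__main__":
    segments = [(0.0, 2.0, "Hello there"), (2.0, 4.0, "how are you")]
    print(resegment(segments, "Hola amigo como estas"))
    # → [(0.0, 2.0, 'Hola amigo'), (2.0, 4.0, 'como estas')]
```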

CURRENT USES OF AI/ML IN MEDIA

Object and face recognition logged as metadata can also assist scripting and video editing. For example, a rough cut can be created automatically from given metadata fields, such as highlights assembled from keywords, objects or on-screen text. Tedial has been using AI/ML tools for some customers to log legacy archives automatically, identifying celebrities and applying OCR to on-screen text.

A big pain point is “text collision,” when onscreen text (perhaps indicating a place or date) overlaps with captions. Files with this error will immediately fail QC at all the big streamers, but detecting issues manually in every version permutation is not cost-effective.

OwnZones offers a deep analysis platform that scans content and compares it against other media items such as timed text. “The AI analysis tool can find the location of onscreen graphics using OCR [optical character recognition] and, with information from the timed text, is able to detect a collision,” explains Peter Artmont, product director for the Los Angeles-based developer of media management and distribution technology. “A failure report is automatically sent back to whoever did the localization work to fix captioning before sending on to OTT services.”

Typically, it would take an hour or two to manually QC and flag issues per hour of content; OwnZones claims its AI does it in 15 minutes.
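
The collision check described here reduces to an overlap test in both time and screen space. The minimal Python sketch below assumes that OCR has already produced, for each on-screen graphic, a bounding box and the time range it is visible, and that each caption's display region and timing have been read from the timed-text file. The `Region` type and its normalized coordinates are assumptions for illustration, not OwnZones' implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Something visible on screen for a time span (hypothetical model).

    Times are in seconds; x, y, w, h are fractions of frame width/height,
    with (x, y) the top-left corner.
    """
    start: float
    end: float
    x: float
    y: float
    w: float
    h: float


def _overlaps(a0, a1, b0, b1):
    """True if half-open intervals [a0, a1) and [b0, b1) intersect."""
    return a0 < b1 and b0 < a1


def collides(graphic, caption):
    """A collision needs overlap in time AND horizontally AND vertically."""
    return (
        _overlaps(graphic.start, graphic.end, caption.start, caption.end)
        and _overlaps(graphic.x, graphic.x + graphic.w, caption.x, caption.x + caption.w)
        and _overlaps(graphic.y, graphic.y + graphic.h, caption.y, caption.y + caption.h)
    )


def find_collisions(graphics, captions):
    """Return every (graphic, caption) pair that collides, e.g. for a failure report."""
    return [(g, c) for g in graphics for c in captions if collides(g, c)]


if __name__ == "__main__":
    lower_third = Region(10.0, 15.0, 0.05, 0.75, 0.6, 0.15)    # OCR-detected place/date graphic
    bottom_caption = Region(12.0, 14.0, 0.1, 0.80, 0.8, 0.10)  # overlaps in time and space
    top_caption = Region(12.0, 14.0, 0.1, 0.05, 0.8, 0.10)     # same time, top of frame
    print(len(find_collisions([lower_third], [bottom_caption, top_caption])))  # → 1
```

Checking every graphic against every caption is quadratic, which is fine per title; a real QC system handling many versions would likely sort both lists by time first.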

Another common use case is scene detection for censorship. Artmont describes a scenario in which an episodic drama containing occasional profanity requires closed captions to be blurred out for transmission during daytime hours.

“Typically, you have to store all the versions you create, eating up on-prem storage,” he says. “In our example, you are storing 300–400 GB per show, yet the only difference between each version amounts to 3–4 MB of frames. We apply our AI analysis to generate an IMF-compliant composition playlist from the content. By storing only the differences [scenes with bad words] from the original we can trim content by 46%, making storage far more efficient.”
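
The storage saving comes from describing each version as a playlist that reuses the master and points to a few small replacement clips, rather than as a full copy. The Python sketch below is a toy in-memory model of that idea, loosely inspired by how an IMF composition playlist references existing track files; it is not the SMPTE CPL XML schema or OwnZones' tool, and the `Segment` type and clip IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A run of frames taken from one stored source (hypothetical model)."""
    source: str     # "master" or the ID of a small, separately stored replacement clip
    in_frame: int   # first frame used from the source (inclusive)
    out_frame: int  # frame after the last one used (exclusive)


def build_composition(total_frames, replacements):
    """Describe a version as master ranges interleaved with replacement clips.

    replacements: sorted, non-overlapping (start, end, clip_id) frame ranges of
    the master that this version swaps out, e.g. scenes with bad words.
    """
    playlist, cursor = [], 0
    for start, end, clip_id in replacements:
        if cursor < start:
            playlist.append(Segment("master", cursor, start))
        # The replacement clip is assumed to be exactly as long as the range it replaces.
        playlist.append(Segment(clip_id, 0, end - start))
        cursor = end
    if cursor < total_frames:
        playlist.append(Segment("master", cursor, total_frames))
    return playlist


if __name__ == "__main__":
    daytime = build_composition(1000, [(100, 110, "edit-1"), (500, 520, "edit-2")])
    for seg in daytime:
        print(seg)
    # Only the 30 replaced frames need new storage; the other 970 come from the master.
    print(sum(s.out_frame - s.in_frame for s in daytime if s.source != "master"))  # → 30
```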

CURRENT USE OF AI/ML IN SPORTS

Sports is a greenfield area where AI can be applied to production to generate content tailored to specific audiences. “With the reduction in live events, the ability to monetize valuable content from years of archives is key,” says Elhadad.

There are two principal applications: indexing (tagging, transcription, classification and object/face recognition) of vast archives; and automatic highlight creation, with event-driven automatic overlays and titling.

Aviv Arnon, cofounder of WSC Sports, an Israel-based developer of sports media production technology, says its platform ingests “dirty” production feeds of a game into the cloud, where an AI/ML system breaks the game into hundreds of individual plays. The system applies a variety of algorithms to identify each clip, make it searchable and trim it to optimal start and end times, then gathers the results into video packages for publishing.

“Our ML modules understand the particular patterns for how basketball is produced including replays, camera movement, scene changes,” Arnon said. “We have all those indicators mapped to automatically produce an entire game.”

He says sports leagues need to scale their content operations by packaging different clips to social media and other websites and that AI is the only way to do so rapidly.

“I can’t say it’s 100% accurate but 99% is too low,” he said. “A better question might be, ‘if I had a manual editor would they have clipped it a second shorter or longer?’ It’s not about the veracity of the content. There’s no doubt it has helped speed up the process by allowing an operator to handle a lot of content in a short amount of time.”

Elhadad explains that index data is collected frame by frame (e.g., a frame contains the face or the jersey of a known player), but search results should be presented as clips: coherent segments in which clues collected from successive frames are aggregated into a meaningful classification.

“While descriptive standards exist to capture the nature of such aggregation (MPEG-7), the industry has not yet produced methods to predict such aggregation in an effective manner.”
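
As an illustration of the gap Elhadad describes, here is a simple rule-based Python sketch that turns per-frame detections into clips by merging detections separated by small gaps and dropping very short runs. It is a heuristic baseline, not an MPEG-7 implementation or the kind of predictive method he says the industry still lacks; the frame rate, gap tolerance and minimum length are hypothetical parameters.

```python
def frames_to_clips(detections, fps=25.0, max_gap=5, min_frames=10):
    """Aggregate frame-level detections into (label, start_s, end_s) clips.

    detections: dict mapping a label (e.g. a player ID) to a sorted list of
    frame indices where it was detected. Detections at most max_gap frames
    apart are merged into one run; runs shorter than min_frames are dropped
    as likely noise.
    """
    clips = []
    for label, frames in detections.items():
        if not frames:
            continue
        run_start = prev = frames[0]
        # A trailing None sentinel flushes the final run.
        for f in frames[1:] + [None]:
            if f is not None and f - prev <= max_gap:
                prev = f
                continue
            if prev - run_start + 1 >= min_frames:
                clips.append((label, run_start / fps, (prev + 1) / fps))
            if f is not None:
                run_start = prev = f
    return sorted(clips, key=lambda clip: (clip[1], clip[0]))


if __name__ == "__main__":
    detections = {
        # Face seen in frames 0-49 and 53-99 (a brief 3-frame miss), plus one stray hit.
        "player_23": list(range(0, 50)) + list(range(53, 100)) + [300],
        # Jersey seen for only 5 frames: too short to count as a clip.
        "player_7": list(range(200, 205)),
    }
    print(frames_to_clips(detections))  # → [('player_23', 0.0, 4.0)]
```

Fixed thresholds like these break down quickly across sports, camera styles and frame rates, which is why learned aggregation remains an open problem.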

SMPTE DEVELOPING AI/ML BEST PRACTICES

Work is under way in this area. SMPTE is working with the ETC@USC’s AI & Neuroscience in Media Project to help the media community better understand the scope of AI technology.

“AI is promising but it’s an amorphous set of technologies,” says project director Yves Bergquist, noting that a quarter of organizations report over a 50% failure rate in their AI initiatives. Consultancy Deloitte also found that half of media organizations report major shortages of AI talent.

“There are a lot of challenges around data quality, formats, privacy, ontologies and how to deploy AI/ML models in enterprise,” Bergquist says. “AI/ML is experimental and expensive and there are duplications across the industry.

“We think there are strong opportunities for interoperability throughout the media industry,” he adds. “Not everything has to be in the form of standards. We also want to share best practices.”

The task force is made up of about 40 members, including Sony Pictures, WarnerMedia and Adobe. Research is focused on data and ontologies, AI ethics, platform performance and interoperability, and organizational and cultural integration. “That last topic is the most important and least understood challenge,” Bergquist says. “It speaks to the ability of an organization to create an awareness and understanding of what AI is and is not, how to interpret its output, how to understand the data.”

The task force is “expecting to make a substantial contribution in the area of suggesting best practices in terms of creating a culture of analytics,” he said.

Addalia agrees that no single tech provider can do it all. “TV is a cottage industry,” he said. “We have to use our collective resources properly so we can leapfrog into next-gen where we have automated workflows. This requires cross-industry collaboration. Vendors must work together.”

There’s also an onus on end users to provide feedback, Addalia added. “They need to define the use case and the desired results,” he said.

Muralidhar Sridhar, vice president of AI/ML products at Prime Focus, says media and entertainment needs specific treatment and that ready-made AI engines have not transformed the industry.

Human-like comparison of video masters, transcription for subtitling and captions, AI-based QC, reconformance of a source from a master and automatic retiming of pre- and post-edit masters are all important use cases, he says, provided the AI can be made to understand the nuances.

“The problem is that while most AI engines try to augment content analysis, the ability to accurately address nuances is not easy to match,” he said. “Marking segments like cards, slates and montages takes time and cost. It needs 100% accuracy and 100% frame accuracy.”

Prime Focus’ prescription for clients such as Star India, Hotstar and Hearst Television is to combine computer vision techniques with neural networks where necessary, then customize the solution for each customer.

DEVELOPMENTS AHEAD

Accuracy and speed continue to improve across all AI/ML capabilities. Fernandez-Campon advocates “Intelligent BPM” [business process management], where AI tools improve workflows by making automatic decisions and focusing operators’ work on creative tasks. “That’s why it’s key to offer flexible and cost-effective AI/ML tools that can easily integrate into workflows and be swapped one for another,” he said.

Dalet is working on a new approach to indexing faces and creating “private knowledge graphs” that it thinks will speed up cataloging of large content archives and building libraries of local personalities for regional needs. Current technologies cannot easily recognize faces throughout a historical archive, the company says.

“Better context-aware speech transcription, more accurate tagging and smoother automatic editing will further reduce the need for manual, repetitive tasks,” says Elhadad. “AI/ML empower content producers and distributors to focus on higher value creative work. AI/ML is becoming pervasive: another tool in the box that users will find increasingly beneficial without the doom-laden ‘man vs. machine’ context.”

Adrian Pennington is a journalist specialising in film and TV production. His work has appeared in The Guardian, RTS Television, Variety, British Cinematographer, Premiere and The Hollywood Reporter. Adrian has edited several publications, co-written a book on stereoscopic 3D and is copywriter of marketing materials for the industry. Follow him @pennington1