Cloud Production Assessment for Media

IP in the studio is not even close to IP in the cloud

Navigating our traditional production ecosystems at scale can be complicated enough. Moving some or all of that environment to the cloud is, well, a horse of a different color. While steady progress continues in moving portions of the production world into the cloud, many elements and workflows need to be solidified before the production world as we know it today can be fully satisfied.

Spurred in part by the COVID-19 protocol changes to the entire television and media working environment, “production in the cloud” seemed to move forward rapidly. According to findings from Devoncroft surveys conducted during the pandemic, “cloud production” climbed dramatically to the top of the industry’s list of priorities.

Until COVID-19, the clear leader had been the “transition to IP,” which had been the industry’s most important technology focus since around 2019.

Depending on IP

The term “production in the cloud” has become quite broad and possibly even misleading. To set the stage, we need some perspective on the meaning of “IP.”

Networks, whether on-prem or in the cloud, rely upon IP. The ambiguity attached to the term “internet protocol,” or simply IP, becomes a slightly less contentious topic once the marketing usage is set aside.

IP is a term that must be applied to specific applications, and only in the space where that usage actually fits. In the technology stack, “IP in the studio” (i.e., full-bandwidth, uncompressed signal systems) is not even close to “IP in the cloud” except in name: internet protocol. Cloud and ground are not interchangeable. The two differ considerably in structure, layers and applications, a topic well outside the space available here.

How IP is tailored for a cloud vs. an on-prem (or ground) activity is a governing factor in what can be accomplished in which environment. Users should not assume that the IP applications in a live studio (e.g., SMPTE ST 2110) are the same as IP in other production environments. Take just the latency and timing requirements of live, switched studio environments on the ground: at this time, they simply cannot be met in a cloud-only application or as a widespread commercial offering.

Functional Awareness

These statements are not intended to degrade the value, potential or range of capabilities for the cloud. On the contrary, the perspective is about "functional awareness."

For example, consistent, broadcast-quality, real-time production in the cloud is achievable when it is confined to a finite set of parameters and built on a select set of usually proprietary products from a limited array of providers. Cloud playout is a mature example, where systems deliver the needed capabilities within the confines of the application they serve.

Understand the perspective. In-the-cloud live production is simply not the same IP animal as a studio-grade, well-engineered, ground-based managed network fabric built on a standard such as SMPTE ST 2110 and its associated protocols. Furthermore, one would not expect weekend live NFL football games to be produced in the cloud with the same level of production value we’ve seen accomplished with two or more mobile units, 12+ cameras, 10–15 slomos, and a set of five M/E production switchers.

Over time, this comparison is expected to change, as evidenced by what we’ve already witnessed during the pandemic. Everything changed, and some of those changes have indeed remained.

Cloud Layers

Structurally, a cloud-enabled production environment consists of one or more layers supporting various segments of the production ecosystem. Much of this rudimentary cloud workflow is accomplished through software-enabled sets of applications running on pools of servers and storage elements. Complete integration of all the other necessary components is a more complicated matter, much as it is for ground-based (on-prem) implementations that use SDI or ST 2110.

One initial requirement is the preparation needed to get content from its source (the ground) to the cloud and back again to the intended destination(s). This hurdle is gradually being resolved as owner/operators take new steps toward those goals.

None of these capabilities happened overnight. Years of development continue today as cloud service providers ramp up with experienced, technically savvy professionals whose goal is to move the entire media ecosystem into the cloud.

The technologies needed to enable these capabilities include improvements in compression and transport, synchronization of multicamera images, real-time audio manipulation, communications and non-AV signaling such as tally or foldback, and a serious level of effects capability that models today’s ground-based production switchers in the studio and in the field.

Offline to Online

Emerging development of cloud-service-based production and editorial tools follows a path similar to the one (ground-based) production took when it moved from linear to nonlinear digital workflows some 25–30 years ago.

Those who remember online linear tape editing during the 1980s and ’90s certainly recall the enthusiasm (and complications) of extending the online editing world into offline for preproduction purposes. Timeline-wise, offline (the predecessor to nonlinear editing) was a multiyear effort that occurred well before the desktop-editing revolution. Offline was developed as a tool for rough-cutting a conceptual product. The goal was to generate an edit decision list (EDL), which was then exported to the online (usually videotape-based) cutting environment.

Several iterations of this workflow followed, taking the offline editorial version (compressed, usually Motion JPEG video) up a notch through better image compression, faster computer processors and high-volume disk storage. Within a few years, that evolution essentially drove the demise of the online linear edit suite and, as high-performance disk-based storage matured, moved editing into the world of desktop editorial production.

Cloud production is now following similar steps, but on a much faster time frame.

Parallel Demise

Think of cloud vs. studio production in a similar framework to how the demise of linear videotape for editorial production progressed. None of this happened instantaneously. For a parallel perspective of where the cloud is headed for media production, take another look at how the industry has evolved comparatively.

Today, users first need to get the source content to the cloud. Ingest at data rates comparable to studio-quality IP is impractical, unnecessary and extremely expensive. Access-point costs for high-bandwidth, uncompressed signal transport in turn limit profitability for any commercial entrepreneur.

By comparison, high-bandwidth, standard-definition linear video (270 Mbps) in the late 1980s was relatively complex to carry on any network. A T1 (1.544 Mbps) line (popular at that time for transport) was insufficient for real-time SD video. A DS3 (44.7 Mbps) line was cost-prohibitive, even on a part-time basis, and required at minimum a roughly 6:1 compression ratio just to carry a 270 Mbps signal. Some used ASI transport over another carrier, but again, this was costly, harder to find, and required high-priced terminal equipment to convert the SDI signals for ASI transport.
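The arithmetic behind those line-rate comparisons is simple enough to sketch. The Python below is only an illustration: the function name `min_compression_ratio` is hypothetical, the rates are the nominal figures quoted above, and the calculation ignores framing overhead and the difference between total SDI payload and active picture.

```python
def min_compression_ratio(source_mbps: float, link_mbps: float) -> float:
    """Smallest compression ratio that lets a source fit within a link.

    Simplifying assumption: the whole link is available to the video and
    no framing or protocol overhead is counted.
    """
    if source_mbps <= 0 or link_mbps <= 0:
        raise ValueError("rates must be positive")
    # A source that already fits needs no compression (ratio of 1:1).
    return max(1.0, source_mbps / link_mbps)


# Nominal rates as quoted in the text above.
SD_SDI_MBPS = 270.0
LINKS_MBPS = {"T1": 1.544, "DS3": 44.7}

for name, link_mbps in LINKS_MBPS.items():
    ratio = min_compression_ratio(SD_SDI_MBPS, link_mbps)
    print(f"{name} ({link_mbps} Mbps): at least {ratio:.1f}:1")
```

Run as written, the DS3 case comes out at roughly 6:1 and the T1 case at roughly 175:1, which is why a T1 was never a realistic path for real-time SD video.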

Fast Forward

Today’s HD video (1.5 or 3 Gbps) transports present a similar level of complication when moving uncompressed HD (ST 292M/424 SDI) or even ST 2110-20 IP video into the cloud. Uncompressed transport doesn’t make economic sense when smaller-scale productions have viable alternatives in proprietary implementations that work in a compressed signal domain (e.g., NDI or GV AMPP).

Once in the cloud, software solutions must take over for what were once silicon-centric, hardware-based devices. Unless that cloud is a private co-lo where physical hardware can be placed and managed remotely, everything shifts to code-based software systems.

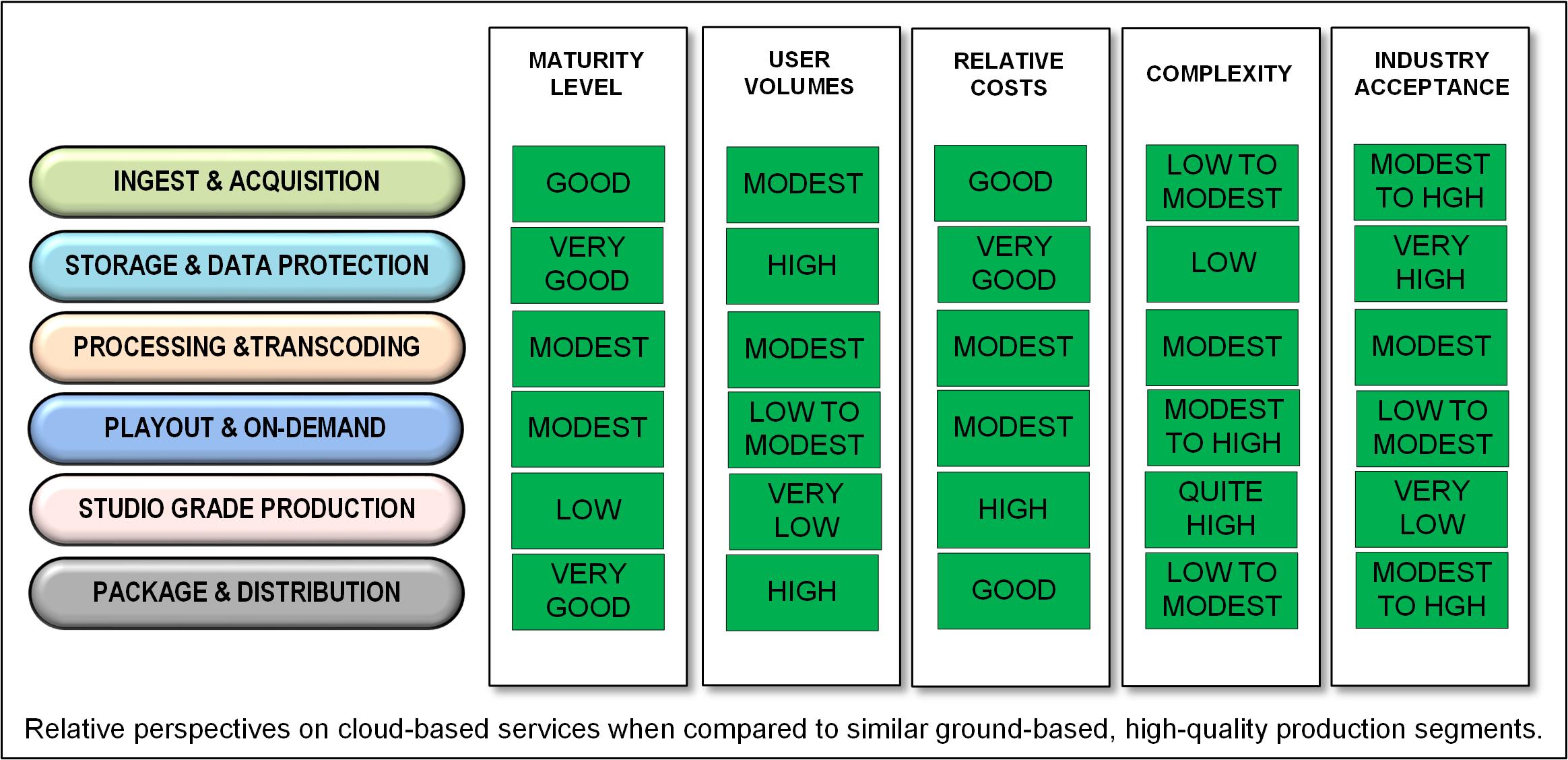

To make real-time, live cloud production work efficiently and effectively, each of the dimensions described above requires a change in signal structure, both to accommodate the cost and signal-transport infrastructure and to address the needed production elements and services. (See Fig. 1 for selected relative comparisons.) Such changes demand that users compress the video signal to a format (e.g., JPEG XS) that can be affordably transported from the studio (ground) to the nonlinear compressed dimension and eventually up into the cloud world.
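To put rough numbers on why compression is unavoidable for ground-to-cloud contribution, here is a minimal Python sketch of the aggregate uplink a multicamera production would need. Everything in it is an assumption for illustration: the `Feed` class and `aggregate_gbps` function are hypothetical names, the 12-camera count borrows from the mobile-unit example earlier in this column, the 3 Gbps figure is the nominal HD rate cited above, and the 10:1 ratio is a placeholder rather than a measured or recommended JPEG XS setting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feed:
    """A group of identical sources (hypothetical helper type)."""
    name: str
    uncompressed_gbps: float  # nominal per-source rate
    count: int


def aggregate_gbps(feeds, compression_ratio: float = 1.0) -> float:
    """Total uplink needed for all feeds at a given compression ratio."""
    if compression_ratio < 1.0:
        raise ValueError("compression ratio must be 1.0 or greater")
    total = sum(f.uncompressed_gbps * f.count for f in feeds)
    return total / compression_ratio


# Assumed production: 12 HD cameras at a nominal 3 Gbps each.
feeds = [Feed("HD camera", 3.0, 12)]
ASSUMED_RATIO = 10.0  # illustrative only, not a codec specification

print(f"Uncompressed: {aggregate_gbps(feeds):.1f} Gbps")
print(f"At {ASSUMED_RATIO:.0f}:1: {aggregate_gbps(feeds, ASSUMED_RATIO):.1f} Gbps")
```

Under these assumptions the uncompressed case needs 36 Gbps of uplink for cameras alone, while the compressed case needs 3.6 Gbps. Real budgets would also have to account for audio, return feeds, signaling and protocol overhead.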

This new work is ongoing, making very good progress, and is a key topic in the eyes of the media-production entities within the major cloud services providers. We fully expect that given demand by the enterprise user, such capabilities will be forthcoming, cost-effective and viable for many.

The advice for today is that content production technical professionals need to immediately start exploring what it will take to stay in this loop—as this train has left the station and will reach full speed in a very short period of time.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.