Composing an Infrastructure in the Cloud

Intelligent systems can now make monumental improvements in capabilities

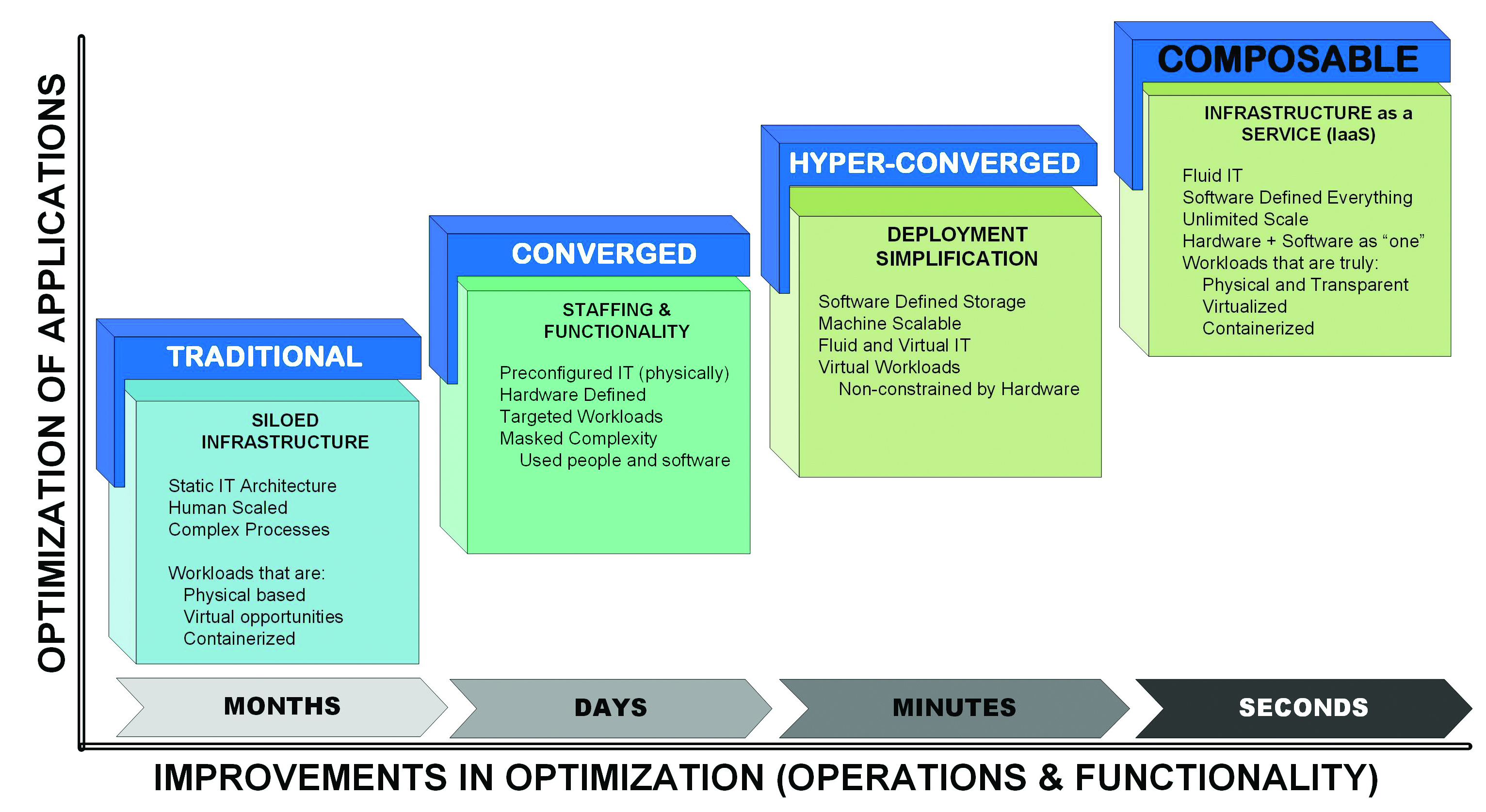

Users of cloud services gain access to a huge volume of capabilities, processes and opportunities when they open an account and start moving data through the system. These users, whether businesses, individuals or government agencies, are active participants in a continually changing digital transformation. This installment examines updates and concepts in what is referred to as a “composable” infrastructure.

Agility is just one of the core reasons for using the cloud and its infrastructure. Other objectives include “friction-free” services, control systems that maximize available resources, and systems that deliver peak ROI from an infrastructure organized around automated provisioning and intelligently managed resources. Each of these represents a basic requirement and rationale for choosing a cloud solution; however, some organizations still want similar functionality in their own privately managed datacenter.

Infrastructures composed of compute and storage are built upon a foundational network, with on-ramps and off-ramps between “ground-based” users and a cloud provider. These are interleaved on a fabric that glues together the systems, whose demands shift back and forth between compute and storage.

Such an environment is referred to as a “composable infrastructure,” that is, a fabric of Ethernet-based hardware and innovative software layered in a distributed network that is claimed to have no inherent limitations (Fig. 1).

Fully integrated datacenter solutions consist of controllers, switches and other hardware steeped in a managed set of software subsystems that respond to the needs and objectives of the consumers. Missing are the rigid constructs traditionally found in on-premises implementations, which serve only the single set of solutions they were conceived to provide.

Conventional datacenter architectures incur specific limitations based on how the switch topology and configurations are designed. For example, while it is possible to build a non-blocking fabric, such as a spine-and-leaf, doing so usually requires dedicating half the bandwidth of the top-of-rack (TOR) switches to that fabric, which is expensive in many designs and applications.
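The “half the bandwidth” figure follows from simple arithmetic: a TOR switch is non-blocking only when its spine-facing (uplink) bandwidth equals its server-facing (downlink) bandwidth, a 1:1 oversubscription ratio. A minimal Python sketch, assuming a hypothetical 64-port, 100 Gb/s TOR switch; the port splits are illustrative and not taken from any specific product:

```python
def oversubscription_ratio(downlink_ports: int, downlink_gbps: float,
                           uplink_ports: int, uplink_gbps: float) -> float:
    """Ratio of server-facing (downlink) to spine-facing (uplink) bandwidth.

    A ratio of 1.0 means non-blocking: every server can send at line rate
    toward the spine at the same time.
    """
    downlink = downlink_ports * downlink_gbps
    uplink = uplink_ports * uplink_gbps
    return downlink / uplink


def uplink_share(downlink_ports: int, downlink_gbps: float,
                 uplink_ports: int, uplink_gbps: float) -> float:
    """Fraction of the TOR switch's total port bandwidth that faces the fabric."""
    downlink = downlink_ports * downlink_gbps
    uplink = uplink_ports * uplink_gbps
    return uplink / (downlink + uplink)


# Hypothetical 64 x 100G TOR switch, split two different ways.
# Non-blocking: 32 ports to servers, 32 ports to the spine.
print(oversubscription_ratio(32, 100, 32, 100))  # 1.0
print(uplink_share(32, 100, 32, 100))            # 0.5
# Oversubscribed 3:1: 48 ports to servers, 16 ports to the spine.
print(oversubscription_ratio(48, 100, 16, 100))  # 3.0
print(uplink_share(48, 100, 16, 100))            # 0.25
```

The non-blocking case spends half of the switch on the fabric; the 3:1 design frees ports for servers at the cost of possible contention when many servers transmit at once.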

PROTOCOLS, BOUNDARIES AND DISTRIBUTION

System solutions are generally designed to maximize the use of the available fabric connections (or links). Those designs must then also account for possible failures while preventing network loops. The protocol boundary between Layer 2 and Layer 3 (the L2/L3 boundary) is a key consideration: L2 traffic is confined to communications within a leaf node, while L3 addressing is used for inter-rack traffic.
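The L2/L3 boundary can be pictured as a per-flow decision at the leaf: traffic between hosts in the same rack is switched, while traffic to another rack is routed. A minimal Python sketch, assuming a hypothetical addressing plan in which each rack is its own /24 subnet (and therefore its own L2 domain); real fabrics make this decision in switch hardware, not in host code:

```python
import ipaddress

# Hypothetical addressing plan: one /24 subnet (one L2 domain) per rack.
RACK_SUBNETS = {
    "rack-1": ipaddress.ip_network("10.0.1.0/24"),
    "rack-2": ipaddress.ip_network("10.0.2.0/24"),
}


def rack_of(addr: str) -> str:
    """Return the rack whose subnet contains the address."""
    ip = ipaddress.ip_address(addr)
    for rack, subnet in RACK_SUBNETS.items():
        if ip in subnet:
            return rack
    raise ValueError(f"{addr} is not in any rack subnet")


def forwarding_mode(src: str, dst: str) -> str:
    """'L2' if both hosts share a rack (switched at the leaf), else 'L3' (routed)."""
    return "L2" if rack_of(src) == rack_of(dst) else "L3"


print(forwarding_mode("10.0.1.10", "10.0.1.20"))  # L2: same rack, stays on the leaf
print(forwarding_mode("10.0.1.10", "10.0.2.20"))  # L3: inter-rack, routed via the spine
```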

Evenly distributing bandwidth across the fabric is a target objective of the L2/L3 boundary design. When traffic in the datacenter cannot be evenly distributed, or links are not consumed uniformly, load balancing becomes necessary. Because traffic distribution is effectively random, the network design is driven toward some form of equal-cost multipath (ECMP) routing. Uneven traffic produces hot spots in the network, which are managed using routing protocols such as OSPF (Open Shortest Path First) that can install multiple equal-cost paths.
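A minimal sketch of hash-based ECMP in Python, assuming a static hash of each flow's 5-tuple. This is a common scheme, but switch vendors use their own hash functions; CRC32 is only a stand-in here, and the flows and rates are hypothetical. Because the hash ignores flow size, one large flow can land on a single path and create the kind of hot spot described above:

```python
import zlib
from collections import Counter


def ecmp_path(flow: tuple, num_paths: int) -> int:
    """Pick an uplink by hashing the flow's 5-tuple (static hash-based ECMP).

    Every packet of a given flow hashes to the same path, which keeps
    packets in order but ignores how much traffic the flow carries.
    """
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_paths


def path_load(flows: dict, num_paths: int) -> Counter:
    """Sum the traffic (Gb/s) each path carries; flows maps 5-tuple -> rate."""
    load = Counter()
    for flow, gbps in flows.items():
        load[ecmp_path(flow, num_paths)] += gbps
    return load


# Hypothetical flows: (src IP, dst IP, protocol, src port, dst port) -> Gb/s.
flows = {("10.0.1.10", "10.0.2.20", "tcp", 40000 + i, 443): 1.0 for i in range(8)}
flows[("10.0.1.11", "10.0.3.30", "tcp", 50000, 5000)] = 40.0  # one "elephant" flow

load = path_load(flows, num_paths=4)
print(dict(load))  # whichever path draws the elephant flow becomes a hot spot
```

ECMP spreads flows, not bytes, which is why uneven flow sizes still produce hot spots even when the hash itself is perfectly uniform.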

LATENCY VARIATIONS AND WORKLOAD SEGMENTATION

Latency is a direct consequence of variations in bandwidth. Ideally, latency should be deterministic and will generally be based on a specific workload. In a leaf-and-spine design, however, this is generally not the case.

Multistage traffic can take different paths end to end, yielding large and unpredictable variations in latency. Boundaries, which are often determined by the network topology’s wiring, can be harmful to workloads.
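The arithmetic behind that unpredictability is simple: end-to-end latency is the sum of the delays at each stage, so two paths between the same pair of servers can differ widely when one stage is congested. A minimal Python sketch with hypothetical per-hop delays (illustrative values, not measurements):

```python
from statistics import mean

# Hypothetical per-hop delays in microseconds (serialization + switching + queueing)
# for three end-to-end paths between the same pair of servers.
paths = {
    "leaf1-spine1-leaf4": [2.0, 3.5, 2.0],
    "leaf1-spine2-leaf4": [2.0, 18.0, 2.5],  # congested spine adds queueing delay
    "leaf1-spine3-leaf4": [2.0, 4.0, 10.0],  # busy egress leaf
}


def end_to_end(hops):
    """End-to-end latency is the sum of the per-hop delays along the path."""
    return sum(hops)


latencies = {name: end_to_end(hops) for name, hops in paths.items()}
spread = max(latencies.values()) - min(latencies.values())
print(latencies)
print(f"mean {mean(list(latencies.values())):.1f} us, spread {spread:.1f} us")
# mean 15.3 us, spread 15.0 us
```

A flow hashed onto the congested path sees three times the latency of one on the quiet path, even though both cross the same number of stages.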

One strategy to combat these variations is workload segmentation, which cloud-solution providers have baked into their own architectures given the variability and the multitude of applications expected (and managed for) in the cloud.

CONTROL PLANE/DATA PLANE

Beyond latency, bandwidth in the cloud should be distributed according to workload needs, which means bandwidth will be dynamic. Just as loading shifts when compute demand is high and I/O is low, bandwidth among the compute-serving bare-metal devices will be allocated according to the needs of the system. Conceptually, this is better managed in a cloud-solution environment than in a rigidly structured on-premises datacenter, simply because of cost-to-value unpredictability.
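The article does not specify how bandwidth is allocated. Max-min fairness is one well-known way to divide a shared link according to demand, and it is swapped in here purely as an illustration. A minimal Python sketch, assuming hypothetical server names and demands on a single 100 Gb/s uplink:

```python
def max_min_fair(capacity: float, demands: dict) -> dict:
    """Allocate link capacity by max-min fairness (progressive filling).

    Servers asking for no more than an equal share get their full demand;
    the leftover capacity is split evenly among servers that still want more.
    """
    allocation = {}
    remaining = dict(demands)
    while remaining:
        share = capacity / len(remaining)
        # Satisfy every server whose demand fits within the current equal share.
        satisfied = {s: d for s, d in remaining.items() if d <= share}
        if not satisfied:
            # Everyone left wants more than the fair share: split what is left evenly.
            for server in remaining:
                allocation[server] = share
            return allocation
        for server, demand in satisfied.items():
            allocation[server] = demand
            capacity -= demand
            del remaining[server]
    return allocation


# Hypothetical 100 Gb/s uplink shared by four bare-metal servers (demands in Gb/s).
demands = {"db": 10.0, "render-1": 60.0, "render-2": 60.0, "web": 5.0}
print(max_min_fair(100.0, demands))
# {'db': 10.0, 'web': 5.0, 'render-1': 42.5, 'render-2': 42.5}
```

When the render jobs finish and their demand drops, rerunning the allocation hands the freed capacity to whichever servers still need it, which is the dynamic behavior described above.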

Datacenter topologies typically use a control plane and a data plane as their foundations. The datacenter’s control plane is often tightly controlled. Only a limited set of protocols may be available, and these are usually designed for specific operational threads and models rather than to accommodate external, user-defined and dynamic demands that change as needs change.

Software-defined networking (SDN) solutions address known limitations by decoupling the control plane from the data plane, the forwarding hardware of the network itself. Early SDN implementations made fixed assumptions about how and where traffic would flow in the network. Cloud solutions, however, continually manipulate and adjust flows (using SDN principles) to loosen the usually tightly controlled architecture, avoiding the fixed “single-lane road” paths of a hardwired datacenter environment.
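The decoupling can be pictured as a switch that only matches packets against a rule table, with a separate controller deciding what goes into that table and rewriting it as conditions change. A minimal Python sketch; the class and method names are hypothetical, and this is not the OpenFlow protocol or any real controller’s API:

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class FlowRule:
    priority: int
    dst_prefix: ipaddress.IPv4Network
    out_port: int


@dataclass
class Switch:
    """Data plane: forwards packets using only the rules it has been given."""
    name: str
    rules: list = field(default_factory=list)

    def forward(self, dst: str):
        ip = ipaddress.ip_address(dst)
        matches = [r for r in self.rules if ip in r.dst_prefix]
        if not matches:
            return None  # table miss: a real switch might punt to the controller
        # Highest priority wins; longer prefix breaks ties.
        best = max(matches, key=lambda r: (r.priority, r.dst_prefix.prefixlen))
        return best.out_port


class Controller:
    """Control plane: decides paths centrally and (re)programs switches."""

    def install(self, switch: Switch, prefix: str, out_port: int, priority: int = 10):
        switch.rules.append(FlowRule(priority, ipaddress.ip_network(prefix), out_port))

    def reroute(self, switch: Switch, prefix: str, new_port: int):
        # Replace any rule for this prefix, e.g. to steer traffic off a busy link.
        net = ipaddress.ip_network(prefix)
        switch.rules = [r for r in switch.rules if r.dst_prefix != net]
        self.install(switch, prefix, new_port)


leaf = Switch("leaf-1")
ctl = Controller()
ctl.install(leaf, "10.0.2.0/24", out_port=49)  # initial path via spine 1
print(leaf.forward("10.0.2.20"))               # 49
ctl.reroute(leaf, "10.0.2.0/24", new_port=50)  # controller shifts the flow to spine 2
print(leaf.forward("10.0.2.20"))               # 50
```

The switch never changes its own behavior; every path change comes from the controller, which is what lets cloud systems adjust flows continually instead of relying on fixed, hardwired paths.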

COMPOSABLE FABRIC

A relatively new approach to resolving several of the issues described above addresses data plane, control plane and integration plane (i.e., automation-centric) issues both holistically and individually, based on dynamic workloads. In a composable architecture, the planes evolve independently and leverage each other dynamically. Here, the data plane takes on the physical connectivity needs across the network via its topology model. Through distributed software, the data plane handles packet (data) forwarding, collapsing the entire system, for forwarding and routing purposes, into a single building block.

In large cloud environments, intelligent routing distributes the pathing among the various connectivity components using composable networking (that is, networking that can be assembled, altered and then disassembled), as found in the fabric management components distributed throughout the cloud.
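The assemble, alter and disassemble lifecycle can be sketched as a logical fabric that borrows links from a shared pool and returns them when released. A minimal Python sketch; `ResourcePool`, `LogicalFabric` and the link names are hypothetical and do not represent any vendor’s fabric manager:

```python
class ResourcePool:
    """Hypothetical shared pool of fabric links available for composition."""

    def __init__(self, links):
        self.free = set(links)


class LogicalFabric:
    """A composable network slice: assembled from the pool, altered, then released."""

    def __init__(self, pool: ResourcePool):
        self.pool = pool
        self.links = set()

    def compose(self, links):
        """Assemble: claim links from the pool, refusing any already in use."""
        links = set(links)
        unavailable = links - self.pool.free
        if unavailable:
            raise ValueError(f"links already in use: {sorted(unavailable)}")
        self.pool.free -= links
        self.links |= links

    def alter(self, add=(), remove=()):
        """Alter: release links first, so freed links are available to the compose step."""
        self.decompose(remove)
        self.compose(add)

    def decompose(self, links=None):
        """Disassemble: return the given links (or all of them) to the pool."""
        links = set(self.links if links is None else links) & self.links
        self.links -= links
        self.pool.free |= links


pool = ResourcePool({"L1-S1", "L1-S2", "L2-S1", "L2-S2"})
media = LogicalFabric(pool)
media.compose({"L1-S1", "L2-S1"})              # assemble a slice for one workload
media.alter(add={"L1-S2"}, remove={"L2-S1"})   # alter it as demand shifts
print(sorted(media.links), sorted(pool.free))  # ['L1-S1', 'L1-S2'] ['L2-S1', 'L2-S2']
media.decompose()                              # disassemble; links return to the pool
print(sorted(pool.free))                       # ['L1-S1', 'L1-S2', 'L2-S1', 'L2-S2']
```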

Advanced, high-performance fabrics have been found in closed systems, where they enable high-performance, cluster-based compute architectures. Ethernet- and IP-based networks previously lacked these capabilities, but no longer. Such new approaches are evolving as cloud-based datacenters take on new foundations to serve the needs of an evolving set of clienteles, workloads and demands.

PERFORMANCE UPSURGES

End-user customers, for the most part, are unaware of these accelerating background systems; they just see performance increases once their data gets into the cloud. Cloud providers can charge for the faster, more powerful services.

Alternatively, a new on-prem datacenter can be built using similar interconnection solutions that apply logic-based systems to TOR and rack-to-rack fabric topologies. Logical fabrics can be selectively placed throughout the system, using composable, software-centric control plane solutions (that is, an automated, self-managing set of software subsystems).

New opportunities continue to grow beyond the basic SDN controller’s ability to define workloads through server-based APIs or other associated parameters. By employing embedded protocols, equal-cost algorithms let workloads be managed autonomously, without requiring the end user to manually manipulate the data services or the integration plane. The results are transparent, with no workload awareness required of the user.
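The source does not name the embedded algorithms. As one illustration, rendezvous (highest-random-weight) hashing, swapped in here as a resilient variant of equal-cost path selection, lets a fabric rebalance on its own when a path fails, moving only the flows that were on the failed link. A minimal Python sketch with hypothetical path and flow names:

```python
import hashlib


def pick_path(flow: str, paths: list) -> str:
    """Rendezvous (highest-random-weight) hashing.

    Each flow scores every path and takes the highest score. Removing a path
    moves only the flows that had chosen it; every other flow keeps its path.
    """
    def score(path):
        digest = hashlib.sha256(f"{flow}|{path}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    return max(paths, key=score)


paths = ["spine-1", "spine-2", "spine-3", "spine-4"]
flows = [f"10.0.1.{i}->10.0.2.{i}:443" for i in range(1, 201)]
before = {f: pick_path(f, paths) for f in flows}

# spine-3 fails; the fabric recomputes over the survivors with no user action.
survivors = [p for p in paths if p != "spine-3"]
after = {f: pick_path(f, survivors) for f in flows}

moved = [f for f in flows if before[f] != after[f]]
# Only flows that had been on spine-3 change paths.
print(all(before[f] == "spine-3" for f in moved))  # True
```

By contrast, plain modulo-N hashing recomputes every flow against a new path count, so a single failure can reshuffle many flows that were never on the failed link.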

Keeping the nuts-and-bolts necessities away from the user is a plus. Intelligent systems can now make monumental improvements in capabilities, driving the fluidity and flexibility of the cloud even higher.

Karl Paulsen is chief technology officer at Diversified and a frequent contributor to TV Technology in storage, IP and cloud technologies. Contact Karl at diversifiedus.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.