Content Is King. Long Live the King!

To counter competitive threats from generative AI, the best defense is to make great programming

The phrase “content is king” was coined in 1974 in the magazine industry and was perhaps most famously repeated by Bill Gates in a 1996 essay.

Although it has been a long-accepted truism of our industry, I would argue that it really should be “curation is king” given that it is quality, not quantity, that matters most. In this piece I will argue that it is still true regardless and will be even more accurate in this era of generative artificial intelligence (Gen AI).

Fundamentally, my argument is that other aspects of the extended media industry become commoditized cyclically. The only differentiator that endures, and the greatest predictor of the success of a media business, is the quality of its content. This has been and will remain true, from traditional spaces like television and film to the newest approaches in streaming, user-generated content sites, games and more.

This is because two things consistently hold true no matter how rapid the pace of change gets: both monetization approaches and technology commoditize, so neither is a long-lasting differentiator.

It is not that new business models do not arise or that there is no innovation. It is just that fast following is possible. It is easy to see that this happened with the rise of cable networks and even subscription-based direct-to-consumer streaming. When a model is successful, it is followed by others, and eventually all companies offer similar experiences and options to customers.

This has happened in every media era. Currently, there is a rise (again) in ad-supported approaches such as free ad-supported television (FAST) or advertising-based video-on-demand (AVOD), and we are quickly seeing most parties adopt these options (again) for their consumers.

Content As Differentiator

Perhaps unsurprisingly, technology also commoditizes. Over time, the products in any class of technology become similar. Because there is more than one way to approach any solution, a technical advantage usually cannot be protected by patents.

As an example, over time, nonlinear editing systems became broadly similar. This has been equally true of transcoding and content delivery networks (CDNs). It has also been true recently of streaming platforms. They are all largely similar in recommendation engines, player capabilities, quality, etc. What differentiates them is the content itself.

This is why I argue that content companies hold far greater power than they may think with regard to the future of media. There are continued worries about the growing impact of tech companies relative to media, and even concerns about AI-focused companies or capabilities supplanting media. I think, conversely, that we are seeing the power of media as we watch AI companies start to license the content they train on.

In fact, this is what will be differentiating in the midterm. In the short term, there is plenty of innovation still happening in AI models, especially around architectures. But fundamentally these are algorithms and approaches that have existed for decades, and the core changes that users are seeing are due mainly to sheer computing power and the scale of the data sets used for training. There is already evidence of the decline in performance in the last year as new models are released, and plenty of evidence that systems like LLMs are much harder to scale than was thought just a few years ago.

What will make for success for an AI model in the future? In my opinion, it will come down to available data sets and the proper curation and quality control of that data. In many respects, this is what content companies have been doing for decades. And content companies are in the actual business of creating new data (content).

The Value of Raw

As I mentioned in my September column covering the concept of model collapse (“Could AI Become its Own Worst Enemy?”), there appears to be no clear way to successfully use AI outputs or other synthetic data to train models to be successful in real-world media use cases. To avoid artifacts and increasing bias in the system, you need to continue training it with new and real data.

We are already seeing some licensing deals. What is most interesting is that the models need more than finished content; raw, unedited content is perhaps even more valuable for model training. A local news organization captures tens to hundreds of hours of raw video each day that is reality-based and very valuable for training models. I would not be surprised at some point to see local TV stations make more money from licensing their content to others than they earn in direct revenue from shows.

I expect this trend in value to continue. It is likely the future will be more protective of content rightsholders. Whether at the individual creator level or major media companies, I expect there will be a series of legal precedents and regulations that protect against unlicensed access and other training that is not consented to.

What does all this mean now? First, it means that you should maintain confidence in the future of this industry, if you held any doubt. Second, it means that you should think carefully about curation approaches to content in whatever part of our space you are in. In a world where raw content may sometimes have more value than finished content, what do you choose to hold on to? This is a fascinating problem to think through and very situationally dependent.

Consider what you want to do to protect against bad actors who may access your data. Much of your finished content is visible in one way or another to the world and, unlike direct piracy, its use for training is far harder to detect. In addition to taking all the cyber and content protection steps you would normally want to take, consider the potential of “poisoning” your public-facing versions of content to hurt any unauthorized training, while maintaining a separate repository of training data that authorized users can access.

AI-model poisoning is a fascinating topic worthy of its own column, but fundamentally it involves using techniques akin to what we currently do with invisible watermarks to corrupt the data in ways that genuinely fool a model regarding what it is “seeing.”

Watch Out for Poisoning

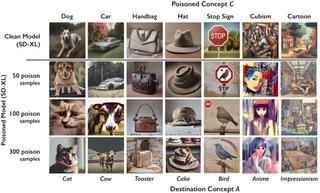

The best example of this in our space is a program called “Nightshade,” developed at the University of Chicago. It can corrupt your imaging data in ways that are invisible or very subtle to humans, yet powerful enough to make the AI think it is seeing a dog and not a cat, thus confusing the model when it is asked to generate a “cat.”

Note that bad actors can use the same techniques to poison your models if you train on poisoned data. A recent paper showed how these techniques could be used to make AI respond with false medical information, so it would be wise to familiarize yourself with this potential vulnerability.

“Content is king” is certainly not the most original message, but with the advent of AI it is time to remind ourselves that this will always remain true. Focus on making great and engaging content and the rest will take care of itself.

With more than three decades of M&E experience under his belt, John Footen is a managing director who leads Deloitte Consulting LLP’s media technology and operations practice. He has been a chairperson for various industry technology committees. He earned the SMPTE Medal for Workflow Systems and became a Fellow of SMPTE. He also co-authored a book, called “The Service-Oriented Media Enterprise: SOA, BPM, and Web Services in Professional Media Systems,” and has published many articles in industry publications.