High-Availability Storage System Considerations

In high-performance media and entertainment environments, much of the storage utilized may require high-availability functionality

Storage in high-profile or mission-critical environments must deliver continuous, uncompromised performance with at least 99% uptime (i.e., less than 1% downtime), regardless of other operational conditions. This is achieved in part by creating, and maintaining, a set of conditions that support the storage and all its associated parameters.
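To put the uptime figure in concrete terms, the short Python sketch below converts an availability percentage into the downtime it permits per year. It assumes a 365-day (8,760-hour) year. By this arithmetic, 99% uptime still allows about 87.6 hours (roughly 3.65 days) of downtime a year, and each additional "nine" cuts that allowance by a factor of ten.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 h; leap years ignored for simplicity


def max_downtime_hours(availability_pct: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the downtime (in hours) permitted over period_hours at the given availability."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_hours * (1.0 - availability_pct / 100.0)


if __name__ == "__main__":
    for pct in (99.0, 99.9, 99.99):
        hours = max_downtime_hours(pct)
        print(f"{pct:>6}% uptime -> {hours:7.2f} h/year ({hours * 60:8.1f} min)")
```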

A storage system that is continuously operational, highly resilient to faults and self-correcting, with no single point of failure (SPOF), is described as having “high availability” and is otherwise known as “HA-storage.”

HA-storage is just one component of a high-availability system, as this article explains.

CATEGORIES AND COMPLIANCE

Business conditions often dictate whether storage with the capabilities described above is needed, and at what level.

HA-storage offerings vary widely in storage type, capabilities, redundancy, resiliency, system offerings, scalability and, of course, pricing. Size and capabilities place HA-storage into various categories, such as those specified for small- to medium-sized business (SMB) operations.

In high-performance media and entertainment (M&E) environments, much of the storage utilized may require high-availability functionality. Applications including news content ingest, graphics, live production and editorial workspaces demand “instantly available” data from storage systems that add no perceptible latency when placing or retrieving data.

Minimal latency in HA-storage implies there is no perceivable difference between live or real-time storage applications and off-line or non-real-time operations. Effectively, HA-storage appears instantaneous, with no observable latency in placing, retrieving or acting on the data stored on those systems.

CONTINUOUS DUTY REQUIREMENTS

HA-storage systems support functions such as host software-based solutions running on clustered servers under continuous duty. By comparison, a motor with a “continuous duty” specification is capable of continuous operation at its rated power while its temperature rise stays within the limits of its insulation class. Such motor specifications are described in IEC 60034-1:2010. HA-storage carries a similar continuous-duty expectation, although the specific performance metrics differ between HDD and SSD devices.

For hard disk drive (HDD) storage, the counterpart of continuous duty is drive rotation, typically cited as constant angular velocity (CAV), meaning the drive spins at a fixed rate (RPM). High-performance hard disk drives have performance characteristics that are grouped into two categories: access time and data transfer time (i.e., “rate”). For HA-storage, this specification is only a portion of the overall performance expectation.
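The access-time category can be made concrete with standard arithmetic: average rotational latency is the time for half a revolution, and access time is average seek time plus that latency; transfer time is request size divided by transfer rate. The Python sketch below computes these values. The seek time, transfer rate and request size used in the example are hypothetical figures, not specifications of any particular drive. At 15,000 RPM, for instance, half a revolution takes 2 ms.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: on average the target sector is half a revolution away."""
    ms_per_revolution = 60_000.0 / rpm  # one minute = 60,000 ms
    return ms_per_revolution / 2.0


def access_time_ms(rpm: float, avg_seek_ms: float) -> float:
    """Access time = average seek time + average rotational latency."""
    return avg_seek_ms + avg_rotational_latency_ms(rpm)


def service_time_ms(rpm: float, avg_seek_ms: float,
                    transfer_rate_mb_s: float, request_mb: float) -> float:
    """Time to serve one request: access time plus data transfer time."""
    transfer_ms = request_mb / transfer_rate_mb_s * 1000.0
    return access_time_ms(rpm, avg_seek_ms) + transfer_ms


if __name__ == "__main__":
    # Hypothetical drive figures, for illustration only.
    for rpm in (7_200, 10_000, 15_000):
        print(f"{rpm:>6} RPM: avg rotational latency {avg_rotational_latency_ms(rpm):.2f} ms")
    print(f"1 MB read, 15k RPM, 3.5 ms seek, 200 MB/s: {service_time_ms(15_000, 3.5, 200.0, 1.0):.1f} ms")
```

A higher spindle speed shortens only the rotational part of access time; seek time and transfer rate are separate characteristics.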

SSD HIGH-AVAILABILITY STORAGE

For solid state drives (SSD), that is, drives without rotating magnetic mechanisms, the HA-performance dimension takes on a different perspective. Relevant HA-system specifications and benefits include “triple replicated storage,” which independently and securely replicates the data on a per-gigabyte basis.
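One way to picture triple replication is to place three copies of each data block on three different nodes, so that losing any one node still leaves two intact copies. The Python sketch below uses rendezvous (highest-random-weight) hashing to choose those nodes. That technique, the node names and the capacity figure are illustrative assumptions, not how any particular HA-SSD offering works (Ceph, for instance, uses its own CRUSH placement algorithm).

```python
import hashlib


def _weight(node: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (node, block) pair."""
    digest = hashlib.sha256(f"{node}|{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place_replicas(key: str, nodes: list, copies: int = 3) -> list:
    """Choose `copies` distinct nodes for a block via rendezvous (highest-random-weight) hashing."""
    if copies > len(nodes):
        raise ValueError("need at least as many nodes as copies")
    ranked = sorted(nodes, key=lambda node: _weight(node, key), reverse=True)
    return ranked[:copies]


def usable_capacity_tb(raw_tb: float, copies: int = 3) -> float:
    """Each byte is stored `copies` times, so usable capacity is raw capacity / copies."""
    return raw_tb / copies


if __name__ == "__main__":
    nodes = [f"node-{i}" for i in range(6)]  # hypothetical six-node cluster
    replicas = place_replicas("clip-0001/block-42", nodes)
    print("replicas on:", replicas)
    # If a node holding a copy fails, the survivors keep their copies and a
    # replacement third copy goes to the next-ranked node.
    survivors = [n for n in nodes if n != replicas[0]]
    print("after losing", replicas[0], "->", place_replicas("clip-0001/block-42", survivors))
    print("usable TB from 120 TB raw:", usable_capacity_tb(120))
```

The tradeoff is visible in the last line: three copies buy resilience at the cost of two-thirds of the raw capacity.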

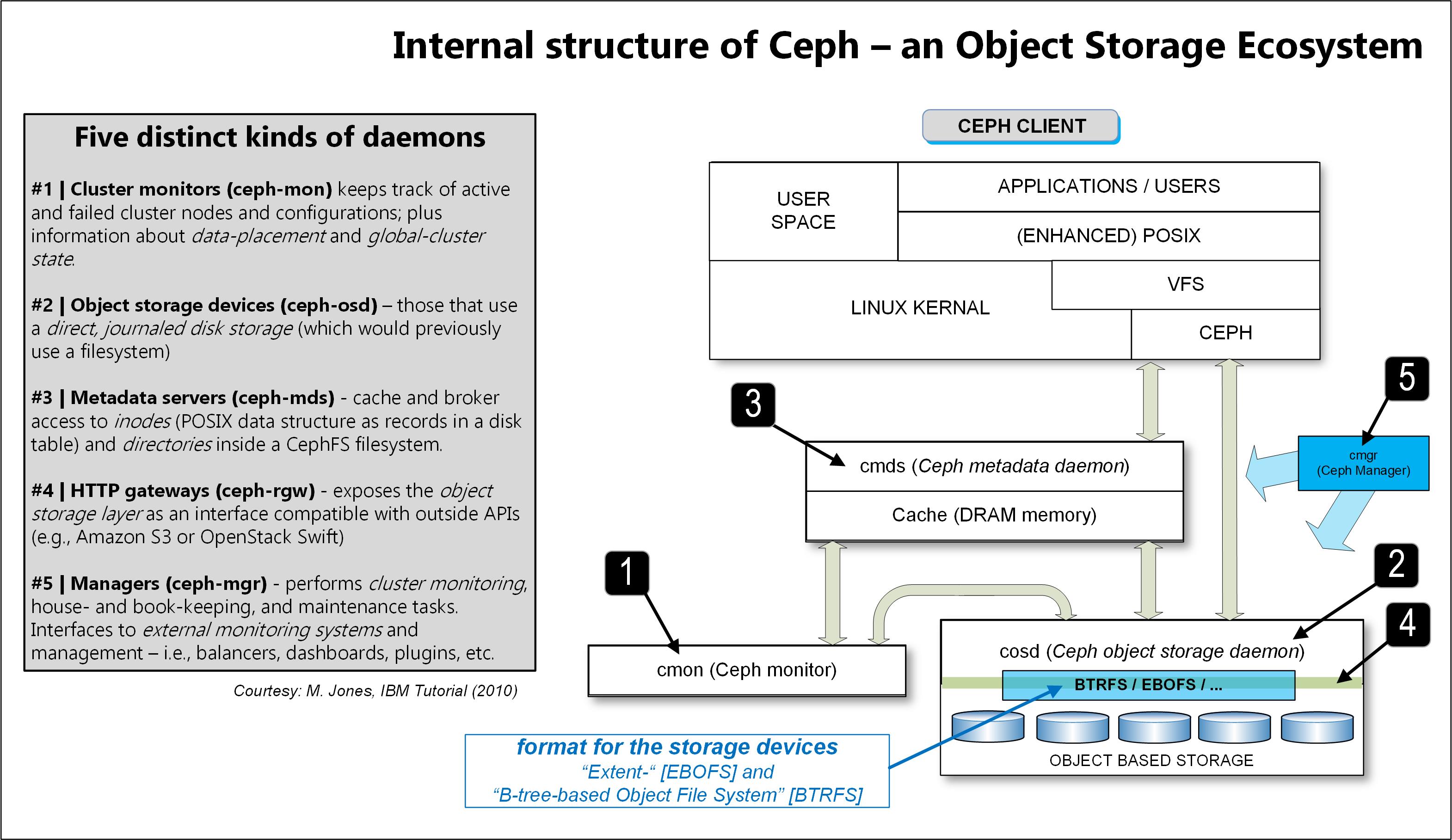

In a block storage application, the system may use Ceph, an open-source, software-defined, distributed object store and file system that provides high levels of performance, reliability and scalability. Ceph employs five distinct dæmons, programs that run in the background without any direct control from a user. (For more about Ceph, see Fig. 1.)

Additional HA-SSD benefits include self-healing capabilities, a 100% uptime SLA, “uncongested” server-storage connectivity and global support where applicable.

Providers of high-availability storage solutions build their architectures on multiple cluster-based infrastructures. In these systems, a cluster consists of multiple hardware servers (nodes) that collectively operate in high-availability modes. Such models exhibit zero downtime, including during maintenance or support, with instantaneous bypassing of failed nodes and instant hardware upgrades that are transparent to end users.
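This clustering behavior depends on detecting a failed node quickly and moving its work to a healthy one. The Python sketch below shows the idea with a hypothetical heartbeat monitor: each node reports in periodically, and if the active node misses its deadline, the next healthy node in priority order takes over. The class name, timeout value and explicit `now` timestamps are assumptions made for illustration; production cluster managers also fence the failed node so it cannot keep writing to shared storage.

```python
class FailoverMonitor:
    """Hypothetical heartbeat monitor for an active-passive cluster."""

    def __init__(self, nodes, timeout_s=3.0):
        if not nodes:
            raise ValueError("need at least one node")
        self.order = list(nodes)                              # promotion priority
        self.timeout_s = timeout_s
        self.last_seen = {name: 0.0 for name in self.order}   # node -> last heartbeat time (s)
        self.active = self.order[0]

    def heartbeat(self, name, now):
        """Record that `name` reported in at time `now` (seconds)."""
        self.last_seen[name] = now

    def is_healthy(self, name, now):
        return now - self.last_seen[name] <= self.timeout_s

    def check(self, now):
        """Return the node that should serve I/O at `now`, or None if no node is healthy."""
        if self.is_healthy(self.active, now):
            return self.active
        for name in self.order:
            if self.is_healthy(name, now):
                self.active = name  # promote the first healthy standby
                return name
        return None


if __name__ == "__main__":
    monitor = FailoverMonitor(["node-a", "node-b"], timeout_s=3.0)
    monitor.heartbeat("node-a", 1.0)
    monitor.heartbeat("node-b", 1.0)
    print(monitor.check(2.0))         # node-a is active and healthy
    monitor.heartbeat("node-b", 4.0)  # node-a has gone silent
    print(monitor.check(5.0))         # node-b is promoted
```

The timeout is the key design choice: shorter values fail over faster but risk promoting a standby when the active node is merely slow.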

MEETING CONDITIONS OF OPERATIONS

Additional command or secondary services (e.g., failover, data replication, snapshots), considered “conditions of operation,” are entirely appropriate in HA-storage and its associated computer storage architectures.

Network storage devices and appliances will often require HA-storage as a “condition of operation.” The additional features generally include a redundant network (i.e., a “RED” and a “BLUE” network topology) with multipathing drivers or associated storage adapters. In practice, such features may apply to entry-level and midrange storage systems, both of which may include HA systems. For large-scale systems, these conditions become mandatory.
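The multipathing idea can be sketched in a few lines of Python: each I/O request goes down the preferred path (here labeled RED) and, if that path fails, is retried on the next one (BLUE). This is a hypothetical, failover-only illustration; the class and exception names are invented, and real multipathing drivers (such as Linux dm-multipath) can also load-balance across paths and re-probe failed paths so they can be restored.

```python
class PathDown(Exception):
    """Raised when a path's link, switch or adapter is unavailable."""


class MultipathDevice:
    """Hypothetical failover-only multipath device: preferred path first, then the next."""

    def __init__(self, paths):
        self.paths = list(paths)  # ordered (name, send_function) pairs, e.g. RED then BLUE
        self.failed = set()       # names of paths currently marked down

    def submit(self, request):
        """Send `request` down the first working path; return (path_name, result)."""
        for name, send in self.paths:
            if name in self.failed:
                continue
            try:
                return name, send(request)
            except PathDown:
                self.failed.add(name)  # a real driver would periodically re-probe this path
        raise OSError("all paths to storage are down")


if __name__ == "__main__":
    def red_path(request):
        raise PathDown("RED switch unreachable")  # simulate a failed RED network

    def blue_path(request):
        return f"completed {request}"

    device = MultipathDevice([("RED", red_path), ("BLUE", blue_path)])
    print(device.submit("read block 7"))  # served over BLUE after RED fails
```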

PHYSICAL RESILIENCY

Typical storage systems employed in M&E solutions are nearly always built with redundant, hot-swappable power supplies, dual controllers, dual system drives (usually SSD) and multiple variable-speed cooling fans. Other well-recognized data-centric services may include support for snapshots or data deduplication. Some of these services depend upon the operational model, the operating system and the organization’s security requirements.
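Data deduplication can be illustrated with a minimal fixed-block scheme: split the data into equal-sized blocks, fingerprint each block with SHA-256, and store each unique block only once along with a "recipe" of fingerprints for rebuilding the original. The Python sketch below is a hypothetical illustration; commercial arrays may use more elaborate chunking, persistent fingerprint indexes and extra verification.

```python
import hashlib


def dedup_blocks(data: bytes, block_size: int = 4096):
    """Fixed-block deduplication: keep one copy of each unique block, keyed by its SHA-256."""
    store = {}   # fingerprint -> block contents (stored once)
    recipe = []  # ordered fingerprints needed to rebuild `data`
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)  # a duplicate block adds nothing new
        recipe.append(fingerprint)
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the original data from the unique blocks and the recipe."""
    return b"".join(store[fp] for fp in recipe)


if __name__ == "__main__":
    data = b"A" * 4096 * 3 + b"B" * 4096  # four blocks, only two of them unique
    store, recipe = dedup_blocks(data)
    print(len(recipe), "blocks written,", len(store), "stored")
    assert rebuild(store, recipe) == data
```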

The primary idea behind high availability is straightforward: “…eliminate any single point of failure (SPOF).” HA generally ensures that if a server node or a path to the storage system goes down (expected or unexpected), nearly 100 percent of all data requests can still be addressed and served.
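Why eliminating SPOFs matters can be shown with basic availability arithmetic. Components in series (all required) multiply their availabilities, so each one is a potential SPOF; duplicating a component means the group fails only when every copy fails. The Python sketch below assumes independent failures and uses an illustrative 99% availability for each of a server, a path and a storage system: in series they yield about 97.03%, while duplicating each one raises the result to about 99.97%.

```python
from math import prod


def series(*availabilities: float) -> float:
    """Every component is required, so each one is a potential single point of failure."""
    return prod(availabilities)


def parallel(availability: float, copies: int) -> float:
    """Redundant identical components: the group fails only if all copies fail (independence assumed)."""
    return 1.0 - (1.0 - availability) ** copies


if __name__ == "__main__":
    a = 0.99  # illustrative availability of one server, one path, one storage system
    single = series(a, a, a)
    redundant = series(parallel(a, 2), parallel(a, 2), parallel(a, 2))
    print(f"no redundancy:   {single:.4%}")
    print(f"dual everything: {redundant:.4%}")
```

In practice failures are rarely fully independent (shared power, shared firmware), so these figures are upper bounds rather than guarantees.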

Multiple layers of a storage deployment may be independently or collectively configured for high availability. One of these elements is the management of logical volumes (LV), which may or may not already be part of a particular suite of storage solutions and capabilities. Varying degrees of system management also become value propositions when selecting one provider over another.

DATA CORRUPTION PREVENTION

In a “logical volume” HA configuration, the system is generally built as an active-passive model, in which only one server accesses the shared storage at a time. This is generally considered the ideal approach, because some advanced features, such as snapshots and data deduplication, may not be supported in an active-active environment, i.e., when more than one server accesses the same shared storage. In this active-passive model, a dæmon prevents corruption of both the logical volume metadata and the logical volume itself.
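The active-passive rule can be sketched as an exclusive lock plus a "fencing token." Only one server may hold the lock, every change of ownership increments the token, and the volume rejects writes carrying an older token, so a server that was failed over but is still running cannot corrupt the data. This Python sketch is a generic illustration of that pattern under hypothetical class names. It is not the specific dæmon or locking mechanism any particular logical volume manager uses.

```python
class ExclusiveVolumeLock:
    """Hypothetical lock granting a shared volume to one server at a time (active-passive)."""

    def __init__(self):
        self.owner = None
        self.token = 0  # fencing token; increases on every change of ownership

    def acquire(self, server):
        if self.owner not in (None, server):
            raise PermissionError(f"volume is already active on {self.owner}")
        if self.owner is None:
            self.owner = server
            self.token += 1
        return self.token

    def release(self, server):
        if self.owner == server:
            self.owner = None

    def take_over(self, server):
        """Called only after the old owner has been declared failed (and fenced)."""
        self.owner = server
        self.token += 1
        return self.token


class SharedVolume:
    """Storage side: rejects writes carrying a token older than the newest one seen."""

    def __init__(self):
        self.newest_token = 0
        self.blocks = {}

    def write(self, token, block, data):
        if token < self.newest_token:
            raise PermissionError("stale fencing token: write rejected")
        self.newest_token = token
        self.blocks[block] = data


if __name__ == "__main__":
    lock, volume = ExclusiveVolumeLock(), SharedVolume()
    t_a = lock.acquire("server-a")
    volume.write(t_a, 0, b"frame-001")
    t_b = lock.take_over("server-b")  # server-a declared failed
    volume.write(t_b, 0, b"frame-002")
    try:
        volume.write(t_a, 0, b"late write")  # server-a wakes up and tries to write
    except PermissionError as err:
        print(err)
```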

When multiple machines make continuous or overlapping changes to shared storage data sets, there is a risk of data corruption. Mitigating such issues affects high-availability factors, since the additional time spent coordinating data access may add latency, which in turn reduces performance. There are always tradeoffs in developing an HA-storage architecture. Linux is one environment where active-passive storage operations are commonly used to mitigate the risk of data corruption. Such configurations are typically applied to all the servers in a system to avoid other conflicts that might compromise either data integrity or system performance.

HIGH-AVAILABILITY SOLUTION COMPONENTS

When looking for high-availability options in small-to-medium business (SMB) applications, system architects should examine every component in the system, including applications, operating systems, physical and virtual machine (VM) servers, adapters and NICs, network (LAN and SAN) switches and their associated storage systems, for any potential single point of failure.
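Part of that component-by-component review can be automated. The Python sketch below models the I/O path as a hypothetical directed graph of components and flags every component whose removal disconnects the application server from the storage array. The component names and topology are invented for illustration, and the two endpoints are excluded, since their own redundancy (for example, dual controllers inside the array) has to be checked separately.

```python
from collections import deque


def reachable(graph, start, goal, removed=None):
    """Breadth-first search from start to goal, treating `removed` as failed."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, ()):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(graph, start, goal):
    """Components (other than the endpoints) whose loss cuts every path from start to goal."""
    components = set(graph) | {n for targets in graph.values() for n in targets}
    components -= {start, goal}
    return sorted(c for c in components if not reachable(graph, start, goal, removed=c))


if __name__ == "__main__":
    # Hypothetical topology: dual NICs and RED/BLUE switches, but only one array controller.
    topology = {
        "app-server": ["nic-1", "nic-2"],
        "nic-1": ["switch-red"],
        "nic-2": ["switch-blue"],
        "switch-red": ["controller-a"],
        "switch-blue": ["controller-a"],
        "controller-a": ["storage-array"],
    }
    print(single_points_of_failure(topology, "app-server", "storage-array"))  # ['controller-a']
```

Adding a second controller reachable from both switches removes the last SPOF in this toy model, and the function then returns an empty list.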

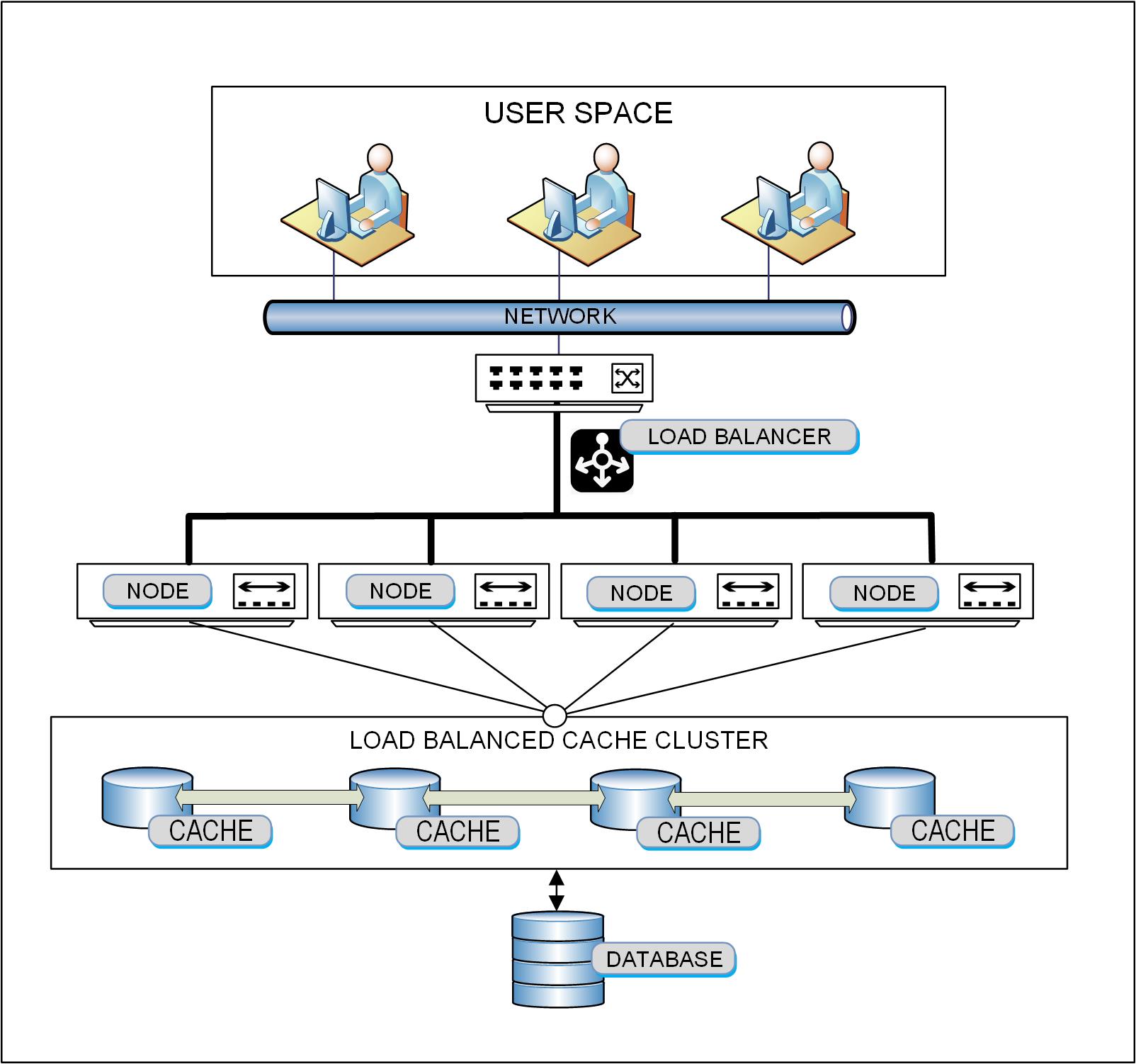

HA storage arrays should have at least two controllers to support continuous operation should one controller be lost. For disaster recovery (DR), HA-storage requires a complete secondary storage system to hold critical data and support business applications should the primary storage system go offline. Replicating data to another storage system at a different site is a common way to meet this requirement. Fig. 2 shows a simple but typical overview of a collection of nodes and associated HA-storage caches.
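Replication to a second site can be synchronous or asynchronous. The Python sketch below illustrates the asynchronous case under simplifying assumptions: in-memory dictionaries stand in for volumes, and all names are hypothetical. Writes complete on the primary and are journaled, then shipped to the DR copy in batches. If the primary site is lost before a batch ships, those writes are lost, which is the exposure a recovery point objective (RPO) is meant to bound. Synchronous replication avoids that loss but adds the remote round-trip to every write, another example of the latency tradeoffs discussed earlier.

```python
class AsyncReplicatedVolume:
    """Hypothetical asynchronous replication between a primary and a DR copy."""

    def __init__(self):
        self.primary = {}    # block -> data at the primary site
        self.secondary = {}  # block -> data at the DR site
        self.journal = []    # writes acknowledged on the primary but not yet shipped

    def write(self, block, data):
        self.primary[block] = data  # acknowledged once the primary has it
        self.journal.append((block, data))

    def ship(self):
        """Apply journaled writes at the DR site in their original order."""
        for block, data in self.journal:
            self.secondary[block] = data
        self.journal.clear()

    def fail_over(self):
        """Primary site lost: return the DR copy and the number of writes that never arrived."""
        lost = len(self.journal)
        self.journal.clear()
        return dict(self.secondary), lost


if __name__ == "__main__":
    vol = AsyncReplicatedVolume()
    vol.write(0, b"clip header")
    vol.write(1, b"frame 1 v1")
    vol.ship()                   # both writes reach the DR site
    vol.write(1, b"frame 1 v2")  # not yet shipped when the primary fails
    dr_copy, lost = vol.fail_over()
    print(dr_copy[1], lost)      # b'frame 1 v1' 1
```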

TOTAL SYSTEM PERFORMANCE

When opting for an HA solution, don’t treat the storage solution set as the only top-level priority. HA performance is achieved by examining the “total solution” and acting appropriately “across the board” to reach the highest levels of application performance and availability, especially at scale. Data storage components are just the start; keep the total equation in mind when evaluating high-availability systems and applications.

Karl Paulsen is Diversified’s chief technology officer and a frequent contributor to TV Tech in storage, IP and cloud technologies. Contact him at kpaulsen@diversifiedus.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.