Low Latency Distribution: What Does It Mean to Video Streamers?

Depending on the value of the video content, latency takes on varying degrees of importance

One of the early problems with live video streaming—first demonstrated at the 1997 NAB Show—was that the images hiccupped and sputtered due to constraints in bandwidth. Yet, even with those annoying imperfections, it was clear from day one that video had a future over the internet.

Now—25 years later—getting rid of those video interruptions over the net is close to reality. It hasn’t been an easy engineering problem to solve. In live video streaming, it is important to remember that the lower the latency, the less robust the video signal becomes. Low latency video can hiccup, or stop playing completely, after even the tiniest gap in the bandwidth of the stream.

Balancing Act

Since day one, live video streaming has been a balancing act—low latency versus stability of the content stream. (Though things have dramatically improved from the days when virtually every online video was shaky and dropped out frequently.)

Today, depending on the value of the video content, latency takes on varying degrees of importance. With the standard streamed church service or town council meeting, which ranks as the lowest-valued content, segment size can be reduced to 2–4 seconds with little cost or concern. In this class of video, latency can be reduced to between 6 and 12 seconds.
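The 6-to-12-second figure lines up with the segment size if one assumes that a player holds roughly three segments in its buffer before it starts playing, a common rule of thumb for segment-based streaming. A minimal sketch of that arithmetic follows; the three-segment buffer and the function name are illustrative assumptions, not figures from any particular player.

```python
def buffered_latency_seconds(segment_seconds: float, buffered_segments: int = 3) -> float:
    """Latency contributed by the player's segment buffer alone.

    buffered_segments=3 is an assumed rule of thumb, not a measured value.
    Encoding, CDN and network delays are ignored.
    """
    return segment_seconds * buffered_segments


for seg in (2, 4):
    latency = buffered_latency_seconds(seg)
    print(f"{seg}s segments -> about {latency:.0f}s behind live")
```

Under that assumption, shrinking the segment is the most direct lever on latency, which is why the later low-latency protocols focus on delivering even smaller units.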

More valuable, but pre-recorded, broadcast content averages about 5–6 seconds of latency, while real-time content such as OTT streaming of live sports, gambling or gaming demands special low-latency technology. New technologies with complex names are tackling the latency issue, and programming vendors are expected to charge a premium for the service.

Until recently, ultra-low latencies of less than a second were best achieved using UDP-based WebRTC (Web Real-Time Communication), a free and open-source protocol introduced by Google in 2011. It provides web browsers and mobile applications with real-time communication via application programming interfaces (APIs).

Until about 2020, the HTTP approach—a competing method—could not provide a low enough latency for interactivity, so WebRTC remained a popular solution.

Apple vs. Microsoft (Again)

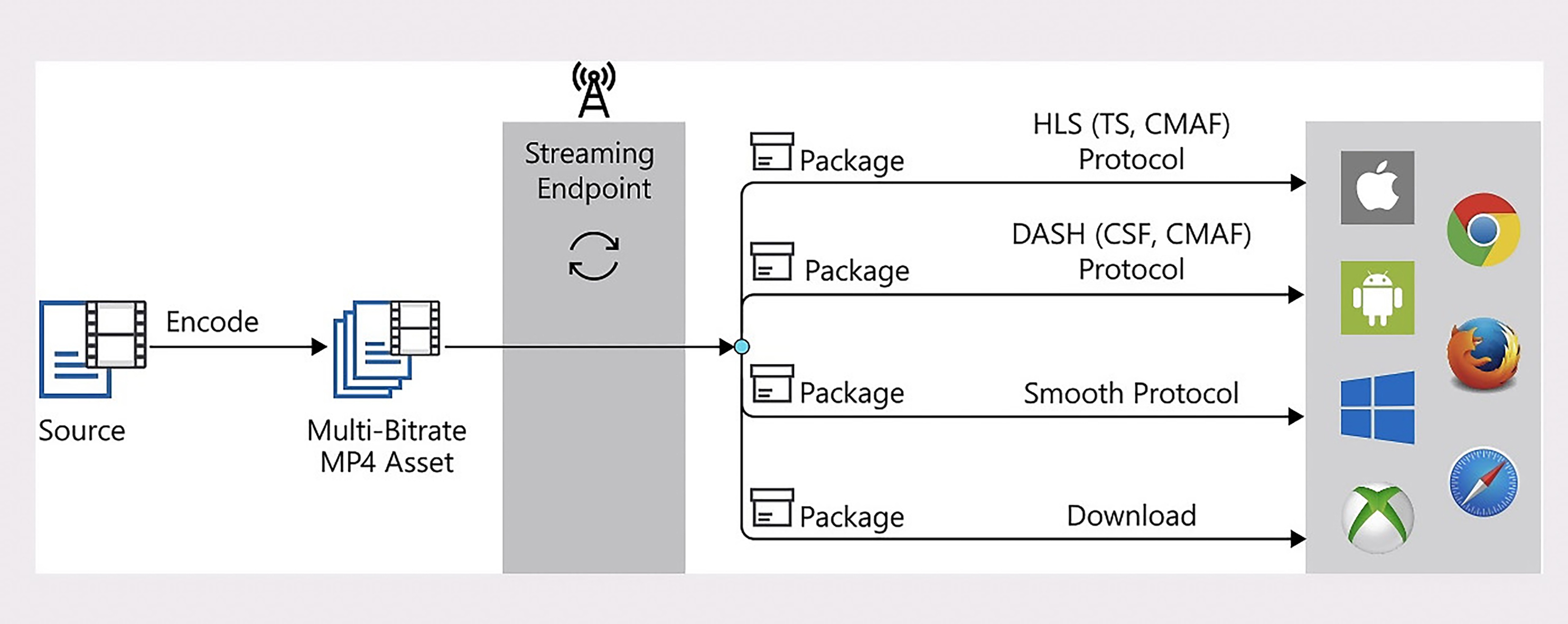

However, the cost and complexity of encoding and storing the same video file twice for the two main computer platforms—Apple and Microsoft—made processing and storage of content expensive. Two versions of the same video stream had to be made either in advance or instantly. With users accessing streams across iPhones, smart TVs, Xboxes and PCs, this expensive complexity became a major issue.

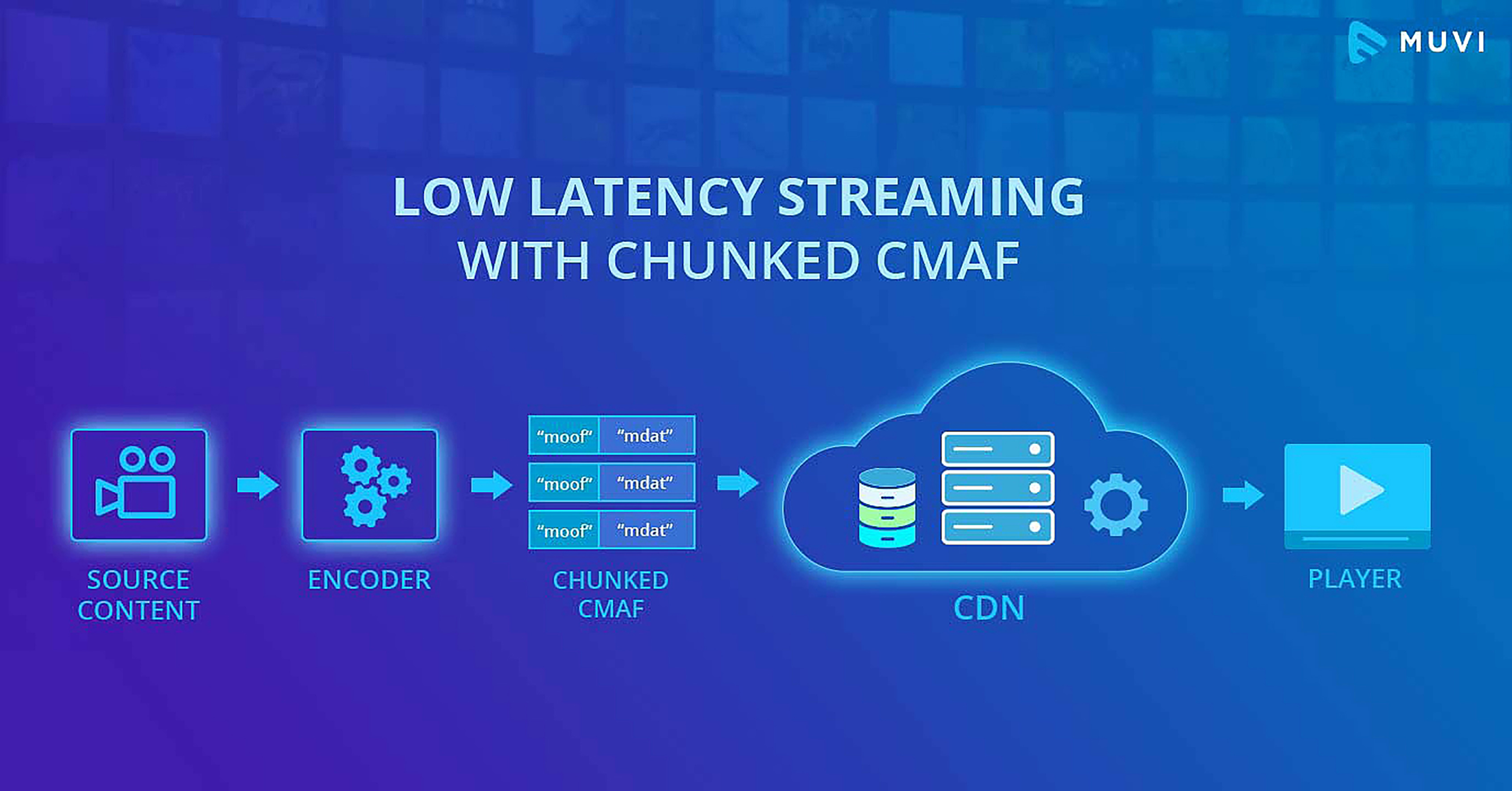

In 2016, Apple and Microsoft proposed that the Moving Picture Experts Group (MPEG) create a new uniform standard called “Common Media Application Format” (CMAF) to simplify online delivery of HTTP-based streaming media (Fig. 1). It was published in 2018.

Once the standard was created, manufacturers implemented it quickly. The benefits of encoding, packaging and caching a single container for video delivery were obvious. But CMAF did more than just reduce encoding complexity.

HTTP-based video delivery still lacked the real-time delivery options that viewers wanted. CMAF had to also improve latency. Now, Microsoft and Apple have agreed to reach audiences across the HLS and DASH protocols by using CMAF, a standardized transport container (Fig. 2).

CMAF represents a coordinated industry-wide effort to lower latency with chunked encoding and chunked transfer encoding. It also supports file encryption and digital rights management. Multiple, mutually incompatible DRM systems are supported, including FairPlay, PlayReady and Widevine.
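HTTP/1.1 chunked transfer encoding is what lets a server begin sending a segment before its total length is known. Each piece goes out prefixed by its size in hexadecimal, and a zero-length chunk marks the end of the body. The sketch below shows that wire framing only. The payloads are made-up placeholders rather than real CMAF data, and mapping one CMAF chunk to one HTTP chunk is a simplification.

```python
from typing import Iterable, Iterator


def frame_chunked(pieces: Iterable[bytes]) -> Iterator[bytes]:
    """Wrap each piece in HTTP/1.1 chunked transfer-encoding framing."""
    for piece in pieces:
        if not piece:
            continue  # a zero-length chunk would end the body early
        size_line = f"{len(piece):X}".encode("ascii")  # chunk size in hex
        yield size_line + b"\r\n" + piece + b"\r\n"
    yield b"0\r\n\r\n"  # terminating chunk, no trailers


# Placeholder payloads standing in for CMAF chunks (hypothetical data).
cmaf_chunks = [b"moof+mdat #1", b"moof+mdat #2"]
wire = b"".join(frame_chunked(cmaf_chunks))
print(wire)
```

Because the function is a generator, each framed chunk can be handed to the network as soon as the encoder produces it, rather than after the whole segment exists.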

Since its creation, CMAF has opened the way for a new generation of low-latency technology. These include Low Latency HLS (LL-HLS), Low Latency DASH (LL-DASH) and the High Efficiency Streaming Protocol (HESP). CMAF works hand-in-hand with these protocols.

LL-HLS, which was announced by Apple in 2019, became the most used technology for streams via HLS. There is a DVB standard for low-latency DASH and there is ongoing work to ensure interoperability for all DASH/CMAF low-latency applications.

Harmonic and Akamai showed low-latency CMAF demos with a latency of under 5 seconds at the 2017 NAB and IBC shows. Since then, most other encoder and player vendors have integrated the technologies into their products.

Market Competition

All low-latency systems work in the same basic way. Rather than waiting until a complete segment is encoded—which usually takes between six and ten seconds—the encoder creates much shorter “chunks” that are transferred to the content delivery network as soon as they are complete.
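To see why this matters, compare when encoded media can first leave the encoder under each approach. The sketch below is a simplified model that assumes real-time encoding and ignores network and player delays. The 6-second segment is the low end of the range quoted above, while the 0.5-second chunk duration and the function name are illustrative assumptions.

```python
def chunk_ready_times(segment_seconds: float, chunk_seconds: float) -> list[float]:
    """Seconds after the segment starts at which each chunk can be shipped.

    Assumes real-time encoding: a chunk is complete once its full
    duration has been captured and encoded.
    """
    chunk_count = round(segment_seconds / chunk_seconds)
    return [chunk_seconds * (i + 1) for i in range(chunk_count)]


segment_seconds = 6.0  # low end of the six-to-ten-second range
chunk_seconds = 0.5    # assumed chunk duration, for illustration only

times = chunk_ready_times(segment_seconds, chunk_seconds)
print(f"whole segment: first bytes leave after {segment_seconds}s")
print(f"chunked:       first bytes leave after {times[0]}s, "
      f"then every {chunk_seconds}s ({len(times)} chunks)")
```

In this model the total amount of media is unchanged; only the wait before the first bytes move downstream shrinks, from the full segment duration to a single chunk duration.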

In a new forecast, research organization Rethink TV predicts that low-latency protocols will quickly find a place in live sports delivery. As time goes on, all content, not just sports, will make use of the protocols, the researcher predicted.

Rethink TV anticipates low-latency delivery will be a very competitive market in the next few years. These low-latency distribution technologies, which can be offered as a premium service to subscribers, are key for a number of competing video technology vendors and users. Knowing how to optimize both centralized and edge infrastructure will be pivotal in competing with rivals, the research found.

As most devices are now totally compatible with CMAF, Rethink TV predicts most will be able to receive video streams packaged in LL-HLS and LL-DASH formats. Competitive forces will drive adoption.

For owners of content, rights holders and streaming services, the reduction of latency for live video can differentiate them from their rivals by dramatically improving the reliability of video service.

This also applies to broadcasters that operate both traditional pay-TV distribution infrastructure and an OTT service. Those broadcasters need to get their latencies down to something nearer their traditional pay-TV competitors.

Reducing OTT latency is essential, but for now only for live content. Low-latency delivery will probably remain in the sports and betting arena for the time being. However, all on-demand video programming will eventually migrate to a low-latency technology, probably before the end of this decade.

Frank Beacham is an independent writer based in New York.