Planning for New Architectures: Is Workflow Optimization Worth the Trouble?

The M&E industry is experiencing a seismic shift from legacy architecture to a world of virtualized software and applications

The broadcast industry is 100 years old: the BBC was formed Oct. 18, 1922 and made its first radio broadcasts the following month, while in the United States, President Warren G. Harding introduced the first radio in the White House. Several decades later television arrived.

What characterized the industry then was that the technology was hard to engineer, with most equipment designed and built specifically for radio or television. That meant there were no real economies of scale, so the barrier to entry was very high.

It also meant that workflows were constrained to what the equipment—and your particular architecture—could do. Add to that limited access to the content, with much of it on film and the rest on very expensive, hard-to-copy tape, and processes were sequential and time-consuming.

Going Virtual

Today we see a seismic shift from that legacy architecture to a world of virtualized software and applications. Those can be run on standard computers, so processing could be housed in your machine room, shared with the corporate data center, or outsourced to the cloud.

The ability to host professional, broadcast infrastructures on COTS hardware is simply a result of Moore’s Law (not to mention the ingenuity of the chip industry in figuring out workarounds), meaning that affordable processing now has sufficient grunt to do what we used to need expensive bespoke hardware to do.

This has a second massive impact on the media industry: Where once the barrier to entry was high, now more or less anyone can create and “broadcast” media. Most of us carry 4K Ultra HD cameras around in our pockets every day, a function that comes free with our phones. And more than 500 hours of content is uploaded to YouTube every minute (yes, those units are the right way around).

To stay competitive, broadcasters have to re-engineer their businesses to provide OTT delivery and a mass of additional online content, while competing with new entrants to the market specializing in streaming. And everyone from sports teams to houses of worship is cottoning on to the fact that they can create communities using streamed video.

Broadcasters start with established workflows, developed to deliver content to one or a handful of linear channels, or to one or a handful of distribution end points, through the technological limitations of their installed architectures. The opportunity now exists to retire the heavy iron, build the workflows they want using virtualized software, and potentially transform their businesses.

Enter Microservices

It’s worth thinking about what virtualization means. Where once we had industry-specific black boxes that did big tasks—graphics inserters or transcoders, for example—now we have processing built on microservices.

A microservice is often defined as the smallest viable unit of processing: it carries out one specific task but does it with the utmost efficiency. Microservices are brought together to do a specific job or workflow and, once that job is complete, they are released, and the processing power can go off and be applied to something else.
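As a rough illustration of that lifecycle, the sketch below composes three small single-purpose functions into one job, leases processing capacity for the duration, and hands it back when the job ends. Everything in it is hypothetical: the `ComputePool` class, the slot counts and the stand-in services `transcode`, `add_graphics` and `package` are assumptions made for the example, not a description of any particular product or orchestration platform.

```python
from contextlib import contextmanager
from typing import Callable, Iterator


class ComputePool:
    """Hypothetical pool of processing slots shared by every workflow."""

    def __init__(self, slots: int) -> None:
        self.free = slots

    @contextmanager
    def lease(self, n: int) -> Iterator[None]:
        """Reserve n slots for one job and always give them back afterwards."""
        if n > self.free:
            raise RuntimeError("not enough free slots for this job")
        self.free -= n
        try:
            yield
        finally:
            self.free += n  # capacity returns to the pool when the job ends


# Stand-in microservices: each one performs a single, narrow task.
def transcode(asset: dict) -> dict:
    return {**asset, "format": "h264"}


def add_graphics(asset: dict) -> dict:
    return {**asset, "graphics": True}


def package(asset: dict) -> dict:
    return {**asset, "packaged": True}


def run_workflow(pool: ComputePool,
                 steps: list[Callable[[dict], dict]],
                 asset: dict) -> dict:
    """Bring the services together for one job, then release the capacity."""
    with pool.lease(len(steps)):
        for step in steps:
            asset = step(asset)
    return asset


pool = ComputePool(slots=4)
result = run_workflow(pool, [transcode, add_graphics, package], {"id": "promo-001"})
print(result)
print(pool.free)  # all four slots are free again for the next job
```

In a real deployment the leasing would be handled by an orchestrator and the services would run as separate processes or containers, but the shape is the same: capacity is claimed per job rather than tied up permanently in a dedicated box.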

This opens up a tremendous opportunity to completely redefine or optimize all your workflows by using microservices to make the most efficient use of the processing power available (or reduce your spend on processing power to what you actually need). It allows you to make a dramatic move from largely manual processes to highly automated systems that will free up experienced staff to develop new services to generate further growth.

As a consultant privileged to talk to broadcasters across all continents, I have to say that, in our experience, many have decided to simply lift and shift their workflows from legacy hardware to software architectures, with the intent to optimize as part of the “second phase.” And as we all know, a second project phase is often re-prioritized to accommodate new initiatives, so companies often miss out on the real benefits of the move to virtualized systems: the ability to automate and reduce resources, and to simplify, optimize and deliver new efficiencies.

Taking the Big Step

In answer to the question in the headline, yes, workflow optimization is definitely worth the trouble. Yet many broadcasters are still treading cautiously, in part because the legacy processes are so deeply ingrained, but also because it is difficult to evaluate and implement new opportunities and new ways of working without interrupting existing operations.

Typical of this hesitation was the response of broadcasters and content owners to the cloud. Early advocates tried to convince us that it would transform the economics of broadcasting, yet experience has shown that, without a good plan, costs in the cloud are comparable or even higher, particularly for smaller businesses that do not have the buying clout of the “big boys.”

Those who have taken a more rigorous view have found that cloud storage is the way into new workflows. It makes sense to keep the content close to where you will need it, so successful companies are developing systems with multiple cloud storage environments. And, once the content is in the cloud, the arithmetic around cloud processing becomes more favorable, particularly as major cloud operators offer their own suites of sophisticated media tools.

Optimizing workflows starts with business analysis: what are the goals; what will generate the revenues; what should be kept in house and what should be outsourced; what can be automated; and what opportunities will be opened up by releasing the staff?

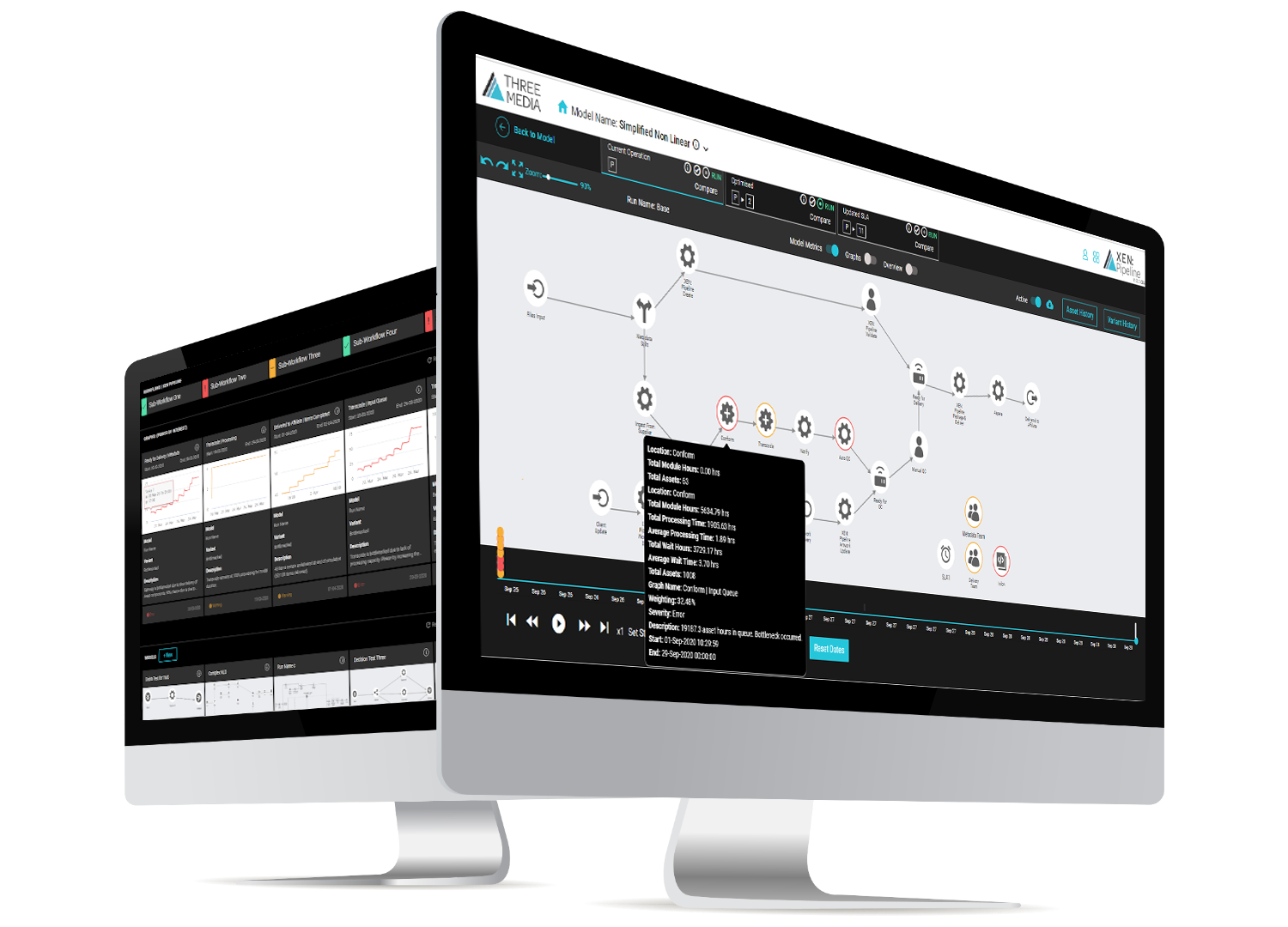

Armed with the list of goals and constraints, workflows can be mapped to see how they can be optimized. We have had significant success with a technique from the IT industry called “process mining,” which allows us to create a complete model of the organization, from top-level goals to each individual point in the technology platform, using real and simulated data to provide the multi-layered analysis.

By applying this model to process data sets, a business or team can instantly see where the bottlenecks lie. More importantly, they can run through the whole array of “what ifs” to see where they have too many or too few resources (human or technology), how they can redeploy to optimize workflows, how to justify investments directly against potential returns, and how to chart a course through their future strategy and industry initiatives.
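To give a flavor of the simplest kind of bottleneck analysis, the sketch below takes a tiny event log and measures how long jobs wait between one activity finishing and the next one starting. It is a deliberately reduced illustration, not the modeling approach described above: the log entries, activity names and timings are invented for the example, and real process mining tools work from far larger logs and build much richer models.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical event log: (job id, activity, start minute, end minute).
EVENT_LOG = [
    ("job1", "ingest", 0, 10),
    ("job1", "qc", 40, 50),
    ("job1", "transcode", 55, 70),
    ("job2", "ingest", 5, 15),
    ("job2", "qc", 65, 80),
    ("job2", "transcode", 82, 95),
]


def handoff_waits(log):
    """Mean idle time between consecutive activities, across all jobs."""
    by_job = defaultdict(list)
    for job, activity, start, end in log:
        by_job[job].append((start, end, activity))

    waits = defaultdict(list)
    for events in by_job.values():
        events.sort()  # order each job's activities by start time
        for (_, prev_end, prev_act), (next_start, _, next_act) in zip(events, events[1:]):
            waits[(prev_act, next_act)].append(next_start - prev_end)

    return {handoff: mean(values) for handoff, values in waits.items()}


def bottleneck(log):
    """Return the handoff where jobs sit idle the longest on average."""
    waits = handoff_waits(log)
    return max(waits, key=waits.get)


waits = handoff_waits(EVENT_LOG)
for handoff, minutes in waits.items():
    print(handoff, minutes)
print("bottleneck:", bottleneck(EVENT_LOG))
```

With this invented data, jobs spend far longer waiting for quality control than for transcoding, which is the kind of finding that prompts a “what if”: rerun the same calculation on a simulated log with a second QC operator or an automated QC step and compare the waits.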

To be clear: workflow mapping and optimization is a major step. But I would argue that, without it, you will never seize all the advantages that virtualized architectures offer, and you will never create the operational efficiencies that are vital to compete today and remain relevant in the future.

Craig Bury is CTO of Three Media