Selecting a Storage Architecture

Style and foundation are key to performance and success

Why is a storage architecture an important component in the organization’s overall media composition and delivery platform? Simply stated, the storage architecture is the foundation that sets the prioritization of an organization’s data management, performance, metering and content protection strategy. The concept is relevant whether for transactional processes or unstructured media-centric content creation and delivery.

Storage management architecture is one of the more important areas, defining both the ease of operations and the success of those operations from a delivery perspective. Additionally, unprotected or improperly structured architectures can end in disaster, and it is not unusual to find one or both of these flaws in some of today’s content creation environments.

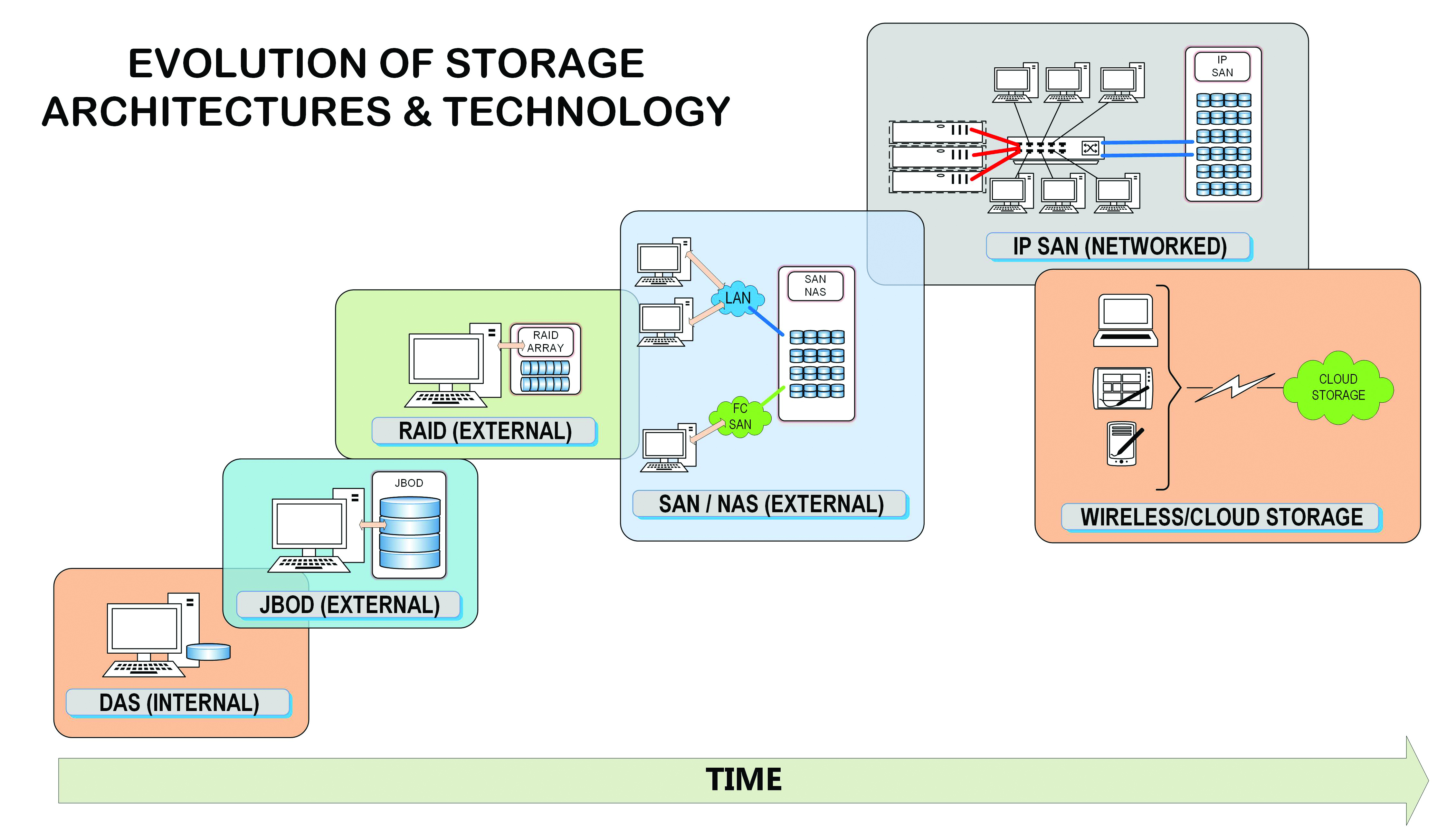

Storage technologies have steadily evolved over the past 30-plus years, as depicted in the accompanying evolution timeline in Fig. 1. Today, architectural styles for storage may be composed of solutions provided by storage service providers, storage component vendors and dozens of experienced (and some not so experienced) consultants or solutions providers. Given the system complexities, home-brewed storage at an enterprise level just isn’t very practical any longer. Selecting an appropriate style or foundation for your storage architecture becomes key to its performance and the continued success of your operations.

GETTING THAT CONTENT DELIVERED

As an example, in a broadcast television news organization the time to get a story “on the air” can make or break that program from an audience attention span and ratings perspective. Everything in a “breaking news” headline is based on who gets to see that story first. Coupled with a story’s promotion is the number of delivery platforms on which the story can be released in the shortest time. No longer does a news and information organization depend solely on its over-the-air or cable-channel distribution. News depends upon a multitude of delivery forms to get the message (and its advertising) to the user.

Delivery requires speed. Data generated from a single instance of a story must be conformed to many additional form factors: web delivery, social media, streaming services for phone and mobile devices, cloud storage (as applications) for other users or subscribers and, of course, the primary service that bears the organization’s name and logo.

Each of the elements for this delivery will depend on a reliable and effective storage solution and its delivery methods. Any single failure can create a cascading effect that is unpredictable due to factors such as time, impact, audience attention and more.

How the organization picks its storage architecture depends highly upon how, when and where the content must be delivered—and who needs to get that content first. Such content delivery channels (paths) may be internal: that is, editing, production, post production, immediate on-air studio playout or next-time/repeat delivery playout. For external delivery that need may be for users who only see the content in a linear format; or for nonlinear services that repurpose and re-present that content; and many other varying avenues of consumption.

COMPETING PROCESSES

Copying, replicating, reformatting, transcoding, asset management delivery and other components are often utilized in both sequential and random processes. Such processes may require competing storage architectures along with a storage delivery network that can adapt to sudden changes, bursts in services and an unusually high level of continual stress that is seldom predictable.

Thus, picking the “lowest common denominator” for the storage solution isn’t the best, most efficient or most advantageous choice. Storage architects who configure these platforms need to understand the requirements and develop a solution (or set of solutions) that best services each of these needs in a flexible and adaptable environment.

As an overview, storage architecture styles may include “takeoffs” or examples of the following kinds or levels of storage systems.

TIGHTLY OR LOOSELY COUPLED

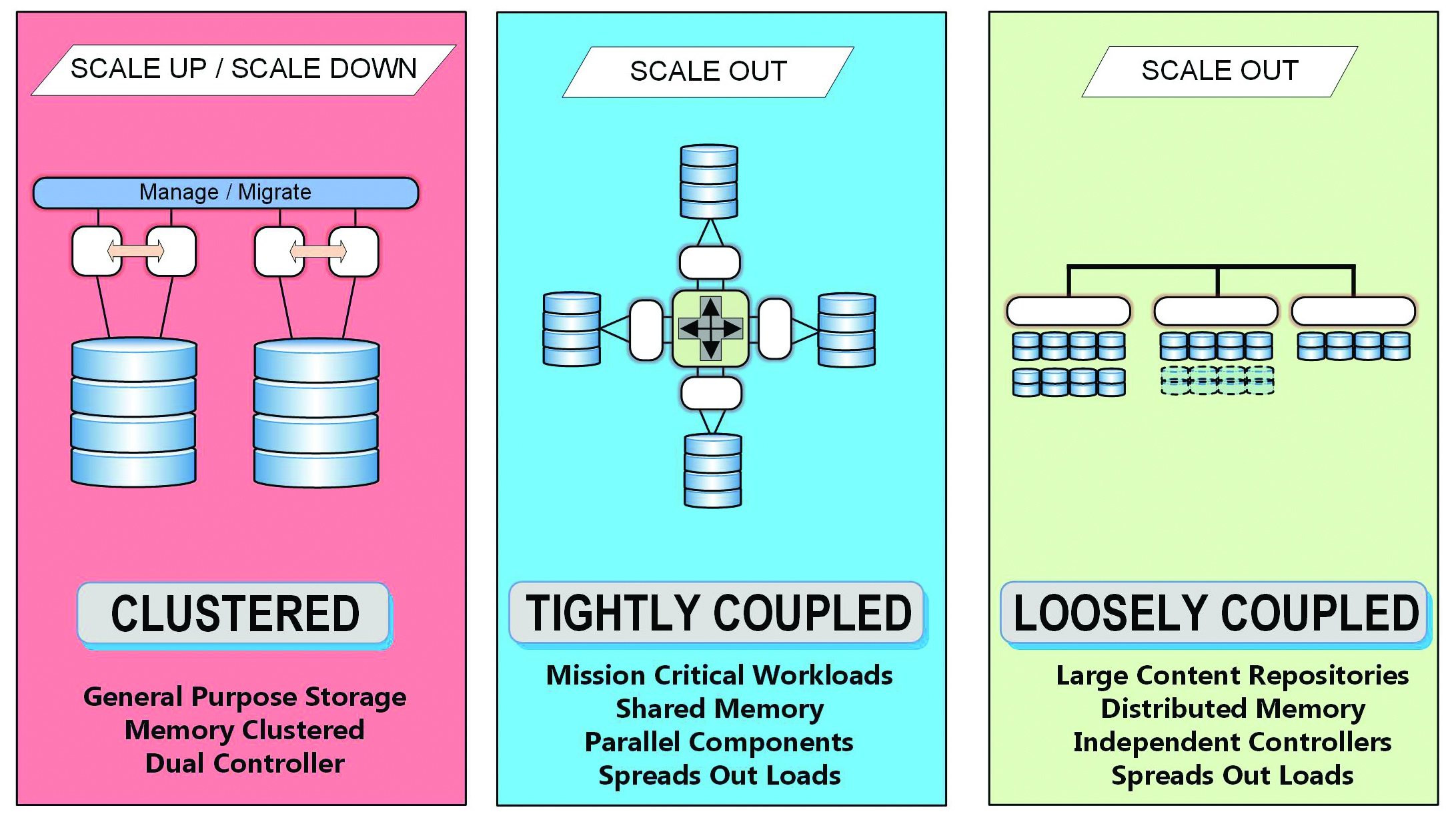

A loosely coupled system does not share memory among its nodes (Fig. 2 Green). In this system, data is likely distributed among many nodes, which may involve a large amount of inter-node communication during data writes. Such a system is simple to use and well suited to distributed reads, where data can reside in multiple places, but it can be expensive when measured in system cycles.

Transactional (non-media-centric) data can be affected by factors such as hidden write locations, which are effectively low latency and arise from the type of storage used, such as NVRAM or SSDs. In this model, data may be kept in more than one location, allowing multiple nodes to hold it and speeding up access over whichever paths are open, even if only momentarily.
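The write cost and read flexibility described above can be sketched in a few lines of Python. This is only an illustrative model, not a description of any vendor's product: the `Node` and `LooselyCoupledCluster` names, the `replicas` parameter and the simple placement rule are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A storage node with private memory (nothing is shared between nodes)."""
    name: str
    store: dict = field(default_factory=dict)


class LooselyCoupledCluster:
    """Hypothetical model: every write is copied to `replicas` nodes."""

    def __init__(self, node_names, replicas=2):
        self.nodes = [Node(n) for n in node_names]
        self.replicas = replicas
        self.messages = 0  # inter-node messages sent so far

    def write(self, key, value):
        # Assumed placement rule: pick a starting node from the key bytes.
        start = sum(key.encode("utf-8")) % len(self.nodes)
        for i in range(self.replicas):
            node = self.nodes[(start + i) % len(self.nodes)]
            node.store[key] = value
            if i > 0:
                # The first copy comes from the client; every additional
                # copy costs one inter-node message.
                self.messages += 1

    def read(self, key, busy=()):
        # Any node holding a copy can serve the read, so busy nodes are skipped.
        for node in self.nodes:
            if key in node.store and node.name not in busy:
                return node.name, node.store[key]
        raise KeyError(key)
```

With three nodes and two replicas, a single `write` sends one inter-node message, and a `read` can be served by either holder when the other is marked busy. Raising `replicas` widens the read options at the price of more messages per write, which is the trade-off the text describes.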

Conversely, in a tightly coupled architecture (Fig. 2 Blue), data is distributed between multiple nodes, often configured to run in parallel and orchestrated by several high-availability controllers configured in a grid. Here, inter-node communication is necessary to keep efficiency high, latency low and processing running at peak capacity. Often such systems are engineered so that I/O paths are symmetric among all associated nodes.

In similar fashion to how enterprise class network switches function, storage system failures (drives, controllers, memory) are quickly identified, and alternative (prescribed) paths are implemented almost instantly. The effect goes unnoticed and operations continue without impact.
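As a rough illustration of switching to a prescribed alternative path, the sketch below keeps an ordered list of paths and always hands back the first healthy one. The `PathSelector` class and the controller names are hypothetical; real systems detect failures and reroute in firmware or in the storage fabric rather than in application code.

```python
class PathSelector:
    """Hypothetical selector over an ordered list of prescribed I/O paths."""

    def __init__(self, paths):
        self.paths = list(paths)  # ordered by preference
        self.failed = set()       # paths currently known to be down

    def mark_failed(self, path):
        self.failed.add(path)

    def mark_repaired(self, path):
        self.failed.discard(path)

    def active(self):
        # Return the most preferred path that has not been marked failed.
        for path in self.paths:
            if path not in self.failed:
                return path
        raise RuntimeError("no healthy path to storage")
```

Because callers only ever ask for `active()`, a failure on the preferred path changes the answer without changing the caller, which is one simple way to picture why the switchover can go unnoticed.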

MULTI-TIERED AND CLUSTERED

In this model, applications (such as HTTP-based calls) will manage and make appropriate use of separate, almost layered tiers for specific delivery platforms. Web, application, database (asset management) and processing (transcoding or packaging) servers already have the storage access pathing embedded in their code bases. Calls to central storage are routine, secure and redundant. Should any one tier be compromised, the other paths remain protected with the aid of network security protocols, firewalls and even managed, physical switch segregation.

In the clustered environment, memory is not shared between nodes and data will “stay” behind a single compute node (Fig. 2 Red). I/O paths in a clustered architecture model may have varying layers. Some may employ an umbrella-like or federation model, allowing the entire system to scale out as necessary. I/O is redirected until the request reaches the node that holds the data set needed for the particular task. Redirection code manages these operations, with the potential drawback of added latency while the correct path to the selected data is established.
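The redirection behavior can be pictured with a small Python sketch in which each data set lives behind exactly one node and a request hops from node to node until it arrives there. The `ClusterNode` class, the `fetch` function and the `max_hops` limit are assumptions for illustration; the hop count stands in for the added latency.

```python
class ClusterNode:
    """A compute node; its data sets are reachable only through it."""

    def __init__(self, name):
        self.name = name
        self.data = {}      # data sets that live behind this node
        self.redirect = {}  # data set name -> node to try next


def fetch(entry_node, dataset, max_hops=8):
    """Follow redirections from entry_node; return (value, hops taken)."""
    node, hops = entry_node, 0
    while dataset not in node.data:
        if hops == max_hops:
            raise RuntimeError(f"gave up on {dataset!r} after {hops} hops")
        if dataset not in node.redirect:
            raise KeyError(f"{dataset!r} is unknown at node {node.name}")
        node = node.redirect[dataset]
        hops += 1  # each redirection adds latency before data is reached
    return node.data[dataset], hops
```

If a request enters at a node two redirections away from the owner, `fetch` returns the data with `hops` equal to 2, while a request that enters at the owning node returns with `hops` equal to 0.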

DISTRIBUTED ARCHITECTURES

When a nontransactional data model is necessary, as with data in editing, media asset management or a random processing chain (that is, a nonlinear and nontranscoding operation), a distributed storage architecture model across multiple nodes may be the choice. In this model, memory is not shared among nodes and the data is distributed across the nodes as in a fanout or multiparallel infrastructure. From a file system perspective, such a distributed architecture may use objects and may present a non-POSIX interface (POSIX being the Portable Operating System Interface, first standardized as IEEE Std 1003.1-1988).
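One common way to spread objects across nodes that share no memory is to derive the target node from a hash of the object key. The sketch below assumes that approach purely for illustration; the article does not say which placement scheme any given product uses, and the `place` and `distribute` functions and the node names are hypothetical.

```python
import hashlib


def place(object_key, nodes):
    """Pick the node for an object from a stable hash of its key."""
    digest = hashlib.sha256(object_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]


def distribute(object_keys, nodes):
    """Return {node: [keys placed on it]} for a batch of object keys."""
    layout = {node: [] for node in nodes}
    for key in object_keys:
        layout[place(key, nodes)].append(key)
    return layout


if __name__ == "__main__":
    nodes = ["node-1", "node-2", "node-3", "node-4"]
    keys = [f"story-{n:04d}.mxf" for n in range(1000)]
    for node, placed in distribute(keys, nodes).items():
        print(node, len(placed))
```

Any client can compute the location of an object without consulting a central index, which fits the fanout picture. A known limitation of plain modulo placement is that most keys move when the node count changes; consistent hashing is a frequently used alternative for that reason.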

Distributed architectures are less common but are still sometimes employed by large enterprises that need petabytes of storage (Fig. 2 Green). Search engines and extremely complex asset management implementations with federated access on a global basis are candidates for the distributed architecture. In this situation, massively parallel processing models are implemented, resulting in speed and scalability. Cloud-based resources are a good example, provided the onramp/offramp accessibility is unencumbered.

There are still more considerations for storage architectures that involve cost evaluations, compromises and scaling requirements, sometimes based on the phase of a particular project or on the impact of legacy components that still function and still carry a financial life expectancy on the books. Working with an expert in these areas is essential, especially at the enterprise level. Software applications, workflows and data structures all become key components in determining the full solution set. When the delivery of your assets depends upon speed, reliability and performance, one cannot afford to do things only halfway.

Karl Paulsen is chief technology officer at Diversified and a frequent contributor to TV Technology in storage, IP and cloud technologies.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.