Storage Technologies Need to Change to Keep Up With Supply and Demand

Server and storage spending are leading indicators of the global economy

In recent years, media organizations have been preparing for changes in how media is produced, changes that inevitably came to fruition once the impacts of Covid were realized. Prior to the pandemic, remote production was quite stable for larger venue-style productions (concerts, sports, entertainers); however, day-to-day operations (news, weather, local shows) had to be re-envisioned on a much broader scale once production crews became isolated.

The media and entertainment industry saw a huge impact as it nearly shut down overnight. Once organizations were able to survey the availability of their own resources, they quickly extended those resources, supported by software-enabled internet-based interconnects and flexible remote applications that were portable and adjustable.

‘Remote Mode’

When the pandemic was “officially” over, the entities that enabled these new routine remote operations quickly understood that they could continue in the “remote-mode” fashion and sought to keep that new operational model functional. This obviously pleased the majority of those who had now resolved to work from home and could easily and effectively operate in that model with only marginal impact on homebase facilities.

Certainly, exceptions popped up and adjustments had to be made, but the overall design and modeling for remote production was established. The foundation for many live and non-live productions was now setting like wet concrete.

Other things besides connectivity and applications have been changed or augmented to allow remote or at-home production to be sustained. The most obvious is the cloud—but cloud facilities had to expand in similar fashion to allow home-based central equipment rooms of the broadcast facility to be more easily utilized.

By that I mean accessibility, bandwidth, networking and fast, easily accessible storage all had to be reshaped to support live and non-live production requirements.

One of those areas, storage growth, has not slowed down in the least. Predictions say that by 2026 more than 220 exabytes of data (equal to 220 quintillion, or 220 × 10^18, bytes) will have been created and stored somewhere in various ecosystems, according to research and a report by Coughlin Associates.
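To put that figure in perspective, here is a minimal sketch of the unit arithmetic. It assumes decimal (SI) units, where one exabyte is 10^18 bytes, and it compares the projection against the 24 TB drive size mentioned later in this column. The function name and the raw-capacity comparison are illustrative assumptions only; redundancy, formatting overhead and compression are ignored.

```python
# Decimal (SI) storage units, expressed in bytes.
SI_UNITS = {"TB": 10**12, "PB": 10**15, "EB": 10**18}


def to_bytes(value: int, unit: str) -> int:
    """Convert a whole-number value in the given SI unit to bytes."""
    return value * SI_UNITS[unit]


# The 220 EB projection cited above.
projected_bytes = to_bytes(220, "EB")

# Raw count of 24 TB drives needed to hold that much data, rounded up.
# Assumption: raw capacity only, no redundancy or overhead.
drive_bytes = to_bytes(24, "TB")
drives_needed = -(-projected_bytes // drive_bytes)  # ceiling division

print(f"{projected_bytes:,} bytes")
print(f"{drives_needed:,} drives of 24 TB each")
```

Even before any protection scheme is applied, the raw drive count lands in the millions, which is the scale behind the supply-and-demand question in the headline.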

The Varieties of Cloud

Reports have indicated that server and storage spending are leading indicators of the global economy. As for the future, companies are either terrified of or optimistic about that pattern, or both at the same time, as NextPlatform predicted in September 2022. IDC reported that total revenues in “shared cloud,” “dedicated cloud” and “all-cloud plus non-cloud” reached $39.9B, an increase of more than $10B over the same period in 2020.

This covers only a single segment of those overall applications. When compared with past sales, including service providers and everyone else (private users, non-shared services, etc.), total revenue in 2020 was $130.9B and predicted revenue in 2026 will reach $197.3B (figures from IDC in 2022, cited as a CAGR of 10.9% from 2022 to 2026).
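For readers who want to see how a compound annual growth rate relates to two revenue figures, the following is a small sketch of the standard CAGR formula. The two dollar amounts are the ones quoted above; the function name and the choice to evaluate both a four-year and a six-year span are my own assumptions for illustration, not part of the IDC report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Revenue figures quoted above, in billions of US dollars.
start_revenue = 130.9
end_revenue = 197.3

# Assumption: evaluate two possible spans, since the figures above mention
# both a 2020 starting point and a 2022-to-2026 growth period.
rate_4yr = cagr(start_revenue, end_revenue, 4)
rate_6yr = cagr(start_revenue, end_revenue, 6)

print(f"Implied CAGR over 4 years: {rate_4yr:.1%}")
print(f"Implied CAGR over 6 years: {rate_6yr:.1%}")
```

Under these assumptions, the four-year span lands close to the cited 10.9%, while the six-year span implies a noticeably lower annual rate.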

With this growth, how will storage technology be able to keep up with the supply and demand expectations? What is changing to meet these goals? There is a steady change in storage technology platforms, which is necessary to meet the expected objectives. Some manufacturers are betting on the change to an all-flash storage device, whether for the local device or the cloud. One manufacturer recently reported that it expects the capacity of its proprietary DFMs (direct flash modules) to increase six-fold in a few years, reaching up to 300 TB.

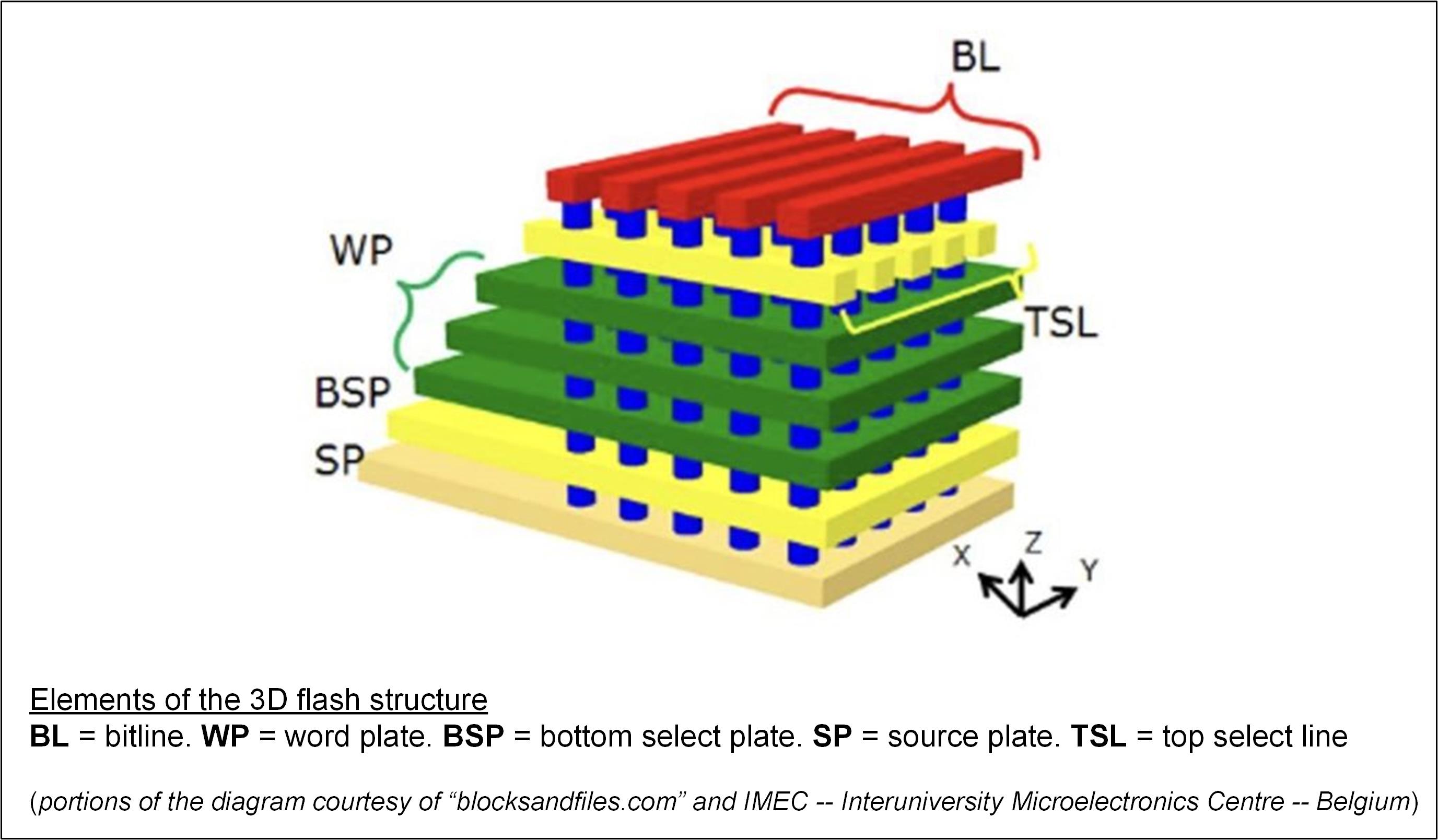

Advancements in 3D NAND (Fig. 1) areal density will look somewhat like how HDDs (hard disk drives) grew a few short years ago, when the physical capacities of such hard drives nearly reached saturation as molecular densities drove magnetics to practically crashing and colliding upon themselves. Today, 24 TB and 48 TB DFM drives are shipping, dwarfing past devices and showing that HDDs may no longer be the norm.

How is this changing? Heretofore, 3D NAND devices used a stacking or layering principle with between 112 and 160 layers per IC. Within the next few years (fewer than five), fab vendors expect the number of active layers to increase to between 400 and 500, yielding much higher-capacity 3D NAND ICs.

Other Storage Segments

Applications for storage and its growth indicate that there are other storage segments which also need to be supported by M&E.

Long-term archiving will continue on various media form factors, with external HDD accounting for around 18% of archive storage, local storage networks (NAS and SAN) for 20%, and private or public cloud for about an equal amount at 21%. Digital magnetic tape (e.g., LTO or similar) remains the leader at 32% overall, according to Coughlin Associates’ research, but this may shift downward as cloud becomes more convenient and affordable. This prediction, again, is for long-term storage; for short-term storage the model changes even more dramatically.

Both bandwidth and storage are required for a proper balance in content generation (i.e., production, capture, post and readying for transmission). The evolution of the network to 100 Gbps is almost routine now. Effectively, new installations striving towards an all-digital post-production environment should consider no less than 100 Gbps pipes to address the up-and-coming virtual environments that already require 20 to 50 Gbps connectivity/communications on a consistent basis.

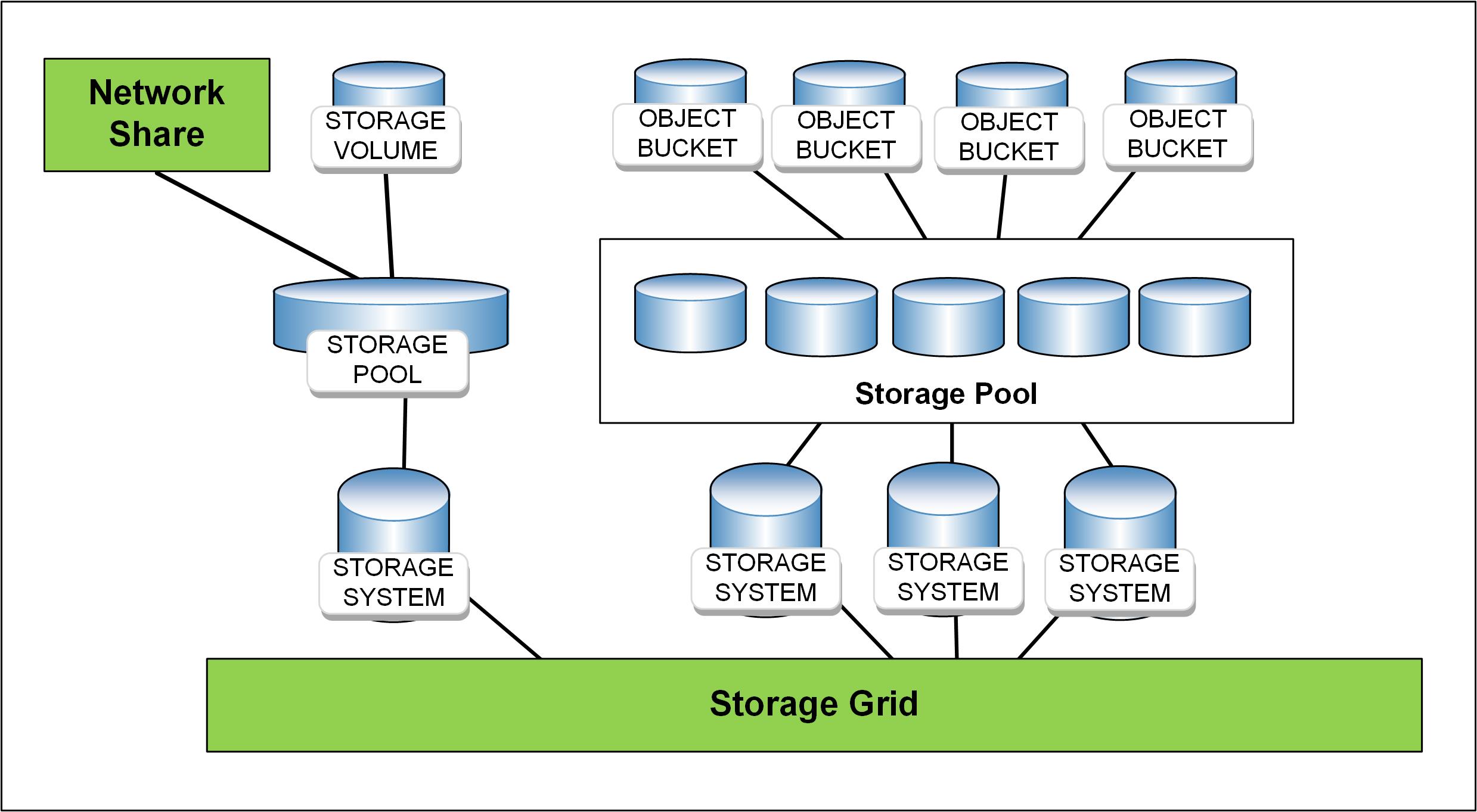

Multipliers for content capture demand more and faster storage, a need that can only be met by adding storage (local and/or cloud) and pipes that move data continuously at rates approaching 50 Gbps or more. If you’re producing 4K (or 8K for long-term archiving), the network must be capable of handling 100 Gbps, with the servers and storage able to receive (and return) such data rates. Today, the bulk of facilities in the M&E space continue to rely on HDD for most storage systems; however, SSDs are playing an increasingly important role through the use of NVMe SSD and NVMe over fabrics, leveraging the “pooling” of storage.
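A rough sense of why 100 Gbps comes up can be had from the arithmetic for uncompressed video. The sketch below is an illustration under stated assumptions, not a sizing tool: it assumes 60 frames per second, 10-bit 4:2:2 sampling (20 bits per pixel), and counts active picture only, ignoring blanking, audio and network protocol overhead. The function and constant names are hypothetical.

```python
def uncompressed_gbps(width: int, height: int, fps: int, bits_per_pixel: int) -> float:
    """Active-picture data rate of one uncompressed video stream, in Gbps."""
    return width * height * fps * bits_per_pixel / 1e9


LINK_GBPS = 100        # the network capacity discussed above
FPS = 60               # assumption: 60 frames per second
BITS_PER_PIXEL = 20    # assumption: 10-bit 4:2:2 sampling

formats = {"4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}

results = {}
for name, (width, height) in formats.items():
    rate = uncompressed_gbps(width, height, FPS, BITS_PER_PIXEL)
    streams = int(LINK_GBPS // rate)  # whole streams that fit on the link
    results[name] = (rate, streams)
    print(f"{name}: {rate:.2f} Gbps per stream, {streams} stream(s) per {LINK_GBPS} Gbps link")
```

Under these assumptions a single 4K stream needs just under 10 Gbps and a single 8K stream just under 40 Gbps, so a handful of simultaneous uncompressed feeds is enough to fill a 100 Gbps pipe. Compressed workflows would need far less.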

Storage pools (Fig. 2) are principal constructs around storage virtualization. The “distribution” of a storage pool (a block of storage) may be connected by any number of means, including Fibre Channel, iSCSI or direct attached (DAS) storage devices. The pool should not be “vendor-locking,” meaning that a pool should not be dependent upon one storage vendor’s product or proprietary solution, and should instead allow flexibility to add or remove storage devices across the overall storage network solution.

This, in turn, maximizes the opportunity to scale or shift storage depending on the load or application, which is very important when exchanging files or resolution densities in a production environment.
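The pooling idea can be sketched as a simple data structure. This is a conceptual model only, assuming nothing about how any real storage virtualization product works: the class names, device names and vendor labels are all hypothetical. It shows a pool that aggregates devices regardless of vendor or transport and lets devices be added or removed as the load changes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StorageDevice:
    name: str
    vendor: str          # hypothetical vendor label
    transport: str       # e.g. "Fibre Channel", "iSCSI" or "DAS"
    capacity_tb: float


@dataclass
class StoragePool:
    """A vendor-neutral pool: any device can join or leave."""
    devices: dict[str, StorageDevice] = field(default_factory=dict)

    def add(self, device: StorageDevice) -> None:
        self.devices[device.name] = device

    def remove(self, name: str) -> StorageDevice:
        return self.devices.pop(name)

    @property
    def capacity_tb(self) -> float:
        return sum(d.capacity_tb for d in self.devices.values())

    @property
    def vendors(self) -> set[str]:
        return {d.vendor for d in self.devices.values()}


# Build a pool from mixed vendors and transports (all values hypothetical).
pool = StoragePool()
pool.add(StorageDevice("array-1", "VendorA", "Fibre Channel", 200.0))
pool.add(StorageDevice("array-2", "VendorB", "iSCSI", 120.0))
pool.add(StorageDevice("shelf-1", "VendorC", "DAS", 48.0))
print(pool.capacity_tb, sorted(pool.vendors))

# Shift capacity out of the pool when the workload changes.
removed = pool.remove("array-2")
print(pool.capacity_tb, sorted(pool.vendors))
```

The design choice worth noting is that the pool depends only on capacity and a device name, never on a vendor-specific interface, which is the property that avoids the lock-in described above.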

One can see that the dynamics associated with these new and future production storage sets will require some careful planning to avoid being trapped in a single-source solution. This appears, on the surface, to be the target for future storage product providers, whether in the cloud or on-prem.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.