AES69-2015: Key to Immersive Audio

Ever since audio technology made the shift from mono to stereo imaging, it seems that some listeners have been on a quest for the most immersive audio image possible. Quadraphonic, Ambisonics, Dolby Surround, DTS and Dolby Digital were all born from the desire to provide a truly encompassing audible experience for listeners.

Although early developments were audio-only, it wasn’t long before multichannel audio was married to motion pictures using systems like Fantasound and Cinerama, while television broadcasts remained mono until the 1980s.

PERSONAL EXPERIENCE

Take a stroll through consumer electronics stores now and you’ll see a proliferation of surround systems to accompany large-screen televisions, but just as many personal Bluetooth speaker systems and plenty of high-end headphones. In fact, the trend for surround and immersion seems to be moving toward personal versus shared experiences, driven by smartphones and handheld gaming systems. However, much of the content being consumed on these devices still originates with media and broadcast production companies.

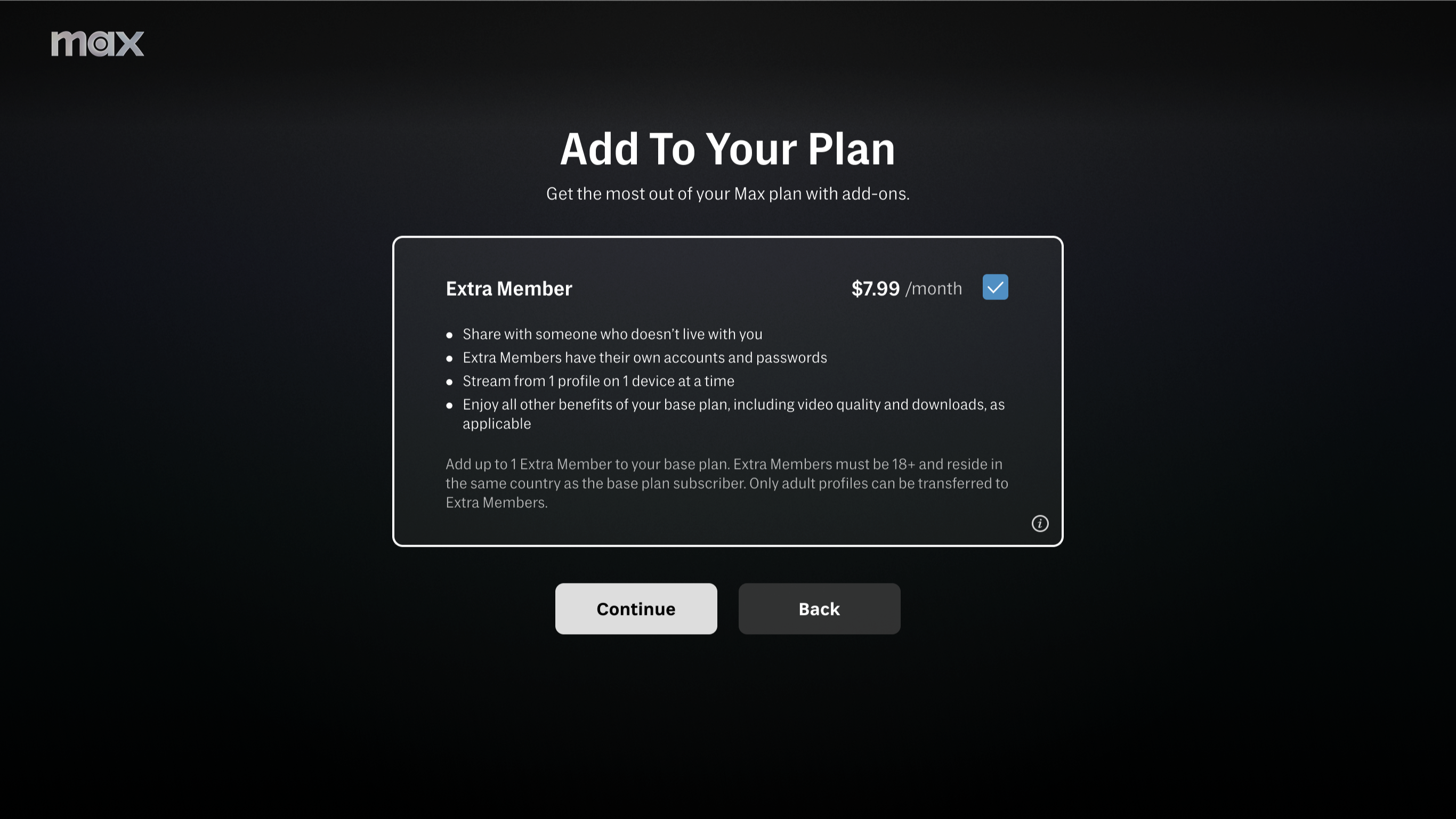

Juan Punyed, Telos Alliance director of sales for Latin America and Canada, previews a Dolby Atmos immersive audio demo in the Telos Alliance NAB booth.

A number of manufacturers jumped into the immersive audio field early in the game, but now, with ATSC 3.0 seeking to bring immersion into the broadcast space, the Audio Engineering Society has stepped forward with a standard aimed at ensuring immersive audio delivery systems use the same file format for exchange of spatial audio information.

Before we take a look at the standard, it is important to note that it promotes binaural listening as the key to immersion, which means it is all about the head and the space, real or simulated, that the head resides in.

Binaural recording is nothing new, and the typical method for making binaural recordings is to use a dummy head with microphones placed at the ears, the thought being to capture audio as a human would hear it. Unfortunately, the human head is itself another object in the acoustic space and will modify the audio we hear.

Depending on many variables—including where our head is in the soundfield in relation to the source—sound is likely to arrive at each ear at a slightly different time, at a different intensity and with a somewhat different frequency response depending on the shape of our head and ears, and reflections/cancelations from our body.
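To put a rough number on the arrival-time difference, here is a minimal sketch using the classic Woodworth spherical-head approximation. This formula is not part of AES69; it is simply a common textbook model, and the head radius and speed of sound below are assumed typical values, not measurements.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0   # assumed, air at roughly 20 degrees C


def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate interaural time difference (seconds) for a distant source.

    azimuth_deg is measured from straight ahead (0) toward one ear (90).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this sketch only covers 0 to 90 degrees")
    theta = math.radians(azimuth_deg)
    # Woodworth model: extra path length around a rigid sphere.
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


for az in (0, 30, 60, 90):
    print(f"{az:3d} deg -> {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

Under these assumptions a source directly to one side arrives at the far ear about 0.66 milliseconds late, while a source straight ahead produces no time difference at all.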

IT’S ALL IN THE HEAD

Fortunately, our brains learn to interpret these differences. The way the head and ears filter sound arriving from a given direction is described by the head-related transfer function (HRTF). The HRTF is a key parameter of AES69, along with two impulse responses: the head-related impulse response (HRIR), which helps us localize the source, and the directional room impulse response (DRIR), which allows us to place the source in the room.
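In practice, an HRIR pair is typically applied by convolving a mono signal with the left-ear and right-ear responses. The following is a minimal sketch of that idea; the function names and the three-sample toy HRIRs are made up for illustration and bear no resemblance to measured data.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sequences of floats."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Return (left, right) headphone signals for one source direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical toy HRIR pair for a source on the listener's left:
# the right ear hears the sound two samples later and at half the level.
hrir_left = [1.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.5]

mono = [1.0, -1.0, 0.5]
left, right = render_binaural(mono, hrir_left, hrir_right)
print(left)
print(right)
```

A real renderer would use measured HRIRs that are hundreds of samples long and would normally do the convolution with an FFT, but the principle is the same.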

As with other AES standards, this one builds upon work done by other industry groups rather than establishing conflicting standards and unnecessarily reinventing the wheel. In this case the work is based on ISO, ITU and IETF standards and utilizes netCDF-4 as the format for data storage.

The standard relies heavily on audio objects, a staple of the gaming audio world. They are relatively new to broadcast, and something we’ll see a lot more of as we inch closer to ATSC 3.0. The primary objects in AES69 are “Listeners,” which can consist of an unlimited number of receivers; “Sources,” which can consist of an unlimited number of emitters; and “Rooms.”

In any given AES69 file there can only be one Listener, one Source and one Room. These objects are placed within two coordinate systems that determine where they exist in space. The Global Coordinate System allows placement of sources and listeners inside the space, while each source and listener has its own local coordinate system within the global system, which allows placement of emitters within the source and receivers within the listener.
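One way to picture this object hierarchy is as a small set of nested records. The sketch below is only a mental model; the class and field names are hypothetical and are not the variable names used in the standard or in a .sofa file.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Listener:
    position: Vec3                                        # global coordinates
    receivers: List[Vec3] = field(default_factory=list)   # local coordinates


@dataclass
class Source:
    position: Vec3                                        # global coordinates
    emitters: List[Vec3] = field(default_factory=list)    # local coordinates


@dataclass
class Room:
    room_type: str = "free field"


@dataclass
class SpatialFile:
    """Exactly one Listener, one Source and one Room per file."""
    listener: Listener
    source: Source
    room: Room


# A listener with two receivers (the ears) and a single-emitter source.
# Placing the left ear on the positive local y-axis is an assumption here.
scene = SpatialFile(
    listener=Listener((0.0, 0.0, 0.0), [(0.0, 0.09, 0.0), (0.0, -0.09, 0.0)]),
    source=Source((1.5, 0.0, 0.0), [(0.0, 0.0, 0.0)]),
    room=Room(),
)
print(len(scene.listener.receivers), "receivers,",
      len(scene.source.emitters), "emitter")
```

The single-valued fields on `SpatialFile` mirror the one-of-each rule, while the lists allow any number of receivers and emitters.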

Directivity patterns of emitters and receivers are defined within the local coordinate system. To assist with orientation, orthogonal “View” and “Up” vectors are defined, with View on the positive x-axis while Up is on the positive z-axis. A long list of metadata parameters is included and provides an additional method of defining variables and their characteristics, as well as important information about the data inside the file.
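To see how the View and Up vectors tie a local coordinate system to the global one, consider this minimal sketch that converts a receiver position from listener-local to global coordinates. It assumes a right-handed system, so the local y-axis is the cross product of Up and View; the function names and the example positions are hypothetical.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def local_to_global(local_point, origin, view, up):
    """Map a point from an object's local frame into global coordinates.

    view is the local +x axis and up is the local +z axis, both given
    in global coordinates and assumed to be orthogonal.
    """
    x_axis = normalize(view)
    z_axis = normalize(up)
    y_axis = cross(z_axis, x_axis)  # right-handed: y = z cross x
    return tuple(
        origin[i]
        + local_point[0] * x_axis[i]
        + local_point[1] * y_axis[i]
        + local_point[2] * z_axis[i]
        for i in range(3)
    )


# Listener at (2, 1, 1.5) facing along the global +y axis.
ear = local_to_global(
    local_point=(0.0, 0.09, 0.0),   # 9 cm along the local +y axis
    origin=(2.0, 1.0, 1.5),
    view=(0.0, 1.0, 0.0),
    up=(0.0, 0.0, 1.0),
)
print(ear)
```

With the listener turned to face global +y, the local +y direction points along global -x, so the receiver lands 9 cm from the listener position on the negative-x side.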

As one would expect, listener, receiver, room and room types are described in the metadata parameters, and provision is made for external applications to include metadata specific to their needs and requirements. Available room types are free field, reverberant, shoebox and .dae, a data interchange file providing links to 3D applications. All information gets stored in a single netCDF-4 binary data file with a .sofa (Spatially Oriented Format for Acoustics) extension. AES69-2015 goes into far more detail and depth than there is room to cover here so, as always, I encourage you to pick up a copy and dig in if you want to know more.

Binaural audio is not without its issues and detractors. Location of sources in some binaural recordings can be hard to pinpoint: sounds to the sides are easiest to determine, while sounds to the front and rear sometimes seem as if they are located at the listening position itself.

Fortunately, the sizable list of parameters included in AES69-2015, along with built-in extensibility, likely means the standard has enough breadth to compensate for any shortcomings of binaural listening.

My one experience listening to an immersive format so far was a demonstration of Headphone:X from DTS and it was a phenomenal experience. Audio sources were played over speakers in the demonstration room; then we were instructed to put on supplied headphones and the audio was repeated.

The headphone audio sounded virtually the same as the audio from the speakers (to the extent that most of us took the headphones off to be sure). Of course, the DTS guys had plenty of time beforehand to run room impulses and tailor the setup before we arrived, but the result was very impressive nonetheless.

If the immersive systems from other manufacturers are as impressive, and I expect they are, and AES69-2015 is used for interchange among systems, then the world of immersive audio is about to become a very exciting place to listen.

Jay Yeary is a broadcast engineer specializing in audio. He is an AES Fellow and is a member of both SBE and SMPTE. He can be contacted via TV Technology.
