At the Center of Scalability

Scalability generally describes a system’s ability to expand from one particular “size” to another. Often the perception is that the upper limit is undefined, which raises the question: “Just how large can this system expand to?” If you think about organizations (e.g., eBay, Amazon), there probably is no perceived end point. Yet when applied to media asset management (MAM), the limits may be set by the number of records the system can handle, by storage capacity (not including the cloud), or by how effectively the database can manage, search and retrieve the assets when needed and in a reasonable time period.

All this is quite ambiguous, to say the least. So, let’s put another descriptive term in front of scalability: “seamless.” Sometimes this is part of a marketing effort and sometimes the term is grounded in reality. In the case of the cloud, the difference probably can’t be identified regardless of the application, because “everything” is seamless in the cloud.

“Seamless scaling,” widely used to imply a system’s ability to expand to some level, is not necessarily a new term. Architectural models for it are typically applied to networks, IP video streaming, CDNs, data storage and MAM solutions. And most of these need to scale “seamlessly.”

When used in the “architecture” scenario, resources and their usage are, hopefully, scaled linearly against the load placed on the system by its users. For example, in compute operations, the load could be measured by the amount of user traffic, by input/output operations per second (IOPS), or by data volume, among other things.

PRICE/PERFORMANCE CURVE

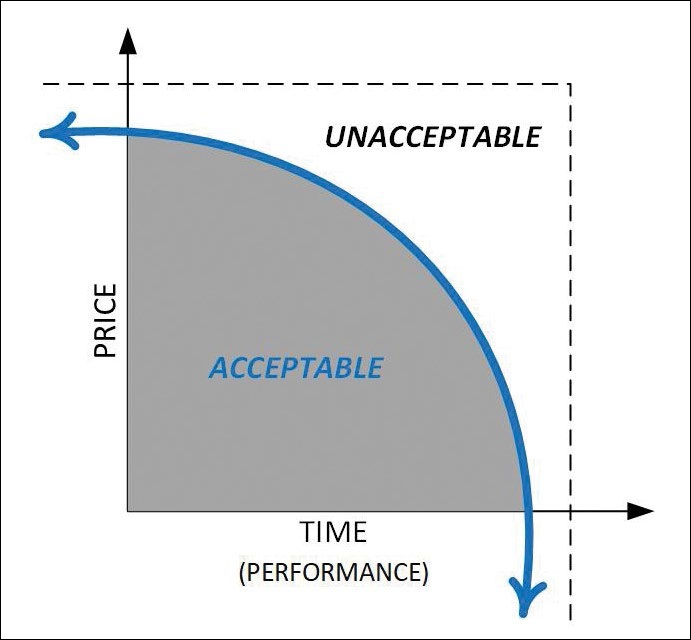

Resources must be balanced with performance. Here scalability is about resource usage associated with a single unit of work. Scalability, in this model, is about how resource usage and costs change when units of work grow in quantity or size. Scalability then becomes the “shape of the price-performance ratio curve,” as opposed to its value at any one point in the curve.

A price-to-performance ratio typically refers to a system’s (or product’s) ability to deliver performance for the price one is able or willing to pay. At one extreme is the proverbial “open checkbook” model, where cost is no object. However, there is a point where throwing in a boatload of cash ceases to deliver the performance desired in the time required. These factors impact the shape of that price-performance curve. See Fig. 1, where “time” can also be “performance.”
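To make the “shape of the curve” idea concrete, here is a minimal Python sketch. The function names, the 5% tolerance and the sample figures are all hypothetical and are not taken from any real system; the point is only that the cost per unit of work is tracked across load levels rather than read at a single point.

```python
def cost_per_unit(points):
    """Return (units_of_work, cost_per_unit) for each (units, total_cost) pair."""
    return [(units, cost / units) for units, cost in points]


def curve_shape(points, tolerance=0.05):
    """Compare unit cost at the smallest and largest load.

    'flat' means cost per unit of work stayed within the tolerance,
    i.e. roughly linear scaling. The tolerance is an arbitrary assumption.
    """
    ratios = cost_per_unit(sorted(points))
    first = ratios[0][1]
    last = ratios[-1][1]
    change = (last - first) / first
    if change > tolerance:
        return "worsening"
    if change < -tolerance:
        return "improving"
    return "flat"


# Hypothetical measurements: (units of work, total cost)
samples = [(100, 1000.0), (200, 2000.0), (400, 4400.0), (800, 10400.0)]

for units, unit_cost in cost_per_unit(samples):
    print(units, unit_cost)
print(curve_shape(samples))
```

In this made-up data set the unit cost holds steady at first and then climbs, which is the “boatload of cash” region where extra spend buys less and less.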

LINEARITY AND LATENCY

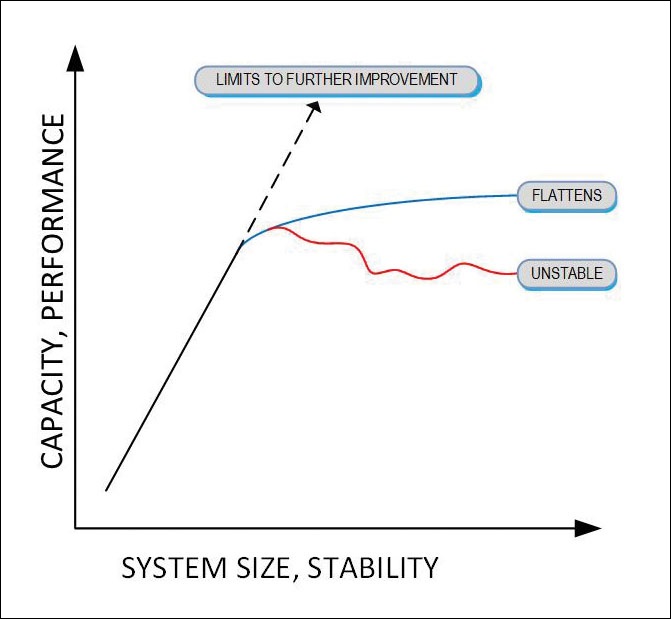

“Linear scaling” is sometimes depicted as a “straight line” model, its slope being determined by multiple factors such as speed, throughput and latency. In reality, no real-world system will sustain a linear scaling model ad infinitum. Linear models will usually run at a given slope up to the point they fall over, dramatically change slope, become unstable or turn into a curve (Fig. 2).
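One rough way to find where a system leaves its straight line is to take the slope from the smallest measured load and flag the first load where throughput falls short of that projection. The sketch below assumes exactly that; the function name, the 10% tolerance and the measurements are illustrative only.

```python
def find_knee(measurements, tolerance=0.10):
    """Return the first load where throughput drops below the linear projection.

    measurements: list of (load, throughput) pairs sorted by load.
    The slope is taken from the first point, an assumption that only holds
    if the smallest load is well inside the linear region.
    """
    base_load, base_throughput = measurements[0]
    slope = base_throughput / base_load
    for load, throughput in measurements[1:]:
        expected = slope * load
        if throughput < expected * (1 - tolerance):
            return load
    return None  # stayed linear across everything measured


# Hypothetical data: (nodes, operations per second)
measured = [(1, 100), (2, 198), (4, 390), (8, 700), (16, 900)]
print(find_knee(measured))
```

A fitted regression line would be more robust against a noisy first sample; the single-point slope is used here only to keep the idea visible.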

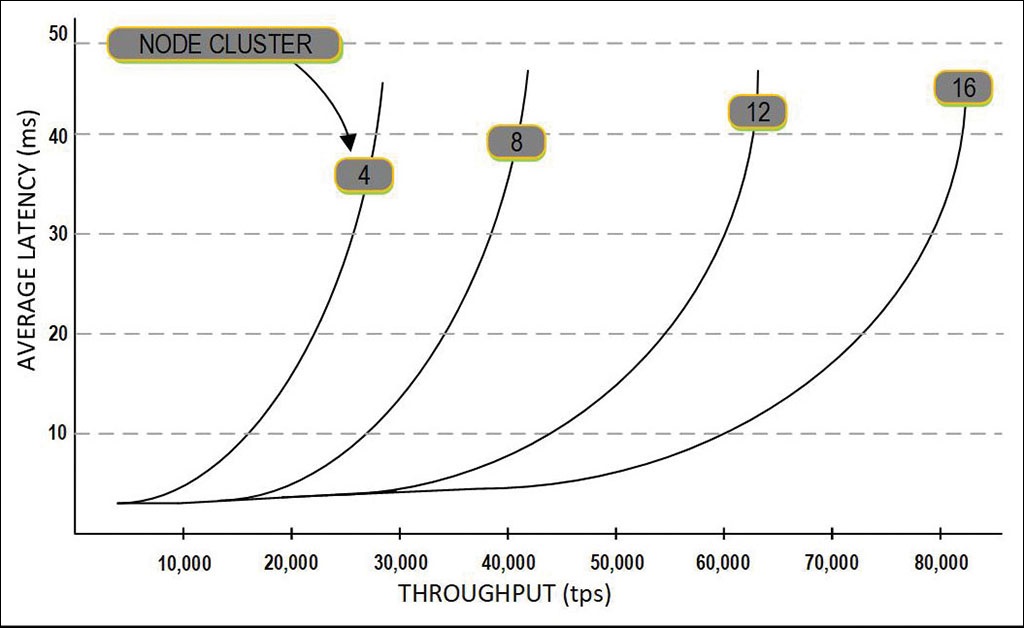

Whether in a cloud or an on-prem datacenter, one variable impacting scalability is latency. Low-latency performance for workloads must be balanced with consistency. When latency changes dramatically, the net performance value is lowered (Fig. 3). How well a latency change can be countered depends on design, system size, architecture, and the demand placed upon other services sharing the same resources.
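Consistency can be put into numbers by comparing the typical latency with the tail. The following sketch uses a nearest-rank 99th percentile and a “tail ratio” (p99 divided by the median); both the metric choice and the sample values are assumptions for illustration, not a standard measure.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile of a list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_profile(samples_ms):
    """Summarize latency: median, 99th percentile, and their ratio."""
    median = statistics.median(samples_ms)
    p99 = percentile(samples_ms, 99)
    return {"median_ms": median, "p99_ms": p99, "tail_ratio": p99 / median}


# Hypothetical samples: both workloads share the same median latency
steady = [1.0] * 99 + [1.2]
noisy = [1.0] * 95 + [20.0] * 5

print(latency_profile(steady))
print(latency_profile(noisy))
```

Both workloads look identical if only the median is reported; the tail ratio is what exposes the inconsistent one.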

One metric in cloud computing is its ability to deliver scalable access to a large pool of computational, storage and network resources, commonly known as infrastructure-as-a-service (IaaS). With functional workflows for storage, asset management, and databases moving more into the cloud, services leveraging machine learning and artificial intelligence make better owner/operator sense when they’re not built on premises.

Before the factors of price-to-performance are put into the model, a reality check about the value proposition should occur, ideally before the checkbook is opened or the bank account runs dry. It’s easy to let an unconstrained operational model go “cloud-wild” without implementing a set of checkpoints that help decide whether going down this path is returning the value needed to reach the intended goal.

While the system (MAM, storage or network) may be able to scale seamlessly and linearly to a point without notice or consequence, users need to put clear boundaries around the system so that it meets the goals in the time needed and without bankrupting the farm. Think again; perhaps the workflow storage solution is better kept at home.

“Super scaling” has been attached to hyper-converged compute platforms for at least a decade. Super scaling evolved from converged architectures as databases and analytics, regardless of their user applications, continued to demand real-time analysis and the best performance in delivering their information.

Practical growth in these spaces previously relied heavily on massively parallel processors set into a clustered structure. The limiting factor in this approach, outside the cloud, was the ability to access the storage at an I/O rate that matches the power of the combined processors, without latency or choking.

In terms of basic storage statistics and specifications, the rotational speed of the HDD, the areal density of the bits, and the ability for data to be placed on (written to) or retrieved (read) from the storage material itself are not the whole story. Findings show other factors constraining scalability and performance for the storage system.

Solid-state drives (SSDs) built on flash technologies have changed the storage dimension from what it was when only HDDs were available. Today, modern applications continue to push the envelope of storage I/O, capacities and processing. Seamless scaling now occurs in multiple dimensions aimed at supporting demand, change, data growth and adaptive user workflows, all the time driving the question “cloud or datacenter/on-prem.” For the enterprise, direct-attached storage (DAS) built on SSDs relays data to and from its servers, which strive to support an ever-expanding set of requirements for transaction processing, data analytics and more.

DEMISE OF LEGACY DAS

DAS is successful because of the way it connects flash SSDs via PCIe, and it has been a mainstream choice for over a dozen years. Unfortunately, the DAS+SSD scalability limit is nearly on the doorstep, to be relieved only by the comparatively recent technology of non-volatile memory express (NVMe). Nonetheless, new methodologies still hold the constraints DAS had from infancy. Technically, if you only add a “shared-storage” model, the value proposition for NVMe is significantly diminished. Advancements in bus and I/O speeds change that perspective, incorporating the principles of clustering and parallel processing for the storage environment.

Highly parallel, scale-out clustered applications require low-latency, high-performance shared storage capabilities. The latest development to address this weakness is the now standardized NVMe over Fabrics (NVMe-oF). Fabrics, such as those in Fibre Channel or SANs, are software-defined resource topologies shared through interconnecting switches.

While all-flash arrays (AFAs) changed storage models forever, their use of PCIe SSDs showed performance limitations when directly employed in application servers. Resources in this model became under-utilized, to say the least. According to some, net storage utilization averages between 30% and 40%, and lower in some cases.
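The utilization figure is simple arithmetic, and a short sketch shows how stranded capacity adds up when each server carries its own drives. The server sizes, usage numbers and 25% headroom below are hypothetical, and the pooled comparison deliberately ignores that each server may have been sized for its own peak.

```python
def utilization(used_tb, capacity_tb):
    """Fraction of installed capacity actually in use across all servers."""
    return sum(used_tb) / sum(capacity_tb)


def pooled_capacity_needed(used_tb, headroom=0.25):
    """Capacity a shared pool would need to hold the same data plus headroom.

    The headroom fraction is an arbitrary assumption for illustration.
    """
    return sum(used_tb) * (1 + headroom)


# Hypothetical cluster: four servers, each with 10 TB of direct-attached flash
capacity = [10.0, 10.0, 10.0, 10.0]
used = [2.0, 3.0, 5.0, 4.0]

print(utilization(used, capacity))
print(pooled_capacity_needed(used))
```

With these made-up numbers, 40 TB is installed to hold 14 TB of data, while a shared pool with headroom would need well under half of that.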

Another issue in performance scaling is consistency. Some services, when run on a clustered server environment, can bring applications to a crawl. As services perform snapshots, cloning or other actions commanding CPU cycles, their functions take resources away from storage management activities—slowing data I/O and transfers.

Coupled with excessive data movement, complex operations and poor performance from large-footprint silicon devices, the negative impact on storage management cycles means that storage I/O becomes uncontrollable or variable to the point where errors develop, read/write cycles fluctuate, or data becomes unavailable or, worse, corrupted.

Recent improvements in scalable architectures, including storage, are supplementing advancements in physical storage footprints, improving the capability to deliver data at the rates needed for workflows such as ultra-high definition (UHD), HDR/SDR and even the potential for uncompressed, high-bitrate IP flows (SMPTE ST 2110) on servers and virtual machines.
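For a sense of the rates involved, the active-picture bit rate of an uncompressed stream can be worked out directly. The sketch below assumes a 3840x2160, 60 frames-per-second, 10-bit 4:2:2 signal (an average of two samples per pixel) and counts picture data only; packet and header overhead are left out, so real network and storage rates will be somewhat higher.

```python
def active_video_bps(width, height, fps, bits_per_sample, samples_per_pixel):
    """Bits per second for the active picture only (no packet overhead)."""
    return width * height * fps * bits_per_sample * samples_per_pixel


# Assumed format: UHD, 60 fps, 10-bit, 4:2:2 (two samples per pixel on average)
bps = active_video_bps(3840, 2160, 60, 10, 2)

print(f"{bps / 1e9:.2f} Gb/s")   # prints "9.95 Gb/s"
print(f"{bps / 8 / 1e9:.2f} GB/s of sustained storage I/O per stream")
```

Multiply that by the number of simultaneous streams a server or virtual machine must record or play, and the need for consistent, low-latency storage I/O becomes clear.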

In future storage articles, NVMe-oF will be explored in more depth. In the meantime, if you’re looking at a storage refresh, investigate the (relatively discreet) manufacturers who are leveraging these new bus and data management technologies.

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on storage and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.