ATSC 3.0 Audio: Structure and Metadata

Think about how we consume television now. In our house, cable is simply the pipe that delivers high-speed internet, and content arrives through a combination of an over-the-air antenna and a handful of streaming services. We rely on smart devices to find and play what we watch because we no longer know when it airs. This world of distributed, multisourced content, where broadcast risks becoming an afterthought (at least until the internet service goes down), is the one our next-generation television delivery system is being born into.

Both the promise and the challenge of ATSC 3.0 are to merge broadcasting into this internet-centric media landscape and, in the process, deliver high-quality content with properly formatted mixes to every device. Accomplishing this requires changing virtually every technology currently used to deliver television to the home, and it brings with it a new structure and expanded metadata.

Information about the audio elements of ATSC 3.0 can be found in the three parts of the finalized ATSC A/342 standard: Part 1 covers the elements common to all Next Generation Audio (NGA) systems, Part 2 specifies AC-4, and Part 3 specifies MPEG-H. These documents contain virtually all the information currently available about what audio engineers will be dealing with in ATSC 3.0. In this column, we'll look at the structure of the common elements as they relate to AC-4, some of the new metadata parameters, and briefly touch on a few things that remain unanswered.

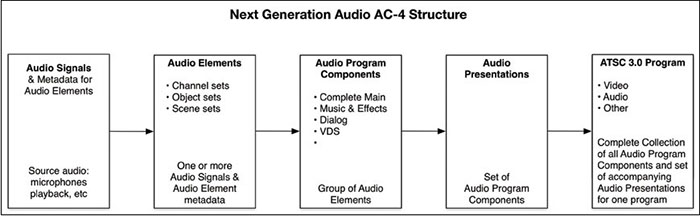

Fig. 1: In the Next-Generation Audio world, the ATSC A/342 standard treats audio as data.

NGA STRUCTURE

Let’s start by looking at the audio data structure of NGA systems. Currently, when prepping a mix for television, audio is the source and audio is the final output, whether it’s a stem, submix, iso, or full mix. In the NGA world, audio is treated more like data, and in fact that’s how A/342 refers to it, as audio data (see Fig. 1). Source audio is now officially referred to as “Audio Signals” and each signal may or may not have metadata associated with it. The Audio Signals and their metadata are formatted into either Channel-Based, Object-Based, or Scene-Based sets called Audio Elements.

Channel-Based Audio Elements are traditional-style, fixed-output mixes that can be anything from single-channel mono to immersive 7.1+4. Object-Based Audio Elements consist of Audio Objects with positional metadata that allows them to be placed, statically or dynamically, almost anywhere in the sound field. Scene-Based Audio Elements model an actual or simulated sound field.
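The channel counts behind these layout names are simple arithmetic: in the common "ear-level.LFE+height" notation, 7.1+4 means seven ear-level full-range channels, one low-frequency effects (LFE) channel, and four height channels, or 12 channels in total. The short sketch below parses that notation; the function name `channel_count` is hypothetical and not part of A/342.

```python
def channel_count(layout: str) -> int:
    """Count loudspeaker channels in a layout written as 'E.L' or 'E.L+H'.

    E = ear-level full-range channels, L = LFE channels, H = height channels.
    This notation convention is assumed here for illustration.
    """
    base, _, height = layout.partition("+")
    ear_level, _, lfe = base.partition(".")
    return int(ear_level) + int(lfe or 0) + int(height or 0)


for layout in ["1.0", "2.0", "5.1", "7.1+4"]:
    print(layout, channel_count(layout))
```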

Audio Elements also carry positional or personalization metadata, as well as rendering information that helps the system format audio data for the final receiving device. Groups of Audio Elements make up Audio Program Components, which may include Complete Main mixes, Music and Effects submixes, Dialog-only submixes, Video Description Services, and other audio feeds and mixes, delivered in one or more audio elementary streams.

Finally, a single Audio Program Component or a combination of them constitutes an Audio Presentation, the audio portion of an ATSC 3.0 program. A program can have more than one Audio Presentation, but one must be designated as the default. This layered structure is designed to provide the flexibility needed to deliver immersive audio, personalized content, and emergency alert information to the listener.
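To make the layering concrete, here is a minimal data-model sketch of the hierarchy described above: Audio Signals are grouped into Audio Elements, Elements into Audio Program Components, and Components into Audio Presentations, with one presentation flagged as the default. The class and field names are hypothetical (A/342 defines concepts, not Python types), and the check that exactly one presentation is the default is this sketch's reading of the rule.

```python
from dataclasses import dataclass, field
from enum import Enum


class ElementType(Enum):
    CHANNEL_BASED = "channel"
    OBJECT_BASED = "object"
    SCENE_BASED = "scene"


@dataclass
class AudioElement:
    name: str
    kind: ElementType
    signals: list[str]                            # IDs of the Audio Signals it formats
    metadata: dict = field(default_factory=dict)  # positional, personalization, rendering


@dataclass
class AudioProgramComponent:
    name: str                                     # e.g. "Complete Main", "M&E", "Dialog"
    elements: list[AudioElement]


@dataclass
class AudioPresentation:
    name: str
    components: list[AudioProgramComponent]
    is_default: bool = False


@dataclass
class Program:
    presentations: list[AudioPresentation]

    def default_presentation(self) -> AudioPresentation:
        """Return the default presentation; this sketch requires exactly one."""
        defaults = [p for p in self.presentations if p.is_default]
        if len(defaults) != 1:
            raise ValueError(f"expected one default presentation, found {len(defaults)}")
        return defaults[0]


# Usage: one M&E bed shared by an English and a Spanish presentation.
bed = AudioElement("M&E bed", ElementType.CHANNEL_BASED, ["L", "R", "C", "LFE", "Ls", "Rs"])
dlg_en = AudioElement("English dialog", ElementType.OBJECT_BASED, ["dlg_en"], {"azimuth_deg": 0.0})
dlg_es = AudioElement("Spanish dialog", ElementType.OBJECT_BASED, ["dlg_es"], {"azimuth_deg": 0.0})

me = AudioProgramComponent("Music and Effects", [bed])
program = Program([
    AudioPresentation("English", [me, AudioProgramComponent("Dialog (EN)", [dlg_en])], is_default=True),
    AudioPresentation("Spanish", [me, AudioProgramComponent("Dialog (ES)", [dlg_es])]),
])
print(program.default_presentation().name)  # English
```

Because the M&E component object is shared by both presentations, the model also shows how a single component can serve several presentations.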

NEW METADATA PARAMETERS

There are several new metadata parameters, as well as extensions of existing ones. Dialog control now includes Dialog Enhancement, which gives users more control over both independent and premixed dialog to help improve intelligibility after the content reaches the home.
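For the independent-dialog case, Dialog Enhancement can be pictured as a user-chosen gain applied to a separately delivered dialog signal before it is combined with the M&E submix. The sketch below covers only that case (enhancing premixed dialog needs more elaborate processing that is not shown), and the function name and the 12 dB limit are hypothetical, not values taken from A/342.

```python
def mix_with_dialog_enhancement(me, dialog, gain_db, max_gain_db=12.0):
    """Mix an independent dialog signal into an M&E submix with a user dialog gain.

    me, dialog: equal-length sequences of samples.
    gain_db is clamped to +/- max_gain_db (a hypothetical limit).
    """
    if len(me) != len(dialog):
        raise ValueError("M&E and dialog must have the same number of samples")
    clamped_db = max(-max_gain_db, min(max_gain_db, gain_db))
    linear_gain = 10 ** (clamped_db / 20)  # convert dB to an amplitude ratio
    return [m + linear_gain * d for m, d in zip(me, dialog)]


me = [0.10, -0.20, 0.05]
dialog = [0.30, 0.25, -0.10]
louder_dialog = mix_with_dialog_enhancement(me, dialog, gain_db=6.0)
print(louder_dialog)
```

Keeping dialog separate until the final mix is what makes this simple gain possible; once dialog is baked into a complete mix, the receiver has no clean dialog signal to scale.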

Dynamic Range Control (DRC) retains the existing E-AC-3 DRC modes but adds modes that render audio properly on a seemingly limitless variety of target devices, such as portable audio systems, flat-screen televisions, and home theaters.
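As a rough illustration of why different devices call for different DRC modes, the sketch below applies a simple static downward-compression curve with a per-device threshold and ratio. The profile names and numbers are hypothetical and are not AC-4's actual DRC profiles, which use more elaborate gain curves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrcProfile:
    threshold_db: float  # level above which compression begins
    ratio: float         # dB of input change per 1 dB of output change above threshold


# Hypothetical profiles for illustration only; not values from A/342 or AC-4.
PROFILES = {
    "portable": DrcProfile(threshold_db=-30.0, ratio=4.0),
    "flat_screen_tv": DrcProfile(threshold_db=-24.0, ratio=2.0),
    "home_theater": DrcProfile(threshold_db=-12.0, ratio=1.5),
}


def drc_gain_db(level_db: float, profile: DrcProfile) -> float:
    """Gain in dB (always <= 0) from a static compression curve."""
    if level_db <= profile.threshold_db:
        return 0.0
    out_db = profile.threshold_db + (level_db - profile.threshold_db) / profile.ratio
    return out_db - level_db


for device, profile in PROFILES.items():
    print(device, round(drc_gain_db(-10.0, profile), 2))
```

The same loud passage gets heavy gain reduction on the portable profile and almost none on the home theater profile, which is the kind of device-dependent behavior the new modes are meant to provide.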

Loudness metadata has been expanded to include parameters for true peak and maximum true peak, relative gated loudness and speech gated loudness, dialog gating type, momentary and maximum momentary loudness, and short-term and maximum short-term loudness.
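Several of these parameters come from the measurement method in ITU-R BS.1770. The sketch below, assuming "relative gated loudness" means BS.1770 integrated loudness, applies BS.1770's two-stage gating (an absolute gate at -70 LKFS, then a relative gate 10 LU below the absolute-gated level) to precomputed 400 ms block powers, and reports the maximum momentary loudness from the same blocks. It assumes K-weighting, channel weighting, and block segmentation have already been done, and it does not attempt speech gating, which depends on a dialog detector.

```python
import math

ABSOLUTE_GATE_LKFS = -70.0
RELATIVE_GATE_LU = -10.0


def block_loudness(z: float) -> float:
    """Loudness (LKFS) of one block's channel-weighted, K-weighted mean square."""
    return -0.691 + 10 * math.log10(z)


def gated_loudness(block_powers):
    """Integrated loudness using BS.1770 absolute and relative gating.

    block_powers: one channel-weighted mean-square value per 400 ms block.
    """
    above_abs = [z for z in block_powers if z > 0 and block_loudness(z) > ABSOLUTE_GATE_LKFS]
    if not above_abs:
        return float("-inf")
    rel_threshold = block_loudness(sum(above_abs) / len(above_abs)) + RELATIVE_GATE_LU
    gated = [z for z in above_abs if block_loudness(z) > rel_threshold]
    return block_loudness(sum(gated) / len(gated))


def max_momentary(block_powers):
    """Momentary loudness uses a 400 ms window, so each block is one momentary value."""
    return max((block_loudness(z) for z in block_powers if z > 0), default=float("-inf"))


z_minus_23 = 10 ** ((-23 + 0.691) / 10)   # block power that measures -23 LKFS
z_minus_40 = 10 ** ((-40 + 0.691) / 10)   # a quiet block, removed by the relative gate
blocks = [z_minus_23] * 10 + [z_minus_40, 1e-12]
print(round(gated_loudness(blocks), 2), round(max_momentary(blocks), 2))
```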

Intelligent Loudness Management in AC-4 means the system can now verify whether the loudness metadata matches the audio content and, if it does, signal to decoding devices that the metadata is correct so that no changes are made to the final audio. If the system cannot validate the loudness metadata, a real-time loudness leveler can be enabled to ensure loudness standards are met.
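The decision described above can be sketched as a comparison between the loudness value carried in metadata and a measured value. The 2 LU tolerance, the function name, and the returned flags are hypothetical; how AC-4 actually performs the validation is not described here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LoudnessDecision:
    metadata_valid: bool   # tell decoders the metadata can be trusted as-is
    enable_leveler: bool   # fall back to real-time loudness leveling


def manage_loudness(metadata_lkfs: Optional[float], measured_lkfs: float,
                    tolerance_lu: float = 2.0) -> LoudnessDecision:
    """Hypothetical check: metadata is valid if it agrees with the measurement."""
    if metadata_lkfs is not None and abs(metadata_lkfs - measured_lkfs) <= tolerance_lu:
        return LoudnessDecision(metadata_valid=True, enable_leveler=False)
    return LoudnessDecision(metadata_valid=False, enable_leveler=True)


print(manage_loudness(-24.0, -24.5))  # metadata agrees: pass audio through unchanged
print(manage_loudness(-24.0, -18.0))  # mismatch: enable the leveler
```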

AC-4 also supports extensible metadata, so user data, third-party metadata, and application data can be carried in the bit stream. In addition, alternative metadata can be associated with objects alongside their regular metadata, enabling presentations to create different versions of an object for their own use.
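One way to picture alternative metadata is as per-presentation overrides layered on top of an object's regular metadata. The `AudioObject` class, its field names, the presentation names, and the override rule below are hypothetical, meant only to show one object rendered differently in different presentations.

```python
from dataclasses import dataclass, field


@dataclass
class AudioObject:
    name: str
    metadata: dict                                    # regular metadata
    alternatives: dict = field(default_factory=dict)  # presentation name -> overrides

    def metadata_for(self, presentation: str) -> dict:
        """Regular metadata with any alternative values for this presentation applied."""
        return {**self.metadata, **self.alternatives.get(presentation, {})}


commentary = AudioObject(
    name="Commentary",
    metadata={"gain_db": 0.0, "azimuth_deg": 0.0},
    alternatives={"Home Team": {"gain_db": 3.0, "azimuth_deg": -30.0}},
)
print(commentary.metadata_for("Default"))    # {'gain_db': 0.0, 'azimuth_deg': 0.0}
print(commentary.metadata_for("Home Team"))  # {'gain_db': 3.0, 'azimuth_deg': -30.0}
```

The object's audio is sent once; only the small override dictionary differs between presentations.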

CONTENT DELIVERY

There are two types of presentation streams and two types of decoding modes available in AC-4:

An Advanced Single-Stream presentation carries multiple Audio Program Components within one stream, whether those components belong to the same presentation or to several presentations. All the mixes, submixes, and versions of a presentation may be carried in the single stream, or the stream may carry several different, possibly even unrelated, programs.
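A benefit of carrying everything in one stream is that shared components, such as an M&E submix, are sent once and referenced by several presentations. The component IDs, presentation names, and `components_for` helper below are hypothetical; they illustrate the referencing idea, not the AC-4 bit-stream syntax.

```python
# Every component travels once in the single stream (hypothetical IDs).
STREAM_COMPONENTS = {
    "me": "Music and Effects",
    "dlg_en": "English dialog",
    "dlg_es": "Spanish dialog",
    "vds": "Video Description Service",
}

# Each presentation is just a list of component IDs in that stream.
PRESENTATIONS = {
    "English": ["me", "dlg_en"],
    "Spanish": ["me", "dlg_es"],
    "English + VDS": ["me", "dlg_en", "vds"],
}


def components_for(presentation, components=STREAM_COMPONENTS, presentations=PRESENTATIONS):
    """Resolve a presentation to the components it uses from the single stream."""
    ids = presentations[presentation]
    missing = [i for i in ids if i not in components]
    if missing:
        raise KeyError(f"components not in stream: {missing}")
    return [components[i] for i in ids]


print(components_for("English + VDS"))
```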

Multi-Stream Hybrid presentations send Audio Program Components over multiple paths, with the primary component delivered via broadcast and other components delivered via a secondary path, broadband being one option.

Of the two decoding modes, Core Decoding delivers a simple, complete audio presentation to target devices with simple playback capabilities, such as phones and televisions. Full Decoding delivers complete presentations to devices with more complex decoding capabilities, such as home theaters.
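A receiver handling a hybrid presentation has to work out which components it can actually obtain and which decoding mode to use. The sketch below assumes, hypothetically, that a receiver without a broadband connection simply plays the broadcast components, and that the decoding mode follows from a single capability flag; the types and function names are illustrative, not taken from A/342.

```python
from dataclasses import dataclass
from enum import Enum


class Path(Enum):
    BROADCAST = "broadcast"
    BROADBAND = "broadband"


class DecodingMode(Enum):
    CORE = "core"  # simple, complete presentation for simple playback devices
    FULL = "full"  # complete presentation for devices like home theaters


@dataclass
class Component:
    name: str
    path: Path


def plan_playback(components, broadband_connected: bool, supports_full_decoding: bool):
    """Return the decoding mode and the names of components the receiver can obtain."""
    mode = DecodingMode.FULL if supports_full_decoding else DecodingMode.CORE
    reachable = [
        c.name for c in components
        if c.path is Path.BROADCAST or broadband_connected
    ]
    return mode, reachable


hybrid = [
    Component("Complete Main", Path.BROADCAST),  # primary component via broadcast
    Component("Alternate-language dialog", Path.BROADBAND),
]
print(plan_playback(hybrid, broadband_connected=False, supports_full_decoding=False))
```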

Fig. 2: This graphic represents one possible option for a 7.1+4 control room speaker setup. Actual control room speaker layouts have not yet been specified by the ATSC.

The documents that make up A/342 give us an enormous amount of information, far beyond what is presented here, but there are still gaps. For instance, proper speaker placement for 7.1+4 television audio mix rooms remains unspecified (Fig. 2), and the entire rendering process needs to be clarified. Fortunately, manufacturers and professional organizations are developing training initiatives to help mixers and technical staff get a handle on NGA and prepare for rollout, which may make this the most challenging and exciting time to be a television mixer since the switch to digital.

Jay Yeary is a broadcast engineer and consultant who specializes in audio. He is an AES Fellow and a member of SBE, SMPTE, and TAB. He can be contacted through TV Technology magazine or at transientaudiolabs.com
