Automated Captioning Is Here to Stay

Automation has been infused into innumerable elements of our daily lives. From production and assembly lines to broadcast facilities around the world, automated processes and workflows have put down deep roots and have forever changed the way we work, shop and entertain ourselves.

A common concern across all applications of automation is the reduction, or outright elimination, of the human element. While the move from manual to automated operations will undoubtedly remove human error in many cases, there are certainly more sensitive tasks where the argument for keeping a manual workflow remains strong.

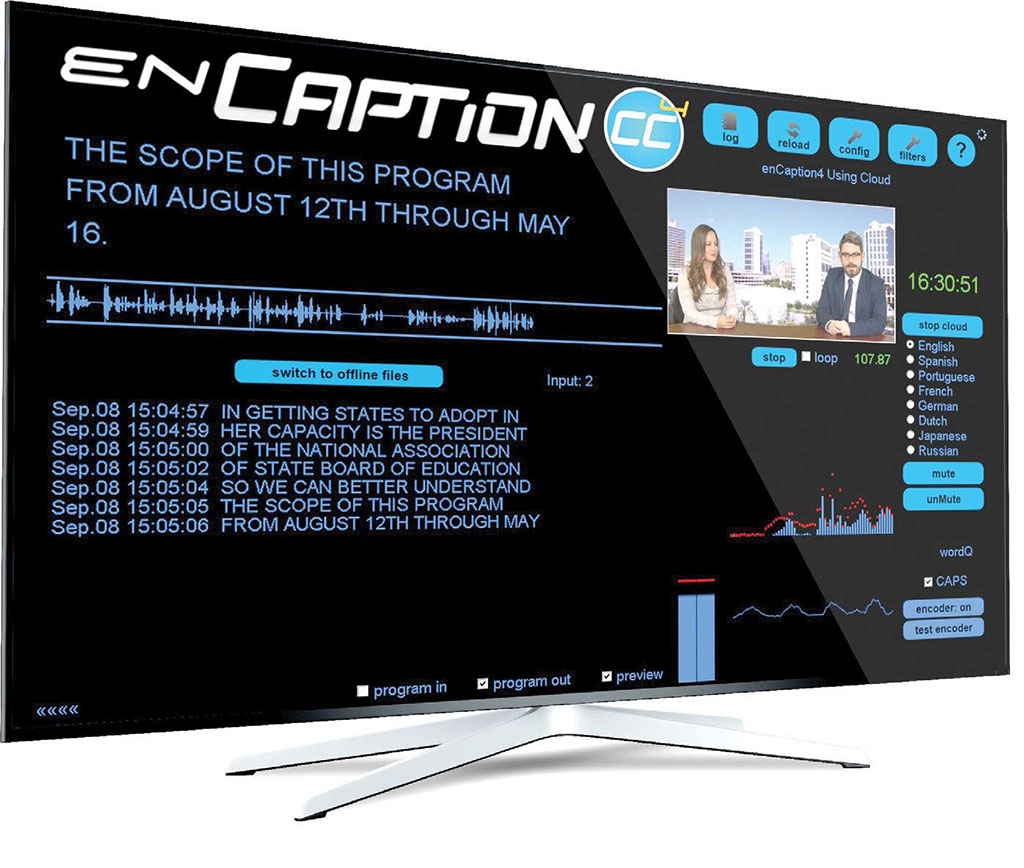

In the broadcast space, the transition to automated closed-captioning workflows is one topic that sparks intense discussion, both for and against. However, the technology has advanced enough to earn the confidence of broadcasters in many of today’s top designated market areas (DMAs), and it clearly represents the future of this important application.

EVOLUTION OF SPEECH-TO-TEXT

Speech recognition dates back to the 1950s, with modest first steps focused on digits and the most basic English words. With consumer services such as Siri and Alexa improving with each product generation, it’s clear that the speed and accuracy of speech-to-text recognition have come a long way. The same is true of automated captioning technology, which today benefits from the strengths of modern artificial intelligence.

[For an opposing view, read: Is It Live, Or Is It Automated Speech Recognition?]

While closed-captioning mandates for broadcast television differ around the world, their unifying purpose is to ensure that deaf and hearing-impaired viewers can fully understand and enjoy the shows they watch. Beyond deaf and hearing-impaired audiences, statistics show that one in six viewers worldwide prefers to receive closed captions with their content.


Producing and transmitting live, manual closed captions has long been challenged by high costs, limited captioner availability, variable latency, and inconsistent accuracy. And it’s true that the transition to more automated, software-defined captioning workflows introduced a new series of challenges.

For example, while automatic speech recognition removes the cost and staffing concerns of manual captioning, early-generation servers and processors struggled with accuracy and latency. These problems were magnified for broadcasters that must now deliver accurate closed captioning across a multichannel, multilingual, multistandard and multiplatform media landscape.

These concerns are rapidly diminishing. The accuracy of speech-to-text conversion across multiple languages continues to improve thanks to powerful advances in deep neural networks. In fact, accuracy on today’s strongest platforms has reached 90 percent or higher. The statistical algorithms behind these advances, coupled with larger multilingual databases to mine, more effectively interpret (and accurately spell out) the speech coming through the air feed or mix-minus microphone.
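The article does not say how its 90 percent figure is measured. One common way to score speech-to-text output is word error rate (WER): the minimum number of word substitutions, deletions and insertions needed to turn the recognized text into a reference transcript, divided by the number of words in the reference. Accuracy is then often quoted as 1 minus WER. The sketch below assumes that convention; the function names and sample sentences are illustrative only.

```python
# Word error rate (WER) sketch. Assumption: "accuracy" means 1 - WER,
# one common convention; the article does not define its metric.


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words (one row of the Levenshtein table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or exact match)
            )
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Accuracy as 1 - WER; can drop below zero if the output has many insertions."""
    return 1.0 - word_error_rate(reference, hypothesis)


if __name__ == "__main__":
    reference = "the storm will reach the coast by tonight and bring heavy rain"
    recognized = "the storm will reach the coast by tonight and bring heavy reign"
    print(f"WER: {word_error_rate(reference, recognized):.3f}")
    print(f"Accuracy: {word_accuracy(reference, recognized):.1%}")
```

In the sample, one misrecognized homophone ("reign" for "rain") in a 12-word reference gives a WER of 1/12, about 0.083, or roughly 92 percent accuracy. A single error per sentence is enough to pull a system down toward the 90 percent mark.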

Meanwhile, faster and more powerful computing engines have reduced the latency of automated captioning to near real time. This achievement is particularly impressive given that, as recently as one or two generations ago, many systems took between 30 and 60 seconds to deliver automated captions.
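One way to picture near-real-time captioning is chunked, or streaming, recognition: instead of waiting for a long stretch of audio, the engine transcribes short chunks and emits text as each one finishes. The following is a minimal hypothetical sketch, not how any particular product works. `recognize_chunk` stands in for a real speech-to-text engine, and the worst-case delay model (chunk length plus processing time) is a simplifying assumption.

```python
# Hypothetical streaming-captioning sketch. `recognize_chunk` stands in for a
# real speech-to-text engine; it is not any specific product's API.
from typing import Callable, Iterator, List, Tuple


def stream_captions(
    samples: List[float],
    sample_rate: int,
    chunk_seconds: float,
    recognize_chunk: Callable[[List[float]], str],
) -> Iterator[Tuple[float, str]]:
    """Yield (chunk end time in seconds, text) as soon as each chunk is recognized."""
    chunk_size = max(1, int(sample_rate * chunk_seconds))
    for start in range(0, len(samples), chunk_size):
        chunk = samples[start:start + chunk_size]
        text = recognize_chunk(chunk)
        if text:  # skip silence or chunks with no recognized words
            yield (start + len(chunk)) / sample_rate, text


def worst_case_delay(chunk_seconds: float, processing_seconds: float) -> float:
    """Simplified model: a word spoken at the start of a chunk waits for the
    rest of the chunk to arrive, then for the engine to process it."""
    return chunk_seconds + processing_seconds


if __name__ == "__main__":
    def fake_engine(chunk: List[float]) -> str:
        # Placeholder for real recognition: just reports the chunk size.
        return f"[{len(chunk)} samples]"

    audio = [0.0] * 25  # 2.5 seconds of placeholder audio at 10 samples/second
    for end_time, text in stream_captions(audio, 10, 1.0, fake_engine):
        print(f"{end_time:.1f}s: {text}")
    print(worst_case_delay(1.0, 0.5), worst_case_delay(30.0, 0.5))
```

Under this simple model, 1-second chunks with half a second of processing cap the delay at about 1.5 seconds, while waiting for a full 30-second segment pushes it past 30 seconds.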

Additionally, as closed-captioning software matures, emerging features that eliminate crosstalk, improve speaker identification and ignore interruptions are improving the overall quality and experience for hearing-impaired viewers.
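Speaker identification ultimately shows up in the caption text itself. In North American broadcast captioning, a change of speaker is commonly marked with two chevrons (>>). The sketch below assumes a hypothetical upstream diarization step (not shown) has already labeled each text segment with a speaker. It inserts the marker whenever the label changes and drops empty segments, such as crosstalk that was filtered out.

```python
# Sketch: mark speaker changes with ">>", a common convention in North
# American broadcast captioning. Speaker labels are assumed to come from a
# hypothetical upstream diarization step that is not shown here.
from typing import List, Optional, Tuple


def mark_speaker_changes(segments: List[Tuple[str, str]]) -> List[str]:
    """Return caption lines, prefixing '>> ' whenever the speaker label changes."""
    lines: List[str] = []
    previous_speaker: Optional[str] = None
    for speaker, text in segments:
        text = text.strip()
        if not text:
            continue  # skip empty segments (e.g., crosstalk removed upstream)
        if speaker != previous_speaker:
            lines.append(f">> {text}")
            previous_speaker = speaker
        else:
            lines.append(text)
    return lines


if __name__ == "__main__":
    diarized = [
        ("anchor", "Good evening."),
        ("anchor", "Our top story tonight."),
        ("reporter", "Thanks, Jim."),
    ]
    print("\n".join(mark_speaker_changes(diarized)))
```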

MARCHING FORWARD

Many of the above improvements stem from recent breakthroughs in machine learning, which have enabled a deep neural network approach to speech recognition. Machine learning not only strengthens accuracy; it also helps systems detect different languages and the different ways that people speak.

That intelligence about dialects will provide an overall boost to closed-captioning accuracy. Local context helps as well. Consider a live news operation where on-premises automated captioning software integrates directly with the newsroom computer system without needing an outside network connection. This strengthens availability, since a network outage can no longer take the system down, and lets the software use news scripts and rundowns to learn and validate the spelling of local names and terminology. Both of these points were once major, and justified, arguments against automated captioning.
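To make the rundown idea concrete, here is a hypothetical sketch of vocabulary correction: words in the recognizer's output that closely resemble a name from the newsroom rundown are replaced with the rundown's spelling. It uses Python's standard `difflib.get_close_matches` for fuzzy matching. The post-processing strategy, the 0.8 similarity cutoff and the sample names are illustrative assumptions; many speech engines instead accept custom vocabularies or phrase hints directly.

```python
# Hypothetical sketch: correct misrecognized local names using terms taken
# from a newsroom rundown. The cutoff and matching strategy are assumptions.
import difflib
import re
from typing import Iterable


def correct_with_rundown(transcript: str, rundown_terms: Iterable[str],
                         cutoff: float = 0.8) -> str:
    """Replace each word that closely resembles a rundown term with that term's spelling.

    Only single-word terms are handled; multiword names would need phrase matching.
    """
    vocabulary = {term.lower(): term for term in rundown_terms}

    def fix(match: re.Match) -> str:
        word = match.group(0)
        best = difflib.get_close_matches(word.lower(), list(vocabulary), n=1, cutoff=cutoff)
        return vocabulary[best[0]] if best else word

    return re.sub(r"[A-Za-z']+", fix, transcript)


if __name__ == "__main__":
    rundown = ["Kowalski", "Schenectady"]
    print(correct_with_rundown("mayor kowalsky spoke in schenectedy today", rundown))
```

A higher cutoff reduces the risk of overwriting ordinary words that happen to look like a rundown name, at the cost of missing worse misspellings.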

Automated captioning also makes captioning practical at a much larger scale, and costs fall as computer automation replaces human stenographers. As the amount of content that needs captioning grows, economies of scale drive costs down even further for broadcasters that automate these processes.

As systems grow more reliable and broadcasters grow more comfortable with the technology, they will also find new efficiencies and opportunities along the way. For one, broadcasters that need to cut into a regularly scheduled program with breaking news or weather alerts will no longer be forced to find qualified (and expensive) live captioners on short notice.

Improvements in captioning technology have also arrived just in time for emerging needs, such as networks tasked with captioning large libraries of prerecorded content. As more systems move to software-defined platforms, the captioning workflow for prerecorded and long-form content has been greatly simplified. Post-production staff can essentially drag and drop video files into a file-based workflow that extracts the audio track for text conversion. The resulting caption files can then be delivered in various lengths and formats for TV broadcast, the web, mobile and other platforms.

And with multiplatform reach, broadcasters also have opportunities to caption live and on-demand streams, ensuring that hearing-impaired and multilingual audiences watching online are properly served as well. The future of automated captioning is very exciting, especially since we are only beginning to reap its benefits.

Ken Frommert is president of ENCO.