Automated Data Anomaly Detection

Data growth has been, and continues to be, a topic that influences which storage architectures are selected, how much capacity they provide and which applications they serve. Beyond the storage architecture itself, continued data growth also affects the analysis of the data, which ensures that anomalies are identified, located and corrected.

Data analysis has traditionally been confined to IT departments and systems administrators. For organizations experiencing the dynamic growth of what is referred to as “big data,” these administrative teams must now preside over enormous amounts of unstructured data. According to industry analysts, much of this data (media files and other information) has been largely overlooked and therefore remains uncategorized, uncataloged or, in many cases, in numerous raw (original) formats including analog or digital videotape and film.

Traditionally, unstructured data comprised documents such as PowerPoint presentations, spreadsheets, social media collections used in marketing, newscasts and other trending data. Production and project emails among coworkers, production teams, approval management, etc., all contribute to the “unstructured/big data” domain. The explosion of video, which is largely unstructured, into all areas of information technology has complicated the management and identification of anomalies, which can compromise the integrity of the content immediately and well into the future.

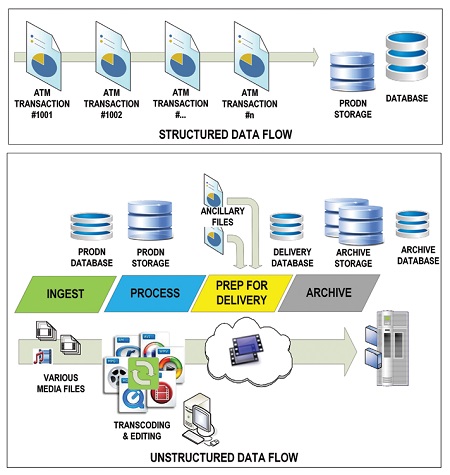

Fig. 1: Generic workflows for media systems

CONVENTIONAL ACTIVITIES

All of this content is most likely considered valuable information. Since the data is used throughout production processes, it should probably become part of the permanent archive for the production or project. Conventional media asset management (MAM) systems have, for the most part, focused on the video cataloging, search and production aspects of the project life cycle. Functionally and broadly speaking, these workflows include ingest, processing, preparation for distribution and archive (Fig. 1).

When you expand the requirements to include email, graphics, scripts, production schedules, approvals, rights management, etc., the system tasked with managing these extended sets of data might be called a digital asset management (DAM) system. Specialists in these areas might argue about where the boundaries are and may indeed add other layers, for example “production asset management” (PAM); from the business side, the same system might be called “business process management.” However one divides the subjects, all these data sets, when looked at holistically, make up the broadening dimensions of what is now called “big data.”

Managing myriad files, file formats, versions, and such, especially when much of the data is unstructured, becomes a systemwide issue, irrespective of the scale of the enterprise itself. Some manufacturers of MAM/PAM systems place constraints on just how far they take the descriptions or types of files they can include in their management solution set.

Others prefer to address all the issues under the umbrella of a DAM, which allows the end user to add or modify modules for planning, prediction, trending, reporting and orchestration. What you need, whether as an individual or an enterprise, must be carefully analyzed; products and modules must then be selected and assembled into an all-encompassing system, which often requires custom integration with ancillary components that may or may not be available from the DAM provider’s shopping cart.

CORRUPTION, SLOWDOWNS AND PIRACY

Irrespective of the depth and breadth of the asset management solution set, there remain issues related to monitoring, troubleshooting and analyzing data, whereby systems (human or machine) look for changes that could indicate data corruption, inconsistency, slowdowns in performance, piracy, etc. When data anomalies are located, they sometimes point to breaches in security in which data flow processes change abruptly, such as when large numbers of random files are transferred from one storage system to another without following the rules or procedures established by the MAM/DAM or other machine-controlled systems.

Of the two major forms of data, “structured” data lends itself to easier analysis because the information (and its flow) consists of well-defined and expected sets of content within each record. Structured data includes items such as order entries and ATM transactions. In structured data, messages usually contain an expected set or sequence of information; for example, the IP address of the client, the URL being requested, the code sequence or the kind of browser being used. Because the events are machine-readable, i.e., each event can be easily parsed into fields (such as in a database) and then readily interpreted by a computer program, the structure is generally consistent and predictable.
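As a minimal sketch of what "easily parsed into fields" can look like, the Python example below splits one log line into named fields. The line layout, the field names and the sample values are assumptions made for illustration; they are not taken from any particular MAM, DAM or web server.

```python
import re
from typing import Optional

# Hypothetical layout: client IP, timestamp, request, status code, browser.
LOG_PATTERN = re.compile(
    r'(?P<client_ip>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+)" (?P<status>\d{3}) "(?P<browser>[^"]*)"'
)

def parse_event(line: str) -> Optional[dict]:
    """Split one structured log line into named fields.

    Returns None when the line does not follow the expected layout.
    """
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])  # numeric code, ready for a database column
    return fields

# Illustrative sample line (made-up values).
sample = '192.0.2.10 [2024-01-15T10:32:00] "GET /assets/clip-001.mxf" 200 "Mozilla/5.0"'
event = parse_event(sample)
```

Because every record follows the same layout, a single pattern is enough. A free-form message would simply return `None`, which is the gap that unstructured data opens up.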

Fig. 2: Structured vs. unstructured data flows

When dealing with “unstructured” data, the content is more free form, with the meaning of the text within an event being somewhat arbitrary (see the generic comparison of structured versus unstructured data flows in Fig. 2). For example, it is hard for a machine to predict search decisions, content frame boundaries, or whether an accompanying document is related to a single element or many elements.

Often an event record may be nothing more than a timestamp followed by free-form information. The timestamp can be database-anchored, but the remaining information can, and usually does, relate to many other locations, storage sources, storage destinations, processes, etc.
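A short Python sketch of that shape follows. It assumes, purely for illustration, that each event begins with an ISO 8601 timestamp and a single space; the function name and the sample message are hypothetical.

```python
from datetime import datetime

def split_event(line):
    """Return (timestamp, remainder) for one event line.

    Assumes the first whitespace-delimited token is an ISO 8601 timestamp.
    Only the timestamp becomes a typed, database-friendly value; the rest
    stays free-form text.
    """
    stamp, _, remainder = line.partition(" ")
    return datetime.fromisoformat(stamp), remainder

# Illustrative event (made-up content).
when, text = split_event(
    "2024-01-15T10:32:00 moved 412 files from nearline to archive-b"
)
```

Only `when` can be indexed and queried reliably. Everything of interest in `text` (counts, sources, destinations) still has to be interpreted.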

The type and range of unstructured data are arbitrary, making it much more difficult to apply machine-reading approaches (such as search or advanced analysis) that can derive meaning. As a result, humans often must visually sort through the data looking for the changes that could indicate the cause of performance issues or even a security breach. Relying on human analysis of large data sets is risky, often causing anomalous messages to be missed that could have provided the exact information one was trying to extract from the data. Automated analysis of unstructured data is also difficult; however, it can be accomplished if an additional step is inserted.

STRUCTURE TO UNSTRUCTURED DATA

Bringing structure to the unstructured data via dynamic classification (sometimes called “categorization”), a task that a properly configured MAM or DAM is designed to do, makes the identification of data anomalies easier.

Advanced machine learning algorithms are employed in this process. As the unstructured data is classified, the algorithms identify message similarities and assign like messages to one of the dynamically generated category names.
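The idea of grouping like messages under generated category names can be sketched in Python as follows. This is a deliberately simple stand-in, not a machine learning algorithm: it masks any token containing a digit and treats messages with the same resulting template as similar. The masking rule, the `category-N` naming scheme and the sample messages are all assumptions for illustration.

```python
import re
from collections import defaultdict

# Tokens containing a digit (file names, counts, durations) are treated as variable parts.
VARIABLE_TOKEN = re.compile(r"\S*\d\S*")

def template_of(message):
    """Replace the variable parts of a message with a placeholder."""
    return VARIABLE_TOKEN.sub("<*>", message)

def categorize(messages):
    """Group messages that share a template under a generated category name."""
    names = {}                  # template -> generated category name
    groups = defaultdict(list)  # category name -> messages
    for message in messages:
        template = template_of(message)
        name = names.setdefault(template, f"category-{len(names) + 1}")
        groups[name].append(message)
    return dict(groups)

# Illustrative messages (made-up content).
messages = [
    "transfer of clip-001.mxf to archive-2 took 14s",
    "transfer of clip-207.mxf to archive-1 took 9s",
    "render queue stalled on node7",
]
groups = categorize(messages)
```

Here the two transfer messages fall into one category and the render message into another. A production classifier would need a far more tolerant notion of similarity, since this sketch separates messages that differ in any non-numeric word.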

Once this background task is completed, users can begin to analyze events for anomalies by baselining, trending or locating deviations in the rate of the events by each category over time.
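One way to picture baselining and deviation detection for a single category is the Python sketch below. It assumes event counts have already been tallied per fixed time interval, uses the first few intervals as the baseline and flags later intervals whose count lies more than a chosen number of standard deviations from the baseline mean. The function name, the parameters, the threshold and the sample counts are illustrative assumptions.

```python
from statistics import mean, stdev

def find_rate_anomalies(counts, baseline_intervals=6, threshold=3.0):
    """Flag intervals whose event count deviates strongly from the baseline.

    counts: event counts for one category, one value per time interval.
    Returns a list of (interval_index, count) pairs.
    """
    baseline = counts[:baseline_intervals]
    mu = mean(baseline)
    sigma = stdev(baseline) or 1.0  # fall back to 1.0 if the baseline is perfectly flat
    anomalies = []
    for index in range(baseline_intervals, len(counts)):
        z_score = (counts[index] - mu) / sigma
        if abs(z_score) > threshold:
            anomalies.append((index, counts[index]))
    return anomalies

# Illustrative counts of "file transfer" events per hour (made-up values).
transfers_per_hour = [12, 15, 11, 14, 13, 12, 14, 13, 410, 12]
anomalies = find_rate_anomalies(transfers_per_hour)
```

With these made-up numbers only the interval with 410 transfers is flagged, which is the kind of abrupt bulk movement of files described earlier. A fixed baseline is the simplest choice; a rolling window would adapt better to gradual trends.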

The concepts employed in this type of analysis take time to set up. Once started, they begin collecting category trending information. Upon completion of the initial data runs, the now “categorized-unstructured data” can be treated in a fashion similar to structured data.

The automated analysis of unstructured data provides time savings. It can relieve humans of the chores of data mining by automatically making them aware of behaviors or changes in recorded data. Diagnostic analysis times (i.e., forensics) are shortened considerably through this process.

The concepts of data analysis and anomaly detection are a growing science. Enterprise-class systems that serve many locations and utilize centralized (or cloud-based) architectures may find value in applying these techniques and technologies to their operational practices, especially when sensitive or compartmentalized information structures are involved.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about this and other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.