A/V Fingerprinting—Transporting, Binding and Applications

Audio and video fingerprints are small pieces of data derived from certain characteristics of each type of content. The algorithms for generating fingerprints, described in Part 1 (see “Book ‘Em Danno: A/V Fingerprinting”), were developed as part of a proposed SMPTE standard from the SMPTE Drafting Group (24TB-01 DG Lip-sync). As of this writing, the standard is going through the approval cycle.

Other parts of the proposed standard deal with the container for the A/V fingerprint data (which packetizes the data), as well as how to transport and bind it to various digital formats.

For each video frame (or field in interlaced video), there is one byte for the video fingerprint and several bytes for the audio fingerprint, the number depending on the video frame rate. For each frame, the video and audio fingerprints associated with that frame are bundled together into a defined container, with one container per frame.

The container is additional data that wraps around the fingerprint data and carries the protocol version, sequence count, status bits, ID descriptor and checksum.
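To make the idea of a container concrete, here is a minimal sketch in Python. The field widths, field order and the use of CRC-32 as the checksum are all assumptions made for illustration; the actual layout is defined by the proposed SMPTE standard and is not reproduced here. The names `pack_container` and `unpack_container` are hypothetical.

```python
import struct
import zlib

# Hypothetical layout, NOT the SMPTE-defined one: the field widths and
# their order are assumptions chosen only to illustrate the concept.
# version (1 byte), sequence count (2), status bits (1), ID descriptor (2)
HEADER = struct.Struct(">BHBH")


def pack_container(version, sequence, status, descriptor_id, video_fp, audio_fp):
    """Wrap one frame's fingerprints in an illustrative container."""
    if not 0 <= video_fp <= 0xFF:
        raise ValueError("the video fingerprint is one byte per frame")
    body = HEADER.pack(version, sequence & 0xFFFF, status, descriptor_id)
    # One video byte, then a length byte and the audio fingerprint bytes.
    body += bytes([video_fp, len(audio_fp)]) + bytes(audio_fp)
    checksum = zlib.crc32(body) & 0xFFFFFFFF  # CRC-32 is a stand-in checksum
    return body + struct.pack(">I", checksum)


def unpack_container(packet):
    """Verify the checksum and return the container fields as a dict."""
    body = packet[:-4]
    (checksum,) = struct.unpack(">I", packet[-4:])
    if zlib.crc32(body) & 0xFFFFFFFF != checksum:
        raise ValueError("checksum mismatch")
    version, sequence, status, descriptor_id = HEADER.unpack_from(body, 0)
    video_fp = body[HEADER.size]
    audio_len = body[HEADER.size + 1]
    start = HEADER.size + 2
    return {
        "version": version,
        "sequence": sequence,
        "status": status,
        "descriptor_id": descriptor_id,
        "video_fp": video_fp,
        "audio_fp": body[start:start + audio_len],
    }
```

The point of the sketch is only that the fingerprints travel inside a small, self-describing wrapper that a receiver can validate before using the payload.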

Once the fingerprint data is containerized, it can be bound to the audio and video and sent along with them to their destination. By design, the fingerprint is not part of the audio or video content itself. Rather, it’s a separate piece of data, and the audio and video content remains unchanged.

GENERIC MECHANISM

Currently the proposed SMPTE standard covers fingerprint binding in SDI, MPEG-2 transport stream and Internet UDP/IP. Work is continuing on binding in file-based formats.

For SDI transport, the fingerprint container would be carried in a standard ST291 vertical ancillary (VANC) packet.

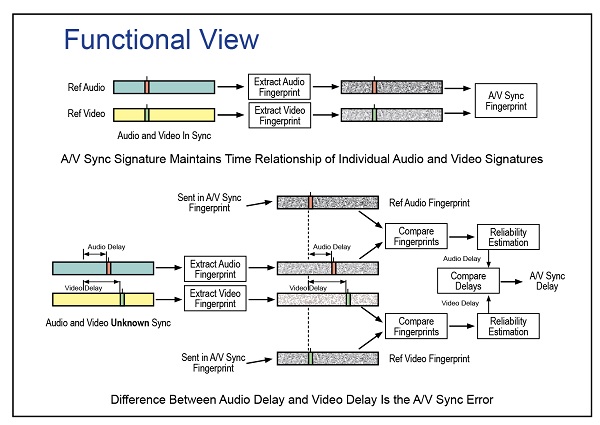

A functional view of how A/V fingerprints can be used to determine lip sync errors. Courtesy of SMPTE.

“This is a generic mechanism to take a chunk of data and attach it to the stream,” said Paul Briscoe, chair of the SMPTE Drafting Group (24TB-01 DG Lip-sync). A common use for VANC is for embedded digital audio; the fingerprint data would be another packet in this space. The fingerprint ancillary stream will have its own unique registered data identifier (DID) and secondary data identifier (SDID).

For MPEG-2 transport streams, the fingerprint container would be inserted as private user data, as defined by the MPEG-2 TS standard. It would have its own unique packet identifier (PID), an MPEG-2 registration address.

With both SDI and MPEG-2, the fingerprint data is inherently bound to the video and audio essence, with MPEG-2 via maps. However, with IP transport, fingerprint data is indirectly bound to the essence.

For IP transport, the fingerprint data is put into a raw user datagram protocol (UDP) packet with an IP address and an ID descriptor. These relate to the audio and video streams so that a receiver can pull them out and re-associate them with their corresponding audio and video.

UDP is the simplest type of Internet packet, according to Briscoe. It’s generic, one-way, transmit-only, and is not acknowledged by a receiver. It’s very possible that fingerprint packets could get lost or be received out of order, but as we’ll see in a minute, that’s generally not a problem.
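As a rough illustration of how a receiver might cope with lost or reordered packets, the sketch below sorts arriving containers by their sequence count and reports which counts never arrived. It assumes the sequence count increases by one per frame and does not wrap within the window being examined; both are assumptions made for this example, not statements about the proposed standard. The function name `order_and_find_gaps` is hypothetical.

```python
def order_and_find_gaps(received):
    """Sort (sequence, payload) pairs and list the missing sequence counts.

    `received` holds the pairs in arrival order. Assumes, for illustration
    only, that the count increments by one per frame and does not wrap
    inside this window.
    """
    by_seq = {}
    for seq, payload in received:
        by_seq.setdefault(seq, payload)  # keep the first copy, drop duplicates
    if not by_seq:
        return [], []
    first, last = min(by_seq), max(by_seq)
    ordered = [(s, by_seq[s]) for s in range(first, last + 1) if s in by_seq]
    missing = [s for s in range(first, last + 1) if s not in by_seq]
    return ordered, missing


# Packets 3, 1 and 4 arrive out of order, packet 1 arrives twice,
# and packet 2 never arrives.
ordered, missing = order_and_find_gaps(
    [(3, b"c"), (1, b"a"), (4, b"d"), (1, b"a")]
)
```

A receiver built along these lines would simply carry on with whatever fingerprints it has; the missing ones are noted but not requested again, since UDP offers no acknowledgment.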

FILE BINDING

Binding fingerprint data to files is more involved. “The file binding aspect is about to become the primary focus of the work,” Briscoe said. “We have a proposal that everyone feels is viable and is based on the fingerprinting standard we’re completing now. It’s basically a definition of how to bind those fingerprints to files.”

It’s important to note that the same fingerprint container is used for all binding applications. “The fingerprint bucket [the container] looks the same whether in SDI or in a file,” Briscoe said. “The payload is the same, so you can go between media very easily. You don’t have to take it apart and decode it [when going between different media].”

Briscoe compared transporting fingerprint data to a letter (the fingerprint data) inside an envelope (the container). That letter can be transported by FedEx, UPS or USPS Express Mail, for example, each with its own outside envelope, unique to each carrier. That outside envelope is analogous to the specific packet wrapper for SDI VANC, MPEG-2 TS or UDP/IP.

FREEDOM OF CHOICE

How the audio and video fingerprints get used in practice is not part of the proposed SMPTE standard. Nor are any consumer standards for bringing fingerprint applications to the home end-user, although liaisons have been established with CEA, DVB and other organizations, Briscoe said.

“We are building a toolset that people can use to build applications and leaving the feature-space stuff to innovative designers,” Briscoe said. “Accordingly, we are standardizing the bits that need to be nailed down. There may ultimately be utility in creating an Engineering Guideline or Recommended Practice, which informs people further. Should it become evident that we need to standardize other aspects, we would do so.”

Applications will be left to developers and manufacturers, but here’s a typical scenario:

Content that’s known to be in A/V sync is fingerprinted. It’s important to remember that the audio and video must already be in sync when they are fingerprinted, because this technology will not fix sync problems that were present at the source.

Once the fingerprints are derived they are bound to the source. At the content’s destination, fingerprints are derived from the locally received content, a set for video and another set for audio. Video and audio fingerprints are correlated to the source fingerprints. This establishes delay times for each type of signal. The difference in delay between audio and video is the lip sync error.
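The correlation step can be sketched in a few lines of Python. This is not the matching method of the proposed standard, which the article does not describe; it is a simple stand-in that slides the received fingerprints against the source fingerprints and picks the lag with the smallest mean absolute difference. It also assumes one fingerprint value per frame for both audio and video, whereas the real audio fingerprint is several bytes per frame. The names `estimate_delay` and `lip_sync_error` are hypothetical.

```python
def estimate_delay(source, received, max_lag):
    """Return the delay, in frames, at which `received` best matches `source`.

    A stand-in for correlation: for each candidate lag, compare source[i]
    with received[i + lag] and keep the lag with the smallest mean
    absolute difference. Only non-negative delays are searched.
    """
    best_lag, best_score = 0, float("inf")
    for lag in range(max_lag + 1):
        pairs = [
            (source[i], received[i + lag])
            for i in range(len(source))
            if i + lag < len(received)
        ]
        if not pairs:
            continue
        score = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if score < best_score:
            best_lag, best_score = lag, score
    return best_lag


def lip_sync_error(video_src, video_rx, audio_src, audio_rx, max_lag=10):
    """Audio delay minus video delay, in frames (positive: audio is late)."""
    video_delay = estimate_delay(video_src, video_rx, max_lag)
    audio_delay = estimate_delay(audio_src, audio_rx, max_lag)
    return audio_delay - video_delay
```

With source fingerprints that arrive three frames late for video and five frames late for audio, `lip_sync_error` would report that the audio trails the video by two frames. What the application does with that number is a separate matter.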

What happens at this point would be up to the application. It can automatically make corrections, a useful feature in a home viewing system, or it can warn an operator that there is an error and indicate what it is, but make no automatic corrections. Or it can correct sync and re-fingerprint before the signal is sent down the line.

Fingerprinting technology is pretty robust, as stress tests during the working group’s activities showed. “The fingerprint algorithm that we’ve chosen uses specific measures of video and audio that are intended to be minimally impacted by various processing, such as geometrical scaling, loss of detail and so on,” Briscoe said.

And if some fingerprints get lost along the way? Lip sync doesn’t generally change over the course of a program, Briscoe said. As long as some fingerprints are received, the correlation can still happen, and adjustments can be made if necessary.

“You don’t need a fingerprint for every frame,” Briscoe said. “You can lose fingerprints, [and when they return] you can start measuring again to see if there’s a change.”

Once the SMPTE standards are approved as expected, it will be interesting to see what applications result. It sounds like we may finally have a handle on how to detect and correct lip sync errors.

Mary C. Gruszka is a systems design engineer, project manager, consultant and writer based in the New York metro area. She can be reached via TV Technology.
