Cloudy—Not at the Edge

At this point in the evolution of technology, we’re firmly in what might be called the “cloud computing era.” Yet an interesting, and somewhat mysterious, transition is changing both the location and the value proposition of the cloud.

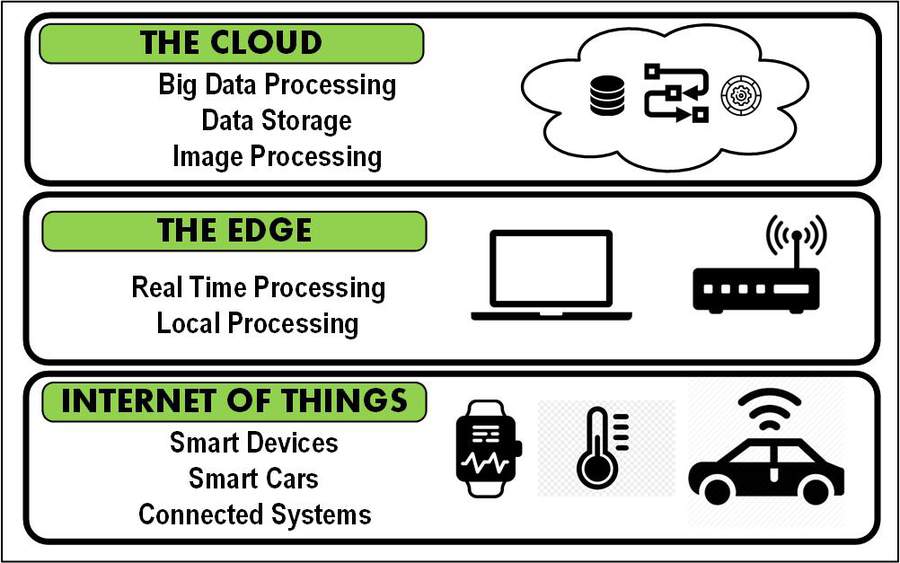

Many of the new applications thought to be ideal for cloud computing may actually run closer to the source of the data, that is, at the “edge.”

At the personal level, many of us use products and services powered by intelligence that lives in the cloud. We place content in the cloud and we pull content from the cloud. Well-known products include those from Google (Chromecast), Amazon (Echo) and Apple (TV). Centralized services are familiar to many, including Gmail, Dropbox, Adobe Creative Cloud and Autodesk AutoCAD. These organizations use cloud services to store, back up, protect and interchange data, and they are always looking into new methods to achieve better security, decrease latency and reduce internet bandwidth traffic.

The “edge” is a relatively recent buzzword, similar in context to the cloud and the Internet of Things (IoT). Maybe “the edge” is not yet as familiar as AI and VR/AR, but it has similar importance to other technological capabilities that circle our universe and impact our lives on a daily basis. And people trust these services; we allow them to routinely collect our data and utilize it in ways that we can’t possibly manage ourselves. We let these companies “own” that data (unless you’re doing the GDPR thing), and we recognize that it’s literally impossible to halt the external or additional uses of our data due to myriad reasons, privileges or end-user license agreements (EULAs) that we graciously sign and accept before landing a single piece of data in their repository.

CLOUD DEPENDENCY

A significant number of companies have openly adopted the cloud and rely on the hosting, machine learning (ML), infrastructure and power it offers. Nonetheless, use of the cloud, as expected, continues to ebb and flow with the needs and capabilities of the technology ecosystem. Fundamentally, because of evolution, compute and other dependencies, we are beginning to see certain functionalities and capabilities move from the “public” cloud to what is known as “the edge.”

To see where this is headed, we start by looking at what “the edge” and “edge computing” are about. Basically, edge computing refers to computational work that is performed, or “lives,” as close to the source or information-gathering points of the data as is possible or practical.


But why do this when the cloud can do this work, probably faster and more efficiently than at the edge? There are multiple reasons, some driven by technological evolution and others arising because the ecosystem, in whole or in part, allows this to happen.

PRIVACY

Families, institutions, enterprises, small businesses and individuals are increasingly concerned about data privacy (and piracy). Many of you have likely had your credit card hacked at least once. While we may trust the cloud and the firms providing the service, one can only wonder not if, but when, something will be compromised.

If only the minimum, most applicable data is sent to the cloud service, then certain (unknown) risks might be mitigated. If the data that absolutely needed to go to the cloud were encrypted locally, contained a biometric key and were sent over a protected channel (possibly using blockchain technologies), the user could feel better protected. Doing this means that some of the compute process must be brought to the edge.

At least one major smartphone manufacturer does just that. They brought what was once only available as cloud-based computing out to the edge, creating a powerful change in how compute power is distributed.
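The data-minimization idea in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the field names and the `minimize_payload` helper are hypothetical, and local encryption and the protected channel mentioned above are deliberately left out.

```python
from typing import Any, Dict, Iterable


def minimize_payload(reading: Dict[str, Any],
                     allowed_fields: Iterable[str]) -> Dict[str, Any]:
    """Keep only the fields the cloud service actually needs.

    Everything else stays on the edge device and is never uploaded.
    """
    allowed = set(allowed_fields)
    return {key: value for key, value in reading.items() if key in allowed}


# Hypothetical reading captured on an edge device.
reading = {
    "device_id": "cam-1",
    "motion": True,
    "owner_name": "A. User",    # personal data, not needed in the cloud
    "raw_frame": b"\x00\x01",   # bulky data, kept local
}

cloud_payload = minimize_payload(reading, ["device_id", "motion"])
```

Here only `device_id` and `motion` would leave the device; the owner's name and the raw frame never reach the cloud.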

SECURITY

We’ve heard about poorly managed IoT devices, where the processing needed at the source was either absent or relegated to a location reached only through less secure channels (e.g., the public internet) on the way to the cloud service-center destination. Today, browsers located at the edge, which have moved to the “evergreen” model, are finding success when employing edge computing principles, gaining increased security and better use of bandwidth.

“Evergreen” refers to services that are composed of components that are always up to date. Evergreen IT encompasses services that are employed at the user level and at all the underlying infrastructures, whether localized, at the edge or in a cloud. Edge computing can help manage security by bringing only the needed information to the (public) cloud. Browser and cloud providers are working on operating systems and certified microcontrollers that will manage the types and depth of information that is cloud-bound.

BANDWIDTH

Proponents of edge services (for computing) believe that bandwidth can also be saved. That is, the bandwidth needed to get every piece of data from the host (user) to the cloud can be reduced if some or much of the compute effort is done before going to the cloud. Artificial intelligence is helping to enable this. Say, for example, in a camera security model, you are monitoring only one source (i.e., one camera) and carry only that full image to the cloud; there might not be much savings from edge “computing.” However, if you have several sites (i.e., multiple cameras), you could gain substantial bandwidth savings by combining the data, as smaller images from each of the individual cameras, into a single multiviewer and then transporting a single “composite” image to the cloud.
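The arithmetic behind the multiviewer example can be roughed out as follows. This is a back-of-the-envelope sketch, not a measurement: it assumes the composite image costs about as much bandwidth as one full-resolution camera stream, and the 4 Mbps per-stream figure is purely illustrative.

```python
def uplink_mbps(num_cameras: int, per_stream_mbps: float,
                composite: bool) -> float:
    """Estimate the uplink bandwidth needed to reach the cloud.

    Assumption: a composite multiviewer stream costs roughly the same
    as a single full-resolution camera stream.
    """
    if num_cameras < 1:
        raise ValueError("num_cameras must be at least 1")
    streams = 1 if composite else num_cameras
    return streams * per_stream_mbps


def savings_fraction(num_cameras: int, per_stream_mbps: float) -> float:
    """Fraction of uplink bandwidth saved by compositing at the edge."""
    separate = uplink_mbps(num_cameras, per_stream_mbps, composite=False)
    combined = uplink_mbps(num_cameras, per_stream_mbps, composite=True)
    return 1.0 - combined / separate


one_camera = savings_fraction(1, 4.0)    # single source: nothing to combine
four_cameras = savings_fraction(4, 4.0)  # four sources, one composite
```

Under these assumptions a single camera saves nothing, while four cameras combined into one composite would cut the uplink requirement by three quarters.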

If you added AI at the edge to detect, for example, any movement (as in a motion-detection security function) and only then switched the display from the multi-image view to a single, full-screen image of the active camera, then you’ve eliminated the need to continually look at every image, and you send only what is needed to the cloud for storage or other functions. Once movement stops, the multiviewer reverts to showing all the cameras on a single raster. The AI function might also cache the other non-active images to a local (memory) store and hold them until reviewed by the user later.
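A very simplified version of that switching logic is sketched below. It swaps the "AI" for plain frame differencing, which is a much cruder technique, and it assumes each frame arrives as a flat list of grayscale pixel values; the function names and the threshold are hypothetical.

```python
from typing import List, Optional

Frame = List[int]  # flattened grayscale pixels, 0-255 (assumed format)


def motion_score(previous: Frame, current: Frame) -> float:
    """Mean absolute pixel difference between two equally sized frames."""
    if len(previous) != len(current) or not current:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(previous, current)) / len(current)


def select_view(previous: List[Frame], current: List[Frame],
                threshold: float = 10.0) -> Optional[int]:
    """Pick which camera to show full screen.

    Returns the index of the camera with the most motion, or None when
    no camera exceeds the threshold (stay on the multiviewer).
    """
    if len(previous) != len(current) or not current:
        raise ValueError("need one previous and one current frame per camera")
    scores = [motion_score(p, c) for p, c in zip(previous, current)]
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best if scores[best] > threshold else None


# Three tiny 4-pixel "cameras"; only the second one changes.
before = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
after = [[0, 0, 0, 0], [100, 100, 100, 100], [0, 0, 0, 0]]

active = select_view(before, after)  # camera index to send full screen
idle = select_view(before, before)   # None: keep the multiviewer
```

Only the frame from the selected camera would need to go to the cloud; when `select_view` returns `None`, the composite view is enough.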

LATENCY

Reducing bandwidth utilization also goes hand in hand with minimizing latency. Major data information companies such as Google and Apple are working hard to localize compute, using AI to help control bandwidth and data-traffic demands. Furthermore, if you use “offline-first” techniques (i.e., you open the app on your mobile device without first connecting to the internet), then you conserve bandwidth without compromising performance.
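One way to picture an offline-first app is a local store that answers reads immediately and queues writes until a connection is available. The sketch below is a minimal illustration, not any vendor's implementation; the `OfflineFirstStore` class and the `upload` callback are hypothetical stand-ins for a real cloud client.

```python
from typing import Callable, Dict, List, Optional, Tuple


class OfflineFirstStore:
    """Serve reads from local storage; defer uploads until online."""

    def __init__(self, upload: Callable[[str, str], None]) -> None:
        self._local: Dict[str, str] = {}
        self._pending: List[Tuple[str, str]] = []
        self._upload = upload  # hypothetical cloud upload function

    def write(self, key: str, value: str) -> None:
        # Save locally first so the app keeps working without a network.
        self._local[key] = value
        self._pending.append((key, value))

    def read(self, key: str) -> Optional[str]:
        # Reads never touch the network.
        return self._local.get(key)

    def sync(self, online: bool) -> int:
        """Upload queued writes when online; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self._pending:
            key, value = self._pending[0]
            self._upload(key, value)
            self._pending.pop(0)  # drop only after a successful upload
            sent += 1
        return sent


uploaded: List[Tuple[str, str]] = []
store = OfflineFirstStore(lambda key, value: uploaded.append((key, value)))
store.write("thermostat", "21C")
offline_sent = store.sync(online=False)  # nothing leaves the device
online_sent = store.sync(online=True)    # queued write is uploaded
```

The app reads `thermostat` from local storage right away, and the upload happens later in a single batch once the device is connected.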

Pay attention to these up-and-coming changes when you consider IoT for your home security system or other monitoring features, including thermostats, fire detection, etc. When appropriately managed, edge computing solutions aided by AI can help control security and return answers faster and more reliably.

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on emerging technologies and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.