Content preparation for adaptive-bit-rate video

Today’s media landscape is radically more diverse than just a few years ago. The delivery of consistently acceptable image and sound quality is taken for granted by viewers, despite uncertain or fluctuating bandwidth. Adaptive-bit-rate (ABR) streaming technology makes this possible.

What is ABR streaming?

ABR streaming is a delivery technology designed to provide consistent, high-quality viewing in situations where bandwidth may fluctuate, and where viewers may be on a wide range of devices.

Prior to ABR streaming, Web or mobile video delivery was typically done by encoding a single downloadable file or stream at a fixed bit rate and frame size. Viewers could buffer some of the video, and then simultaneously download and play it back. This delivery model was similar to cable transmission, where a single bit rate is transmitted over a reliable medium.

Unfortunately, transmission media for Web and mobile devices are unreliable, and bandwidths vary. During fixed-rate video playback, viewers with low bandwidth suffer from excessive buffering, which delays playback. To compensate, providers have tended to encode at lower bit rates, punishing viewers with high bandwidth. Even then, any fluctuations in bandwidth can cause buffering delays.

To solve this problem, ABR streaming content is encoded into multiple layers, each potentially a different bit rate, frame size and/or frame rate. These layers are combined into a single package that represents the original content. ABR players switch between layers depending upon the device and available bandwidth, to ensure consistent high-quality playback.

For example, a single ABR package might include six layers, each encoded at progressively higher bit rates. As a viewer watches content on his/her mobile phone during a train ride, the player will adaptively switch between low bit rates and high bit rates, depending upon the connectivity of the device.

How does it work?

Most ABR streaming technologies use the standard Web protocol, HTTP, to send video. This offers advantages over specialized streaming protocols such as RTSP or RTP: HTTP-based delivery works on existing Internet networks without special configuration and can take advantage of edge technologies designed to cache HTTP requests.

During playback, video and audio are delivered via HTTP in small fragments, each representing some small amount of video, typically between 3 and 10 seconds in length. Each content package includes multiple layers, and each layer may include many fragments. For example, an hour-long movie may have 12 layers, each with a thousand fragments. The player is provided with a package manifest file outlining which layers are available and the location of the fragments for each layer.
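The fragment arithmetic above can be checked with a short sketch. The function names are illustrative only, and the fragment durations are simply sample values from the 3-to-10-second range mentioned in the text.

```python
import math


def fragment_count(duration_s: int, fragment_s: int) -> int:
    """Fragments needed to cover one layer; the last fragment may be shorter."""
    return math.ceil(duration_s / fragment_s)


def package_fragment_total(duration_s: int, fragment_s: int, layers: int) -> int:
    """Total fragments across all layers of a package."""
    return layers * fragment_count(duration_s, fragment_s)


movie_s = 60 * 60  # an hour-long movie, in seconds

# Sample fragment lengths from the typical 3-to-10-second range.
per_layer = {seconds: fragment_count(movie_s, seconds) for seconds in (3, 4, 10)}

# Twelve layers with 4-second fragments.
total = package_fragment_total(movie_s, 4, layers=12)
```

With 4-second fragments, each layer of an hour-long movie holds 900 fragments, which is the order of magnitude the example describes, and a 12-layer package holds 10,800 in total.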

During playback, the player requests and downloads a fragment from a layer. While the fragment is played, the connection speed is monitored, and the player may opt to switch layers, either increasing or decreasing the video bit rate based upon the connection speed. Players may also choose layers with different frame sizes or frame rates to optimize the visual experience for the device. This adaptive behavior is what ensures consistent playback regardless of connection speed or device.
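The switching decision can be sketched roughly as follows. This is a minimal illustration, not the logic of any particular player: the `Layer` type, the `choose_layer` function, the 0.8 safety margin and the sample bit rates are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    bitrate_kbps: int
    width: int
    height: int


def choose_layer(layers, measured_kbps, max_height, safety=0.8):
    """Pick the highest-bit-rate layer that fits the bandwidth and the screen.

    `safety` (an assumed margin) leaves headroom so that small dips in
    connection speed do not immediately cause buffering.
    """
    budget = measured_kbps * safety
    # Prefer layers no taller than the device's display.
    fits_screen = [layer for layer in layers if layer.height <= max_height]
    candidates = fits_screen or list(layers)
    affordable = [layer for layer in candidates if layer.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda layer: layer.bitrate_kbps)
    # Nothing fits the budget: fall back to the lowest bit rate available.
    return min(candidates, key=lambda layer: layer.bitrate_kbps)


# Hypothetical four-layer package.
layers = [
    Layer(400, 416, 234),
    Layer(1200, 640, 360),
    Layer(3500, 1280, 720),
    Layer(6000, 1920, 1080),
]
```

A player would call something like `choose_layer` after each fragment download, using the throughput measured for that fragment as `measured_kbps`.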

There are several different ABR streaming technologies available: Apple HTTP Live Streaming (or “Apple HLS”), Adobe HTTP Dynamic Streaming (or “Adobe HDS”), Microsoft HTTP Smooth Streaming, and more recently MPEG Dynamic Adaptive Streaming over HTTP (or “MPEG DASH”). Each technology requires a complete ecosystem. The content must be prepared correctly, and the correct player must be used. All of the technologies work fundamentally in the same manner, using HTTP for content delivery in fragments.

Where these technologies differ is largely related to the structure of the underlying packages. For example, HLS for older versions of iOS requires a separate file for each video fragment. In contrast, most other packages store fragments for a layer in a single file, allowing the player to download fragments using HTTP byte range requests, which download a small part of a larger file.
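A byte range request is an ordinary HTTP request with a `Range` header. The sketch below builds such a request with Python's standard library without sending it. The URL, the helper names and the offsets are hypothetical; in practice the player would take the offsets from the package's index or manifest.

```python
import urllib.request


def range_header(offset: int, length: int) -> str:
    """Build an HTTP Range header value; the end position is inclusive."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    return f"bytes={offset}-{offset + length - 1}"


def fragment_request(url: str, offset: int, length: int) -> urllib.request.Request:
    """Prepare (but do not send) a request for one fragment of a layer file."""
    return urllib.request.Request(url, headers={"Range": range_header(offset, length)})


# Hypothetical layer file and fragment position.
request = fragment_request("https://example.com/layer3.mp4", offset=1000, length=500)
```

A server that honors the range answers with status 206 (Partial Content) and only the requested bytes.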

Other differences in ABR technology relate to the viewer experience. Apple HLS, for example, provides for a dedicated key frame layer, allowing users to scrub through the video quickly. Other packages allow an audio-only stream with a poster image for extreme low-bit-rate situations.

Preparing content

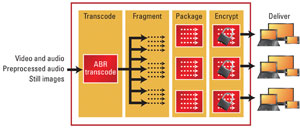

Figure 1. ABR production workflow

Preparing ABR content takes several steps. First, the desired packaging and layer structures need to be identified. Next, content must be encoded, checked for quality, packaged, encrypted and delivered. (See Figure 1.)

Choosing packaging and profiles

Packaging choice is generally driven by what devices must be supported. Not every device supports players for every type of ABR streaming technology. As a result, one should catalogue both the devices and the players that will be supported; the necessary packaging follows from that catalogue.

The selection of optimal bit rates, frame sizes and frame rates will vary depending upon device types, connection types and encoding technology. Apple and Adobe provide excellent starting points with suggested profiles suitable for their ecosystems. However, practically speaking, the entire catalogue of devices, expected network connections and network costs must be considered when designing layers.

With these considerations, layer design is a balancing act between frame size, bit rate and quality. However, the actual encoding technology used may have the biggest effect upon quality. For example, one study performed by the MSU Graphics & Media Lab showed that x264 encoding technology reduced the required bit rate by as much as 50 percent compared to other H.264 encoding technologies at the same quality level. As a result, it is recommended that layers be designed while performing actual encoding tests with the final encoding technology.

Most packages generally contain between 16 and 24 layers. Part of layer design will require a reduction in the number of layers. It is best to select a few common native display frame sizes (such as 1080p) and then encode multiple bit rates to those frame sizes. Doing so will avoid unnecessary performance degradation on players that use software scaling (particularly important for Adobe HDS).
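One way to picture this approach is as a small table of frame sizes, each with several bit rates. The sketch below is illustrative only: the frame sizes and bit rates are assumed values, not recommended profiles, and the ladder is kept shorter than a production package for brevity.

```python
def build_ladder(bitrates_by_size):
    """Expand {(width, height): [kbps, ...]} into a list of layers, lowest bit rate first."""
    ladder = [
        {"width": width, "height": height, "bitrate_kbps": kbps}
        for (width, height), rates in bitrates_by_size.items()
        for kbps in rates
    ]
    return sorted(ladder, key=lambda layer: layer["bitrate_kbps"])


# Assumed plan: three native frame sizes, three bit rates each.
plan = {
    (640, 360): [400, 800, 1200],
    (1280, 720): [1800, 2500, 3500],
    (1920, 1080): [4500, 6000, 8000],
}

ladder = build_ladder(plan)
frame_sizes = {(layer["width"], layer["height"]) for layer in ladder}
```

Here nine layers share only three frame sizes, so a player switching between neighboring bit rates rarely has to rescale the picture.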

Encoding, packaging, delivery and DRM

Each layer will require that a complete H.264 stream be encoded. With 16 to 24 layers, encoding an ABR package can easily require 20 times the processing power needed for a single H.264 stream. Fortunately, highly parallelized multirate H.264 encoding technology exists that re-uses information across the different streams. When combined with GPU acceleration, today’s encoding systems can offer 10 to 20 times the speed of CPU-based systems.

When preparing for multiple devices, an important aspect of encoding is transmuxing, the ability to re-use encoded H.264 streams across multiple package types. This prevents having to re-encode the same bit rates simply to package the video differently.
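The saving from transmuxing can be sketched as "encode once, package many times." Everything in the example below is a stand-in: `encode_layer` and `package` are hypothetical placeholders for a real encoder and packager, and the log exists only to count how many encodes take place.

```python
def encode_layer(source, bitrate_kbps, encode_log):
    """Placeholder for an H.264 encode; records that an encode happened."""
    encode_log.append(bitrate_kbps)
    return f"{source}@{bitrate_kbps}kbps.h264"


def package(streams, package_type):
    """Placeholder for a packager that wraps already-encoded streams."""
    return {"type": package_type, "streams": list(streams)}


def prepare(source, bitrates, package_types):
    """Encode each bit rate once, then re-use the streams for every package type."""
    encode_log = []
    streams = [encode_layer(source, kbps, encode_log) for kbps in bitrates]
    packages = [package(streams, package_type) for package_type in package_types]
    return packages, encode_log


packages, encode_log = prepare("movie", [800, 2500], ["HLS", "HDS", "Smooth"])
```

Two bit rates packaged three ways require two encodes rather than six.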

With on-demand content, it is important to perform QC checks on the different video streams. QC may be performed visually or by using automated tools that measure quality across all of the streams.

On-demand content often requires user authentication and protection prior to playback, which calls for digital rights management (DRM). When using DRM, the video must be encrypted during packaging, typically using AES 128-bit encryption. DRM systems typically have subtle requirements for how the encryption is performed by the encoder or packager, and it is important to validate that the two are compatible.

Finally, the content is delivered, either as a compressed TAR file or in the native package form. Where possible, it is recommended that the entire production process (ingest, encoding, transmuxing, packaging, quality control, encryption and delivery) be combined into a single automated workflow. Manual steps will significantly slow production time and may result in errors. It is also recommended that the ABR production process be combined with non-ABR production into a single automated system. This reduces system maintenance costs, offers a single view into the overall content production for all distribution channels and allows workflow efficiencies such as unified metadata preparation and content preprocessing.
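A single automated workflow of this kind can be sketched as an ordered list of stages, each handing its result to the next. The stage names follow the process listed above. The `run_workflow` function and the handlers are hypothetical and stand in for real ingest, encoding and delivery systems.

```python
STAGES = [
    "ingest",
    "encode",
    "transmux",
    "package",
    "quality_control",
    "encrypt",
    "deliver",
]


def run_workflow(job, handlers):
    """Run every stage in order with no manual steps in between.

    Each handler takes the job and returns the updated job. An exception
    raised by a handler stops the workflow at that stage.
    """
    completed = []
    for stage in STAGES:
        job = handlers[stage](job)
        completed.append(stage)
    return job, completed


# Stand-in handlers that only record which stage touched the job.
handlers = {stage: (lambda job, stage=stage: job + [stage]) for stage in STAGES}
result, completed = run_workflow([], handlers)
```

Because the stages sit in one list, the same runner could also drive non-ABR jobs by swapping in a different set of handlers.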

Conclusion

Preparing video for ABR streaming generally requires research up front to choose technologies and encoding profiles, and a well-integrated, accelerated encoding approach to ensure workflow efficiency. With today’s tools, it is possible to fully automate the ABR content production workflow with full integration into existing content preparation and delivery workflows.

—John Pallett is director of product marketing, Telestream.
