Content replacement: New protocols enable flexibility in simulcast services.

“That diagram is upside down!” a broadcast engineer exclaimed as I was explaining how a personalized advertising-insertion solution worked for multi-screen simulcast.

I looked at the diagram that I had drawn, and I had put the client devices at the top. I realized the broadcast engineer would have drawn the video source and encoding components at the top.

This is not just pedantry. Web engineers tend to think user-/device-centric, whereas broadcast engineers tend to think more headend-centric. It’s called broadcast for a reason! However, these worlds are colliding, and — thanks to advances in online video delivery protocols, multi-screen encoding systems and cloud-based distribution services — it is now possible to perform advertising insertion or content replacement, and to tailor it to individual users. This article explains how this is possible.

HTTP-based streaming

Until recently, simulcast streaming to connected devices was performed using protocols like RTMP, RTSP and MMS. In 2009, when the iPhone 3GS was launched, iOS 3.0 included a new streaming protocol called HTTP Live Streaming (HLS), part of a new class of video delivery protocol.

HLS differed from its predecessors by relying only upon HTTP to carry video and flow control data to the device. This made the protocol far more firewall-friendly and easier to scale, as it required no specialist streaming server technology distributed throughout the Internet to deliver streams to end users. The regular HTTP caching proxies that serve as the backbone of all content delivery networks (CDNs) would suffice.

Apple was not alone in making this paradigm switch. Microsoft and Adobe also introduced their own protocols, Smooth Streaming and HTTP Dynamic Streaming (HDS), respectively. Today, work is ongoing to standardize these approaches into a single unified protocol, under a framework known as MPEG-DASH.

What is significant about all these is that they separate the control aspects of the protocol from the video data. They share the general concept that video data is encoded into chunks and placed onto an origin server or a CDN. To start a streaming session, client devices load a manifest file from that server that tells them what chunks to load and in what order. The infrastructure that serves the manifest can be completely separate from the infrastructure that serves the chunks.
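To make the manifest concept concrete, here is a minimal Python sketch that reads an HLS media playlist into an ordered list of chunks. The playlist text, the cdn.example.com URLs and the names `Segment` and `parse_media_playlist` are illustrative assumptions, and the parser handles only the `#EXTINF` tag rather than the full HLS syntax.

```python
from typing import List, NamedTuple, Optional


class Segment(NamedTuple):
    duration: float  # seconds, taken from the #EXTINF tag
    uri: str         # where the client should fetch the chunk


def parse_media_playlist(text: str) -> List[Segment]:
    """Return the chunks listed in an HLS media playlist, in play order."""
    segments: List[Segment] = []
    pending: Optional[float] = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXTINF:"):
            # Tag format: "#EXTINF:<duration>,[<title>]"
            pending = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line and not line.startswith("#") and pending is not None:
            # The first non-tag line after #EXTINF is the chunk URI.
            segments.append(Segment(pending, line))
            pending = None
    return segments


# Hypothetical playlist; the host name and paths are placeholders.
EXAMPLE = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:100
#EXTINF:10.0,
https://cdn.example.com/live/seg100.ts
#EXTINF:10.0,
https://cdn.example.com/live/seg101.ts
#EXTINF:6.0,
https://cdn.example.com/live/seg102.ts
"""

for segment in parse_media_playlist(EXAMPLE):
    print(segment.duration, segment.uri)
```

Note that the chunk URIs are absolute, so the host that serves the playlist need not be the host that serves the video.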

The separation of these concerns provides a basis for dynamic content replacement, as it is possible to dynamically manipulate the manifest file to point the client device at an alternative sequence of video chunks that have been pre-encoded and placed on the CDN. The ability to swap chunks out in this way relies on the encoding workflow generating video chunks whose boundaries match possible replacement events.
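The manifest manipulation described here can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any vendor's implementation: the `Chunk` type, its `in_break` flag and the `splice_playlist` function are hypothetical names, the playlist is treated as a static snapshot rather than a sliding live window, and the only HLS feature relied upon is the `#EXT-X-DISCONTINUITY` tag, which tells the player that the chunks that follow come from a different encode.

```python
import math
from typing import List, NamedTuple, Tuple


class Chunk(NamedTuple):
    duration: float
    uri: str
    in_break: bool = False  # True if the chunk lies inside a replaceable break


def splice_playlist(live: List[Chunk], replacement: List[Chunk],
                    media_sequence: int = 0) -> str:
    """Render a playlist in which each run of break chunks is swapped out."""
    sequence: List[Tuple[Chunk, bool]] = []  # (chunk, is_replacement)
    inserted = False
    for chunk in live:
        if chunk.in_break:
            if not inserted:
                # First chunk of a break: emit the replacement chunks once.
                sequence.extend((r, True) for r in replacement)
                inserted = True
            continue  # drop the original break chunk
        inserted = False
        sequence.append((chunk, False))

    # Rounding up is a conservative choice for the target duration.
    target = max((math.ceil(c.duration) for c, _ in sequence), default=0)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{media_sequence}",
    ]
    previous = None
    for chunk, is_replacement in sequence:
        if previous is not None and is_replacement != previous:
            # Signal the switch between live and replacement encodes.
            lines.append("#EXT-X-DISCONTINUITY")
        lines.append(f"#EXTINF:{chunk.duration:.3f},")
        lines.append(chunk.uri)
        previous = is_replacement
    return "\n".join(lines) + "\n"


live = [
    Chunk(10, "live/1.ts"),
    Chunk(10, "live/2.ts", in_break=True),
    Chunk(10, "live/3.ts", in_break=True),
    Chunk(10, "live/4.ts"),
]
ads = [Chunk(10, "ads/a1.ts"), Chunk(10, "ads/a2.ts")]
print(splice_playlist(live, ads))
```

The swap is only clean because the break starts and ends exactly on chunk boundaries, which is why the encoding workflow has to be conditioned in advance.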

Stream conditioning and ESAM

Multi-screen encoding workflows must deal with encoding the video, as well as packaging it for delivery into the protocols required by devices. Stream conditioning for dynamic content replacement is about ensuring that the encoding workflow knows about the events at which replacement could occur, and that the video is processed accordingly. It is important to emphasize that the replacement does not happen at this point; it is done closer to the end user.

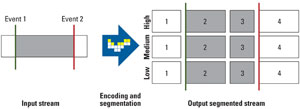

When the encoder is informed about a splice point, it starts a new group of pictures (GOP), and when this GOP is encountered downstream by the packager, a new video chunk is created, as shown in Figure 1. Broadcasters should be wary of how their encoder and packager handle edge cases, such as when a splice point comes just before or after where a natural GOP and video chunk boundary would have been, so that extremely small video chunks and GOPs are avoided.

Figure 1. When the encoder is informed about a splice point, it starts a new group of pictures (GOP), and when this GOP is encountered downstream by the packager, a new video chunk is created. However, broadcasters should be careful to avoid the creation of very small video chunks/GOPs.
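One possible policy for the edge case of a splice point landing close to a natural boundary is sketched below in Python. It is an assumption for illustration, not a description of how any particular encoder behaves: splice points always force a boundary, and a natural boundary is dropped if it falls within `min_chunk` seconds of a splice point. The function name `chunk_boundaries` and its parameters are hypothetical.

```python
from typing import Iterable, List


def chunk_boundaries(duration: float, nominal: float,
                     splice_points: Iterable[float],
                     min_chunk: float) -> List[float]:
    """Return the times (in seconds) at which a new GOP and chunk start."""
    if nominal <= 0:
        raise ValueError("nominal chunk duration must be positive")

    # Splice points inside the programme are mandatory boundaries.
    splices = sorted(p for p in set(splice_points) if 0 < p < duration)

    # Natural boundaries fall on a regular grid of the nominal duration.
    natural = []
    k = 1
    while k * nominal < duration:
        natural.append(k * nominal)
        k += 1

    # Drop natural boundaries that would create a very small chunk
    # immediately before or after a splice point.
    kept = [t for t in natural
            if all(abs(t - s) >= min_chunk for s in splices)]
    return sorted(set(kept) | set(splices))


# A splice at 19.5 s suppresses the natural boundary at 20 s.
print(chunk_boundaries(40.0, 10.0, [19.5], 2.0))  # [10.0, 19.5, 30.0]
```

The trade-off in this policy is that the chunk next to a splice point can be longer than the nominal duration by up to `min_chunk` seconds. Two splice points closer together than `min_chunk` are both kept here, so that case would still need separate handling.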

Splice points can be signaled to the encoding workflow in-band or out-of-band. More and more multi-screen encoders are capable of handling SCTE-35 messages within an input MPEG transport stream (TS) to determine splice points. Most multi-screen encoders that support SCTE-35 handling also have either a proprietary HTTP-based API or support SCTE-104 for out-of-band splice-point signaling.

There has been a clear need to standardize stream conditioning workflows to allow interoperability between systems deployed for that purpose.

The Event Signaling and Management (ESAM) API specification — a new specification that emerged from the CableLabs Alternate Content working group — describes the relationship and messages that can be passed between a Placement Opportunity Information Server (POIS) and an encoder or packager. The POIS is responsible for identifying and managing the splice points, using the ESAM API supported by the encoder and packager to direct their operations.

The specification defines how both encoder and packager should converse with the POIS, but not how the POIS operates or how it decides on when the splice points should appear. This is considered implementation-specific, but could, for example, use live data from the broadcast automation system to instruct the encoder and packager. ESAM also permits hybrid workflows where the splice points are signaled in-band with SCTE-35 and then decorated with additional properties (including their duration) by the POIS server from out-of-band data sources.

The ESAM specification is, relatively speaking, brand new, but it is gathering support from encoder/packager vendors. Broadcasters building multi-screen encoding workflows today should confirm that an upgrade path to ESAM is on their chosen vendor’s roadmap, even if dynamic content replacement is not required initially.

User-centric ad insertion at the edge

On the output side of the encoding workflow, video chunks are placed onto the CDN, and a cloud-based service responsible for performing dynamic content replacement receives the manifest file. This means that the actual replacement of content — whether it is ad insertion or content occlusion for rights purposes — is performed, in network topology terms, close to the client device.

The mechanism, as described earlier, relies on the relatively lightweight manipulation of the part of the video delivery protocol that tells the client where to fetch the video chunks. This can be performed efficiently on such a scale as to permit decisions to be made for each individual user accessing the live stream. By performing the content replacement in the network, the client simply follows the video segments laid out to it by the content replacement service, and the transitions between live and replacement content are completely seamless. That makes it a broadcast experience — a seamless succession of content, advertising, promos and so on, with no freezes, blacks or buffering — but with the potential for user-centric addressability.
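As a rough illustration of a per-user decision, the Python sketch below fills one break for one viewer from a small inventory. Everything in it is hypothetical: the `Ad` type, the `audience` labels and the greedy `fill_break` policy are assumptions made for the example, and in a real deployment the choice of ads would be delegated to an ad decision service rather than made by the replacement service itself.

```python
from typing import List, NamedTuple, Tuple


class Ad(NamedTuple):
    ad_id: str
    duration: int  # whole seconds, for simplicity
    audience: str  # hypothetical targeting label; "all" means untargeted


def fill_break(break_seconds: int, audience: str,
               inventory: List[Ad]) -> Tuple[List[Ad], int]:
    """Greedily choose ads for one viewer; return them and the unfilled time."""
    eligible = [ad for ad in inventory if ad.audience in (audience, "all")]
    # Stable sort: ads targeted at this viewer's audience come first.
    eligible.sort(key=lambda ad: ad.audience != audience)

    chosen: List[Ad] = []
    remaining = break_seconds
    for ad in eligible:
        if ad.duration <= remaining:
            chosen.append(ad)
            remaining -= ad.duration
    return chosen, remaining


inventory = [
    Ad("generic-30", 30, "all"),
    Ad("sports-20", 20, "sports"),
    Ad("news-30", 30, "news"),
    Ad("generic-10", 10, "all"),
]

for viewer_audience in ("sports", "news"):
    ads, unfilled = fill_break(60, viewer_audience, inventory)
    print(viewer_audience, [ad.ad_id for ad in ads], unfilled)
```

Two viewers watching the same live stream receive different chunk sequences for the same 60-second break. Any unfilled time would have to be covered by leaving the original chunks in place or by a filler clip.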

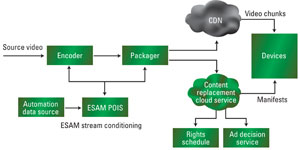

Figure 2. Today, video takes many paths to end-users’ devices. The overview above shows some of the technology that allows broadcasters to tailor their video content to their users. One method is content replacement.

Of course, the content replacement policy and user tracking are determined by the ad servers and rights management servers that the broadcaster chooses to integrate with the content replacement service. SCTE-130 defines a series of interfaces, including the one needed between a content replacement service and an Ad Decision Service (ADS). In the Web world, Video Ad Serving Template (VAST) and Video Player Ad Interface Definition (VPAID) have emerged as generally analogous specifications.

The ability to tailor content down to the individual, by replacing material in stream, in simulcast, while retaining the broadcast-quality experience of seamless content, is a totally new concept. The commercial ecosystem that generates the need for focused ad targeting must now catch up with the technology that supports it. (See Figure 2.) And broadcasters should be prepared to turn the diagram the other way round.

—David Springall is CTO of Yospace.
