Deduplication: Managing Disk Storage

The processes and practices for managing disk storage, including backup and protection, are continuing to evolve as the dependency upon sophisticated online and offline storage technologies expands.

While the simpler administrative tasks, such as defragmentation, are generally understood by most computer-savvy users, the more exotic tasks of maximizing data storage capacities, WAN/LAN bandwidth control, replication and backup, etc., are driving IT staffs to find new solutions that reduce costs and improve efficiencies.

There is a relatively unexposed technology that is becoming increasingly important to data management, and it offers a remedy for the moving media industry as well as for transactional data processes.

As digital workflows continue to depend upon single, large storage systems as their central repository for projects, the number of times that data is duplicated throughout the storage system increases.

This occurs as backup or protection copies are made during productions; as different versions prior to the approval process are added to the store; as collaborative and concurrent work is required; and as applications themselves create additional copies for use in rendering, compositing or transitional effects.

Often these duplicated files become unnecessary once the “final” version is created. Yet more often these files remain on the storage platform until someone (usually the overburdened administrator) cleans house and expunges the files to recover this now valuable storage space.

This is not unlike the early days of videoservers, when the capacity of an entire system was a mere 12 hours of motion-JPEG content. Servers filled up with stale material; departments began to protect ‘their’ spots or promos, and operators had to continually remove current but dormant content just to fulfill the next day’s schedule of commercials or interstitial content.

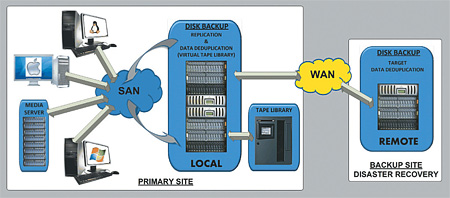

Backup and central storage costs are reduced when data deduplication is employed. The SAN handles all local production services. For backup and data reduction, a central repository composed of a local disk backup and tape library replicates only a single unique data set to the remote (target) site over the WAN. Additional sites may then back up to a single remote site for protection over the same WAN.

In high-performance storage platforms, special processes are used to manage the amount of content that really needs to be retained on the high-performance stores. Data migration (refer to the July 8 issue, “Selecting Storage Solutions of the Future”) to less costly storage helps, yet backup and protection are rapidly becoming essential practices for all forms of digital assets.

To aid in supporting backup and to improve overall system performance (and thus reduce costs), a relatively new process called “data deduplication” has exploded in the industry.

Used mainly in transaction and database server/storage and in backup protection platforms, data deduplication has recently found its way into the media server world and to enterprise class storage management solutions in a big way.

Data deduplication accomplishes a few things. First, it identifies redundant data that is already on the store. Next, it sends new location instructions to those applications needing that data. Then, it removes the redundant copies, so that the data effectively resides in a single location rather than in each location where an application first placed it.

Finally, as directed by the workflow policies set by the users or administrators, it flags the data locations appropriately so that critical copies can be made and, as necessary, migrated to lower-class storage, disaster recovery sites or archives, including virtual tape libraries.
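The first three steps above can be illustrated with a minimal sketch. It assumes fixed-size chunks identified by a SHA-256 hash, which is one common approach and not necessarily what any particular product does; the `DedupStore` class, its method names and the `chunk_size` parameter are hypothetical names chosen for this illustration.

```python
import hashlib


class DedupStore:
    """Toy content-addressed store: each unique chunk is kept only once."""

    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size
        self.chunks = {}  # digest -> chunk bytes (the single stored copy)
        self.files = {}   # file name -> ordered list of chunk digests

    def write(self, name, data):
        digests = []
        for start in range(0, len(data), self.chunk_size):
            chunk = data[start:start + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            # Step 1: identify redundant data. Step 3: keep one copy only.
            self.chunks.setdefault(digest, chunk)
            digests.append(digest)
        # Step 2: the file becomes a list of references ("new locations").
        self.files[name] = digests

    def read(self, name):
        # Rebuild the file by following its references in order.
        return b"".join(self.chunks[d] for d in self.files[name])

    def logical_bytes(self):
        # Bytes the applications believe they have stored.
        return sum(len(self.chunks[d])
                   for digests in self.files.values() for d in digests)

    def stored_bytes(self):
        # Bytes actually held on the store after deduplication.
        return sum(len(chunk) for chunk in self.chunks.values())


store = DedupStore(chunk_size=4)
store.write("promo_v1", b"AAAABBBBCCCC")
store.write("promo_v2", b"AAAABBBBDDDD")  # shares two chunks with promo_v1
print(store.logical_bytes(), store.stored_bytes())  # prints: 24 16
```

Both versions read back intact, yet the two shared chunks occupy space only once. Real systems add the policy flagging described above, along with safeguards this sketch omits.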

Data deduplication’s goal is to significantly reduce the amount of storage space required and to improve WAN/LAN performance by freeing that bandwidth for more valuable purposes. This is beneficial from both a management and a cost perspective. By controlling the number of times the same data resides on the store, one effectively increases the amount of data per storage unit. As peak capacities are approached, data deduplication effectively extends storage while allowing users to retain online data for longer time periods.

From the cost side, there is a reduction of the initial storage acquisition costs, and there is a longer interval between storage capacity upgrade requirements.

Data deduplication works in concert with existing backup applications and presents no performance impact when retrieving that backup data. When coupled with high-performance disk arrays, both the backup speeds and the data recovery processes are significantly accelerated.

As virtual machine proliferation continues, managing duplicated data and backup processes becomes more important. In some systems, as much as a 95 percent (20:1) disk capacity reduction can be realized.
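The relationship between the two figures quoted above (a 20:1 ratio and a 95 percent reduction) is simple arithmetic, sketched below. The function names are made up for this illustration.

```python
def space_saved_percent(ratio):
    """Percent of capacity saved for a deduplication ratio of `ratio`:1."""
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    return 100 * (ratio - 1) / ratio


def ratio_from_percent(percent):
    """Deduplication ratio (N in N:1) implied by a percent reduction."""
    if not 0 <= percent < 100:
        raise ValueError("percent must be in the range [0, 100)")
    return 100 / (100 - percent)


print(space_saved_percent(20))  # prints: 95.0
print(ratio_from_percent(95))   # prints: 20.0
print(space_saved_percent(2))   # prints: 50.0
```

Note how the percentage flattens as the ratio climbs: going from 2:1 to 20:1 moves the saving from 50 to 95 percent, while any further gain in ratio can add at most another five points.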

This may further achieve up to a 50 percent reduction in the digital archive tape infrastructure, including the tape media and tape drives (statistics provided by NetApp using their VTL application).

Obviously this leads to a greener approach with lower cooling and power costs while improving the time to restore data from the archive or backup/protection store.

Deduplication is particularly applicable when using disk-based storage for backup or protection, a methodology that is accelerating as disk drive storage becomes less costly and as access/recovery time becomes more precious.

Policy-based deduplication processes can be set to an ‘inline’ mode (deduplication happens as the data arrives or during the backup process), to a ‘deferred’ mode (deduplication is scheduled after the entire backup completes), or turned ‘off’ completely. Since deduplication is a highly CPU-intensive process, production workloads may require that different policy modes be activated at different times.
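As a rough illustration of time-based policy switching, the sketch below picks a mode from the hour of the day. The three mode names come from the text; the schedule itself, the `DedupMode` enum and the `mode_for_hour` function are hypothetical and do not reflect any vendor's configuration format.

```python
from enum import Enum


class DedupMode(Enum):
    INLINE = "inline"      # deduplicate as data arrives
    DEFERRED = "deferred"  # deduplicate after the whole backup completes
    OFF = "off"            # no deduplication


# Assumed schedule for illustration: (start_hour, end_hour, mode),
# with end_hour exclusive. A real facility would set its own windows.
SCHEDULE = [
    (0, 6, DedupMode.INLINE),      # overnight window, CPU is mostly idle
    (6, 20, DedupMode.OFF),        # production hours, keep CPU for editing
    (20, 24, DedupMode.DEFERRED),  # evening backups, deduplicate afterward
]


def mode_for_hour(hour, schedule=SCHEDULE):
    """Return the deduplication mode in force at the given hour (0-23)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    for start, end, mode in schedule:
        if start <= hour < end:
            return mode
    return DedupMode.OFF  # hours not covered by the schedule default to off


print(mode_for_hour(2).value)   # prints: inline
print(mode_for_hour(14).value)  # prints: off
print(mode_for_hour(22).value)  # prints: deferred
```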

Even though we’ve only touched upon a fraction of the issues of storage management, protection and backup, the solutions for protecting media assets while extending storage and containing its cost depend upon your workflow and the needs of your particular business.

From a hardware configuration perspective, if your (protected) data must be accessed hourly, then spinning disks are probably the better choice. If data is only accessed weekly, then consider a virtual tape library with a tape backup. When data is required at a monthly or greater time period, the tape-in-a-library or tape-in-a-vault scenario may be the answer. Any combination of these choices is possible.
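The rule of thumb above can be written as a small lookup. The article only names hourly, weekly and monthly access; the exact cutoffs used here (7 and 30 days) and the `choose_tier` function are assumptions made for this sketch.

```python
def choose_tier(access_interval_days):
    """Suggest a protection tier from how often the data is accessed.

    The 7-day and 30-day boundaries are assumed cutoffs, not values
    given in the article.
    """
    if access_interval_days <= 0:
        raise ValueError("access interval must be positive")
    if access_interval_days < 7:
        return "spinning disk"
    if access_interval_days < 30:
        return "virtual tape library with tape backup"
    return "tape in a library or vault"


print(choose_tier(1 / 24))  # hourly access, prints: spinning disk
print(choose_tier(7))       # weekly access, prints: virtual tape library with tape backup
print(choose_tier(30))      # monthly access, prints: tape in a library or vault
```

Since any combination of these choices is possible, a real policy would likely weigh recovery-time targets and cost alongside access frequency.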

When employing a central repository for backup or protection, or when there are more than a couple of sites that will utilize a central store and where a WAN is likely required, data deduplication becomes quite valuable as it will reduce the stress (and costs) placed on both the storage and the WAN/LAN environments overall.

Once again, it is becoming more apparent that the practices and technologies employed by the data industry cross over into the moving media domains. Expect that broadcasters and production companies will begin to use a very different set of suppliers for equipment and solutions as they migrate away from the all video-over-coax world and plunge headlong into the world of managed data.

Karl Paulsen is chief technology officer for AZCAR Technologies. Contact him at karl.paulsen@azcar.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.